In the dynamic landscape of artificial intelligence, Transformer neural networks have emerged as a groundbreaking innovation, fundamentally changing the approach to sequence modeling. Introduced by Vaswani et al. in 2017, the Transformer architecture departs from conventional recurrent and convolutional neural networks, prioritizing parallelization and attention mechanisms.

At the core of Transformer networks lies the concept of self-attention, a mechanism that transformed how models process sequential data. Recurrent models process a sequence one element at a time. Transformers instead consider all elements simultaneously, which makes them inherently parallelizable and significantly faster to train on parallel hardware such as GPUs. Because they can weigh the importance of different elements within a sequence, Transformers capture complex patterns and relationships efficiently.
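The following is a minimal single-head sketch in plain Python. It assumes randomly initialized projection matrices (`w_q`, `w_k` and `w_v` are illustrative names) and leaves out masking, multiple heads and batching. It shows that the score for every pair of tokens comes out of one matrix product, so no output row depends on another output row, unlike the step-by-step state updates of a recurrent network.

```python
import math
import random

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of an (n x k) matrix and a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: list[float]) -> list[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention over a whole sequence.

    x has one row per token. All rows are handled by the same matrix products,
    and no output row depends on another output row.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # (n x n): every token scored against every token
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Usage: 4 tokens with 8-dimensional embeddings, projected to d_k = 4.
random.seed(0)
n, d_model, d_k = 4, 8, 4
x = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(n)]
w_q, w_k, w_v = ([[random.gauss(0, 1) for _ in range(d_k)] for _ in range(d_model)] for _ in range(3))
out, weights = self_attention(x, w_q, w_k, w_v)
print(len(out), len(out[0]))  # 4 4
```

Here the loops run one after another because this is plain Python. In a real model the same computation runs as batched tensor operations on a GPU, and that is where the lack of sequential dependencies pays off.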

The attention mechanism lets Transformer models focus on the relevant parts of the input sequence. Because any two positions are connected directly, no matter how far apart they are, it also becomes easier to capture long-range dependencies in data. By attending to different parts of the input sequence with varying weights, Transformers excel at tasks that require contextual understanding, such as language translation, text summarization, and sentiment analysis.
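One compact way to write this is the per-position form of the scaled dot-product attention from Vaswani et al. For a sequence of n tokens, position i produces a query vector q_i, and every position j provides a key k_j and a value v_j. In the original Transformer these are learned linear projections of the token representations, and d_k is the key dimension. The symbols α and z below are our own notation. The weight that position i assigns to position j, and the resulting output z_i, are:

$$
\alpha_{ij} = \frac{\exp\!\left(q_i \cdot k_j / \sqrt{d_k}\right)}{\sum_{m=1}^{n} \exp\!\left(q_i \cdot k_m / \sqrt{d_k}\right)},
\qquad
z_i = \sum_{j=1}^{n} \alpha_{ij}\, v_j
$$

For each i, the weights α_ij are non-negative and sum to one. Nothing in the formula depends on the distance between i and j, so a token at the far end of a sentence can receive as much weight as an adjacent one.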

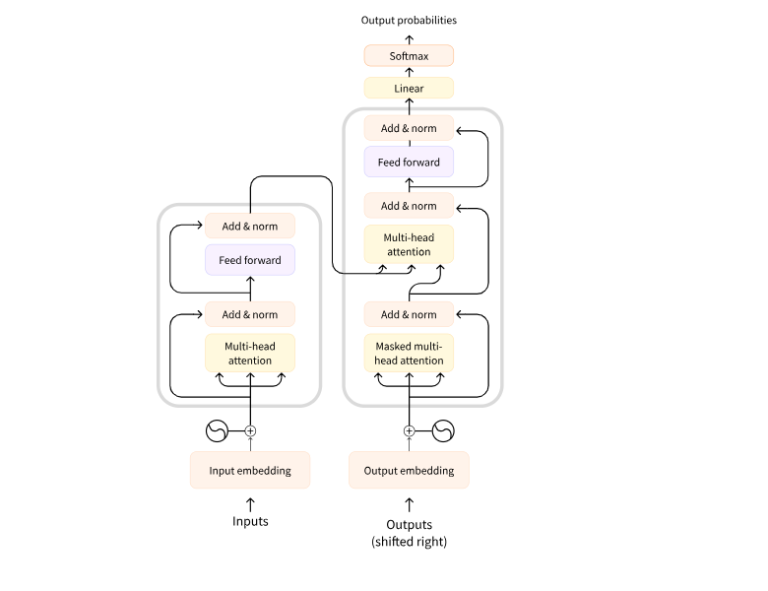

The original Transformer architecture uses an encoder-decoder framework. Both the encoder and the decoder are stacks of identical layers that combine self-attention with position-wise feedforward networks, and the decoder layers additionally attend to the encoder's output. This modular design makes it easy to adapt the model to various tasks by adjusting the architecture and training procedure. In addition, residual connections and layer normalization help stabilize training and improve gradient propagation across layers.
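The residual-plus-normalization pattern is small enough to sketch directly. This sketch assumes the post-norm arrangement of the original paper, `LayerNorm(x + Sublayer(x))`, applied to a single token vector. The sublayer is a ReLU feedforward network. The learned gain and bias of layer normalization are omitted, and all function names are illustrative. Many later models place the normalization before the sublayer instead (pre-norm).

```python
import math
import random

Vector = list[float]


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    """Rescale one token vector to zero mean and unit variance (learned gain/bias omitted)."""
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    return [(xi - mean) / math.sqrt(var + eps) for xi in x]


def feed_forward(x: Vector, w1, b1, w2, b2) -> Vector:
    """Position-wise feedforward network: ReLU(x W1 + b1) W2 + b2 for one token."""
    hidden = [max(0.0, sum(x[i] * w1[i][j] for i in range(len(x))) + b1[j])
              for j in range(len(b1))]
    return [sum(hidden[j] * w2[j][k] for j in range(len(hidden))) + b2[k]
            for k in range(len(b2))]


def residual_block(x: Vector, sublayer) -> Vector:
    """Post-norm residual connection: LayerNorm(x + Sublayer(x))."""
    y = sublayer(x)
    return layer_norm([a + b for a, b in zip(x, y)])


# Usage with small random weights (illustrative sizes, not the paper's 512/2048).
random.seed(1)
d_model, d_ff = 8, 32
w1 = [[random.gauss(0, 0.1) for _ in range(d_ff)] for _ in range(d_model)]
b1 = [0.0] * d_ff
w2 = [[random.gauss(0, 0.1) for _ in range(d_model)] for _ in range(d_ff)]
b2 = [0.0] * d_model
token = [random.gauss(0, 1) for _ in range(d_model)]
out = residual_block(token, lambda v: feed_forward(v, w1, b1, w2, b2))
```

The input `x` reaches the addition unchanged, which gives gradients a direct path around the sublayer. The normalization then keeps each layer's output on a consistent scale.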

One of the most celebrated Transformer models is BERT (Bidirectional Encoder Representations from Transformers). Introduced by Devlin et al. in 2018, BERT achieved state-of-the-art results on numerous NLP tasks. It did so by pretraining a large Transformer encoder on massive text corpora and then fine-tuning it on specific downstream tasks. Because it draws on bidirectional context, BERT learned rich word representations, surpassing earlier approaches that relied solely on shallow word embeddings.
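BERT's bidirectional pretraining relies on a masked language modeling objective: some input tokens are hidden, and the model must predict them from the words on both sides. The sketch below follows the masking recipe described in the BERT paper. It selects about 15% of positions, then replaces 80% of those with `[MASK]`, 10% with a random token, and leaves 10% unchanged. It is a simplified, word-level illustration. Real BERT operates on WordPiece subword tokens, and the helper `mask_tokens` and its parameters are hypothetical.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=random):
    """Build (inputs, labels) for a masked-language-modeling step (hypothetical helper).

    Each position is selected with probability mask_prob. A selected token is
    replaced by [MASK] 80% of the time, by a random vocabulary token 10% of
    the time, and left unchanged 10% of the time. labels keeps the original
    token at selected positions and None elsewhere, so the loss is computed
    only where the model must make a prediction.
    """
    inputs, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)
        # otherwise keep the original token in the input
    return inputs, labels


# Usage: word-level tokens for readability (BERT itself uses WordPiece subwords).
tokens = "the cat sat on the mat because it was tired".split()
inputs, labels = mask_tokens(tokens, vocab=tokens, rng=random.Random(0))
print(inputs)
print(labels)
```

The encoder sees the whole sentence around each hidden position, so its prediction can draw on context to the left and to the right at the same time. That is the bidirectionality in BERT's name.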

Although Transformer models were initially designed for NLP tasks, they have found applications in diverse domains such as computer vision and speech recognition. The Vision Transformer (ViT), proposed by Dosovitskiy et al. in 2020, demonstrated remarkable performance on image classification tasks by treating images as sequences of patches and applying the Transformer architecture to them. Similarly, models such as the Speech Transformer have shown promise in transcribing and understanding speech, using self-attention to capture temporal dependencies in audio data.
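The step of treating an image as a sequence of patches can be sketched as follows. The sketch assumes an image stored as nested lists of shape height × width × channels, with sides divisible by the patch size. In ViT, each flattened patch is then linearly projected to the model dimension and combined with a position embedding before it enters the Transformer encoder. That projection is omitted here, and the function name `image_to_patches` is hypothetical.

```python
def image_to_patches(image, patch_size):
    """Cut an H x W x C image (nested lists) into flattened, non-overlapping patches.

    Returns (H / P) * (W / P) vectors of length P * P * C in row-major patch
    order; this sequence is what a ViT-style model embeds and feeds to the encoder.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [value
                     for row in image[top:top + patch_size]
                     for pixel in row[left:left + patch_size]
                     for value in pixel]
            patches.append(patch)
    return patches


# Usage: a blank 224 x 224 RGB image cut into 16 x 16 patches.
image = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
patches = image_to_patches(image, 16)
print(len(patches), len(patches[0]))  # 196 768
```

A 224 × 224 image therefore becomes a sequence of 14 × 14 = 196 "tokens". The Transformer then processes this sequence exactly as it would a sentence.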

Transformer neural networks represent a paradigm shift in artificial intelligence, offering a powerful framework for sequence modeling and natural language processing. As research in this field progresses and computational resources become more accessible, Transformer models have immense potential to reshape AI across diverse domains. With their ability to capture long-range dependencies, exploit parallel processing, and adapt to various tasks, Transformers are poised to keep driving innovation and reshaping the AI landscape for years to come.