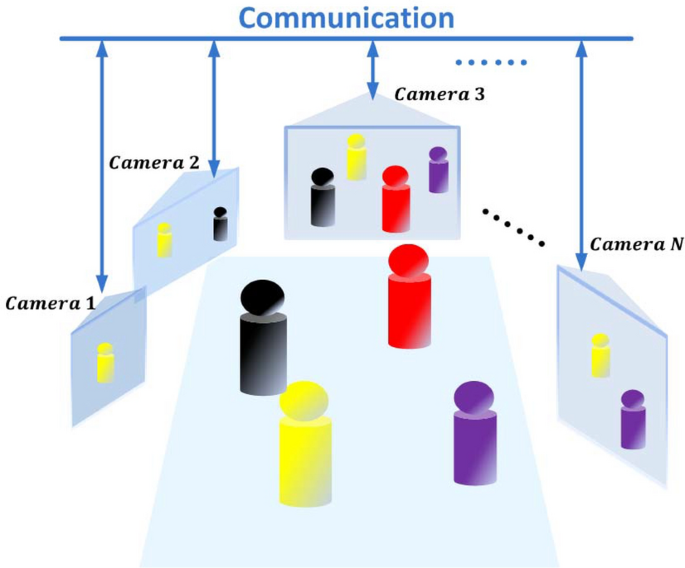

As a data scientist, I was recently engaged by a security firm specializing in surveillance. Their initial briefing revealed an extensive network of cameras at each site, each running its own detection model. My first assumption, that they wanted an ensemble model to identify the most probable objects, proved incorrect.

Interestingly, the firm's focus lies not in what the cameras capture, but in what they might miss. Their goal is to prepare for a wide range of scenarios with meaningful probabilities, recognizing that low-likelihood events can erode the vigilance of response teams. My job, therefore, is to help them prioritize the cases that warrant the team's attention. Moreover, when uncertainty is low, fewer cases require scrutiny, which mitigates the persistent problem of false alarms in the security sector.

The crux of their request is conformal prediction, a statistical technique in machine learning that quantifies predictive uncertainty. Unlike conventional methods that yield single-point estimates, conformal prediction produces prediction intervals or sets that contain the true value with a specified probability. This makes it particularly valuable in domains that demand reliable uncertainty estimates, such as medical diagnostics, financial modeling, and autonomous vehicle systems.

Conformal prediction's strength lies in its ability to adapt to different models and data distributions by using held-out historical data and model residuals. This flexibility makes it a powerful tool for decision-making, allowing security personnel to focus their efforts more effectively and efficiently.
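To make the idea concrete, here is a minimal split-conformal sketch for classification. It assumes we already have calibrated class probabilities from some model and a held-out calibration set; the score 1 - p(true class) is one common choice of nonconformity score and plays the role of the "residual". The helper names `conformal_threshold` and `prediction_sets`, and the synthetic Dirichlet data, are hypothetical and exist only for illustration.

```python
import numpy as np

def conformal_threshold(cal_probs, cal_labels, alpha=0.1):
    """Split-conformal threshold from a held-out calibration set (hypothetical helper).

    cal_probs: (n, n_classes) calibrated class probabilities
    cal_labels: (n,) integer true labels
    """
    n = len(cal_labels)
    # Nonconformity score: one minus the probability given to the true class
    scores = 1.0 - cal_probs[np.arange(n), cal_labels]
    # Finite-sample corrected quantile level, capped at 1
    level = min(np.ceil((n + 1) * (1 - alpha)) / n, 1.0)
    return np.quantile(scores, level, method="higher")

def prediction_sets(test_probs, qhat):
    # A label enters the set when its score does not exceed the threshold
    return (1.0 - test_probs) <= qhat

# Synthetic, perfectly calibrated predictions (an assumption for illustration):
# labels are sampled from the very probabilities the "model" reports.
rng = np.random.default_rng(0)

def simulate(n, n_classes=3):
    probs = rng.dirichlet(np.ones(n_classes) * 0.5, size=n)
    labels = np.array([rng.choice(n_classes, p=p) for p in probs])
    return probs, labels

cal_probs, cal_labels = simulate(1000)
test_probs, test_labels = simulate(1000)

qhat = conformal_threshold(cal_probs, cal_labels, alpha=0.1)
sets = prediction_sets(test_probs, qhat)
coverage = sets[np.arange(len(test_labels)), test_labels].mean()
avg_size = sets.sum(axis=1).mean()
print(f"coverage={coverage:.3f}, average set size={avg_size:.2f}")
```

With alpha = 0.1 the empirical coverage should land near 90%. Confident predictions yield singleton sets, while uncertain ones yield larger sets, which is exactly the signal used to prioritize cases.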

To proceed, we need to assume that we have a probability for each label. For binary labeling, I've written about this in this post. Next, we'll extend the approach to multi-class calibration; extending it to multi-label problems follows the same path.

In multi-label calibration, various methods can be used to obtain accurate probability estimates for each label. One common approach is isotonic regression, which fits a non-decreasing function to the predicted probabilities so that they align more closely with the observed label frequencies. Another technique is Platt scaling, in which a logistic regression model is fitted to the outputs of a base classifier to produce calibrated probabilities.
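Both techniques are available in scikit-learn through `CalibratedClassifierCV`, where `method="sigmoid"` is Platt scaling and `method="isotonic"` is isotonic regression. The sketch below is a minimal binary example on synthetic data, assuming a Gaussian Naive Bayes base classifier purely for illustration; in a multi-label setting one would, under this approach, calibrate each label's binary classifier separately.

```python
from sklearn.calibration import CalibratedClassifierCV
from sklearn.datasets import make_classification
from sklearn.metrics import brier_score_loss
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Synthetic binary problem (an assumption for illustration)
X, y = make_classification(n_samples=4000, n_features=20, n_informative=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.5, random_state=0)

results = {}

# Baseline: uncalibrated probabilities from the base classifier
raw = GaussianNB().fit(X_train, y_train)
results["uncalibrated"] = brier_score_loss(y_test, raw.predict_proba(X_test)[:, 1])

# "sigmoid" is scikit-learn's name for Platt scaling
for method in ("sigmoid", "isotonic"):
    calibrated = CalibratedClassifierCV(GaussianNB(), method=method, cv=3)
    calibrated.fit(X_train, y_train)
    results[method] = brier_score_loss(y_test, calibrated.predict_proba(X_test)[:, 1])

# Lower Brier score means better-calibrated (and sharper) probabilities
for name, score in results.items():
    print(f"{name:>12}: Brier score = {score:.4f}")
```

Isotonic regression is more flexible but needs more calibration data to avoid overfitting; Platt scaling is more constrained and often preferable on small datasets.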

Ensemble methods such as Bayesian Binning into Quantiles (BBQ) partition the probability space into bins and use Bayesian techniques to assign probabilities. Additionally, temperature scaling, a variant of Platt scaling, introduces a single parameter that rescales the logits before the softmax of a neural network, so that the predicted probabilities better reflect the true likelihoods. For the remainder of this post, we'll assume the probabilities are calibrated.
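Temperature scaling is simple enough to sketch in a few lines: divide the logits by a single scalar T and choose T to minimize the negative log-likelihood on held-out data. The sketch below assumes synthetic logits that are deliberately three times too confident; the helper `fit_temperature` is hypothetical, and SciPy's bounded scalar minimizer stands in for whatever optimizer one would use in practice.

```python
import numpy as np
from scipy.optimize import minimize_scalar
from scipy.special import log_softmax, softmax

def fit_temperature(logits, labels):
    """Return the temperature T > 0 that minimizes validation NLL (hypothetical helper)."""
    def nll(T):
        log_probs = log_softmax(logits / T, axis=1)
        return -log_probs[np.arange(len(labels)), labels].mean()
    result = minimize_scalar(nll, bounds=(0.05, 20.0), method="bounded")
    return result.x

# Synthetic data (an assumption): labels follow softmax(true_logits),
# but the "network" reports logits scaled up by 3, i.e. it is overconfident.
rng = np.random.default_rng(0)
true_logits = rng.normal(size=(2000, 3))
labels = np.array([rng.choice(3, p=p) for p in softmax(true_logits, axis=1)])
overconfident_logits = 3.0 * true_logits

T = fit_temperature(overconfident_logits, labels)
calibrated_probs = softmax(overconfident_logits / T, axis=1)
print(f"fitted temperature: {T:.2f}")
```

Because every logit is divided by the same T, the predicted class never changes; only the confidence attached to it does. Here the fitted T should come out close to 3.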

Calibrated predictions give a probability distribution over every possible outcome for each prediction. For such a distribution, we define the term coverage, which can be defined in two different ways:

- k Coverage: When we consider the k most likely values, the share of the probability mass that corresponds to these values is referred to as k coverage.

- p Coverage: p coverage is the minimum number of distinct values that must be selected so that their k coverage exceeds a given threshold, p.

One might view coverage as a categorical counterpart of a confidence interval from basic statistics. We're using the definitions introduced in this article.

It's worth noting that when the model is highly confident in a prediction, fewer distinct values are needed for a fixed p. Conversely, for a fixed k, the top k values will most likely cover a higher share of the probability mass. This insight is central to the conformal prediction method.
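Both definitions can be computed directly from a single prediction's probability vector. The sketch below assumes the vector is calibrated, reads "share of the data" as probability mass, and treats "exceeds p" as "reaches at least p"; the helper names and the two example distributions are hypothetical.

```python
import numpy as np

def k_coverage(probs, k):
    """Probability mass covered by the k most likely values of one prediction."""
    return float(np.sort(probs)[::-1][:k].sum())

def p_coverage(probs, p):
    """Smallest number of most likely values whose total mass reaches p."""
    cumulative = np.cumsum(np.sort(probs)[::-1])
    # First index where the cumulative mass reaches p, converted to a count
    return int(min(np.searchsorted(cumulative, p) + 1, len(probs)))

# Hypothetical predictions over four classes
confident = np.array([0.85, 0.10, 0.04, 0.01])
diffuse = np.array([0.35, 0.30, 0.20, 0.15])

print(p_coverage(confident, 0.9), p_coverage(diffuse, 0.9))  # 2 4
print(k_coverage(confident, 2), k_coverage(diffuse, 2))
```

The confident prediction reaches 90% coverage with two values, while the diffuse one needs all four; for k = 2 the confident prediction covers about 0.95 of the mass versus about 0.65 for the diffuse one.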

Returning to the security firm, conformal prediction proved highly beneficial. It provides a small set of candidate predictions that contains the true outcome with high probability, which lets the team concentrate on inspecting only the most likely cases. By narrowing the scope, the team can allocate its resources more efficiently.

Moreover, when predictions were made with higher confidence, the number of cases requiring further investigation dropped significantly. In contrast, lower-confidence predictions called for a more extensive review. The ability to differentiate and prioritize cases by confidence level streamlined the workflow and reduced the overall workload.

I'm pleased to report that the security firm was very happy with the results. Conformal prediction not only improved their operational efficiency but also enhanced the accuracy of their threat detection processes. This outcome underscores the value of integrating advanced predictive techniques into security operations, paving the way for more proactive and targeted risk management strategies.

Conformal prediction is gaining traction across scientific disciplines, particularly in areas involving complex biological systems and medical research, such as genomic sequence analysis and cell biology. It provides a framework for making predictions with quantifiable confidence levels, which improves the reliability and interpretability of predictive models. In fields ranging from molecular biology to clinical medicine, it helps researchers make better-informed decisions by offering a systematic approach to uncertainty quantification, attaching a measure of certainty to predictions ranging from molecular interactions to patient responses. This is especially valuable where experimental validation is expensive or time-consuming, because it lets researchers prioritize the most promising avenues for further investigation. By incorporating conformal prediction, scientists can build more robust models, make research more efficient, and ultimately increase the trustworthiness of their findings, particularly when the confidence of a prediction is crucial for critical decisions or for advancing scientific knowledge.

Multi-label prediction is becoming increasingly common across machine-learning applications. When combined with conformal prediction, it offers:

- Greater reliability and interpretability of predictions

- More sophisticated analysis capabilities

- Improved decision-making across many fields

- A dual role, as both an output method and a goodness-of-fit metric

This approach is particularly useful in areas such as recommendation systems, autonomous driving, cybersecurity, and medical diagnostics, where it can lead to better outcomes and deeper insights.
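The "goodness-of-fit" role usually comes down to two numbers: empirical coverage (how often the true label lands in the predicted set) and average set size (how informative the sets are). The sketch below assumes boolean prediction sets and a single true class per example, as in the Iris example later in this post; a genuinely multi-label task would need its own coverage definition. The helper `set_metrics` and the four example sets are hypothetical.

```python
import numpy as np

def set_metrics(pred_sets, y_true):
    """Summarize boolean prediction sets of shape (n_samples, n_labels) (hypothetical helper)."""
    pred_sets = np.asarray(pred_sets, dtype=bool)
    y_true = np.asarray(y_true)
    # Was the true label included in each example's set?
    covered = pred_sets[np.arange(len(y_true)), y_true]
    sizes = pred_sets.sum(axis=1)
    return {
        "coverage": float(covered.mean()),
        "avg_set_size": float(sizes.mean()),
        "singleton_rate": float((sizes == 1).mean()),
    }

# Hypothetical prediction sets for four cases over three labels
pred_sets = [[True, False, False],
             [True, True, False],
             [False, True, True],
             [True, True, True]]
y_true = [0, 1, 0, 2]
print(set_metrics(pred_sets, y_true))
# {'coverage': 0.75, 'avg_set_size': 2.0, 'singleton_rate': 0.25}
```

A well-fitting model keeps coverage near the target level while driving the average set size, and thus the review workload, down.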

Conformal prediction can also be generalized to continuous target vectors. In this case, quantile regression can be used. For each prediction, this method provides a coverage interval, which can be viewed as a dynamic confidence interval.

When applied to continuous target vectors:

- Conformal prediction can be combined with quantile regression techniques

- It produces a coverage interval for each prediction

- These intervals act as dynamic confidence intervals

- The method retains the key benefits of conformal prediction, such as distribution-free validity

This enables uncertainty quantification in regression tasks, giving more informative predictions than point estimates alone.
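One standard way to combine the two is conformalized quantile regression (CQR): fit lower and upper quantile models, then widen (or shrink) their band by a margin learned on a calibration set. The sketch below is a minimal version under our own assumptions: synthetic heteroscedastic data, scikit-learn's `GradientBoostingRegressor` with `loss="quantile"` as the quantile regressor, and alpha = 0.1 for a 90% target.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor

# Synthetic data whose noise grows with x (an assumption for illustration)
rng = np.random.default_rng(0)
X = rng.uniform(0, 5, size=(3000, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1 + 0.2 * X[:, 0])

X_train, y_train = X[:1000], y[:1000]
X_cal, y_cal = X[1000:2000], y[1000:2000]
X_test, y_test = X[2000:], y[2000:]

alpha = 0.1  # target miscoverage: aim for 90% intervals

# Quantile regressors for the lower and upper ends of the band
lower_model = GradientBoostingRegressor(loss="quantile", alpha=alpha / 2, random_state=0)
upper_model = GradientBoostingRegressor(loss="quantile", alpha=1 - alpha / 2, random_state=0)
lower_model.fit(X_train, y_train)
upper_model.fit(X_train, y_train)

# Conformity score: how far each calibration target falls outside its band
# (negative when it falls inside)
lo_cal, hi_cal = lower_model.predict(X_cal), upper_model.predict(X_cal)
scores = np.maximum(lo_cal - y_cal, y_cal - hi_cal)

# Finite-sample corrected quantile of the scores
n = len(y_cal)
level = min(np.ceil((n + 1) * (1 - alpha)) / n, 1.0)
qhat = np.quantile(scores, level, method="higher")

# Adjust the band by qhat on both sides
lower = lower_model.predict(X_test) - qhat
upper = upper_model.predict(X_test) + qhat
coverage = np.mean((y_test >= lower) & (y_test <= upper))
print(f"empirical coverage: {coverage:.3f}, mean width: {np.mean(upper - lower):.3f}")
```

Because the underlying quantile models adapt to x, the intervals are narrow where the noise is small and wide where it is large, while the calibration step keeps overall coverage near 90%.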

To conclude this blog post, let's walk through a practical implementation of conformal prediction in Python. We'll use the nonconformist package, a library built on top of scikit-learn that specializes in conformal prediction. To get started, install the necessary libraries with the following command:

```bash
pip install scikit-learn nonconformist
```

For our demonstration, we'll apply a multi-class Random Forest model to the classic Iris dataset. This example shows how conformal prediction can be integrated into a real-world machine-learning workflow, providing useful insight into prediction uncertainty.

Here's the code:

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier
from nonconformist.base import ClassifierAdapter
from nonconformist.nc import ClassifierNc, MarginErrFunc
from nonconformist.icp import IcpClassifier

# Load dataset
data = load_iris()
X, y = data.data, data.target

# Split the data into training (60%), calibration (20%), and test (20%) sets
X_train, X_temp, y_train, y_temp = train_test_split(X, y, test_size=0.4, random_state=42)
X_cal, X_test, y_cal, y_test = train_test_split(X_temp, y_temp, test_size=0.5, random_state=42)

# Define the underlying classifier
model = RandomForestClassifier(n_estimators=100, random_state=42)

# Set up the conformal predictor with a margin-based nonconformity function
nc = ClassifierNc(ClassifierAdapter(model), err_func=MarginErrFunc())
icp = IcpClassifier(nc)

# Fit the conformal predictor; this trains the wrapped model on the training data
icp.fit(X_train, y_train)

# Calibrate the conformal predictor with the held-out calibration set
icp.calibrate(X_cal, y_cal)

# Predict sets at significance 0.05 (target coverage 95%).
# The result is a boolean array of shape (n_test, n_classes).
prediction = icp.predict(X_test, significance=0.05)

print("Prediction sets:", prediction)
# Fraction of test points whose true label lies inside the predicted set
print("Empirical coverage:", np.mean([p[yy] for p, yy in zip(prediction, y_test)]))
```