Hey there! Before we explore optimizers in PyTorch, let’s try to understand what PyTorch is and why we need it.

What & Why

What’s PyTorch?

PyTorch is an open-source machine learning library based on the Torch library, originally developed by Facebook’s AI Research lab (now part of Meta AI). It’s a powerful and flexible framework for building and training deep neural networks and other machine learning models.

Why PyTorch?

4 out of 5 data scientists recommend PyTorch for its:

- Pythonic and intuitive design that closely follows Python syntax, making it easy to learn and use.

- Flexible and expressive API that lets you modify models on the fly.

- Strong GPU acceleration, leveraging CUDA and cuDNN for efficient tensor operations.

- Dynamic computation graphs, enabling easier debugging and more natural control flow.

- Automatic differentiation (autograd) for efficient backpropagation and gradient computation.

- Large and active community, with a rich ecosystem of libraries and resources.

- Interoperability with other popular Python libraries like NumPy and pandas.

- Deployment and production support, easing the integration of models into real-world applications.

P.S. The remaining 1? Well, let’s just say they’re still stuck in the Stone Age, trying to train their models with abacuses and carrier pigeons.

Now you should have a surface-level understanding of what PyTorch is and why we use it. Let’s dive deeper into the following topics.

What’s an Optimizer in PyTorch?

In PyTorch, an optimizer is a key component responsible for updating the weights and biases of a neural network during training. It plays a crucial role in minimizing the loss function and improving the model’s performance.

An optimizer is an algorithm that adjusts the model’s parameters (weights and biases) in the direction that reduces the loss function. The loss function measures the model’s error: the difference between the predicted output and the actual output. By minimizing the loss function, the optimizer helps the model learn from the training data and improve its accuracy.
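To make this concrete, here is a minimal sketch of one optimizer driving a training loop. The toy data (`y = 3x + 2`), the single-layer model, and the learning rate are assumptions chosen for illustration, not recommended settings.

```python
import torch
from torch import nn

torch.manual_seed(0)  # make the toy run reproducible

# Toy regression data: y = 3x + 2 (an illustrative assumption)
x = torch.linspace(-1, 1, 64).unsqueeze(1)
y = 3 * x + 2

model = nn.Linear(1, 1)   # one weight and one bias
loss_fn = nn.MSELoss()    # the loss function the optimizer minimizes
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for epoch in range(200):
    optimizer.zero_grad()        # clear gradients left over from the previous step
    loss = loss_fn(model(x), y)  # forward pass: measure the current error
    loss.backward()              # backpropagation: compute gradients
    optimizer.step()             # update the weight and bias using those gradients
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.6f}")
print(f"weight: {model.weight.item():.2f}, bias: {model.bias.item():.2f}")
```

The three calls `zero_grad()`, `backward()`, and `step()` are the usual rhythm of a PyTorch training loop; only `step()` actually changes the parameters.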

Why do we need it?

We need optimizers in PyTorch because:

1. They update the large number of weights and biases in neural networks during training.

2. They use gradients from backpropagation to determine how to adjust the parameters.

3. Different optimizers use different strategies to improve convergence speed and stability, and to navigate complex loss landscapes.

4. Some optimizers incorporate regularization techniques, such as weight decay, to help prevent overfitting.

5. Optimizers have hyperparameters, such as the learning rate, that can be tuned for better performance.

So, optimizers are essential algorithms that enable efficient and effective training of deep neural networks by updating parameters based on gradients and using techniques that improve convergence and generalization. A well-chosen, well-tuned optimizer can help protect the model from overfitting and underfitting.
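As a rough illustration of the points about hyperparameters and regularization, the sketch below sets a few common ones when creating an optimizer and then changes the learning rate afterwards. The model shape and every numeric value are placeholder assumptions.

```python
import torch
from torch import nn

model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1))

# lr, momentum and weight_decay are hyperparameters; the values are illustrative guesses
optimizer = torch.optim.SGD(
    model.parameters(),
    lr=0.01,            # step size
    momentum=0.9,       # smooths updates using past gradients
    weight_decay=1e-4,  # L2-style regularization applied by the optimizer
)

# Hyperparameters live in optimizer.param_groups and can be changed during training
for group in optimizer.param_groups:
    group["lr"] = 0.001

current_lr = optimizer.param_groups[0]["lr"]
print(current_lr)
```

Editing `param_groups` by hand is the simplest way to adjust a hyperparameter mid-training; learning rate schedulers in `torch.optim.lr_scheduler` automate the same idea.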

What are the types of Optimizers?

Some commonly used optimizers in PyTorch include:

- Stochastic Gradient Descent (SGD)

- Adam (Adaptive Moment Estimation)

- RMSprop (Root Mean Square Propagation)

- Adagrad (Adaptive Gradient Algorithm)

- Adadelta (Adaptive Learning Rate Method)

- AdamW (Adam with Weight Decay)
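Each optimizer in this list corresponds to a class in `torch.optim`. The sketch below builds one of each over the same small model to show that they share a constructor pattern. The learning rates are placeholder assumptions, and in a real project you would normally create only one optimizer per set of parameters.

```python
import torch
from torch import nn

model = nn.Linear(10, 1)

# Learning rates here are placeholder assumptions for illustration
optimizers = {
    "SGD": torch.optim.SGD(model.parameters(), lr=0.01),
    "Adam": torch.optim.Adam(model.parameters(), lr=1e-3),
    "RMSprop": torch.optim.RMSprop(model.parameters(), lr=1e-3),
    "Adagrad": torch.optim.Adagrad(model.parameters(), lr=0.01),
    "Adadelta": torch.optim.Adadelta(model.parameters(), lr=1.0),
    "AdamW": torch.optim.AdamW(model.parameters(), lr=1e-3, weight_decay=0.01),
}

for name, opt in optimizers.items():
    print(name, type(opt).__name__)
```

Because every class derives from `torch.optim.Optimizer`, swapping one for another usually means changing a single line of the training script.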

Where to use what?

- Stochastic Gradient Descent (SGD): SGD is like taking small steps downhill to find the lowest point in a valley. You start somewhere random and step in the direction where the ground drops most steeply, gradually getting closer to the lowest point. It’s simple and reliable, but it might zigzag a bit on the way down.

- Adam (Adaptive Moment Estimation): Adam is like a smart traveler who adjusts their step size based on how steep the slope is and how fast they’re moving. It’s adaptive and efficient, and often gives a smoother and faster descent down the valley. Think of it as finding a good path downhill while adjusting your pace along the way.

- RMSprop (Root Mean Square Propagation): RMSprop is like smoothing out the path downhill by averaging the steepness of the slope over recent steps. It damps sudden jumps in step size, giving a more stable descent. It’s like walking downhill on a bumpy road but with fewer bumps along the way.

- Adagrad (Adaptive Gradient Algorithm): Adagrad is like keeping track of how steep the slope has been at every step and adjusting your step size accordingly. It shrinks the step for parameters that have accumulated large gradients and keeps it larger for parameters whose gradients are small or rare. It’s like being more cautious on steep slopes and taking bigger steps on gentler slopes.

- Adadelta (Adaptive Learning Rate Method): Adadelta is like dynamically adjusting your step size based not only on the steepness of the slope but also on how large your recent steps have been. It adapts to changing conditions and keeps a more consistent pace downhill, without the step size shrinking forever as it can with Adagrad.

- AdamW (Adam with Weight Decay): AdamW is like Adam, but with an added focus on preventing certain parameters from growing too large. It applies weight decay directly to the parameters, separately from the gradient-based step, which encourages a more balanced and well-behaved model. It’s like trimming the bushes along your path downhill to keep them from growing too wild.
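For readers who prefer arithmetic to analogies, here is a plain-Python sketch of three of these update rules on a one-parameter toy loss, `f(w) = (w - 3)^2`. It follows the textbook formulas rather than PyTorch’s actual implementation, and the toy loss, starting point, and learning rates are all assumptions.

```python
import math

def grad(w):
    """Gradient of the toy loss f(w) = (w - 3)^2, whose minimum is at w = 3."""
    return 2.0 * (w - 3.0)

def run_sgd(w, lr=0.1, steps=300):
    for _ in range(steps):
        w -= lr * grad(w)  # step against the gradient, scaled only by lr
    return w

def run_adagrad(w, lr=0.5, steps=300, eps=1e-10):
    g_sq_sum = 0.0
    for _ in range(steps):
        g = grad(w)
        g_sq_sum += g * g                          # accumulated squared gradients only grow
        w -= lr * g / (math.sqrt(g_sq_sum) + eps)  # so the effective step keeps shrinking
    return w

def run_adam(w, lr=0.1, steps=300, beta1=0.9, beta2=0.999, eps=1e-8):
    m = 0.0  # running mean of gradients ("how fast you are moving")
    v = 0.0  # running mean of squared gradients ("how steep it has been")
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero-initialized averages
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

results = {
    "SGD": run_sgd(0.0),
    "Adagrad": run_adagrad(0.0),
    "Adam": run_adam(0.0),
}
for name, w in results.items():
    print(f"{name:8s} final w = {w:.4f}")
```

All three should end close to `w = 3`; what differs is how the step size is scaled along the way, which is exactly what the analogies above describe.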

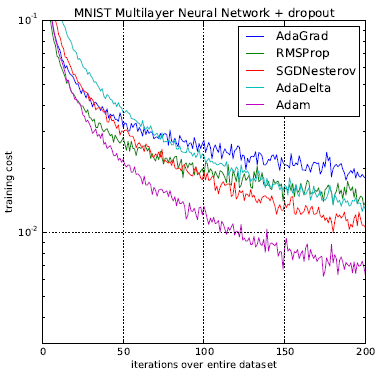

A graphical comparison of how different optimizers perform across epochs.
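A comparison like this can be produced by training the same model with several optimizers and recording the loss at each epoch. The sketch below is one way to do that under stated assumptions: the synthetic linear data, the hypothetical helper `train`, the shared learning rate, and the choice of three optimizers are all illustrative and are not how the figure above was generated.

```python
import torch
from torch import nn

def train(optimizer_cls, epochs=50, **opt_kwargs):
    """Train a fresh toy model and return the loss recorded at each epoch."""
    torch.manual_seed(0)  # same data and initial weights for every run
    x = torch.randn(128, 5)
    true_w = torch.arange(1.0, 6.0).unsqueeze(1)
    y = x @ true_w        # synthetic linear target (an assumption)
    model = nn.Linear(5, 1)
    optimizer = optimizer_cls(model.parameters(), **opt_kwargs)
    loss_fn = nn.MSELoss()
    history = []
    for _ in range(epochs):
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optimizer.step()
        history.append(loss.item())
    return history

curves = {
    "SGD": train(torch.optim.SGD, lr=0.05),
    "Adam": train(torch.optim.Adam, lr=0.05),
    "RMSprop": train(torch.optim.RMSprop, lr=0.05),
}

for name, history in curves.items():
    print(f"{name:8s} epoch 1: {history[0]:8.3f}   epoch {len(history)}: {history[-1]:8.3f}")
```

Each list in `curves` holds one loss value per epoch, so it can be passed straight to a plotting library. Keep in mind that the ranking of optimizers depends heavily on the problem and on the learning rate chosen for each.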

Conclusion

In sum, understanding optimizers in PyTorch is essential for effective deep learning. We’ve learned what they are, why they’re important, and the different types. From SGD to AdamW, each has its strengths for making models better.

Knowing which optimizer to use can help you train models faster and more accurately, and avoid common pitfalls like overfitting. With this knowledge, you can confidently tackle the challenges of deep learning and achieve your goals.

“First you learn, then make the machine learn!”

Happy coding : )