Neural networks are computational models inspired by the structure and functioning of the human brain. They are a fundamental part of many machine learning models. Neural networks learn by example.

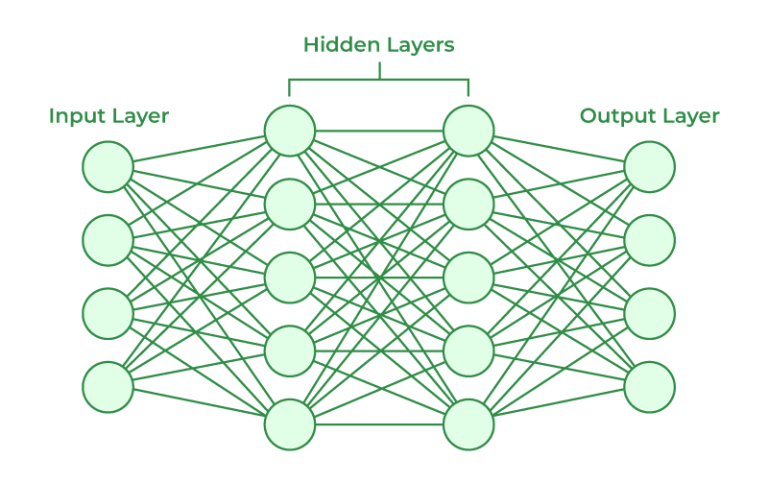

Neural networks are made of layers of neurons. These neurons form the core processing units of the network. Data is passed to the network through the input layer, and the output layer predicts the final result. The layers between the input and output layers, called hidden layers, perform most of the computation.
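
To make the idea of a neuron as a processing unit concrete, here is a minimal sketch of what a single neuron might compute: a weighted sum of its inputs plus a bias, passed through an activation function. The sigmoid activation and all numeric values are assumptions chosen for illustration; they do not come from any particular trained network.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Hypothetical values chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

output = neuron(inputs, weights, bias)
print(f"neuron output: {output:.4f}")
```

A layer is simply many such neurons reading the same inputs, each with its own weights and bias.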

Neural networks come in several types:

- Convolutional Neural Networks (CNN)

- Artificial Neural Networks (ANN)

- Recurrent Neural Networks (RNN)

Convolutional Neural Networks

CNNs are a special kind of neural network used for processing data that has a grid-like topology, such as images. The CNN was inspired by the visual cortex, a part of the human brain. CNNs rely on the convolution operation: a neural network can be identified as a CNN if it has at least one convolution layer. A typical CNN is built from the following layers:

- Convolution layer

- Pooling layer

- Fully connected layer

An important application of CNNs is image classification.

A digital image is stored as a matrix of pixel values. In the RGB model, a color image is actually composed of three such matrices, one for each of the three color channels: red, green and blue. For black-and-white images we only need one matrix. Each of these matrices stores values from 0 to 255.
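
As a rough illustration of this representation, the sketch below splits a tiny color image into its three channel matrices. It uses plain Python lists to stay dependency-free; the 2x2 image, its pixel values, and the helper name `split_channels` are all made up for this example. In practice a library such as NumPy would usually hold the image as a single height x width x 3 array.

```python
# A tiny 2x2 color image: each pixel is an (R, G, B) triple in the range 0-255.
# The pixel values are made up for illustration.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]


def split_channels(img):
    """Return one matrix per color channel: red, green, blue."""
    return [
        [[pixel[c] for pixel in row] for row in img]
        for c in range(3)
    ]


red, green, blue = split_channels(image)
print("red  :", red)
print("green:", green)
print("blue :", blue)

# A grayscale image needs only a single matrix of intensities.
gray = [
    [0, 128],
    [200, 255],
]
```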

Convolution is a process where we take a small matrix of numbers (called a kernel or filter), pass it over the image, and transform the image based on the values in the filter.
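
The sliding-kernel process described above can be sketched in a few lines of plain Python. This is a minimal version assuming no padding and a stride of 1. As in most deep learning libraries, the kernel is not flipped, so strictly speaking this is a cross-correlation. The image, the kernel values, and the function name `convolve2d` are hypothetical and chosen only to show a vertical edge being detected.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel with the patch under it, element by element, and sum.
            total = sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(kh)
                for b in range(kw)
            )
            row.append(total)
        output.append(row)
    return output


# Hypothetical 4x6 grayscale image: bright on the left, dark on the right.
image = [
    [10, 10, 10, 0, 0, 0],
    [10, 10, 10, 0, 0, 0],
    [10, 10, 10, 0, 0, 0],
    [10, 10, 10, 0, 0, 0],
]

# A simple vertical-edge kernel, chosen for illustration.
kernel = [
    [1, 0, -1],
    [1, 0, -1],
    [1, 0, -1],
]

feature_map = convolve2d(image, kernel)
print(feature_map)
```

The feature map is largest where the kernel straddles the boundary between the bright and dark regions, and zero where the patch under the kernel is uniform.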

```python
import tensorflow as tf
from tensorflow.keras import layers, models

# Define the CNN model
model = models.Sequential()

# Add convolutional layers, each followed by a max pooling layer
model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(150, 150, 3)))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))

# Flatten the output for the dense layers
model.add(layers.Flatten())

# Add dense layers; the single sigmoid unit outputs a probability for binary classification
model.add(layers.Dense(512, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))

# Compile the model
model.compile(optimizer='adam',
              loss='binary_crossentropy',
              metrics=['accuracy'])

# Display the model summary
model.summary()
```
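
To see how the image shrinks as it moves through the model above, the following sketch reproduces the shape arithmetic by hand. It assumes the Keras defaults that the model relies on: convolutions with no padding and a stride of 1, and 2x2 pooling windows that do not overlap. The helper names `conv_output_size` and `pool_output_size` are introduced here for illustration only.

```python
def conv_output_size(size, kernel=3):
    """Spatial size after a convolution with no padding and stride 1."""
    return size - kernel + 1


def pool_output_size(size, pool=2):
    """Spatial size after non-overlapping max pooling (any remainder is dropped)."""
    return size // pool


size = 150                      # input images are 150x150
filters = [32, 64, 128, 128]    # number of filters in each Conv2D layer of the model

for n_filters in filters:
    size = conv_output_size(size)
    print(f"Conv2D({n_filters}) -> {size}x{size}x{n_filters}")
    size = pool_output_size(size)
    print(f"MaxPooling2D -> {size}x{size}x{n_filters}")

flattened = size * size * filters[-1]
print("Flatten ->", flattened)
```

Under these assumptions the 150x150 input ends up as a 7x7x128 volume, which the Flatten layer turns into a vector of 6272 values for the dense layers.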

Artificial Neural Networks (ANN)

Artificial Neural Networks consist of artificial neurons, which are called units. These units are arranged in a series of layers that together make up the whole network.

Like a CNN, an Artificial Neural Network has an input layer, an output layer and hidden layers. The input layer receives data from the outside world that the network needs to analyze or learn from. This data then passes through one or more hidden layers, which transform the input into a form that is useful for the output layer. Finally, the output layer produces the network's response to the input data.
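
The flow from input layer to hidden layer to output layer can be sketched as a small forward pass. This is only an illustration under stated assumptions: the layer sizes, the ReLU and sigmoid activations, and the random untrained weights are all hypothetical, and the helpers `dense` and `random_layer` are written here for the example rather than taken from any library.

```python
import math
import random


def dense(inputs, weights, biases, activation):
    """One fully connected layer: every unit sees every input."""
    return [
        activation(sum(x * w for x, w in zip(inputs, unit_weights)) + b)
        for unit_weights, b in zip(weights, biases)
    ]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def random_layer(n_inputs, n_units, rng):
    """Untrained placeholder weights; a real network would learn these."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_units)]
    biases = [0.0] * n_units
    return weights, biases


rng = random.Random(0)
hidden_w, hidden_b = random_layer(3, 4, rng)  # 3 inputs -> 4 hidden units
output_w, output_b = random_layer(4, 1, rng)  # 4 hidden units -> 1 output

x = [0.2, 0.7, -0.5]                            # input layer: raw data
hidden = dense(x, hidden_w, hidden_b, relu)     # hidden layer: transformed features
y = dense(hidden, output_w, output_b, sigmoid)  # output layer: the network's response

print("hidden:", hidden)
print("output:", y)
```

Training would adjust the weights and biases so that the output moves closer to the desired answer for each example.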

ANNs have found a wide range of applications across various domains because of their ability to learn complex patterns and relationships from data. Image recognition and classification, speech recognition, and autonomous vehicles are a few of these applications.

```python
import tensorflow as tf
from tensorflow.keras import layers, models

# Number of output classes; 10 is a placeholder, set it to match your dataset
num_classes = 10

# Define the speech recognition model
model = models.Sequential()

# Add an LSTM layer (a recurrent layer that reads the audio features step by step).
# Input shape is (time_steps, features): variable length, 13 features per time step
model.add(layers.LSTM(128, input_shape=(None, 13)))

# Add a dense layer for classification over num_classes output classes
model.add(layers.Dense(num_classes, activation='softmax'))

# Compile the model
model.compile(optimizer='adam',
              loss='categorical_crossentropy',
              metrics=['accuracy'])

# Display the model summary
model.summary()
```
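
The model above is compiled with `categorical_crossentropy`, which in Keras expects each label as a one-hot vector (integer labels would call for `sparse_categorical_crossentropy` instead). The sketch below shows, in plain Python, what a one-hot label looks like and how the loss rewards a confident correct prediction. The three-class setup, the probability values, and the helper functions are hypothetical, and the loss here is a simplified single-sample version rather than the Keras implementation.

```python
import math


def one_hot(label, num_classes):
    """Encode an integer class label as a vector with a single 1."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Cross-entropy between a one-hot target and a predicted distribution."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


# Hypothetical 3-class example: the true class is 2.
y_true = one_hot(2, num_classes=3)

confident = [0.05, 0.05, 0.90]  # softmax output that favors the right class
unsure = [0.40, 0.35, 0.25]     # softmax output that favors a wrong class

loss_confident = categorical_crossentropy(y_true, confident)
loss_unsure = categorical_crossentropy(y_true, unsure)

print("loss (confident):", loss_confident)
print("loss (unsure):   ", loss_unsure)
```

The loss is lower when more probability is assigned to the true class, which is what the optimizer tries to achieve during training.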