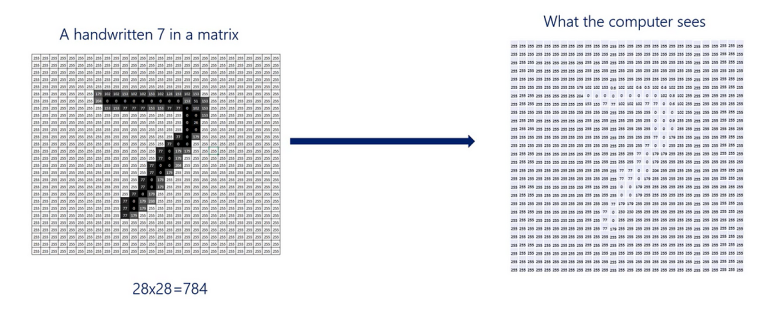

How are we going to approach this image recognition problem using the MNIST dataset? Each image in the MNIST dataset is 28 pixels by 28 pixels and is presented in grayscale. This means we can think of each image as a 28×28 matrix where the pixel values range from 0 to 255, with 0 representing pure black and 255 representing pure white.

For example, a handwritten digit ‘7’ in matrix form might look something like this:

That’s an approximation, but the idea is more or less the same.

Flattening the Image

All images are the same size, so every image contains 28 × 28 = 784 pixels. For a deep feed-forward neural network, we transform, or flatten, each image into a vector of length 784. Each pixel intensity becomes an input feature, giving us 784 input units in our input layer.
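As a minimal sketch of the flattening step, the snippet below uses only the Python standard library. The `image` grid is a made-up stand-in for one MNIST image (not real data), and `flatten` is a hypothetical helper name; in practice a library such as NumPy would typically do this with a single reshape.

```python
# Hypothetical stand-in for one MNIST image: a 28x28 grid of grayscale values (0-255).
ROWS, COLS = 28, 28
image = [[0 for _ in range(COLS)] for _ in range(ROWS)]
image[5][10] = 255  # one white pixel, so we can track where it ends up


def flatten(img):
    """Concatenate the rows of a 2D grid into one flat list (row-major order)."""
    return [pixel for row in img for pixel in row]


vector = flatten(image)

print(len(vector))            # 784
print(vector[5 * COLS + 10])  # 255: pixel (row 5, col 10) lands at index 150
```

Row-major order means the pixel at row `r`, column `c` ends up at index `r * 28 + c`, so the same pixel position always feeds the same input unit.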

Building the Model

- Input Layer: We start with 784 input units, one for each pixel.

- Hidden Layers: We then create hidden layers. For our example, we’ll use two hidden layers, which are sufficient to build a model with very good accuracy.

- Output Layer: The output layer will have 10 units, one for each digit (0–9). We will use one-hot encoding for both the outputs and the targets. For example, the digit ‘0’ will be represented by the vector [1, 0, 0, 0, 0, 0, 0, 0, 0, 0] and the digit ‘5’ by [0, 0, 0, 0, 0, 1, 0, 0, 0, 0].
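The one-hot encoding described above can be sketched in a few lines of plain Python. The helper name `one_hot` and the constant `NUM_CLASSES` are illustrative choices, not part of any particular library.

```python
NUM_CLASSES = 10  # one class per digit, 0 through 9


def one_hot(digit, num_classes=NUM_CLASSES):
    """Return a list of zeros with a single 1 at the position of `digit`."""
    if not 0 <= digit < num_classes:
        raise ValueError(f"digit must be in [0, {num_classes - 1}], got {digit}")
    vector = [0] * num_classes
    vector[digit] = 1
    return vector


print(one_hot(0))  # [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
print(one_hot(5))  # [0, 0, 0, 0, 0, 1, 0, 0, 0, 0]
```

Because the targets and the 10 output units share this layout, position `i` of the output can be compared directly against position `i` of the target.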

To obtain a probability for each digit, we’ll use the softmax activation function in the output layer. Softmax turns the 10 output values into probabilities that sum to 1.
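To make the architecture concrete, here is a rough sketch of a single forward pass through a 784 → hidden → hidden → 10 network ending in softmax, written with only the standard library. Several details are assumptions that the text does not specify: the hidden layer width (`HIDDEN = 16`), the ReLU activation in the hidden layers, the small random initial weights, and the random input vector standing in for a flattened image. The network is untrained, so the probabilities it prints carry no meaning beyond showing the shapes involved.

```python
import math
import random


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def relu(values):
    """Assumed hidden activation: keep positives, zero out negatives."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer; weights[j] holds the weights of output unit j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (an illustrative choice)."""
    weights = [[rng.gauss(0.0, 0.01) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(x, layers):
    """Run x through the hidden layers (ReLU), then the softmax output layer."""
    *hidden_layers, output_layer = layers
    for weights, biases in hidden_layers:
        x = relu(dense(x, weights, biases))
    weights, biases = output_layer
    return softmax(dense(x, weights, biases))


rng = random.Random(0)
HIDDEN = 16  # hypothetical width; the text only says "two hidden layers"
layers = [
    init_layer(784, HIDDEN, rng),     # input -> hidden layer 1
    init_layer(HIDDEN, HIDDEN, rng),  # hidden layer 1 -> hidden layer 2
    init_layer(HIDDEN, 10, rng),      # hidden layer 2 -> 10 output units
]

x = [rng.random() for _ in range(784)]  # stand-in for a flattened image
probs = forward(x, layers)
predicted_digit = max(range(10), key=lambda i: probs[i])

print(len(probs))  # 10
print(sum(probs))  # approximately 1.0
```

The predicted digit is simply the index of the largest probability, which lines up with the position of the 1 in the one-hot target vector.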