Activation functions are used to fit a neuron’s output into a certain range. Different functions map it to different ranges, for example between 0 and 1 or between -1 and 1. In simple terms, an activation function takes the value calculated from the inputs, weights and biases and “activates” it by mapping it to an output within that range.

There are linear and non-linear activation functions:

-> Linear activation function (identity function)

Also known as “no activation”. The activation is proportional to the input. The function does nothing to the weighted sum of the inputs; it simply returns the value it is given.
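As a minimal sketch of the idea above, the snippet below computes a weighted sum plus bias and passes it through an identity activation. The names `identity` and `neuron` and the example numbers are assumptions made for illustration, not part of any library.

```python
def identity(z):
    """Linear (identity) activation: returns its input unchanged."""
    return z


def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


# Illustrative values only: 1.0*0.5 + 2.0*(-0.25) + 0.1
out = neuron([1.0, 2.0], [0.5, -0.25], 0.1, identity)
print(out)
```

Because `identity` changes nothing, the neuron’s output is just the weighted sum itself.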

-> Non-linear activation functions:

1) Sigmoid function

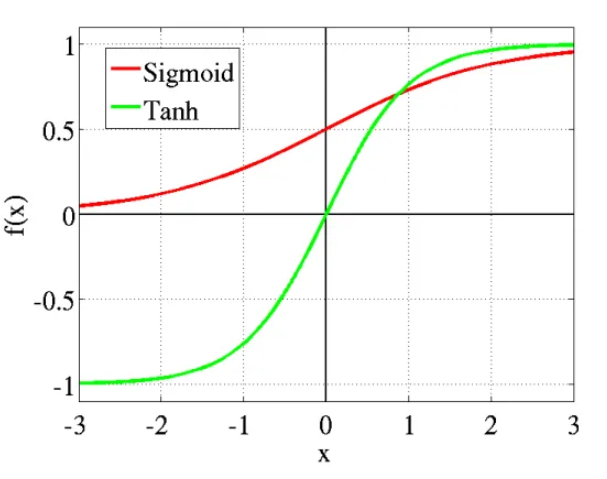

This function looks like an S-shaped curve. Its output lies between 0 and 1; it approaches both ends but never quite reaches them.

If the network or model has to predict something where the output is a yes or no (1 or 0), we use the sigmoid activation function. The sigmoid function is differentiable, which means we can find the slope of the curve at any point.
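The sigmoid is usually written as 1 / (1 + e^(-z)), and its slope at any point is sigmoid(z) * (1 - sigmoid(z)). Here is a rough sketch in plain Python; the function names and the 0.5 threshold used for the yes/no decision are assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    """Logistic sigmoid, written so that large |z| does not overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # safe: z is negative here
    return e / (1.0 + e)


def sigmoid_derivative(z):
    """Slope of the sigmoid curve at z."""
    s = sigmoid(z)
    return s * (1.0 - s)


def predict(z, threshold=0.5):
    """Turn the sigmoid output into a yes/no (1/0) decision."""
    return 1 if sigmoid(z) >= threshold else 0


print(sigmoid(0.0), sigmoid_derivative(0.0), predict(2.0), predict(-2.0))
```

The slope is steepest at z = 0 and flattens out as z moves far from zero in either direction.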

2) Tanh activation function (hyperbolic tangent)

This is also an S-shaped function and is very similar to the sigmoid function, but its output lies between -1 and 1.

It is used in cases where the output needs to distinguish negative, neutral and positive values: close to -1, around 0, and close to 1.
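To make the similarity to the sigmoid concrete: tanh is a rescaled and shifted sigmoid, tanh(z) = 2 * sigmoid(2z) - 1. The sketch below checks this with Python’s built-in `math.tanh`; the helper names are assumptions for illustration.

```python
import math


def sigmoid(z):
    """Logistic sigmoid, written so that large |z| does not overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def tanh_via_sigmoid(z):
    """tanh expressed as a stretched, zero-centered sigmoid."""
    return 2.0 * sigmoid(2.0 * z) - 1.0


for z in (-3.0, 0.0, 3.0):
    # Negative input -> near -1, zero -> 0, positive input -> near 1
    print(z, math.tanh(z), tanh_via_sigmoid(z))
```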

3) ReLU

The rectified linear unit activation is pretty simple. For every x > 0, f(x) = x, and for every x ≤ 0, f(x) = 0. The derivative f’(x) is 1 for x > 0 and 0 for x < 0. The function is differentiable at every point except x = 0.
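A small sketch of ReLU and its derivative follows. Since the derivative is undefined at x = 0, this example assumes the common convention of returning 0 there; that choice, like the function names, is an assumption rather than something fixed by the definition.

```python
def relu(x):
    """Rectified linear unit: x for positive inputs, 0 otherwise."""
    return x if x > 0 else 0.0


def relu_derivative(x):
    """Slope of ReLU: 1 for x > 0, 0 for x < 0.

    At x == 0 the derivative does not exist; returning 0 is a convention.
    """
    return 1.0 if x > 0 else 0.0


for x in (-2.0, 0.0, 3.0):
    print(x, relu(x), relu_derivative(x))
```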

4) Leaky ReLU

It’s an improved version of ReLU.

In ReLU, for all values of x < 0, f(x) = 0.

In leaky ReLU, for x < 0, f(x) = 0.1 * x.

This is done so that inputs less than 0 still produce a very small output rather than just 0. It addresses the disadvantage of regular ReLU, where negative inputs give both zero output and zero slope, so the neuron gets no signal to learn from.
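Here is a minimal sketch of leaky ReLU using the 0.1 slope from the text. The parameter name `negative_slope` is an assumption for illustration; the slope is a tunable value, and smaller choices such as 0.01 are also common.

```python
def leaky_relu(x, negative_slope=0.1):
    """Leaky ReLU: x for positive inputs, a small multiple of x otherwise."""
    return x if x > 0 else negative_slope * x


def leaky_relu_derivative(x, negative_slope=0.1):
    """Slope of leaky ReLU; unlike ReLU it is never exactly zero for x < 0."""
    return 1.0 if x > 0 else negative_slope


for x in (-2.0, 3.0):
    print(x, leaky_relu(x), leaky_relu_derivative(x))
```

Compared with plain ReLU, the only change is the branch for non-positive inputs.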

5) Softmax activation function

It’s similar to a logistic function like the sigmoid function. It’s basically a combination of multiple sigmoids. It’s usually used in the final layer.

It’s used for multiclass classification, because it allows the output to be a probability distribution over multiple classes.
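As a rough sketch, softmax exponentiates each raw score and divides by the sum, so the results are positive and add up to 1. The function name and the example scores below are assumptions for illustration; subtracting the maximum first is a standard trick to avoid overflow and does not change the result.

```python
import math


def softmax(scores):
    """Map a list of raw scores to a probability distribution."""
    m = max(scores)  # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Illustrative scores for three classes
probs = softmax([2.0, 1.0, 0.1])
print(probs, sum(probs))

# With two classes and one score fixed at 0, softmax reduces to the sigmoid
print(softmax([1.5, 0.0])[0], 1.0 / (1.0 + math.exp(-1.5)))
```

The largest score gets the largest probability, and the two-class case shows the link to the sigmoid mentioned above.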