If you have tried to train a deep neural network model, you know that one of the most important preprocessing steps is normalizing the data before passing it to the model. Different data types may require different normalizations, but in the end the goal is to prepare the data so that all the numbers are in a similar range. Here, I want to talk about what I learned about normalization, specifically about normalizing images for deep neural network training.

I’ve been working on a project to identify objects in microscopic images, specifically identifying and locating “tangles”, which are abnormal accumulations of a protein called tau inside nerve cells (neurons) in the brain. Tangles are one of the hallmarks of Alzheimer’s disease and are believed to contribute to the degeneration and death of neurons, leading to the progressive cognitive decline seen in affected individuals. In simpler terms, tangles are like knots that form inside brain cells, and they’re a big deal in Alzheimer’s disease. These knots change over time, and as they do, they disrupt how brain cells work, making it harder for people to think and remember things. Scientists have found that these knots go through different stages: they start as “pre-tangles”, then become “mature-tangles”, and finally turn into “ghost-tangles”. Understanding these stages is important because it helps scientists figure out how to detect Alzheimer’s earlier and develop better treatments for it.

This was all I knew about these objects, and now I was responsible for finding the best object detection model for the dataset I had to train my deep neural network on.

So, a sample image from the training dataset looked something like this:

When you overlay the bounding boxes on top of the image, it looks like this:

Why was it important to understand the underlying data?

After studying the data, I found that what distinguished the two classes was the shade of brown:

- mature-tangle = dark brown

- ghost-tangle = light brown

So, I had to be careful to normalize the data without altering this important information in the image.

Once I understood how a human sees the data, I wanted to see how the model sees it. Whether you are using TensorFlow or PyTorch, you usually have a line of code like:

```python
loss = model(images, targets)
```

where `images` is the batch of images given to the model and `targets` holds the ground-truth labels we want the model to learn to predict (for object detection, the class labels and bounding boxes).

Focusing on the `images` variable: it expects a certain data type, which is usually `float32`.

A `float32` variable can hold a very wide range of values, roughly from -3.4 × 10^38 to +3.4 × 10^38 (plus -∞ and +∞). So what range do we actually want to provide?

The image should have the right data type and the right range

Data Type: Images are usually stored as 8-bit unsigned integers (`uint8`), which means each channel of each pixel takes a value from 0 (no intensity, black) to 255 (full intensity). So, when we load the above image in our code and check its data type, min, and max, we usually get:

```python
image.dtype  # uint8
image.min()  # 0
image.max()  # 255
```

Since we need to feed the image as `float32`, we have to convert it. After the conversion we should get:

```python
image.dtype  # float32
image.min()  # 0.0
image.max()  # 255.0
```
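A minimal NumPy sketch of the two checks above, assuming the image is already a NumPy array. The random array is a hypothetical stand-in for the microscopy image; in practice it would come from an image reader such as PIL or OpenCV. It shows that casting to `float32` changes only the storage type, not the range.

```python
import numpy as np

# Hypothetical stand-in for a loaded RGB image: height x width x 3, uint8.
rng = np.random.default_rng(seed=0)
image = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
image[0, 0] = 0    # force one black pixel so the min is exactly 0
image[0, 1] = 255  # force one full-intensity pixel so the max is exactly 255

print(image.dtype, image.min(), image.max())  # uint8 0 255

# Casting changes the data type only; the values are still in [0, 255].
image_f = image.astype(np.float32)
print(image_f.dtype, image_f.min(), image_f.max())  # float32 0.0 255.0
```

Normalization is therefore a separate step on top of the cast: after `astype` the numbers are floats, but they are still on the 0 to 255 scale.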

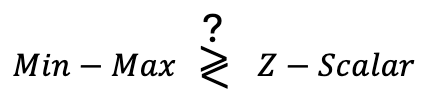

Range: At this point, we could pass the variable to the model as is, but this is where normalization comes into play and we adjust the values. So, the most important question is: how should I adjust the range? Two common normalization techniques are:

- Z-Score normalization: transforming values so that they have a mean of 0 and a standard deviation of 1.

- Min-Max normalization: scaling values so that they fall in a specific range, typically [0, 1] or [-1, 1].

I tried both methods, visualized the image after each normalization, and determined which technique worked best for my data.

Z-Score normalization: In this technique, we transform the pixel values of the images so that they have a mean of 0 and a standard deviation of 1. Note that this only shifts and rescales the values; it does not make their distribution Normal (Gaussian). We calculate the mean (μ) and standard deviation (σ) of the whole dataset, and for each image we use the following formula to rescale the intensity value x of each pixel:

$$x' = \frac{x - \mu}{\sigma}$$
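A minimal sketch of dataset-wide Z-Score normalization in NumPy. It assumes the images are stacked into one `float32` array shaped (N, H, W, C) and that μ and σ are computed per channel; per-channel statistics are a common convention but an assumption here, since the text only says "the whole dataset". The names `dataset_mean_std` and `z_score` are hypothetical helpers, and the toy dataset is random.

```python
import numpy as np

def dataset_mean_std(images):
    """Per-channel mean and std over every pixel of every image.

    `images` is assumed to be a float32 array shaped (N, H, W, C).
    """
    mean = images.mean(axis=(0, 1, 2))
    std = images.std(axis=(0, 1, 2))
    return mean, std

def z_score(image, mean, std, eps=1e-8):
    """Apply x' = (x - mean) / std channel-wise; eps guards against std == 0."""
    return (image - mean) / (std + eps)

# Hypothetical toy dataset: 4 random RGB images of size 32x32.
rng = np.random.default_rng(seed=0)
dataset = rng.integers(0, 256, size=(4, 32, 32, 3)).astype(np.float32)

mean, std = dataset_mean_std(dataset)
normalized = np.stack([z_score(img, mean, std) for img in dataset])

print(normalized.mean(axis=(0, 1, 2)))  # close to 0 for each channel
print(normalized.std(axis=(0, 1, 2)))   # close to 1 for each channel
```

The statistics are computed once, over the training set, and then reused for every image, so all images go through the same affine transform.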

After the transformation, the image looks like this:

This normalization lost the shade of brown, the important information that differentiates my two classes. So, this was not the right kind of normalization for my dataset.

Min-Max normalization: In this technique, we rescale the values into a specific range, typically [0, 1] or [-1, 1]. It linearly maps the original data onto the new range using the minimum and maximum of the original values.
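A minimal sketch of Min-Max normalization, assuming the formula x' = (x − lo) / (hi − lo) · (new_max − new_min) + new_min. The text does not pin down whether lo and hi are the fixed `uint8` bounds (0 and 255) or the observed min and max of the data, so the hypothetical helper `min_max` takes them as parameters. Using one lo/hi for all channels applies the same scale to R, G, and B.

```python
import numpy as np

def min_max(image, lo, hi, new_min=0.0, new_max=1.0):
    """Linearly map [lo, hi] onto [new_min, new_max].

    x' = (x - lo) / (hi - lo) * (new_max - new_min) + new_min
    """
    scaled = (image - lo) / (hi - lo)
    return scaled * (new_max - new_min) + new_min

# One pixel with three channels, already cast to float32.
image = np.array([[[0, 128, 255]]], dtype=np.float32)

to_unit = min_max(image, lo=0.0, hi=255.0)                             # into [0, 1]
to_sym = min_max(image, lo=0.0, hi=255.0, new_min=-1.0, new_max=1.0)  # into [-1, 1]

print(to_unit)  # approximately [[[0. 0.502 1.]]]
print(to_sym)   # approximately [[[-1. 0.0039 1.]]]
```

To use the observed range instead of the fixed one, pass `lo=image.min()` and `hi=image.max()`.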

After the transformation, the image looks like this:

This normalization preserved the shade of brown, the important information that differentiates my two classes. So, this was the right kind of normalization for my dataset.

I learned that the right type of normalization depends on how we look at the data and which visual cues help us find and label the objects. We must make sure we preserve those features during the preprocessing transformation. For my dataset, it was Min-Max, but for another dataset it might be Z-Score.

For my future projects, I will try both normalizations and inspect the resulting image before feeding it to the network, to make sure I’m not destructively altering something in the image.
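A minimal sketch of that inspection step, showing one way a normalization can destroy a color cue. It rests on two assumptions: the viewer clips float RGB values to [0, 1] (matplotlib's `imshow` clips out-of-range RGB data this way), and Z-Score uses per-channel statistics. The brown pixel values and the mean/std numbers are made up for illustration, and `as_displayed` and `hue_of` are hypothetical helpers. The check compares the hue of a dark-brown pixel before and after each normalization.

```python
import colorsys
import numpy as np

def as_displayed(image):
    """Clip a float RGB image to [0, 1], as a float RGB viewer would."""
    return np.clip(image, 0.0, 1.0)

def hue_of(pixel):
    """Hue in [0, 1) of an RGB pixel whose channels lie in [0, 1]."""
    r, g, b = (float(c) for c in pixel)
    return colorsys.rgb_to_hsv(r, g, b)[0]

# Hypothetical dark-brown pixel (uint8-scale RGB values, for illustration).
dark_brown = np.array([101, 67, 33], dtype=np.float32)
original = dark_brown / 255.0

# Min-Max with one global range for all channels: the hue is untouched.
mm_dark = as_displayed(dark_brown / 255.0)

# Z-Score with made-up per-channel statistics: each channel gets its own shift
# and scale, and here every channel goes negative, so the pixel is shown as black.
mean = np.array([150.0, 120.0, 100.0], dtype=np.float32)
std = np.array([40.0, 35.0, 30.0], dtype=np.float32)
zs_dark = as_displayed((dark_brown - mean) / std)

print(hue_of(original), hue_of(mm_dark))  # same hue
print(zs_dark)                            # [0. 0. 0.]: the brown cue is gone
```

Running a check like this on a few pixels from each class, before training, is a quick way to confirm that the distinguishing cue survives preprocessing.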

Two rules of thumb I came across while researching this were:

- Z-Score normalization ensures a consistent distribution, making it preferable when keeping the scale and distribution of the data consistent is critical. It is more commonly used in scenarios where we don’t care about the absolute intensity and range of the pixels. For example, when training a model that detects cars in an image, whether it is day or night is not important.

- Min-Max normalization preserves the relative information. It is more commonly used in scenarios where the absolute scale of pixel values matters. For example, when training a model that detects fire in an image, whether it is day or night is important.
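A minimal sketch of the day/night contrast in these rules of thumb. It assumes per-image Z-Score (each image standardized with its own mean and std) and Min-Max with the fixed bounds 0 and 255. With dataset-wide statistics, as used earlier in this post, Z-Score would not erase the brightness difference. The tiny "night" and "day" arrays are hypothetical, with day = 2 · night + 40.

```python
import numpy as np

def per_image_z_score(image, eps=1e-8):
    """Standardize one image with its own mean and std (not dataset-wide)."""
    return (image - image.mean()) / (image.std() + eps)

def fixed_min_max(image):
    """Scale uint8-range values to [0, 1] using the fixed bounds 0 and 255."""
    return image / 255.0

# Hypothetical night scene and a brighter, higher-contrast day version of it.
night = np.array([[10.0, 30.0], [50.0, 70.0]], dtype=np.float32)
day = 2.0 * night + 40.0

# Per-image Z-Score maps both scenes to the same values: brightness is gone.
same_after_z = np.allclose(per_image_z_score(night), per_image_z_score(day), atol=1e-5)

# Fixed Min-Max keeps the day scene brighter than the night scene.
day_brighter = fixed_min_max(day).mean() > fixed_min_max(night).mean()

print(same_after_z, day_brighter)  # True True
```

Any positive scaling plus offset of an image cancels out under per-image standardization, which is why it suits tasks where overall lighting should not matter.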