Convolutional Neural Networks (CNNs) are a type of deep neural network that is particularly well suited to processing data with a grid-like topology, such as images. CNNs have been instrumental in achieving state-of-the-art results in many computer vision tasks, including image classification, object detection, and semantic segmentation.

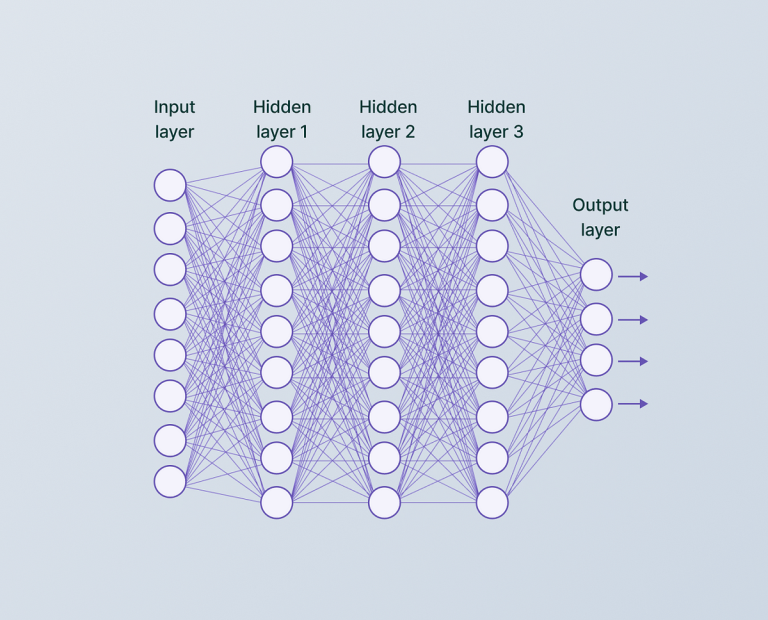

To better understand CNNs, it is essential to review the fundamentals of neural networks. These models are loosely inspired by the networks of neurons in the human brain. They consist of interconnected nodes, or neurons, organized in layers. The neurons receive input signals, perform computations on them, and pass the output to the next layer.

The simplest form of neural network is the feedforward neural network, such as the multilayer perceptron (MLP). In an MLP, the neurons are organized into an input layer, one or more hidden layers, and an output layer. The input layer receives the raw data, and the output layer produces the final predictions or classifications. The hidden layers perform nonlinear transformations on the input data, enabling the network to learn complex patterns and relationships.
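To make the layered computation concrete, here is a minimal sketch of an MLP forward pass in plain Python. The `dense` and `relu` helpers and all weight values are hypothetical toy choices for illustration, not a trained network: two inputs feed three ReLU hidden units, which feed one linear output unit.

```python
def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: out[j] = act(sum_i inputs[i] * weights[i][j] + biases[j])."""
    outputs = []
    for j in range(len(biases)):
        z = sum(inputs[i] * weights[i][j] for i in range(len(inputs))) + biases[j]
        outputs.append(activation(z) if activation else z)
    return outputs


def relu(z):
    """Rectified linear unit: the nonlinearity applied in the hidden layer."""
    return max(0.0, z)


# Hypothetical toy weights: 2 inputs -> 3 hidden units -> 1 output
w_hidden = [[1.0, -1.0, 0.5],
            [0.5, 2.0, -1.0]]
b_hidden = [0.0, 0.0, 0.1]
w_out = [[1.0], [1.0], [1.0]]
b_out = [0.0]

x = [1.0, 2.0]                              # input layer: the raw data
hidden = dense(x, w_hidden, b_hidden, relu)  # hidden layer: nonlinear transformation
output = dense(hidden, w_out, b_out)         # output layer: final prediction
print(hidden, output)  # [2.0, 3.0, 0.0] [5.0]
```

The third hidden unit's pre-activation is negative, so ReLU zeroes it; this kind of nonlinearity is what lets stacked layers represent more than a single linear map.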

CNNs are a specialized type of neural network designed to process grid-like data, such as images. They are built from three main types of layers: convolutional layers, pooling layers, and fully connected layers.

The convolutional layer is the core building block of a CNN. It performs a mathematical operation called convolution, a specialized kind of linear operation that multiplies a set of weights (known as a filter or kernel) with the input data and sums the results.

The convolution is performed by sliding the filter over the input data, computing the element-wise multiplication and sum at each position, and producing a feature map as the output. The filter acts as a feature detector, capturing specific patterns in the input data, such as edges, curves, or textures.

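Mathematically, the convolution operation can be expressed as:

$$(I * K)(i, j) = \sum_m \sum_n I(i+m,\ j+n)\, K(m, n)$$

where:

- $I$ is the input data (e.g., an image)
- $K$ is the filter or kernel
- $i$ and $j$ are the indices of the output feature map
- $m$ and $n$ are the indices of the filter

Strictly speaking, because the kernel is not flipped, this formula describes cross-correlation; deep learning libraries implement this form and still call it convolution.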
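The formula can be implemented directly. The sketch below assumes a single-channel input, stride 1, and no padding ("valid" convolution), and applies the kernel exactly as written, without flipping it. The 4×4 image and the 2×2 vertical-edge kernel are hypothetical values chosen to show the filter acting as a feature detector.

```python
def conv2d(image, kernel):
    """Valid 2D convolution (stride 1, no padding), as in the formula:
    out[i][j] = sum over m, n of image[i + m][j + n] * kernel[m][n].
    The kernel is not flipped, matching deep learning libraries."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + m][j + n] * kernel[m][n] for m in range(kh) for n in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 4x4 image with a vertical edge between columns 1 and 2
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A simple vertical-edge detector (illustrative weights)
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)  # each row is [0, 2, 0]
```

The feature map responds only in the column where the window straddles the edge, and is zero over the flat regions on either side.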

Multiple filters can be applied to the input data, producing multiple feature maps that capture different patterns or features.
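The sketch below extends single-filter convolution to a full layer. It assumes a multi-channel input stored as height × width × channels nested lists, stride 1, no padding, and filters that all share one size. Each filter spans every input channel and contributes one output channel, so F filters yield F stacked feature maps. The input and filter values are hypothetical.

```python
def conv_layer(image, filters):
    """Apply several filters to a multi-channel input (valid padding, stride 1).

    image:   H x W x C nested lists (height, width, channels)
    filters: list of kh x kw x C kernels, all assumed to share one size
    returns: H' x W' x F nested lists, one output channel per filter
    """
    kh, kw = len(filters[0]), len(filters[0][0])
    channels = len(image[0][0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            [
                # Each filter spans every input channel and yields one number per position
                sum(image[i + m][j + n][c] * f[m][n][c]
                    for m in range(kh) for n in range(kw) for c in range(channels))
                for f in filters
            ]
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hypothetical 3 x 3 x 2 input: channel 0 is constant 1, channel 1 holds the row index
image = [[[1, i] for _ in range(3)] for i in range(3)]
filter_a = [[[1, 0], [1, 0]], [[1, 0], [1, 0]]]  # sums channel 0 over a 2x2 window
filter_b = [[[0, 1], [0, 1]], [[0, 1], [0, 1]]]  # sums channel 1 over a 2x2 window

out = conv_layer(image, [filter_a, filter_b])
print(len(out), len(out[0]), len(out[0][0]))  # 2 2 2: a 2x2 map with 2 feature maps
print(out)  # [[[4, 2], [4, 2]], [[4, 6], [4, 6]]]
```

The first feature map is uniform because channel 0 is constant. The second changes with the row, so the two filters respond to different properties of the same input.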

Pooling layers are commonly inserted between successive convolutional layers to downsample the spatial size of the representation. A pooling layer has two key hyperparameters: the window size and the stride. Within each window, the pooling operation takes either the maximum value or the average of the values, depending on the type of pooling used. The pooling layer operates independently on every depth slice (channel) of the input, resizing each slice spatially and then stacking the results back together.

In a CNN architecture, the pooling layer is typically placed after a convolutional layer. Its primary purpose is to reduce the spatial dimensions (height and width) of the feature maps, which lowers the computational cost and provides a degree of invariance to small translations.

The most common pooling operation is max pooling, in which the maximum value within a specified window (e.g., 2×2) is selected and carried forward to the next layer. This operation downsamples the feature maps while retaining the most salient features.

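Mathematically, max pooling can be expressed as:

$$P(i, j) = \max_{(m, n) \in R} I(s \cdot i + m,\ s \cdot j + n)$$

where:

- $P$ is the output of the pooling operation
- $R$ is the set of offsets in the pooling region (e.g., a 2×2 window)
- $s$ is the stride (commonly equal to the window size, e.g., 2)
- $i$ and $j$ are the indices of the output feature map
- $m$ and $n$ are the indices within the pooling region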
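Here is a minimal sketch of max pooling on a single depth slice. It assumes no padding and a configurable window size and stride, and reads input positions `stride * i + m` and `stride * j + n` for output position `(i, j)`. With the defaults (2×2 windows, stride 2), a 4×4 map shrinks to 2×2. The feature-map values are hypothetical.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Max pooling on one 2D feature map (one depth slice), no padding:
    P[i][j] = max of feature_map[stride*i + m][stride*j + n] for 0 <= m, n < size."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            max(feature_map[stride * i + m][stride * j + n]
                for m in range(size) for n in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


fmap = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 3, 2],
    [2, 6, 1, 1],
]
print(max_pool2d(fmap))  # [[4, 5], [6, 3]]
```

Each output value is the largest of the four values in its non-overlapping 2×2 window. The spatial size halves in each dimension.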

Types of Pooling

Max Pooling and Average Pooling are two types of pooling layers used in convolutional neural networks. Max Pooling selects the maximum value from each window of the feature map, while Average Pooling computes the average of all the elements in the region of the feature map covered by the pooling window.

The purpose of pooling layers is to reduce the dimensionality of the feature maps produced by the convolutional layers. Max Pooling keeps the most dominant feature in each window, while Average Pooling averages the features in each window. Both make the model more efficient and can help reduce overfitting.
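To see the difference between the two operations, the sketch below applies both to the same hypothetical feature map. It assumes non-overlapping 2×2 windows with no padding. The `mode` parameter is an illustrative name, not a library API. The top-left window contains one strong activation surrounded by zeros.

```python
def _mean(values):
    """Arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Max or average pooling over one 2D feature map (no padding)."""
    if mode == "max":
        reduce_window = max
    elif mode == "avg":
        reduce_window = _mean
    else:
        raise ValueError(f"unknown pooling mode: {mode}")
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            reduce_window([feature_map[stride * i + m][stride * j + n]
                           for m in range(size) for n in range(size)])
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


fmap = [
    [0, 0, 1, 1],
    [9, 0, 1, 1],
    [0, 0, 2, 2],
    [0, 0, 2, 2],
]
print(pool2d(fmap, mode="max"))  # [[9, 1], [0, 2]]
print(pool2d(fmap, mode="avg"))  # [[2.25, 1.0], [0.0, 2.0]]
```

Max pooling passes the strong activation (9) through unchanged, while average pooling dilutes it to 2.25. On uniform windows the two operations agree.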

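NOTE: Max Pooling is used far more often than Average Pooling between convolutional layers and often performs better in that role, but it is not universally superior; many architectures, such as ResNet, use global average pooling before the final classifier.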

Normalization Layer

Normalization layers normalize the outputs of the preceding layer. They are typically placed between the convolutional and pooling layers, which helps each layer learn more independently of the others and can reduce the risk of overfitting.
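The section does not commit to a particular normalization method. One common choice in CNNs is batch normalization, and the sketch below shows its training-time computation for a single channel. It assumes the statistics are taken over the current batch and omits the running averages used at inference time. `gamma` and `beta` stand in for the learnable scale and shift parameters. The activation values are hypothetical.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Batch normalization (training mode) for one channel: shift to zero mean,
    scale to unit variance, then apply the learnable scale gamma and shift beta.
    eps guards against division by zero when the variance is tiny."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


activations = [2.0, 4.0, 6.0, 8.0]  # one channel's activations across a batch
normalized = batch_norm(activations)
print([round(v, 3) for v in normalized])  # [-1.342, -0.447, 0.447, 1.342]
```

Whatever the scale of the incoming activations, the next layer receives values with roughly zero mean and unit variance. `gamma` and `beta` let the network undo the normalization if that turns out to be useful.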

It is worth noting that, while normalization layers are useful, they are not always necessary in modern architectures, since in some cases they contribute little to effective training.

After several convolutional and pooling layers, the feature maps are flattened into a one-dimensional vector and fed into one or more fully connected layers, similar to the hidden layers of a traditional multilayer perceptron.

The fully connected layers combine the features learned by the earlier layers and perform high-level reasoning to produce the final output, such as classification scores or regression values.
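Here is a minimal sketch of this final stage. A small stack of pooled feature maps is flattened, passed through one fully connected layer to produce a raw score per class, and converted to probabilities with softmax. The feature values, the three-class setup, and the weights are hypothetical. Softmax is assumed as the output activation because it is the usual choice for classification.

```python
import math


def flatten(feature_maps):
    """Flatten an H x W x C nested list into a 1D vector (row-major order)."""
    return [v for row in feature_maps for pixel in row for v in pixel]


def fully_connected(x, weights, biases):
    """Compute z[j] = sum_i x[i] * weights[j][i] + biases[j] for each output unit j."""
    return [sum(xi * wi for xi, wi in zip(x, w_row)) + b for w_row, b in zip(weights, biases)]


def softmax(z):
    """Turn raw scores into probabilities; subtracting max(z) avoids overflow in exp."""
    z_max = max(z)
    exps = [math.exp(v - z_max) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


# A hypothetical 2 x 2 x 1 stack of pooled feature maps
pooled = [[[1.0], [0.0]], [[0.5], [2.0]]]
x = flatten(pooled)  # [1.0, 0.0, 0.5, 2.0]

# Hypothetical weights for 3 classes (one weight row per class)
weights = [[1.0, 0.0, 0.0, 0.0],
           [0.0, 0.0, 0.0, 1.0],
           [0.0, 1.0, 1.0, 0.0]]
biases = [0.0, 0.0, 0.0]

scores = fully_connected(x, weights, biases)  # [1.0, 2.0, 0.5]
probs = softmax(scores)
print(scores, [round(p, 3) for p in probs])
```

The class with the highest raw score (index 1) also receives the highest probability, and the probabilities sum to 1.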

CNNs are typically trained with supervised learning, in which the network is given labeled training data (e.g., images and their corresponding labels or annotations). The training process involves the following steps:

1. Forward Propagation: The input data is fed into the network, and the activations are propagated through the layers to produce an output prediction.

2. Loss Computation: The output prediction is compared with the true labels or target values, and a loss function (e.g., cross-entropy loss for classification or mean squared error for regression) is computed to measure the discrepancy between the predicted and true values.

3. Backpropagation: The gradients of the loss function with respect to the network's weights and biases are computed using the chain rule of calculus. This process, called backpropagation, tells the network in which direction to adjust its parameters to decrease the loss.

4. Parameter Update: The network's weights and biases are updated using an optimization algorithm, such as stochastic gradient descent (SGD) or one of its variants (e.g., Adam, RMSProp), to minimize the loss function.

5. Iteration: Steps 1–4 are repeated for multiple epochs (full passes through the training data) until the network converges to a satisfactory solution or a stopping criterion is met.
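The training loop can be shown on the smallest possible "network": a single sigmoid neuron trained on a hypothetical, linearly separable toy dataset. This is a sketch of the loop structure rather than a CNN. It uses plain full-batch gradient descent instead of SGD or Adam, binary cross-entropy as the loss, and a hand-derived gradient (for sigmoid plus cross-entropy, dLoss/dz = p - y) in place of automatic backpropagation. The dataset, learning rate, and epoch count are illustrative assumptions.

```python
import math

# Hypothetical toy dataset: label is 1 when x1 + x2 > 1.5, else 0
data = [((0.0, 0.0), 0), ((1.0, 0.0), 0), ((0.0, 1.0), 0),
        ((1.0, 1.0), 1), ((2.0, 1.0), 1), ((1.0, 2.0), 1)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


w = [0.0, 0.0]  # weights
b = 0.0         # bias
lr = 1.0        # learning rate for plain (full-batch) gradient descent
losses = []

for epoch in range(2000):  # Iteration: repeat for many epochs
    total_loss = 0.0
    grad_w = [0.0, 0.0]
    grad_b = 0.0
    for (x1, x2), y in data:
        # Forward propagation: compute the prediction
        p = sigmoid(w[0] * x1 + w[1] * x2 + b)
        # Loss computation: binary cross-entropy
        total_loss += -math.log(p) if y == 1 else -math.log(1.0 - p)
        # Backpropagation: by the chain rule, dLoss/dz = p - y for sigmoid + cross-entropy
        dz = p - y
        grad_w[0] += dz * x1
        grad_w[1] += dz * x2
        grad_b += dz
    n = len(data)
    # Parameter update: step against the averaged gradient
    w = [w[0] - lr * grad_w[0] / n, w[1] - lr * grad_w[1] / n]
    b -= lr * grad_b / n
    losses.append(total_loss / n)

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

In a real CNN, a framework computes the gradients automatically and the loop usually runs over mini-batches, but the forward, loss, backward, update, and repeat structure stays the same.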

Over the years, many CNN architectures have been proposed and successfully applied to diverse computer vision tasks. Some notable architectures include:

- LeNet: One of the earliest successful CNN architectures, introduced by Yann LeCun et al. in 1998 for handwritten digit recognition.

- AlexNet: Introduced by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton in 2012, AlexNet achieved groundbreaking results in the ImageNet classification challenge and sparked the deep learning revolution in computer vision.

- VGGNet: Developed by the Visual Geometry Group at the University of Oxford, VGGNet showed that deeper networks built from stacks of small (3×3) filters could substantially improve accuracy.

- ResNet: Introduced by Kaiming He et al. in 2015, ResNet introduced skip (residual) connections, which ease optimization problems such as vanishing gradients and make much deeper networks trainable.

- Inception (also known as GoogLeNet): Developed by Christian Szegedy et al. at Google, the Inception architecture introduced inception modules that apply convolutions with several filter sizes in parallel, efficiently capturing features at multiple scales.

- DenseNet: Proposed by Gao Huang et al. in 2017, DenseNet builds on ResNet's idea with dense connections, in which each layer receives the feature maps of all preceding layers in its block, enabling efficient feature propagation and reuse.
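The key difference between ResNet's skip connections and DenseNet's dense connections is how a layer's output is combined with its input. ResNet adds them element-wise. DenseNet concatenates them, so the feature vector grows by a fixed "growth rate" per layer. The sketch below illustrates only that combination step. The `transform` function is a hypothetical stand-in for a learned convolutional layer, and plain lists play the role of channel vectors.

```python
def transform(x, out_size):
    """Hypothetical stand-in for a learned layer F: out_size copies of ReLU(sum(x))."""
    s = max(0.0, sum(x))
    return [s] * out_size


def residual_block(x):
    """ResNet-style skip connection: output = F(x) + x, added element-wise (shape preserved)."""
    return [fx + xi for fx, xi in zip(transform(x, len(x)), x)]


def dense_block(x, num_layers=2, growth_rate=1):
    """DenseNet-style connectivity: each layer sees the concatenation of all earlier
    features and appends growth_rate new features to it."""
    features = list(x)
    for _ in range(num_layers):
        features = features + transform(features, growth_rate)
    return features


x = [1.0, -2.0, 3.0]
print(residual_block(x))  # [3.0, 0.0, 5.0]: same length as x
print(dense_block(x))     # [1.0, -2.0, 3.0, 2.0, 4.0]: grows by growth_rate per layer
```

Because the residual path adds `x` back unchanged, gradients have a direct route to earlier layers. The dense path instead keeps every earlier feature available to every later layer.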

CNNs have been successfully applied to many computer vision tasks, including:

- Image Classification: Assigning a class label (e.g., "cat," "dog," "car") to an input image.

- Object Detection: Locating and classifying multiple objects within an image by drawing bounding boxes around them.

- Semantic Segmentation: Assigning a class label to each pixel in an image, effectively segmenting the image into different regions or objects.

- Image Captioning: Generating natural language descriptions of images.

- Image Denoising: Removing noise from images while preserving important details.

- Super-Resolution: Upscaling low-resolution images to higher resolutions.

CNNs have also found applications beyond computer vision, such as natural language processing (e.g., text classification, sentiment analysis), speech recognition, and bioinformatics.

Convolutional Neural Networks have revolutionized the field of computer vision and continue to push the boundaries of what is possible with deep learning. By exploiting the grid-like structure of data such as images, CNNs can learn rich, hierarchical representations and achieve impressive performance on a wide range of tasks.

As research in deep learning progresses, we can expect further advances in CNN architectures, training techniques, and applications, enabling even more powerful and versatile models for solving complex real-world problems.

```python
import tensorflow as tf


# Define the CNN model
class CNN(tf.keras.Model):
    def __init__(self, num_classes):
        super(CNN, self).__init__()

        # Define the convolutional layers
        self.conv1 = tf.keras.layers.Conv2D(32, kernel_size=(3, 3), activation='relu')
        self.conv2 = tf.keras.layers.Conv2D(64, kernel_size=(3, 3), activation='relu')

        # Define the pooling layer (it has no weights, so one instance is reused twice)
        self.pooling = tf.keras.layers.MaxPooling2D(pool_size=(2, 2))

        # Define the fully connected layers
        self.flatten = tf.keras.layers.Flatten()
        self.fc1 = tf.keras.layers.Dense(128, activation='relu')
        self.fc2 = tf.keras.layers.Dense(num_classes, activation='softmax')

    def call(self, x):
        # Forward pass through the layers
        x = self.conv1(x)
        x = self.pooling(x)
        x = self.conv2(x)
        x = self.pooling(x)
        x = self.flatten(x)
        x = self.fc1(x)
        return self.fc2(x)


# Create an instance of the model
model = CNN(num_classes=10)

# Compile the model (integer class labels, so sparse categorical cross-entropy)
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

# A subclassed model has no weights until it is built, so build it for
# 28x28 grayscale inputs (batch size left unspecified) before printing the summary
model.build(input_shape=(None, 28, 28, 1))

# Print the model summary
model.summary()
```
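To sanity-check the TensorFlow model defined above without running TensorFlow, this plain-Python sketch traces the feature-map sizes and trainable parameter counts layer by layer. It assumes 28×28×1 inputs and the Keras defaults the model relies on: stride 1 with "valid" (no) padding for `Conv2D`, and a stride equal to the pool size with "valid" padding for `MaxPooling2D`. The helper names `conv_out` and `pool_out` are hypothetical. Under these assumptions, the total should match the trainable-parameter count reported by the model summary.

```python
def conv_out(size, kernel, stride=1):
    """Output size of an unpadded ('valid') convolution; stride 1 is the Conv2D default."""
    return (size - kernel) // stride + 1


def pool_out(size, pool, stride=None):
    """Output size of 'valid' pooling; MaxPooling2D's stride defaults to the pool size."""
    stride = stride or pool
    return (size - pool) // stride + 1


size, channels, params = 28, 1, 0

size = conv_out(size, 3)                # conv1 -> 26 x 26 x 32
params += 3 * 3 * channels * 32 + 32    # 3x3 kernel per input channel per filter, plus biases
channels = 32
size = pool_out(size, 2)                # pooling -> 13 x 13 x 32
size = conv_out(size, 3)                # conv2 -> 11 x 11 x 64
params += 3 * 3 * channels * 64 + 64
channels = 64
size = pool_out(size, 2)                # pooling -> 5 x 5 x 64 (leftover row/column dropped)
flat = size * size * channels           # flatten -> 1600 values
params += flat * 128 + 128              # fc1 weights + biases
params += 128 * 10 + 10                 # fc2 weights + biases (10 classes)

print(flat, params)  # 1600 225034
```

Most of the parameters sit in `fc1`, because every one of the 1,600 flattened values connects to each of its 128 units. The pooling and flatten layers contribute no parameters.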