Gradio is a Python library that lets you build user-friendly interfaces for your machine learning models in just minutes. Imagine: with a few lines of code, you can turn complex algorithms into interactive playgrounds that anyone with a web browser can use. Let's walk through the steps to create your first Gradio app and bring machine learning visualization to life!

1. Import the essential tools:

Like any intrepid explorer, we need to gather our supplies. In our case, those supplies are Python libraries! The snippet below imports everything we need, including Gradio (`gr`) for building the interface, and libraries for image processing and deep learning such as PIL and torch.

```python
import PIL.Image
import torch
import numpy as np
import gradio as gr
from torchvision import transforms
from accelerate import Accelerator

from generator_best import DualNet  # replace with your own task-specific modules
from utils import visualize_lab_tensor  # replace with your own task-specific modules
```

2. Define a model-loading function:

Think of this function as your personal treasure map, guiding you to the specific machine learning model you want to use. The `load_model` function takes two arguments: the model name (such as "UUColor-best-2023-09-17-21-57") and the device it should run on (CPU or GPU). Based on the model name, it loads the corresponding weights from your local storage and returns the pre-trained model.

```python
# Define a function to load the selected model
def load_model(model_name: str, device: torch.device):
    # Based on model_name, load the matching model from your local storage
    if model_name == "UUColor-best-2023-09-17-21-57":  # Replace with your own model name
        model = DualNet(rgb_in_ch=1, rgb_out_ch=3, lab_in_ch=3, lab_out_ch=2)  # Initialize your own model
        model.load_state_dict(
            torch.load("outputs/2023-09-17-21-57/models/net_G_0074.pt",
                       map_location=device))  # Load your local model weights

    elif model_name == "TSColor-best-2023-08-20-13-23":  # Replace with your own model name
        model = DualNet(rgb_in_ch=1, rgb_out_ch=3, lab_in_ch=3, lab_out_ch=2)  # Initialize your own model
        model.load_state_dict(
            torch.load("outputs/2023-08-20-13-23/models/net_G_0089.pt",
                       map_location=device))  # Load your local model weights

    else:
        raise ValueError(f"Invalid model name: {model_name}")

    return model
```
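Because both branches of `load_model` differ only in the checkpoint path, one option is to keep the name-to-checkpoint mapping in a dictionary. The sketch below uses hypothetical names (`CHECKPOINTS` and `checkpoint_for` are illustrative, not part of the original code) and only resolves the path; the paths mirror the ones used above and are assumptions about your local layout.

```python
from pathlib import Path

# Hypothetical registry: model name -> checkpoint path on local disk
CHECKPOINTS: dict[str, Path] = {
    "UUColor-best-2023-09-17-21-57": Path("outputs/2023-09-17-21-57/models/net_G_0074.pt"),
    "TSColor-best-2023-08-20-13-23": Path("outputs/2023-08-20-13-23/models/net_G_0089.pt"),
}


def checkpoint_for(model_name: str) -> Path:
    """Return the checkpoint path for model_name, raising ValueError for unknown names."""
    try:
        return CHECKPOINTS[model_name]
    except KeyError:
        raise ValueError(f"Invalid model name: {model_name}") from None
```

With a registry like this, the `if`/`elif` chain in `load_model` could collapse into a single `torch.load(checkpoint_for(model_name), map_location=device)` call, and adding a model becomes a one-line change.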

3. The heart of the app: processing the image

The `process_image` function is the engine that powers your Gradio app. It takes several arguments: the uploaded image, the selected model, the desired output size, the resolution, and a seed value for randomness. Here is a breakdown of its key steps:

- Setting the seed: It sets the random seeds so that calling the function repeatedly with the same inputs gives consistent results.

- Accelerator (optional): It initializes an `Accelerator` object, which handles device placement so computations run efficiently on hardware such as GPUs.

- Pre-processing (optional): The function converts the uploaded image to grayscale, resizes it to the chosen resolution, and turns it into a normalized tensor.

- Loading your model: The `load_model` function is called to retrieve the selected pre-trained model.

- Forward pass: The preprocessed image is fed into the pre-trained model, which produces the output.

- Post-processing (optional): The output is converted back to a PIL image, resized if necessary, and then combined with the original image if you have written your own function for that (e.g. `apply_color`).
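The seeding step is what makes the "Seed" control meaningful: the same seed yields the same random numbers, so the same image, model, and seed typically reproduce the same result. A minimal NumPy sketch of the idea, using a hypothetical `make_noise` helper that is not part of the original app; `torch.manual_seed` followed by `torch.randn` behaves analogously for PyTorch tensors.

```python
import numpy as np


def make_noise(shape: tuple[int, ...], seed: int) -> np.ndarray:
    """Hypothetical helper: draw standard-normal noise from a generator seeded with `seed`."""
    rng = np.random.default_rng(seed)  # local generator; global NumPy state is left untouched
    return rng.standard_normal(shape)


a = make_noise((1, 1, 4, 4), seed=123)
b = make_noise((1, 1, 4, 4), seed=123)
c = make_noise((1, 1, 4, 4), seed=124)
```

Here `a` and `b` are identical while `c` differs. Using a local generator instead of the global `np.random.seed` can matter in a Gradio server, where several requests may run in the same process.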

```python
# Define a function to process the image with the loaded model
def process_image(image: PIL.Image.Image,
                  selected_model: str,
                  output_size: str,
                  resolution: int,
                  seed: int) -> PIL.Image.Image:
    # Gradio sliders may deliver floats; PIL and NumPy need integers
    resolution, seed = int(resolution), int(seed)

    # Seed
    torch.manual_seed(seed)
    torch.cuda.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)
    np.random.seed(seed)

    # Accelerator setting
    accelerator = Accelerator()
    device = accelerator.device

    # Pre-processing (optional)
    image = image.convert("RGB")
    original_size = image.size
    gray = image.convert("L").resize((resolution, resolution))
    gray = transforms.ToTensor()(gray).unsqueeze(0)  # shape (1, 1, H, W)

    # `noise` was never defined in the original code. A seeded tensor shaped
    # like `gray` is an assumption here; adapt it to what your model expects.
    noise = torch.randn_like(gray)

    # Load the model using the load_model function
    model = load_model(selected_model, device)

    # Prepare the model with the accelerator (moves it to `device`), then move the inputs too
    model = accelerator.prepare(model)
    gray, noise = gray.to(device), noise.to(device)

    # Set the model to evaluation mode
    model.eval()

    # Forward pass
    with torch.no_grad():
        # Pass the gray tensor into DualNet
        ab_tensor = model(gray, noise)

    # Concatenate the ground-truth "L" channel with the predicted "ab" channels
    predict_tensor = torch.cat((gray, ab_tensor), dim=1)
    converted_predict_tensor = visualize_lab_tensor(predict_tensor, device)
    converted_predict_tensor = converted_predict_tensor.squeeze(0).cpu()
    converted_predict_tensor = (converted_predict_tensor + 1) / 2
    result_image = transforms.ToPILImage()(converted_predict_tensor)

    # Post-processing (optional)
    result_image = result_image.resize(original_size)
    result_image = apply_color(image, result_image)
    if output_size != "Original":
        result_image = result_image.resize((resolution, resolution))

    return result_image
```
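The pre-processing step turns the upload into the `(batch, channel, height, width)` layout that convolutional models usually expect. As a dependency-light sketch, using NumPy in place of `torchvision.transforms.ToTensor` and a hypothetical `to_model_input` helper, the conversion looks roughly like this; `ToTensor` likewise scales 8-bit pixels from [0, 255] to [0.0, 1.0] and puts channels first.

```python
import numpy as np
from PIL import Image


def to_model_input(image: Image.Image, resolution: int) -> np.ndarray:
    """Hypothetical helper mirroring the pre-processing above:
    grayscale -> square resize -> [0, 1] floats -> shape (1, 1, H, W)."""
    gray = image.convert("L").resize((resolution, resolution))
    array = np.asarray(gray, dtype=np.float32) / 255.0  # (H, W), values in [0, 1]
    return array[np.newaxis, np.newaxis, :, :]          # add batch and channel axes


# A synthetic 300x200 RGB image stands in for an upload
upload = Image.new("RGB", (300, 200), color=(200, 120, 40))
x = to_model_input(upload, 256)
```

The batch axis is why the code above can concatenate along `dim=1` and later call `squeeze(0)`.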

4. Create your own function (optional):

For example, the `apply_color` function takes two images: the original (typically grayscale) input image and the colorized result image. It combines the luminance (L) channel of the input image with the color (a and b) channels of the result image, producing a colorized version of the input image.

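```python
from PIL import ImageCms

# Image.convert() is not documented to support RGB <-> LAB, so ImageCms transforms are used
_SRGB = ImageCms.createProfile("sRGB")
_LAB = ImageCms.createProfile("LAB")
_RGB_TO_LAB = ImageCms.buildTransformFromOpenProfiles(_SRGB, _LAB, "RGB", "LAB")
_LAB_TO_RGB = ImageCms.buildTransformFromOpenProfiles(_LAB, _SRGB, "LAB", "RGB")


def apply_color(image: PIL.Image.Image, color_map: PIL.Image.Image) -> PIL.Image.Image:
    # Convert input images to the LAB color space
    image_lab = ImageCms.applyTransform(image.convert("RGB"), _RGB_TO_LAB)
    color_map_lab = ImageCms.applyTransform(color_map.convert("RGB"), _RGB_TO_LAB)

    # Split LAB channels
    l, a, b = image_lab.split()
    _, a_map, b_map = color_map_lab.split()

    # Merge the input's L channel with the color map's a/b channels
    merged_lab = PIL.Image.merge("LAB", (l, a_map, b_map))

    # Convert the merged LAB image back to the RGB color space
    return ImageCms.applyTransform(merged_lab, _LAB_TO_RGB)
```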
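If you would rather avoid color-management profiles, the same "keep the original brightness, borrow the predicted color" idea can be approximated with Pillow's built-in YCbCr mode, where Y carries luminance and Cb/Cr carry color. This is a swapped-in technique, not the author's LAB-based method, and `apply_color_ycbcr` is a hypothetical name; YCbCr is not perceptually uniform like LAB, so results may differ slightly.

```python
from PIL import Image


def apply_color_ycbcr(image: Image.Image, color_map: Image.Image) -> Image.Image:
    """Keep the luminance (Y) of `image` and take the chroma (Cb, Cr) from `color_map`."""
    # Both images must share a size before their bands can be merged
    color_map = color_map.resize(image.size)
    y, _, _ = image.convert("RGB").convert("YCbCr").split()
    _, cb, cr = color_map.convert("RGB").convert("YCbCr").split()
    return Image.merge("YCbCr", (y, cb, cr)).convert("RGB")


gray_input = Image.new("RGB", (64, 64), color=(128, 128, 128))  # stand-in grayscale photo
prediction = Image.new("RGB", (64, 64), color=(150, 100, 90))   # stand-in colorized output
result = apply_color_ycbcr(gray_input, prediction)
```

The result keeps the gray input's brightness while picking up the reddish tint of the prediction.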

5. Building the Gradio interface:

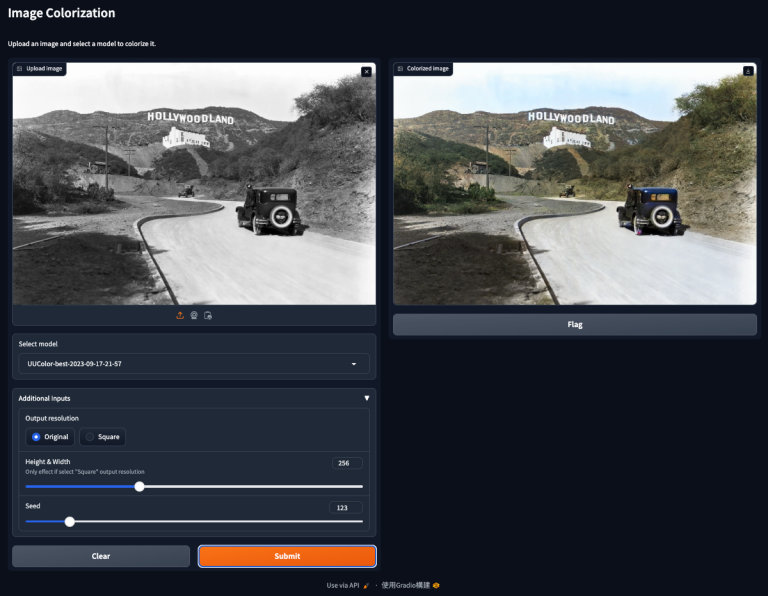

The `create_interface` function builds the visual interface that users interact with. It defines several key components:

- Image upload: Lets users select an image from their local machine for colorization.

- Model selection: A dropdown menu lets users choose between different pre-trained models, each potentially producing a distinct colorization style.

- Result image display: Shows the final output generated by the model.

- Additional controls: Give users options to customize the output, such as choosing the final image resolution (original or square) and adjusting the image size. They can also set a random seed value to see how slight variations affect the results.
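The two sizing controls interact: the slider always sets the resolution the model works at, but it only determines the final size when "Square" is chosen; otherwise the result is resized back to the upload's dimensions. That post-processing rule can be isolated in a small pure function; `final_size` is a hypothetical helper written for illustration, not part of the original code.

```python
def final_size(output_size: str, resolution: int,
               original_size: tuple[int, int]) -> tuple[int, int]:
    """Return the (width, height) of the image that process_image hands back.

    "Original" restores the upload's size; any other choice (the "Square"
    option) yields a resolution x resolution image.
    """
    if output_size == "Original":
        return original_size
    return (int(resolution), int(resolution))
```

Keeping the rule in a plain function makes it easy to unit-test without launching Gradio or loading a model.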

```python
# Create the Gradio interface
def create_interface():
    model_dict = {
        "UUColor-best": "UUColor-best-2023-09-17-21-57",
        "TSColor-best": "TSColor-best-2023-08-20-13-23",
    }

    interface = gr.Interface(
        fn=process_image,
        inputs=[
            # Image upload
            gr.Image(label="Upload image",
                     value="example/legacy_images/Migrant-Mother.jpg",
                     type="pil"),
            # Model selection
            gr.Dropdown(choices=list(model_dict.values()),
                        value=model_dict["UUColor-best"],
                        label="Select model"),
        ],
        outputs=[
            # Result image display
            gr.Image(label="Colorized image", format="jpg"),
        ],
        additional_inputs=[
            # Additional controls
            gr.Radio(choices=["Original", "Square"], value="Original",
                     label="Output resolution"),
            gr.Slider(minimum=128, maximum=512, value=256, step=128,
                      label="Height & Width",
                      info='Only affects the output size when "Square" output resolution is selected'),
            gr.Slider(minimum=0, maximum=1000, value=123, step=1, label="Seed"),
        ],
        title="Image Colorization",
        description="Upload an image and select a model to colorize it.",
    )

    return interface
```

6. Launching the Gradio app:

The `main` function ties everything together. It calls `create_interface` to build the Gradio interface and then uses the `launch` method to bring it to life. When you run this script, Gradio starts a local web server and prints its URL; open that URL in your browser to see your very own Gradio app, ready to process images with different models at your command!

```python
def main():
    # Launch the Gradio interface
    interface = create_interface()
    interface.launch()


if __name__ == "__main__":
    main()
```

```
$ python gradio_ui.py
Running on local URL:  http://127.0.0.1:7860

To create a public link, set `share=True` in `launch()`.
```

This is just a taste of what's possible with Gradio. With a bit of creativity, you can craft informative and engaging interfaces for all kinds of machine learning models. So experiment, explore, and use Gradio to share your machine learning creations with the world!