Intro

Having played tennis since I was a child, I looked for a tennis dataset so I could use my knowledge of the game to draw insightful conclusions. I found a dataset consisting of various statistics from a professional tennis match between two players considered among the greatest of all time: Novak Djokovic and Rafael Nadal. Some features it contains are the server, the returner, the winner of the point, identification of first or second serve, and the point duration. Two attributes that stood out to me were the point duration and the identification of the first or second serve. I believed there was a correlation between these two attributes.

Goal

Second serves are often easier to return than first serves because the server isn’t hitting the ball as fast. Therefore, it’s harder for the server to gain an offensive position and end the point quickly. Any advantage the serve provides at the start is diminished, and the point lasts longer.

The Goal: To prove there’s a correlation between the type of serve and the point duration.

Expected Results: I can test whether a correlation exists between these two variables by building a model that uses the point duration to classify the serve as first or second. When assessing results, a very high accuracy of 90% or higher would be concerning, because point duration alone isn’t enough information for the model to classify the type of serve almost perfectly. The point duration should allow the model to infer but not conclude the type of serve, so the accuracy should be high but not too high. I hoped to end with an accuracy in the 70–80% range.

Setup

Because the outcome of the model is binary (first or second serve), I decided to use logistic regression. Logistic regression outputs a probability between 0 and 1 and applies a decision boundary to that probability to produce a binary classification. This way, it can classify each point as a first or second serve based on the probability it predicts.
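To make the idea of a probability plus a decision boundary concrete, here is a minimal sketch. The `weight` and `bias` values are made up for illustration (a fitted model would learn them from the data), and the 0.5 threshold is an assumed default.

```python
import math


def sigmoid(z: float) -> float:
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def classify(duration: float, weight: float, bias: float, threshold: float = 0.5) -> str:
    # weight and bias are hypothetical coefficients, not values from my model.
    p_second = sigmoid(weight * duration + bias)
    # The decision boundary: at or above the threshold, call it a second serve.
    return "second" if p_second >= threshold else "first"


print(classify(10.0, weight=0.2, bias=-1.0))
print(classify(2.0, weight=0.2, bias=-1.0))
```

With these made-up coefficients, longer points are pushed toward the second-serve side of the boundary, which is the relationship I expected to find.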

To get the data ready for the model, I first used the isna() function and confirmed the data didn’t contain any null values. However, there were two changes I needed to make to the dataset, both concerning the “totaltime” feature. First, I had to convert its data type from ‘object’ to ‘float’, as “totaltime” was initially stored as ‘object’. Second, I had to remove all of the negative values in “totaltime”, as negative durations aren’t possible.
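These cleaning steps could look roughly like the sketch below. The small DataFrame is a toy stand-in rather than the real match data, and I am assuming the durations were stored as strings and that serves are encoded as 1 and 2.

```python
import pandas as pd

# Toy stand-in for the match data (values invented for illustration).
df = pd.DataFrame({
    "serve": [1, 2, 1, 1, 2],                             # assumed encoding: 1 = first, 2 = second
    "totaltime": ["4.2", "11.0", "-1.0", "6.5", "9.3"],   # stored as text, not as numbers
})

# Count missing values in each column.
null_counts = df.isna().sum()
print(null_counts)

# Convert "totaltime" to float.
df["totaltime"] = df["totaltime"].astype(float)

# Keep only the rows with a non-negative duration.
df = df[df["totaltime"] >= 0].reset_index(drop=True)
print(df)
```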

The Process

Now that the data was ready for training, I began building the model.

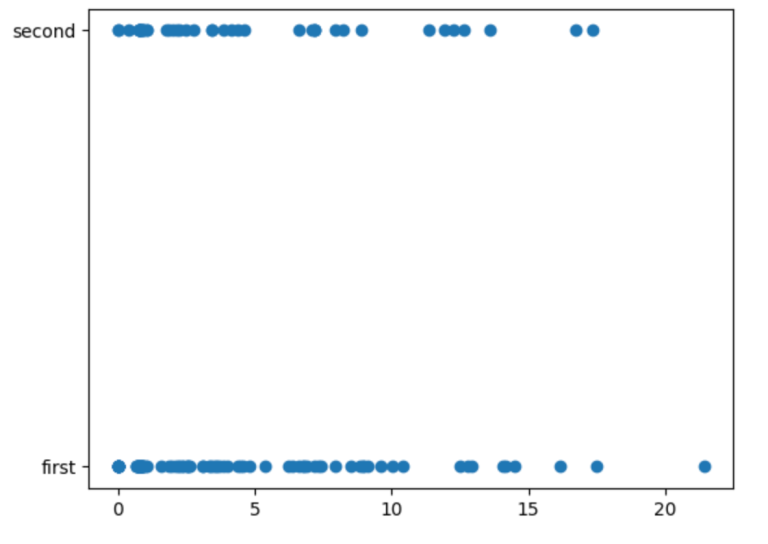

First, I needed to select my features and labels. Since I was examining how the duration of the point correlates with the type of serve, I selected “totaltime” as my feature (x-value) and “serve” as my label (y-value).

I split the data into 80% training data and 20% testing data, a common split for ML models.

Next, I created the logistic regression model, fitted it to the training data, and created a prediction variable that uses the x_test data to predict the y_test labels.
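For readers who want to follow along, the steps above can be sketched with scikit-learn as follows. The data here is randomly generated as a stand-in for the match data, and the `random_state` values and the 1/2 serve encoding are my assumptions for the example.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in data (not the real match).
rng = np.random.default_rng(0)
df = pd.DataFrame({
    "totaltime": rng.uniform(1.0, 30.0, size=100),
    "serve": rng.choice([1, 2], size=100, p=[0.7, 0.3]),  # assumed: 1 = first, 2 = second
})

# Feature (x-value) and label (y-value).
X = df[["totaltime"]]  # double brackets keep X two-dimensional, as scikit-learn expects
y = df["serve"]

# 80% training data, 20% testing data.
x_train, x_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Create the model, fit it on the training data, and predict the test labels.
model = LogisticRegression()
model.fit(x_train, y_train)
y_pred = model.predict(x_test)
```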

Results

After creating and training the model, I evaluated its accuracy by comparing the model’s y-value predictions to the actual y_test data.

The accuracy was roughly 79%, meaning the model correctly classified 79% of the labels.

To get a more visual and in-depth view of the results, I also created a confusion matrix, which displays the true positives, false positives, true negatives, and false negatives.

What does this confusion matrix tell us?

- 23 first serves were labeled as first serves

- 5 second serves were labeled as first serves

- 1 first serve was labeled as a second serve

- 0 second serves were labeled as second serves
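As a sanity check, the four counts above can be turned back into label lists and passed through scikit-learn's metrics. This is a sketch that rebuilds the numbers rather than my original evaluation code, and the encoding (1 = first serve, 2 = second serve) is an assumption.

```python
from sklearn.metrics import accuracy_score, confusion_matrix

# Rebuild the labels from the four counts (assumed encoding: 1 = first, 2 = second).
y_true = [1] * 23 + [2] * 5 + [1] * 1
y_pred = [1] * 23 + [1] * 5 + [2] * 1

# Rows are the actual serve type, columns are the predicted serve type.
cm = confusion_matrix(y_true, y_pred, labels=[1, 2])
acc = accuracy_score(y_true, y_pred)

print(cm)
print(round(acc, 3))  # 23 correct out of 29, about 0.793
```

The 23 correct predictions out of 29 match the roughly 79% accuracy reported earlier.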

Conclusion

I reached my goal of an accuracy in the 70–80% range. However, after taking a closer look at the confusion matrix, I realized there might be flaws that influenced the model’s accuracy.

1. Proportion of first to second serves

When inspecting the confusion matrix, one statistic stood out to me: 24 out of 29 serves were first serves. Why was the model classifying the vast majority of serves as first serves? Looking back at the dataset, I realized that 69% of the serves in the “serve” column were first serves.

If the majority of serves in the dataset are first serves, the model gains an unfair advantage. Purely by guessing first serve every time, the model can reach 69% accuracy. Furthermore, the data selected for testing happened to have an even higher proportion of first serves than the full dataset, with 24 out of 29 serves being first serves. The model classified 28 out of 29 serves as first serves, showing it did use this imbalance to its advantage. To prevent the model from gaining this advantage, the “serve” column should have roughly half its rows labeled as first serves and the other half as second serves. To balance the proportions, I used df.drop() to drop rows labeled as first serves until the numbers of first and second serves were equal.
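Both ideas in this section, the accuracy available from always guessing the majority class and the balancing step with df.drop(), can be sketched on a toy DataFrame. The values are invented, the 1/2 encoding is assumed, and picking the rows to drop at random is one possible choice for the illustration.

```python
import pandas as pd

# Toy data with a 70/30 imbalance (assumed encoding: 1 = first, 2 = second).
df = pd.DataFrame({
    "serve": [1] * 7 + [2] * 3,
    "totaltime": [3.1, 4.0, 5.2, 2.8, 6.1, 3.9, 4.4, 9.0, 7.5, 8.2],
})

counts = df["serve"].value_counts()

# Accuracy a model would get by always guessing the majority class.
baseline = counts.max() / counts.sum()
print(baseline)

# Drop surplus first-serve rows until both classes have the same count.
surplus = int(counts.loc[1] - counts.loc[2])
drop_idx = df[df["serve"] == 1].sample(n=surplus, random_state=0).index
balanced = df.drop(index=drop_idx)
print(balanced["serve"].value_counts())
```

After balancing, always guessing one class can only reach 50% accuracy, so any score above that has to come from the point duration itself.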

I evaluated the model again using the accuracy metric and a confusion matrix.

9 out of 18 serves were incorrectly classified (50% accuracy), no better than guessing, indicating that a correlation between point duration and type of serve did not exist in this match.

I also plotted the logistic regression curve, which showed the same pattern in point duration regardless of whether the serve was first or second.

2. Small Dataset

Based on the graph above, the correlation I predicted did not exist in this match. However, this doesn’t guarantee that the correlation doesn’t exist in tennis. The dataset was very small to begin with, consisting of only one tennis match. I also had to shrink the dataset by dropping rows to balance the proportions of the “serve” column. The amount of data I used was far too small for the graph to accurately represent general trends in how tennis is played. Therefore, I couldn’t determine whether the correlation between the type of serve and point duration simply doesn’t exist in tennis or the dataset was too small to capture it. With a bigger dataset covering more matches, the result would be more reliable and could turn out differently, because the model would account for the many varying factors in a tennis match, like the surface type, the weather, the player’s performance that day, and more. Unfortunately, I couldn’t find a tennis dataset on Kaggle with statistics from a large number of matches. I could have created my own large dataset of tennis match statistics, but that would have taken a very long time, and creating a dataset is beyond the scope of this class. Because of the small dataset, I was unable to prove that the correlation I predicted exists.

Takeaways

Although I was unsuccessful in proving the existence of the correlation I predicted because of flaws in the project, I learned a lot about the ML process.

1. A desired accuracy doesn’t always mean a good model

In my model, I hoped for 70–80% accuracy. I achieved that, but after looking in depth at the results, I found flaws in the model. I revised it, ultimately leading to a lower but truer accuracy. As I did in this project, in the future I’ll carefully assess the results and try to identify any influencing factors, no matter the accuracy.

2. The Importance of Trial and Error

Most ML models aren’t perfect after the first pass. In my case, I ran into many problems. For example, I had to go back and drop rows in my dataset to remove a proportion bias that boosted the accuracy of my model. With so many influencing factors involved in creating an accurate ML model, flaws are bound to be present. Therefore, trial and error is the basis for developing a stable and accurate ML model.