In the vast landscape of deep learning, pre-trained neural networks stand as formidable giants, having processed more than a million images to learn intricate patterns and representations. Leveraging these pre-trained networks for feature extraction offers a shortcut to understanding complex visual data. In this guide, we’ll walk through feature extraction, covering the underlying mathematics and a practical implementation in Python.

Feature extraction is the process of capturing meaningful representations from raw data, enabling machines to understand and interpret complex patterns. In computer vision, feature extraction plays a pivotal role in analyzing and understanding images. By extracting features from pre-trained neural networks, we can leverage the knowledge acquired from large image datasets to improve performance on our own tasks.

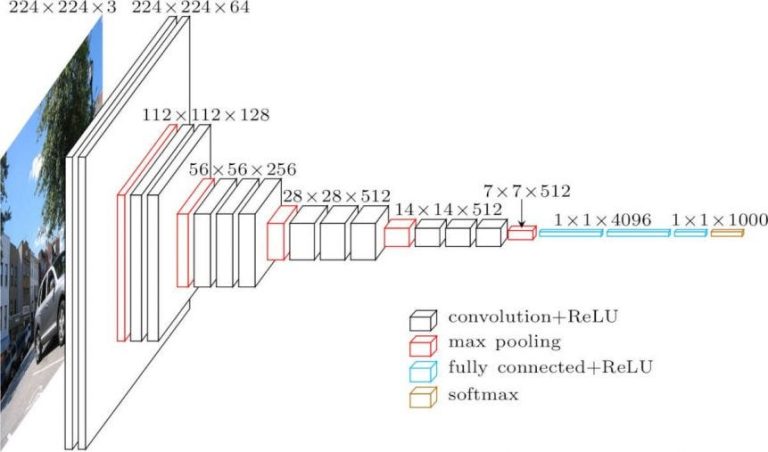

Pre-trained neural networks such as VGG, ResNet, and Inception have been trained on large image datasets like ImageNet, learning to extract hierarchical features that capture rich visual semantics. These networks act as powerful feature extractors, transforming raw images into high-level representations that encode useful information about the underlying visual content.

Let’s delve into the mathematical underpinnings of feature extraction with pre-trained neural networks. At its core, feature extraction involves passing raw images through the pre-trained network and extracting the activations of specific layers. These activations serve as the extracted features, capturing the visual semantics of the input images. Mathematically, the extracted features are the output of the network truncated at the chosen layer.
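One way to write this down, using notation that is an assumption of this sketch rather than something defined earlier: treat the network as a composition of $L$ layers $f_1, \dots, f_L$. The features taken at layer $l$ for an input image $x$ are then

$$\phi_l(x) = (f_l \circ f_{l-1} \circ \cdots \circ f_1)(x), \qquad 1 \le l \le L.$$

The toy NumPy example below illustrates the same idea. The three-layer network, its random weights, and the helper `extract_features` are all hypothetical; a real pre-trained model would supply learned weights and convolutional layers instead.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy "network": three dense layers with fixed random weights.
# A real pre-trained model would provide learned weights instead.
weights = [
    rng.standard_normal((8, 16)),
    rng.standard_normal((16, 4)),
    rng.standard_normal((4, 3)),
]


def relu(z):
    """Element-wise rectified linear unit."""
    return np.maximum(z, 0.0)


def extract_features(x, weights, layer):
    """Return the activations after the first `layer` layers (1-based)."""
    if not 1 <= layer <= len(weights):
        raise ValueError("layer out of range")
    h = x
    for w in weights[:layer]:
        h = relu(h @ w)
    return h


# One flattened "image" with 8 values, as a batch of size 1.
x = rng.standard_normal((1, 8))

# Features taken after the second layer, i.e. phi_2(x) in the notation above.
phi = extract_features(x, weights, layer=2)
print(phi.shape)  # (1, 4)
```

Choosing a smaller `layer` returns earlier, lower-level activations; choosing a larger one returns activations closer to the network's final output.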

Let’s demonstrate how to perform feature extraction with a pre-trained neural network using Python and TensorFlow:

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.applications import VGG16
from tensorflow.keras.applications.vgg16 import preprocess_input
from tensorflow.keras.preprocessing import image

# Load pre-trained VGG16 model without the fully connected classifier
model = VGG16(weights='imagenet', include_top=False)

# Load the image and resize it to the 224x224 input size used by VGG16
img_path = 'example_image.jpg'
img = image.load_img(img_path, target_size=(224, 224))

# Convert to an array, add a batch dimension, and apply VGG16 preprocessing
x = image.img_to_array(img)
x = np.expand_dims(x, axis=0)
x = preprocess_input(x)

# Extract features
features = model.predict(x)
print(features.shape)
```

In this example, we load the pre-trained VGG16 model without the fully connected layers (`include_top=False`). We then load and preprocess an example image before passing it through the VGG16 model to extract features. For a 224×224 input, the resulting array has shape `(1, 7, 7, 512)`: the output of the final convolutional block, which holds the high-level representation of the input image captured by the pre-trained network.
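A common next step is to collapse each feature map into a single vector so that images can be compared or fed to a small classifier. The sketch below assumes feature maps of shape `(1, 7, 7, 512)`, which is what VGG16 without its top layers produces for a 224×224 input. It uses random arrays as stand-ins for real network output, so it runs without TensorFlow. The helpers `global_average_pool` and `cosine_similarity` are illustrative names, not part of any library.

```python
import numpy as np


def global_average_pool(feature_maps):
    """Average over the two spatial axes of a (batch, H, W, C) array."""
    return feature_maps.mean(axis=(1, 2))


def cosine_similarity(a, b):
    """Cosine of the angle between two 1-D vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


rng = np.random.default_rng(0)

# Stand-ins for the output of model.predict(x) on two different images.
# Real VGG16 feature maps would come from the network, not a random generator.
features_a = rng.random((1, 7, 7, 512))
features_b = rng.random((1, 7, 7, 512))

# One 512-dimensional descriptor per image.
vec_a = global_average_pool(features_a)[0]
vec_b = global_average_pool(features_b)[0]
print(vec_a.shape)  # (512,)

# Similarity between the two descriptors; values near 1 mean "more alike".
print(cosine_similarity(vec_a, vec_b))
```

With real features, the same two helpers could be applied directly to the `features` array returned by `model.predict`. Averaging is only one option; flattening the feature map keeps spatial detail at the cost of a much longer vector.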

Feature extraction with pre-trained neural networks offers a powerful approach to understanding and analyzing visual data. By leveraging the knowledge acquired from large image datasets, we can extract rich representations that encode useful information about the underlying visual content. With the mathematical foundations and practical implementation demonstrated in this guide, you’re equipped to apply feature extraction to your own visual data.
