Naive Bayes is a classification algorithm used to predict the category (or class) of an item based on a set of features (predictors). It works using the principles of probability, specifically Bayes’ theorem.

Bayes’ Theorem

It is a fundamental concept in probability theory and statistics. It deals with conditional probability, which is the probability of an event occurring given that another event or condition has already occurred.

Formula: P(A | B) = ( P(B | A) * P(A) ) / P(B)

where:

P(A | B) is the posterior probability of event A occurring, given that event B has already occurred. This is what we are trying to calculate.

P(B | A) is the likelihood of event B occurring, given that event A is true.

P(A) is the prior probability of event A occurring, independent of any other event. This is our initial belief about how likely A is to happen.

P(B) is the total probability of event B occurring, regardless of A.
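As a quick numeric check of the formula, here is a minimal Python sketch. The setting (A is "the email is spam", B is "the email contains the word money") and all of the numbers are hypothetical and chosen only for illustration.

```python
# Hypothetical numbers, for illustration only.
p_a = 0.2               # P(A): prior probability that an email is spam
p_b_given_a = 0.6       # P(B | A): probability of "money" appearing in a spam email
p_b_given_not_a = 0.05  # P(B | not A): probability of "money" in a non-spam email

# Total probability of B, summed over both cases of A.
p_b = p_b_given_a * p_a + p_b_given_not_a * (1 - p_a)

# Bayes' theorem: P(A | B) = P(B | A) * P(A) / P(B)
p_a_given_b = p_b_given_a * p_a / p_b

print(f"P(B) = {p_b:.3f}")
print(f"P(A | B) = {p_a_given_b:.3f}")
```

With these assumed inputs, seeing the word raises the probability of spam from the prior of 0.2 to a posterior of 0.75.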

Assumption: The key concept in Naive Bayes is the assumption of independence between features. This means it assumes that, within a given class, features don’t influence each other (e.g., the appearance of the word “money” doesn’t affect the influence of the word “urgent”). While this might not always be true in reality, it simplifies the calculations.
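Under this assumption, the likelihood of seeing several features together is simply the product of the per-feature likelihoods. A minimal sketch, where the per-word probabilities for a spam class are hypothetical values:

```python
import math

# Hypothetical per-feature likelihoods P(word | spam); illustrative values only.
likelihood_given_spam = {"money": 0.6, "urgent": 0.4}

# Naive independence assumption:
# P(money, urgent | spam) = P(money | spam) * P(urgent | spam)
joint_likelihood = math.prod(likelihood_given_spam.values())

print(f"P(money, urgent | spam) = {joint_likelihood:.2f}")
```

Without the assumption, the joint probability of every feature combination would have to be estimated directly, which needs far more data.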

Below are the specific implementations of Naive Bayes designed for classification based on different data types:

- MultinomialNB: Suitable for discrete features (data with limited categories) and often used for count data. It uses the multinomial distribution to model the probability of features given a class.

- GaussianNB: Suitable for continuous features (numerical data that can take any value within a range). It assumes features follow a Gaussian (normal) distribution.
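The two variants differ mainly in how P(feature | class) is estimated. The sketch below is a simplified illustration of that difference, not the scikit-learn implementation: the counts, the measurements, and the smoothing constant `alpha` are all assumptions made for the example.

```python
from statistics import NormalDist, mean, pstdev

# --- Multinomial-style estimate: smoothed relative frequency of counts ---
# Hypothetical word counts observed in documents of one class.
word_counts = {"money": 6, "urgent": 3, "hello": 1}
alpha = 1.0  # additive (Laplace) smoothing constant, assumed here
total = sum(word_counts.values())
p_word_given_class = {
    word: (count + alpha) / (total + alpha * len(word_counts))
    for word, count in word_counts.items()
}

# --- Gaussian-style estimate: density under a normal fitted to the class ---
# Hypothetical continuous measurements (e.g. temperature) for one class.
temperatures = [21.0, 23.5, 22.0, 24.5, 23.0]
class_dist = NormalDist(mu=mean(temperatures), sigma=pstdev(temperatures))
density_at_22 = class_dist.pdf(22.0)

print(p_word_given_class)
print(f"Gaussian density at 22.0: {density_at_22:.3f}")
```

The multinomial estimates sum to 1 across the vocabulary, while the Gaussian value is a density rather than a probability, so it can exceed 1 for narrow distributions.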

Let’s create some sample data like below, which serves as prior knowledge or training data for our Naive Bayes algorithm.

As all the above predictors are discrete columns, we can use the MultinomialNB classifier.

MultinomialNB Classifier

This is based on the concept of the multinomial distribution.

What is the multinomial distribution?

It describes the probability distribution of discrete outcomes with a fixed number of possibilities (like categories in text classification). It provides a powerful tool for modelling and analysing scenarios with discrete outcomes in various machine learning applications.
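For reference, the multinomial probability of observing counts x_1, ..., x_k in n independent trials with category probabilities p_1, ..., p_k is n! / (x_1! * ... * x_k!) * p_1^x_1 * ... * p_k^x_k. A minimal sketch of that formula in Python (the function name `multinomial_pmf` is our own, not a library call):

```python
from math import factorial, prod

def multinomial_pmf(counts, probs):
    """Probability of observing `counts` in sum(counts) independent trials."""
    n = sum(counts)
    # Number of distinct orderings that produce these counts.
    coefficient = factorial(n)
    for x in counts:
        coefficient //= factorial(x)
    # Multiply by the probability of any one such ordering.
    return coefficient * prod(p ** x for p, x in zip(probs, counts))

# Two fair coin flips: one head and one tail can happen in 2 orders.
print(multinomial_pmf([1, 1], [0.5, 0.5]))  # 0.5
```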

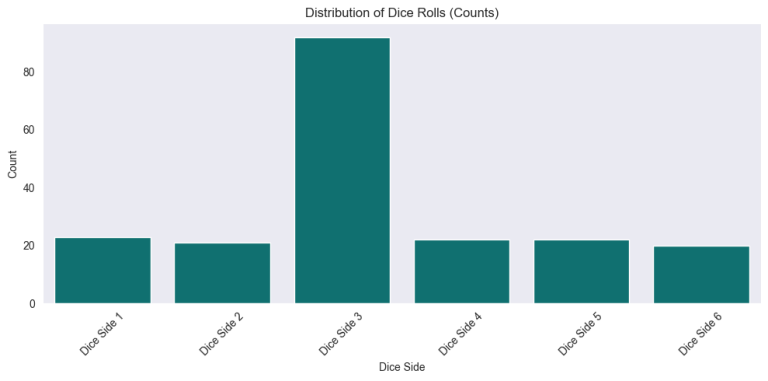

To understand the multinomial distribution, let’s consider a dice-rolling example with a six-sided die. We assign an initial probability to each side and look at how often each side occurs in 10 rolls.

import numpy as np

k = 6  # Number of sides on a die

n = 10  # Number of rolls

# Probabilities for each side (1 to 6)

p = np.array([0.1, 0.1, 0.5, 0.1, 0.1, 0.1])

# Number of runs, to get biased dice counts for each side following the probabilities above

sample_size = 20

# Simulate outcome counts (number of times each side appears in 10 rolls)

outcome_counts = np.random.multinomial(n, p, size=sample_size)

np.random.multinomial generates outcomes based on the supplied probabilities, i.e., p. Because this function draws discrete outcomes at random, it is advisable to use a larger sample size, i.e., number of runs, so that the stated probability bias shows up in the generated outcomes. This is roughly visible in the plots below.

As we gave dice side 3 a higher probability, i.e., 0.5, we expect dice side 3 to occur about 5 times out of 10 rolls. As we repeated this 20 times, we can clearly see that dice side 3 occurs more often than the other sides, on average, in the generated events.
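The expected count for each side is n * p, so side 3 should average about 10 * 0.5 = 5 and every other side about 1. A minimal sketch that checks this using the standard library's `random.choices` in place of NumPy (the seed and the variable names are assumptions made for repeatability and illustration):

```python
import random

random.seed(0)  # fixed seed so the run is repeatable (arbitrary choice)

sides = [1, 2, 3, 4, 5, 6]
p = [0.1, 0.1, 0.5, 0.1, 0.1, 0.1]  # same biased probabilities as above
n = 10            # rolls per run
sample_size = 20  # number of runs

# Count how often each side appears in each run of n rolls.
runs = []
for _ in range(sample_size):
    rolls = random.choices(sides, weights=p, k=n)
    runs.append([rolls.count(side) for side in sides])

# Average count per side across runs, next to the expected value n * p.
for i, side in enumerate(sides):
    average = sum(run[i] for run in runs) / sample_size
    print(f"side {side}: average {average:.2f}, expected {n * p[i]:.2f}")
```

With only 20 runs the averages will not match n * p exactly; increasing `sample_size` brings them closer.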

Based on the training / prior knowledge data, let’s now predict an event using the MultinomialNB classifier.

# Sample weather condition

weather_condition = {"outlook": "sunny", "temp": "hot", "humidity": "high", "windy": False}

Let’s assume the above is the given weather condition and we want to predict the probability of the event play tennis[yes] occurring.

Calculating Prior Probability (P(Play = yes))

# Counting positive cases (play = yes)

positive_count = sum(row["play"] == "yes" for _, row in df_tennis_data.iterrows())

# Total number of data points

total_data_points = len(df_tennis_data)

# Prior probability, i.e., the share of "play = yes" cases in the training data

prior_probability = round(positive_count / total_data_points, 2)

print(f"Prior Probability (P(Play = yes)) --> {prior_probability}")

# Prior Probability (P(Play = yes)) --> 0.8

Calculating Likelihood (P(Evidence/Condition | Play = yes))

# Filtering records that match our weather condition and have play_tennis[yes]

filtered_data = [row for _, row in df_tennis_data.iterrows() if row["play"] == "yes" and all(row[key] == weather_condition[key] for key in weather_condition)]

print(filtered_data, "\n")

# Count filtered data

matching_evidence_count = len(filtered_data)

print(f"matching_evidence_count --> {matching_evidence_count}", "\n")

# Likelihood: out of all positive (i.e., play_tennis[yes]) rows, the proportion that also match our weather condition

chance = round(matching_evidence_count / positive_count, 2)

print("Likelihood (P(Evidence | Play = yes)):", chance)

# matching_evidence_count --> 8

# Likelihood (P(Evidence | Play = yes)): 0.67
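Note that the code above matches the whole weather condition at once. The independence assumption described earlier would instead estimate one likelihood per feature and multiply them. A minimal sketch of that variant, using a few hypothetical rows in place of df_tennis_data (the rows and the helper name `feature_likelihood` are assumptions, not the article's data or code):

```python
import math

# Hypothetical stand-in rows; NOT the article's training data.
rows = [
    {"outlook": "sunny", "temp": "hot", "play": "yes"},
    {"outlook": "sunny", "temp": "mild", "play": "yes"},
    {"outlook": "rainy", "temp": "hot", "play": "yes"},
    {"outlook": "sunny", "temp": "hot", "play": "no"},
]
condition = {"outlook": "sunny", "temp": "hot"}

def feature_likelihood(rows, feature, value, label):
    """Estimate P(feature = value | play = label) as a relative frequency."""
    in_class = [r for r in rows if r["play"] == label]
    matches = sum(r[feature] == value for r in in_class)
    return matches / len(in_class)

# Naive Bayes: multiply the per-feature likelihoods for the class "yes".
per_feature = {
    feature: feature_likelihood(rows, feature, value, "yes")
    for feature, value in condition.items()
}
naive_likelihood = math.prod(per_feature.values())

print(per_feature)
print(f"P(condition | play = yes) under independence: {naive_likelihood:.3f}")
```

The per-feature version still produces an estimate when no training row matches the full condition exactly, which is the main practical reason for the independence assumption.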

Predict event probability, i.e., posterior probability

P(Play = yes | Evidence) ∝ Prior Probability * Likelihood

Note: P(Evidence) can be challenging to calculate in real-world scenarios. It requires considering all possible weather combinations and their probabilities (Play = Yes or No). Because P(Evidence) is the same for every class, it does not change which class scores highest, so we leave it out of the formula. The result is therefore a score proportional to the posterior rather than a normalized probability.

predicted_probability = round(prior_probability * chance, 2)

print("Probability of Play = yes on given weather condition:", predicted_probability)

if predicted_probability > 0.5:

    print("Play_tennis is predicted as 'yes'")

else:

    print("Play_tennis is predicted as 'no'")

# Probability of Play = yes on given weather condition: 0.54

# Play_tennis is predicted as 'yes'
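The value 0.54 is the product of prior and likelihood, which is proportional to the posterior but not divided by P(Evidence). One way to obtain a normalized probability is to compute the same product for Play = no and divide each by their sum. A minimal sketch: the "yes" numbers come from the outputs above, the prior for "no" follows as 1 - 0.8, and the likelihood for "no" is a hypothetical placeholder because it is not computed in this walkthrough.

```python
prior = {"yes": 0.8, "no": 0.2}
likelihood = {
    "yes": 0.67,
    "no": 0.33,  # hypothetical value, for illustration only
}

# Unnormalized score per class: prior * likelihood.
scores = {label: prior[label] * likelihood[label] for label in prior}

# P(Evidence) is the sum of the scores over all classes.
p_evidence = sum(scores.values())

# Normalized posterior per class; these sum to 1.
posterior = {label: score / p_evidence for label, score in scores.items()}

predicted = max(posterior, key=posterior.get)
print(posterior)
print(f"Predicted class: {predicted}")
```

Comparing the normalized posteriors (or simply picking the class with the larger score) avoids relying on a fixed 0.5 threshold applied to an unnormalized value.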

The play tennis event is predicted as ‘yes’. This agrees with the data, because it contains enough occurrences of both the event itself, i.e., play tennis[yes], and the given weather condition together with play tennis[yes], to support the predicted probability.