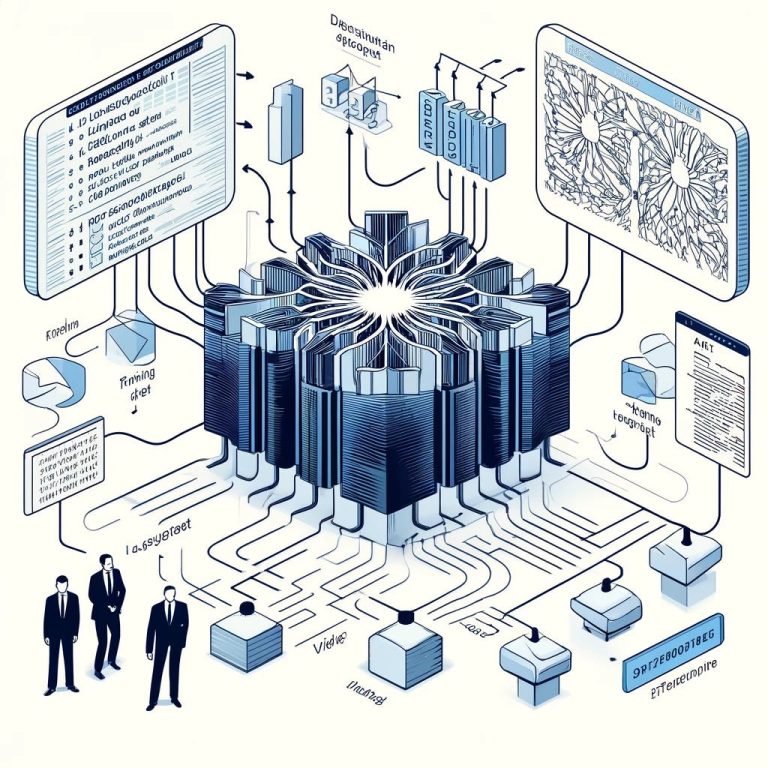

Large language models, such as GPT-3 and BERT, are trained on massive amounts of text data and can contain anywhere from hundreds of millions to billions of parameters. To store and distribute these models efficiently, different organizations and frameworks have developed a variety of file formats. In this article, we'll explore some of the most common file formats used for large language models and provide examples of how to load and run them using Python.

GGML and GGUF are two file formats designed for efficient storage and loading of large machine learning models. Both come from Georgi Gerganov's open-source ggml tensor library and the llama.cpp project built on top of it; the "GG" in each name stands for the author's initials.

GGML is an optimized format that aims to reduce the memory footprint and loading times of large models, largely by storing quantized weights, which makes it suitable for running on consumer hardware. GGUF is its successor: a single-file format that stores the tensors alongside key-value metadata, such as the model architecture and tokenizer settings, so new fields can be added without breaking existing loaders.
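To make the memory-footprint point concrete, here is a rough back-of-the-envelope sketch. The helper names `estimate_weight_bytes` and `format_gib`, the 7-billion-parameter count, and the bits-per-weight figures are illustrative assumptions, not values read from any particular file. Real quantized files also carry per-block scale factors and metadata, so actual sizes tend to run somewhat larger.

```python
def estimate_weight_bytes(num_parameters: int, bits_per_weight: float) -> int:
    """Approximate storage for the weights alone (hypothetical helper)."""
    return int(num_parameters * bits_per_weight / 8)


def format_gib(num_bytes: int) -> str:
    """Render a byte count in GiB with one decimal place."""
    return f"{num_bytes / 2**30:.1f} GiB"


if __name__ == "__main__":
    params = 7_000_000_000  # assumed model size, for illustration only
    precisions = [("float32", 32), ("float16", 16), ("8-bit", 8), ("4-bit", 4)]
    for label, bits in precisions:
        size = estimate_weight_bytes(params, bits)
        print(f"{label:>8}: {format_gib(size)}")
```

Halving the bits per weight halves the estimate, which is why lower-precision formats are attractive on machines with limited RAM.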

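To load and run one of these models in Python, a widely used option is llama-cpp-python, the Python bindings for llama.cpp. Recent llama.cpp releases read GGUF only, so a legacy GGML file has to be converted to GGUF first:

    from llama_cpp import Llama


    class GGMLModel:
        def __init__(self, model_path):
            # Load the model weights through llama.cpp
            self.model = Llama(model_path=model_path)

        def run(self, input_text, max_tokens=128):
            # Preprocess input (llama.cpp tokenizes bytes, not str)
            input_tokens = self.model.tokenize(input_text.encode("utf-8"))

            # Run inference: generate() yields one token id at a time
            output_tokens = []
            for token in self.model.generate(input_tokens):
                if token == self.model.token_eos() or len(output_tokens) >= max_tokens:
                    break
                output_tokens.append(token)

            # Postprocess output
            output_text = self.model.detokenize(output_tokens).decode("utf-8", errors="ignore")

            return output_text


    # Usage
    model = GGMLModel('path/to/model.gguf')
    output = model.run('Input text goes here')
    print(output)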
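A GGUF file can be recognized from its first few bytes before any model is loaded. The sketch below reads the fixed-size header using only the standard library. It assumes the little-endian layout described in the GGUF specification for versions 2 and 3 (a 4-byte `GGUF` magic, a 32-bit version, then 64-bit tensor and metadata counts); `GGUFHeader` and `read_gguf_header` are hypothetical names introduced here, not part of any library.

```python
import struct
from typing import NamedTuple


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(path: str) -> GGUFHeader:
    """Read the fixed-size header at the start of a GGUF file.

    Assumed layout (GGUF v2/v3, little-endian):
    4-byte magic, uint32 version, uint64 tensor count, uint64 metadata count.
    """
    with open(path, "rb") as f:
        raw = f.read(24)
    if len(raw) < 24 or raw[:4] != b"GGUF":
        raise ValueError(f"{path} does not look like a GGUF file")
    version, tensor_count, kv_count = struct.unpack("<IQQ", raw[4:24])
    return GGUFHeader(version, tensor_count, kv_count)
```

A check like this gives a clearer error than a failed load when a legacy GGML file or an unrelated file is passed in by mistake.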

The Hugging Face Transformers library provides a unified interface for working with a wide range of pre-trained language models. Models in the HF format are typically stored as a directory containing several files, including the model weights, the configuration, and the tokenizer files.
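As a rough illustration of that directory layout, the sketch below inspects a local model folder with the standard library only. The file names it looks for are ones commonly seen in Hugging Face model directories, but they are assumptions here rather than an exhaustive list (sharded weight files, for example, use other names), and `describe_model_dir` is a hypothetical helper.

```python
import json
from pathlib import Path

# Commonly seen file names (assumed for illustration, not exhaustive).
WEIGHT_FILES = ("model.safetensors", "pytorch_model.bin")
TOKENIZER_FILES = (
    "tokenizer.json",
    "tokenizer_config.json",
    "vocab.json",
    "tokenizer.model",
)


def describe_model_dir(model_dir: str) -> dict:
    """Summarize which expected files are present in a model directory."""
    root = Path(model_dir)
    present = {p.name for p in root.iterdir() if p.is_file()}

    # config.json is plain JSON, so it can be read without loading the model.
    config_path = root / "config.json"
    config = json.loads(config_path.read_text()) if config_path.exists() else {}

    return {
        "has_config": "config.json" in present,
        "model_type": config.get("model_type"),
        "weights": sorted(present.intersection(WEIGHT_FILES)),
        "tokenizer": sorted(present.intersection(TOKENIZER_FILES)),
    }
```

Running this on a downloaded model folder before loading it can show quickly whether the weights or tokenizer files are missing.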

To load and run an HF model in Python, you can use the transformers library:

    from transformers import AutoTokenizer, AutoModelForCausalLM


    class HuggingFaceModel:
        def __init__(self, model_path):
            self.tokenizer =…