Optimization problems involve finding the best possible solution from a wide range of options, and they arise frequently both in real-life situations and in most areas of scientific research. However, many complex problems cannot be solved with simple computer methods, or would take an inordinate amount of time to solve.

Because simple algorithms are ineffective at solving these problems, experts around the world have worked to develop more effective techniques that can solve them within practical time frames. Artificial neural networks (ANNs) are at the heart of some of the most promising techniques explored so far.

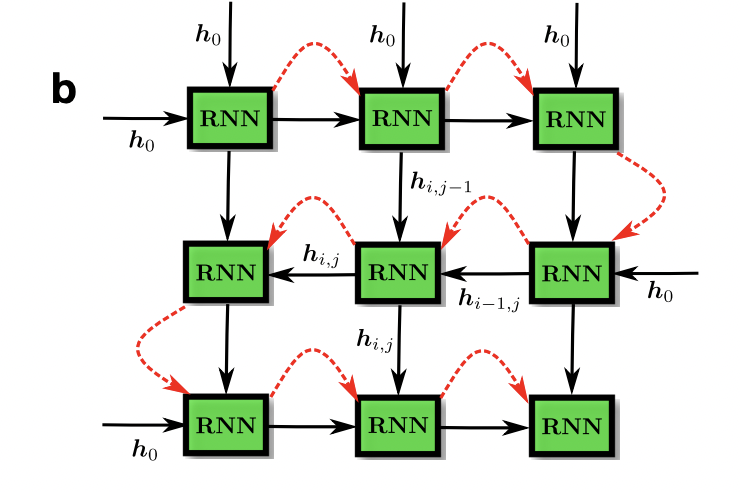

A new study by the Vector Institute, the University of Waterloo and the Perimeter Institute for Theoretical Physics in Canada presents variational neural annealing, a new optimization method that combines recurrent neural networks (RNNs) with the notion of annealing. The approach uses a parameterized model to represent the distribution of possible solutions to a given problem. Its goal is to solve real-world optimization problems with a novel algorithm based on annealing theory and on RNNs borrowed from natural language processing (NLP).

The proposed framework is based on the principle of annealing, inspired by metallurgical annealing, which consists of heating a material and cooling it slowly to bring it into a lower-energy, more robust and more stable state. Simulated annealing was developed from this process, and it seeks numerical solutions to optimization problems.
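To make the classical procedure concrete, here is a minimal simulated-annealing sketch in Python. The test problem (an open one-dimensional Ising chain with energy H(s) = -Σ J_i s_i s_{i+1}), the Metropolis acceptance rule, the linear cooling schedule and all function and parameter names are our own illustrative assumptions, not code from the study or its repositories.

```python
import math
import random


def ising_chain_energy(spins, couplings):
    """Energy H(s) = -sum_i J_i * s_i * s_{i+1} of an open 1D Ising chain (spins are +1 or -1)."""
    return -sum(j * spins[i] * spins[i + 1] for i, j in enumerate(couplings))


def simulated_annealing(couplings, t_start=2.0, t_end=0.01, n_sweeps=2000, seed=0):
    """Metropolis simulated annealing with a linear cooling schedule (illustrative sketch).

    Returns the lowest-energy configuration visited and its energy.
    """
    rng = random.Random(seed)
    n = len(couplings) + 1
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    energy = ising_chain_energy(spins, couplings)
    best_spins, best_energy = spins[:], energy
    for sweep in range(n_sweeps):
        # Temperature decreases linearly from t_start to t_end over the run.
        t = t_start + (t_end - t_start) * sweep / max(1, n_sweeps - 1)
        for i in range(n):
            # Local field exerted on spin i by its (at most two) neighbours.
            field = 0.0
            if i > 0:
                field += couplings[i - 1] * spins[i - 1]
            if i < n - 1:
                field += couplings[i] * spins[i + 1]
            delta = 2.0 * spins[i] * field  # energy change if spin i is flipped
            # Metropolis rule: always accept downhill moves, uphill ones with prob exp(-dE/T).
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                spins[i] = -spins[i]
                energy += delta
                if energy < best_energy:
                    best_spins, best_energy = spins[:], energy
    return best_spins, best_energy


if __name__ == "__main__":
    couplings = [1.0] * 7  # ferromagnetic chain of 8 spins; its ground-state energy is -7
    spins, energy = simulated_annealing(couplings)
    print(spins, energy)
```

Moves that lower the energy are always accepted, while uphill moves are accepted with probability exp(-ΔE/T), which becomes vanishingly small as T approaches zero. This is the numerical counterpart of slow cooling: early on the search can escape poor configurations, and later it settles into low-energy ones.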

The main distinguishing feature of this optimization method is that it combines the efficiency and computing power of ANNs with the advantages of simulated annealing techniques. The team used RNNs, a class of algorithms that has shown particular promise in NLP applications. While these networks are typically used in NLP to interpret human language, the researchers repurposed them to solve optimization problems.
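One way to see how the two ingredients fit together: as we understand the paper's classical variant, the network defines a probability distribution p_λ over candidate solutions σ (λ being the network parameters), and training minimizes a variational free energy while a temperature T(t) is gradually lowered to zero. In our own notation, which may differ from the authors':

$$
F_\lambda(t) = \langle H \rangle_\lambda - T(t)\, S(p_\lambda),
\qquad
S(p_\lambda) = -\sum_{\boldsymbol{\sigma}} p_\lambda(\boldsymbol{\sigma}) \log p_\lambda(\boldsymbol{\sigma}),
\qquad
p_\lambda(\boldsymbol{\sigma}) = \prod_{i=1}^{N} p_\lambda(\sigma_i \mid \sigma_{<i}).
$$

At high temperature the entropy term S keeps the distribution broad; as T(t) approaches zero the objective reduces to the expected energy ⟨H⟩_λ, so the model concentrates on low-energy solutions. The product form of p_λ is what makes the model autoregressive: each variable is generated conditioned on the ones before it, much as an RNN language model generates one word at a time.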

Compared with more traditional numerical implementations of annealing, their RNN-based method produced better solutions, improving on both classical and quantum annealing procedures. Using autoregressive networks, the researchers were able to encode the annealing paradigm. Their method takes optimization problem-solving to a new level by directly exploiting the infrastructure used to train modern neural networks, such as TensorFlow or PyTorch, accelerated by GPUs and TPUs.
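The sketch below illustrates, on a toy scale, how an autoregressive model can carry out annealing by minimizing a free energy ⟨H⟩ - T·S while T is lowered. It is a hypothetical simplification, not the authors' code: instead of an RNN, each conditional p(s_i | s_{i-1}) is a logistic function of the previous spin only; instead of estimating gradients from samples, it enumerates all 2^6 configurations of a six-spin Ising chain and computes the gradient exactly; and the schedule, learning rate and every name are our own assumptions.

```python
import math
from itertools import product


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def chain_energy(bits, couplings):
    """Ising-chain energy H = -sum_i J_i s_i s_{i+1}, mapping bits 0/1 to spins -1/+1."""
    spins = [2 * bit - 1 for bit in bits]
    return -sum(j * spins[i] * spins[i + 1] for i, j in enumerate(couplings))


def logits(bits, b, w):
    """Logit of p(s_i = 1 | s_{i-1}) at every site; site 0 sees a fixed s_{-1} = 0."""
    prev = (0,) + tuple(bits[:-1])
    return [b[i] + w[i] * prev[i] for i in range(len(bits))], prev


def log_prob(bits, b, w):
    """Autoregressive log-likelihood log p(s) = sum_i log p(s_i | s_{i-1})."""
    z, _ = logits(bits, b, w)
    # log(1 - sigmoid(z)) == log_sigmoid(-z)
    return sum(log_sigmoid(zi if s else -zi) for s, zi in zip(bits, z))


def free_energy_and_grad(b, w, couplings, temperature):
    """Exact F = <H> + T<log p> (= <H> - T*S) and its gradient, by enumerating all 2^N states."""
    n = len(b)
    states = []
    for bits in product((0, 1), repeat=n):
        lp = log_prob(bits, b, w)
        states.append((bits, math.exp(lp), lp, chain_energy(bits, couplings)))
    free_energy = sum(p * (e + temperature * lp) for _, p, lp, e in states)
    mean_energy = sum(p * e for _, p, _, e in states)
    grad_b, grad_w = [0.0] * n, [0.0] * n
    for bits, p, lp, e in states:
        # Score-function form: grad F = E[(H + T log p - F) * grad log p]; F acts as a baseline.
        weight = p * (e + temperature * lp - free_energy)
        z, prev = logits(bits, b, w)
        for i in range(n):
            score = bits[i] - sigmoid(z[i])  # d log p(s_i | s_{i-1}) / d logit_i
            grad_b[i] += weight * score
            grad_w[i] += weight * score * prev[i]
    return free_energy, mean_energy, grad_b, grad_w


def variational_annealing(couplings, t_start=2.0, n_temps=100, steps_per_temp=5, lr=0.3):
    """Lower T linearly from t_start to 0, taking a few gradient steps on F at each temperature."""
    n = len(couplings) + 1
    b, w = [0.0] * n, [0.0] * n  # all-zero parameters = uniform (maximum-entropy) distribution
    for k in range(n_temps + 1):
        temperature = t_start * (1.0 - k / n_temps)
        for _ in range(steps_per_temp):
            _, _, grad_b, grad_w = free_energy_and_grad(b, w, couplings, temperature)
            b = [x - lr * g for x, g in zip(b, grad_b)]
            w = [x - lr * g for x, g in zip(w, grad_w)]
    return b, w


if __name__ == "__main__":
    couplings = [1.0] * 5  # ferromagnetic chain of 6 spins; ground-state energy is -5
    b, w = variational_annealing(couplings)
    _, mean_energy, _, _ = free_energy_and_grad(b, w, couplings, 0.0)
    print("mean energy after annealing:", round(mean_energy, 3))
```

As T falls, the entropy term matters less and the model shifts its probability mass toward aligned, low-energy configurations. For realistic problem sizes enumeration is infeasible, so gradients would instead be estimated from configurations sampled from the autoregressive model, which is where GPU-accelerated frameworks such as TensorFlow or PyTorch come in.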

The team carried out several tests comparing the performance of the method with that of traditional annealing optimization methods based on numerical simulations. On many paradigmatic optimization problems, the proposed approach outperformed all of the techniques it was compared against.

In the future, this algorithm could be applied to a wide variety of real-world optimization problems, allowing experts in various fields to solve difficult problems faster.

The researchers would like to further evaluate the performance of their algorithm on more realistic problems, and to compare it with existing state-of-the-art optimization techniques. They also intend to improve their technique by replacing certain components or incorporating new ones.

You can view the full article here.

The code is also available on GitHub:

Variational Neural Annealing

Simulated Classical and Quantum Annealing