Imagine you’re preparing to cook a new dish. You know some basics, but you need specific recipes and tips. So you pull out your favourite cookbook and a few magazine articles that provide detailed recipes and cooking tips. With this extra information at hand, you can cook a much better dish than you could with just your basic knowledge.

In this analogy:

- You are the LLM, trying to generate useful answers.

- The cookbook and magazine articles are the retrieval system, providing specific, relevant information to help with the task.

Retrieval-Augmented Generation (RAG) is like using those cookbooks and articles to boost your cooking skills. For an AI model, it means improving its ability to generate responses by first retrieving useful information from a large pool of data (such as books, databases, and articles) before attempting to answer a query. As a result, the AI’s responses are based not only on what it learned during training but also on the latest, most relevant information available.

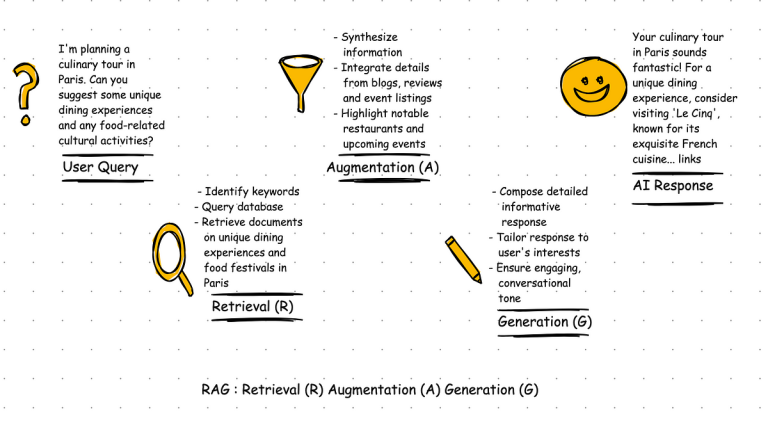

Let’s walk through each step of the Retrieval-Augmented Generation (RAG) process, using the example of an AI answering a complex travel-related question. The example includes the AI’s internal process as it handles the question, retrieves information, augments its understanding, and finally generates a comprehensive response.

User Query: “I’m planning a culinary tour in Paris. Can you suggest some unique dining experiences and any food-related cultural activities?”

Step 1: Retrieval (R)

- AI’s Internal Process:

- Identifying Keywords: The AI identifies key phrases such as “culinary tour,” “unique dining experiences,” and “food-related cultural activities” in Paris.

- Querying the Database: The AI queries its extensive database, which includes recent travel blogs, restaurant reviews, food critics’ articles, and cultural event listings specific to Paris.

- Fetching Relevant Information: It retrieves several highly relevant documents, including a blog post on unique Parisian eateries, recent reviews of culinary workshops, and a schedule of upcoming food festivals in Paris.
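The retrieval step can be sketched in a few lines of Python. This is a minimal illustration, not how any particular assistant works. The four-document corpus, the stopword list, and the `extract_keywords`/`retrieve` helpers are all hypothetical, and documents are ranked simply by how many keywords they share with the query.

```python
import re

# Hypothetical mini-corpus standing in for travel blogs, reviews and event listings.
DOCUMENTS = {
    "blog-eateries": "A guide to unique Parisian eateries, from tasting menus to dining in the dark.",
    "review-workshops": "Recent reviews of culinary workshops and cooking classes in Paris.",
    "festival-schedule": "Schedule of upcoming food festivals in Paris, including the Montmartre grape harvest.",
    "museum-hours": "Opening hours for art museums across the city.",
}

# Small illustrative stopword list; real systems use a fuller one.
STOPWORDS = {"a", "an", "and", "any", "are", "can", "for", "i", "in", "is",
             "of", "some", "the", "to", "you", "your", "me", "m"}

def extract_keywords(text: str) -> set[str]:
    """Lowercase the text, split it into words and drop stopwords."""
    words = re.findall(r"[a-z]+", text.lower())
    return {w for w in words if w not in STOPWORDS}

def retrieve(query: str, documents: dict[str, str], k: int = 3) -> list[str]:
    """Return the ids of up to k documents sharing the most keywords with the query."""
    query_terms = extract_keywords(query)
    scores = {
        doc_id: len(query_terms & extract_keywords(text))
        for doc_id, text in documents.items()
    }
    # Keep documents with at least one shared keyword, best first (ties keep corpus order).
    matching = [doc_id for doc_id, score in scores.items() if score > 0]
    matching.sort(key=lambda doc_id: scores[doc_id], reverse=True)
    return matching[:k]

if __name__ == "__main__":
    query = ("I'm planning a culinary tour in Paris. Can you suggest some unique "
             "dining experiences and any food-related cultural activities?")
    print(retrieve(query, DOCUMENTS))
    # ['blog-eateries', 'review-workshops', 'festival-schedule']
```

For this query, each of the three Paris food documents shares two keywords with the question, so all three are returned. The museum listing shares none and is dropped. Raw overlap counts ignore how common or rare a word is, which is why real search engines use weighted scoring instead.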

Step 2: Augmentation (A)

- AI’s Internal Process:

- Synthesising Information: The AI synthesises the information from the retrieved sources. It notes that ‘Le Cinq’ offers an exquisite tasting menu, ‘Dans Le Noir?’ provides a dining-in-the-dark experience, and the ‘Paris Gourmet Food Tour’ is highly recommended.

- Extracting and Integrating Details: The AI integrates details such as the location of these restaurants, the type of cuisine they offer, what makes each dining experience unique, and information about the food tour, including its route and the specialties covered.
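In many RAG systems, “augmentation” amounts to placing the retrieved passages into the prompt alongside the user’s question, and the model itself does the synthesis. Below is a minimal sketch of that prompt assembly. `build_augmented_prompt`, the source-id labels, and the character budget (a crude stand-in for a token limit) are illustrative assumptions.

```python
def build_augmented_prompt(query: str, passages: list[tuple[str, str]],
                           max_chars: int = 2000) -> str:
    """Combine the user's query with retrieved passages into one prompt.

    passages is a list of (source_id, text) pairs, most relevant first.
    Passages are added in order until the character budget would be exceeded.
    """
    context_lines = []
    used = 0
    for source_id, text in passages:
        line = f"[{source_id}] {text.strip()}"
        if used + len(line) > max_chars:
            break  # stop before exceeding the budget
        context_lines.append(line)
        used += len(line)
    context = "\n".join(context_lines)
    return (
        "Answer the question using the sources below. "
        "Cite sources by their id in square brackets.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {query}\n"
        "Answer:"
    )
```

Labelling each passage with its source lets the model cite where a recommendation came from. Ordering by relevance means the budget cuts the least useful passages first.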

Step 3: Generation (G)

- AI’s Internal Process:

- Composing the Response: The AI uses its language model capabilities to compose a detailed, informative, and engaging response, written in a conversational yet informative tone.

- Response Tailoring: The AI tailors the response to the user’s interest in unique experiences and the culinary arts, including suggestions for both dining and cultural activities.

AI Response: “Your culinary tour in Paris sounds fantastic! For a unique dining experience, consider visiting ‘Le Cinq,’ known for its exquisite French cuisine and elegant setting. Another intriguing option is ‘Dans Le Noir?’, where you can enjoy your meal in complete darkness, heightening your other senses. I also recommend joining the ‘Paris Gourmet Food Tour,’ which explores local favourites and hidden gems in neighbourhoods like Le Marais and the Latin Quarter. This tour offers tastings and also delves into the history and culture behind Parisian gastronomy. Finally, if your trip lines up, don’t miss the ‘Montmartre Grape Harvest Festival,’ a delightful event celebrating local food and wine. Enjoy your culinary adventure!”
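The generation step passes the augmented prompt to the language model, along with instructions about tone and focus. The sketch below assumes a chat-style model that accepts a list of `role`/`content` messages, a shape many chat APIs use. `generate_answer` and the `echo_model` stand-in are hypothetical, so replace them with your provider’s real client call.

```python
from typing import Callable

# Assumption: a chat model is any function from a list of messages to a reply string.
ChatModel = Callable[[list[dict[str, str]]], str]

def generate_answer(augmented_prompt: str, user_interests: list[str],
                    model: ChatModel) -> str:
    """Ask the model for a conversational answer tailored to the user's interests."""
    system = (
        "You are a friendly travel assistant. Write in a conversational but "
        "informative tone. Only recommend places mentioned in the sources. "
        "Focus on: " + ", ".join(user_interests) + "."
    )
    messages = [
        {"role": "system", "content": system},
        {"role": "user", "content": augmented_prompt},
    ]
    return model(messages)

def echo_model(messages: list[dict[str, str]]) -> str:
    """Stand-in model for testing: reports the system instruction it received."""
    return "System said: " + messages[0]["content"]
```

Keeping tone and tailoring in the system message separates them from the retrieved facts, so either can change without touching the other.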

- User Query: Starts the process with a specific question about culinary experiences in Paris.

- Retrieval (R): The AI searches its database for relevant information based on the identified keywords.

- Augmentation (A): The AI synthesises and integrates the retrieved information, preparing this enriched context for generating a response.

- Generation (G): The AI composes a detailed, tailored response, making sure it is engaging and informative.

- AI Response: The final output delivered to the user, answering the initial query in a comprehensive and engaging way.

- Accuracy and Relevance: By fetching and using the most relevant information, RAG allows LLMs to generate more accurate and contextually appropriate responses. This is especially useful in fields where up-to-date information is crucial, such as medicine, law, or customer service.

- Handling of Niche Topics: Even the best-trained models can struggle with niche or rarely discussed topics. RAG compensates by pulling in specific data on these topics, enabling the model to handle a wider range of queries.

- Continuous Learning: Conventional LLMs can become outdated if they are not retrained on new data. RAG helps mitigate this by continually pulling in fresh information from an updatable knowledge source, keeping the model’s outputs relevant over time without constant retraining.
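In a retrieval setup, new knowledge lives in the document store rather than in the model. Adding a document therefore makes it available on the very next query, while the model itself stays untouched. The `DocumentStore` class below is a hypothetical in-memory sketch. It ranks documents by shared words and prefers newer documents when scores tie.

```python
import re
from datetime import date

class DocumentStore:
    """Tiny in-memory store: new documents are searchable as soon as they are added."""

    def __init__(self) -> None:
        self.docs: dict[str, tuple[date, str]] = {}

    def add(self, doc_id: str, published: date, text: str) -> None:
        """Add or replace a document; no model retraining is involved."""
        self.docs[doc_id] = (published, text)

    def search(self, query: str, k: int = 3) -> list[str]:
        """Rank by shared words, breaking ties in favour of newer documents."""
        terms = set(re.findall(r"[a-z]+", query.lower()))
        hits = []
        for doc_id, (published, text) in self.docs.items():
            overlap = len(terms & set(re.findall(r"[a-z]+", text.lower())))
            if overlap:
                hits.append((overlap, published, doc_id))
        hits.sort(reverse=True)  # highest overlap first, then most recent
        return [doc_id for _, _, doc_id in hits[:k]]
```

Adding a newer guide changes the top result immediately. The only update step is a call to `add`.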

Step 1: Define the Need

- Determine whether your project benefits from up-to-date information or needs to cover topics that are broad or rarely discussed. Projects that require high accuracy in fast-changing fields are ideal candidates for RAG.

Step 2: Set Up Your Data Source

- Choose a reliable data source relevant to your domain. This could be a proprietary database, a curated list of resources, or a commercial dataset. Make sure this data can be queried efficiently.
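“Queried efficiently” usually means indexing the data so that a query does not have to scan every record. Full-text engines such as Elasticsearch and Solr are built on Apache Lucene’s inverted indexes. The sketch below shows the same idea in its simplest form, using hypothetical `tokenize`, `build_inverted_index`, and `candidates` helpers.

```python
import re
from collections import defaultdict

def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_inverted_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map every term to the set of document ids that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return dict(index)

def candidates(index: dict[str, set[str]], query: str) -> set[str]:
    """Documents containing at least one query term, found by index lookups alone."""
    result: set[str] = set()
    for term in tokenize(query):
        result |= index.get(term, set())
    return result
```

The index is built once, when data is loaded. Each query then costs a handful of dictionary lookups, however large the collection grows.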

Step 3: Implement a Retrieval System

- Use tools like Elasticsearch or Apache Solr to set up a robust search system that can quickly find relevant information for the queries your LLM will process.
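Elasticsearch and Solr both rank matches with BM25 by default. The from-scratch sketch below illustrates that scoring so the ranking behaviour is easier to reason about. It is not a substitute for either engine, and `bm25_rank` is a hypothetical helper. The `k1=1.2` and `b=0.75` defaults and the IDF formula follow Lucene’s BM25 implementation.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())

def bm25_rank(query: str, docs: dict[str, str],
              k1: float = 1.2, b: float = 0.75) -> list[tuple[str, float]]:
    """Score every document against the query with BM25; return (id, score), best first."""
    if not docs:
        return []
    tokenized = {doc_id: tokenize(text) for doc_id, text in docs.items()}
    n_docs = len(tokenized)
    avg_len = sum(len(t) for t in tokenized.values()) / n_docs
    # Document frequency: how many documents contain each term.
    df = Counter(term for tokens in tokenized.values() for term in set(tokens))
    scores = {}
    for doc_id, tokens in tokenized.items():
        tf = Counter(tokens)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            # Lucene-style IDF: rarer terms weigh more, and the value is always positive.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation (k1) and document-length normalisation (b).
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores[doc_id] = score
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

Rare terms such as “workshop” contribute more than common ones, and the `b` parameter slightly penalises long documents.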

Step 4: Integrate with the LLM

- Modify your LLM framework to include a retrieval step before generating any response. This step should take the input query, use the retrieval system to find relevant data, and then feed both the query and the retrieved data into the LLM.
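This step can be sketched as a thin wrapper around whatever function currently calls your model. The retriever and the LLM are plain callables here, so any search backend or model client can be plugged in. `RAGPipeline`, the prompt template, and the stub functions are illustrative assumptions, not part of any framework.

```python
from typing import Callable

Retriever = Callable[[str], list[str]]  # query -> ranked passages
LLM = Callable[[str], str]              # prompt -> generated text

class RAGPipeline:
    """Wraps an existing LLM call so that every query goes through retrieval first."""

    def __init__(self, retriever: Retriever, llm: LLM, top_k: int = 3) -> None:
        self.retriever = retriever
        self.llm = llm
        self.top_k = top_k

    def answer(self, query: str) -> str:
        # 1. Retrieve: find passages relevant to the query.
        passages = self.retriever(query)[: self.top_k]
        # 2. Augment: combine the passages and the original query into one prompt.
        context = "\n".join(f"- {p}" for p in passages) or "- (no relevant passages found)"
        prompt = f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
        # 3. Generate: pass the augmented prompt to the model.
        return self.llm(prompt)

if __name__ == "__main__":
    # Stub retriever and an "echo" LLM that returns its prompt, for inspection.
    passages = ["Le Cinq offers a tasting menu.", "Dans Le Noir? serves meals in the dark."]
    pipeline = RAGPipeline(retriever=lambda q: passages, llm=lambda prompt: prompt)
    print(pipeline.answer("Where can I eat in the dark?"))
```

Because callers only see `answer`, the retrieval backend can later be swapped (for example, from keyword search to a vector index) without changing the calling code.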

Step 5: Train and Fine-Tune

- Train your model on both its standard training data and examples that use the retrieval-augmented setup. Fine-tuning is optional but can help the model learn to use retrieved information effectively when generating responses.
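If you do fine-tune, the training examples should resemble what the model will see at inference time: a question, a handful of retrieved passages, and the desired answer. The sketch below builds such examples and writes them as JSON Lines. The `prompt`/`completion` field names are an assumption, since each fine-tuning service defines its own schema. Mixing in distractor passages is one common design choice, not a requirement.

```python
import json
import random

def make_training_example(question: str, answer: str, relevant: list[str],
                          distractors: list[str], rng: random.Random) -> dict[str, str]:
    """Build one prompt/completion pair whose prompt includes retrieved passages.

    Irrelevant passages are mixed in and shuffled so the model learns to rely on
    the useful sources rather than on whatever happens to appear first.
    """
    passages = relevant + distractors  # new list; the inputs are not modified
    rng.shuffle(passages)
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return {
        "prompt": f"Sources:\n{context}\n\nQuestion: {question}\nAnswer:",
        "completion": " " + answer,
    }

def write_jsonl(examples: list[dict[str, str]], path: str) -> None:
    """Write one JSON object per line, a format many training tools accept."""
    with open(path, "w", encoding="utf-8") as f:
        for ex in examples:
            f.write(json.dumps(ex, ensure_ascii=False) + "\n")
```

Passing an explicit `random.Random` with a fixed seed makes the shuffled dataset reproducible between runs.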

Step 6: Continuous Testing and Feedback

- Regularly test the system with real-world queries, and refine your data sources and retrieval methods based on feedback. This iterative process helps improve both the accuracy and the relevance of the responses over time.
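One simple way to track retrieval quality between iterations is recall@k, measured over a small set of real queries whose relevant documents a reviewer has labelled. `recall_at_k` below is a hypothetical helper that works with any retrieval function returning document ids in ranked order.

```python
from typing import Callable

def recall_at_k(retrieve: Callable[[str], list[str]],
                labelled: dict[str, set[str]], k: int = 3) -> float:
    """Average fraction of relevant documents that appear in the top k results.

    labelled maps each test query to the ids of documents a reviewer marked relevant.
    """
    if not labelled:
        raise ValueError("need at least one labelled query")
    total = 0.0
    for query, relevant in labelled.items():
        if not relevant:
            raise ValueError(f"query {query!r} has no relevant documents labelled")
        top_k = set(retrieve(query)[:k])
        total += len(top_k & relevant) / len(relevant)
    return total / len(labelled)
```

Re-running the same labelled set after each change to the data sources or retriever shows whether that change actually helped.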

Step 7: Deployment

- Deploy your RAG-enhanced LLM in a controlled environment so you can monitor its performance and make any necessary adjustments before full-scale rollout.
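A common way to run a controlled deployment is a gradual rollout. A fixed share of users goes to the RAG system, everyone else stays on the existing system, and each request is logged with the path that served it. The sketch assumes hashed user ids for stable bucketing. `in_rollout`, `handle`, and the log format are illustrative, and a real deployment would write to your monitoring system rather than a list.

```python
import hashlib
import time
from typing import Callable

def in_rollout(user_id: str, percent: float) -> bool:
    """Deterministically assign a user to one of 100 buckets; buckets below `percent` get RAG."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent

def handle(query: str, user_id: str, rag_answer: Callable[[str], str],
           baseline_answer: Callable[[str], str], percent: float,
           log: list[dict]) -> str:
    """Route a share of users to the RAG system and record which path served them."""
    use_rag = in_rollout(user_id, percent)
    start = time.perf_counter()
    answer = rag_answer(query) if use_rag else baseline_answer(query)
    log.append({
        "user": user_id,
        "path": "rag" if use_rag else "baseline",
        "latency_s": time.perf_counter() - start,
    })
    return answer
```

Hashing the user id keeps each user on the same path across requests, which makes their feedback easier to attribute. Raising `percent` step by step widens the rollout as confidence grows.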

By integrating RAG into your LLM projects, you can significantly improve the model’s effectiveness, making it more adaptable and better able to handle complex queries with greater precision. This approach extends the usefulness of LLMs and opens up new possibilities for applications that require a high degree of factual accuracy and contextual awareness.