You probably don’t have any trouble telling whether the person talking to you is sad or angry if they’re speaking a language you understand. But what if they’re speaking a language you don’t understand?

We set out to investigate a small part of this question by using machine learning to see whether a computer could tell the difference between sadness and other emotions (happy and angry) in speech clips, even when the clips came from a variety of languages.

We took a variety of pre-labeled speech data sets from several languages: Estonian, German, Italian, French, Greek, and English.

To make sure that any differences we measure between emotions come from the speech captured in the files rather than from properties of the files themselves, we checked that the bit depth, sample rate, and duration of the clips were relatively even across emotions, even when they differed from language to language.
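One way to run a property check like this is to read each file's header and compare the values per language and emotion. The following is a minimal sketch using Python's standard `wave` module; it assumes the clips are uncompressed PCM WAV files, and the helper names `clip_properties` and `summarize_by_emotion` are hypothetical.

```python
import wave
from collections import defaultdict
from statistics import mean

# Hypothetical helpers for illustration; assumes PCM WAV input.

def clip_properties(path):
    """Return (bit depth, sample rate in Hz, duration in seconds) for one WAV file."""
    with wave.open(path, "rb") as wf:
        bit_depth = wf.getsampwidth() * 8
        sample_rate = wf.getframerate()
        duration = wf.getnframes() / sample_rate
    return bit_depth, sample_rate, duration

def summarize_by_emotion(clips):
    """clips: iterable of (language, emotion, path) tuples.

    Returns {language: {emotion: {...}}} so the properties can be compared
    across emotions within each language.
    """
    groups = defaultdict(list)
    for language, emotion, path in clips:
        groups[(language, emotion)].append(clip_properties(path))
    summary = defaultdict(dict)
    for (language, emotion), props in groups.items():
        summary[language][emotion] = {
            "bit_depths": {p[0] for p in props},
            "sample_rates": {p[1] for p in props},
            "mean_duration": mean(p[2] for p in props),
        }
    return dict(summary)
```

Within one language, the bit depths and sample rates should match across emotions and the mean durations should be similar; differences between languages are acceptable.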

The bar graph below (Fig. 1) shows the overall distribution by emotion and language. While there are many more English clips, every language is relatively evenly split among the emotions.

All clips were trimmed so that they contained only speech, with no empty time at the beginning or end. Clips shorter than 1 second were removed from the data set, as we didn’t feel they had enough information for our purposes.
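The trimming and minimum-length rules can be sketched with NumPy as an energy threshold on short frames. The text does not say how silence was detected, so the 25 ms frame size, the -40 dB threshold relative to the loudest frame, and the function names below are all hypothetical choices.

```python
import numpy as np

# Hypothetical helpers; frame size and threshold are illustrative, not the authors' values.

def trim_silence(signal, sample_rate, frame_ms=25, threshold_db=-40.0):
    """Cut leading/trailing frames whose RMS level is more than |threshold_db|
    below the loudest frame in the clip."""
    signal = np.asarray(signal, dtype=np.float64)
    frame_len = max(1, int(sample_rate * frame_ms / 1000))
    n_frames = int(np.ceil(len(signal) / frame_len))
    if n_frames == 0:
        return signal
    rms = np.array([
        np.sqrt(np.mean(signal[i * frame_len:(i + 1) * frame_len] ** 2))
        for i in range(n_frames)
    ])
    if rms.max() == 0:
        return signal[:0]  # the clip is pure silence
    level_db = 20 * np.log10(np.maximum(rms, 1e-12) / rms.max())
    voiced = np.flatnonzero(level_db > threshold_db)
    start = voiced[0] * frame_len
    end = min(len(signal), (voiced[-1] + 1) * frame_len)
    return signal[start:end]

def long_enough(signal, sample_rate, min_seconds=1.0):
    """Keep only clips that last at least min_seconds after trimming."""
    return len(signal) / sample_rate >= min_seconds
```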

Instead of feeding the entire clip into a learning algorithm, as some other projects have done, we extracted features that summarized each clip as a whole and also section by section.

Research suggested that we look at aspects of energy, pitch, rhythm, and timbre. We chose several features for each, summarized in the image below.

In addition to computing these features over each whole clip, we divided every clip into 5 sections and computed the same features for each section. To capture changes over time, we also looked at the differences between sections.
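The whole-clip, per-section, and between-section layout can be sketched as follows. The real feature set covered energy, pitch, rhythm, and timbre; to keep the example dependency-free, RMS energy and zero-crossing rate stand in for all of them. Taking differences between consecutive sections is one reasonable reading of how changes within a clip could be captured, and the function and key names are hypothetical.

```python
import numpy as np

# Hypothetical helpers; RMS energy and zero-crossing rate stand in for the
# real energy/pitch/rhythm/timbre features.

def basic_features(segment):
    segment = np.asarray(segment, dtype=np.float64)
    rms = float(np.sqrt(np.mean(segment ** 2)))
    signs = np.signbit(segment)
    zcr = float(np.mean(signs[1:] != signs[:-1])) if len(segment) > 1 else 0.0
    return {"rms": rms, "zcr": zcr}

def clip_feature_vector(signal, n_sections=5):
    """Whole-clip features, per-section features, and differences between
    consecutive sections, flattened into one dict."""
    features = {f"whole_{k}": v for k, v in basic_features(signal).items()}
    sections = [basic_features(s) for s in np.array_split(np.asarray(signal), n_sections)]
    for i, feats in enumerate(sections):
        for k, v in feats.items():
            features[f"sec{i}_{k}"] = v
    for i in range(1, n_sections):
        for k in sections[i]:
            features[f"diff{i}_{k}"] = sections[i][k] - sections[i - 1][k]
    return features
```

With two base features this yields 2 whole-clip values, 10 per-section values, and 8 differences per clip; a richer base feature set multiplies in the same way.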

Clustering

Once we had our basic features, we also tried k-means and hierarchical clustering to see how our data would naturally group. We took the output (the cluster number) and added it to the feature set. When modeling, we tried both with and without these extra columns.
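Appending cluster labels as extra columns might look like this with scikit-learn's `KMeans` and `AgglomerativeClustering` (Ward linkage by default). The number of clusters, the standardization step, and the helper name `add_cluster_features` are assumptions, since the text does not give them.

```python
import numpy as np
from sklearn.cluster import AgglomerativeClustering, KMeans
from sklearn.preprocessing import StandardScaler

# Hypothetical helper; n_clusters is an illustrative choice.
def add_cluster_features(X, n_clusters=3, random_state=0):
    """Append a k-means label column and a hierarchical-clustering label column."""
    X_scaled = StandardScaler().fit_transform(X)
    kmeans_labels = KMeans(n_clusters=n_clusters, n_init=10,
                           random_state=random_state).fit_predict(X_scaled)
    hier_labels = AgglomerativeClustering(n_clusters=n_clusters).fit_predict(X_scaled)
    return np.column_stack([X, kmeans_labels, hier_labels])
```

The labels are appended as plain integers here; for a linear model such as logistic regression, one-hot encoding them is usually the safer choice, since cluster numbers have no natural order.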

We looked at the distribution of the data set by language and emotion, as in Fig. 1 above. 10% of the data from each language-emotion combination was randomly selected and moved from the training set to the test set.
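Moving 10% of each language-and-emotion group into the test set is a stratified split. Here is a minimal pure-Python sketch, assuming each clip is described by a dict with hypothetical `language` and `emotion` keys; rounding the per-group count is also our assumption.

```python
import random
from collections import defaultdict

# Hypothetical helper for illustration.
def stratified_split(records, test_fraction=0.10, seed=0):
    """Move test_fraction of every (language, emotion) group to the test set."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for rec in records:
        groups[(rec["language"], rec["emotion"])].append(rec)
    train, test = [], []
    for key in sorted(groups):  # sorted for reproducibility
        members = groups[key][:]
        rng.shuffle(members)
        n_test = round(len(members) * test_fraction)
        test.extend(members[:n_test])
        train.extend(members[n_test:])
    return train, test
```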

Logistic Regression

We started with a basic logistic regression and actually got pretty good results: over 93% accuracy.

The logistic model equation is:

$$P(Y_i = 1) = \frac{1}{1 + \exp\left(-\left(\beta_0 + \sum_{m \geq 1} \beta_m X_{im}\right)\right)}$$

where

P(Yᵢ=1) is the probability that the iᵗʰ clip is sad

βₘ is the coefficient corresponding to predictor m, with m = 0 referring to the intercept

Xᵢₘ is the value of the mᵗʰ predictor for the iᵗʰ clip
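To make the logistic model concrete, here is a minimal NumPy sketch that evaluates P(Yᵢ=1) for a single clip. The function name `prob_sad` and the convention of storing β₀ first in `beta` are our own illustrative choices.

```python
import numpy as np

def prob_sad(x, beta):
    """Hypothetical helper: P(Y_i = 1) for one clip.

    x    -- predictor values X_i1, X_i2, ... for clip i
    beta -- [beta_0, beta_1, beta_2, ...]; beta_0 is the intercept
    """
    x = np.asarray(x, dtype=np.float64)
    beta = np.asarray(beta, dtype=np.float64)
    z = beta[0] + np.dot(beta[1:], x)  # linear predictor (log-odds of "sad")
    return 1.0 / (1.0 + np.exp(-z))
```

A clip whose linear predictor is exactly 0 gets probability 0.5; positive values push it toward "sad".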

We standardized the data before fitting the model. We used a mean squared error loss function with an L1 penalty in order to “weed out” the predictors that were less relevant to the prediction.
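Standardizing and fitting an L1-penalized logistic regression can be sketched with scikit-learn. Note one swap: scikit-learn's `LogisticRegression` minimizes log-loss (cross-entropy) rather than mean squared error, so this sketch does not reproduce the loss described above. The penalty strength `C` and the helper name are hypothetical; a smaller `C` means a stronger penalty and more coefficients driven exactly to zero.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical helper; C is an illustrative value.
def fit_l1_logistic(X_train, y_train, C=0.5):
    """Standardize, then fit an L1-penalized logistic regression (log-loss)."""
    model = make_pipeline(
        StandardScaler(),
        LogisticRegression(penalty="l1", solver="liblinear", C=C),
    )
    model.fit(X_train, y_train)
    coefs = model.named_steps["logisticregression"].coef_.ravel()
    kept = np.flatnonzero(coefs)  # indices of predictors that survived the penalty
    return model, kept
```

`kept` lists the predictors whose coefficients are still non-zero after fitting.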

Overall, this left us with 105 important features (instead of the full list of over 600) and only 15 misclassified clips (out of 249).

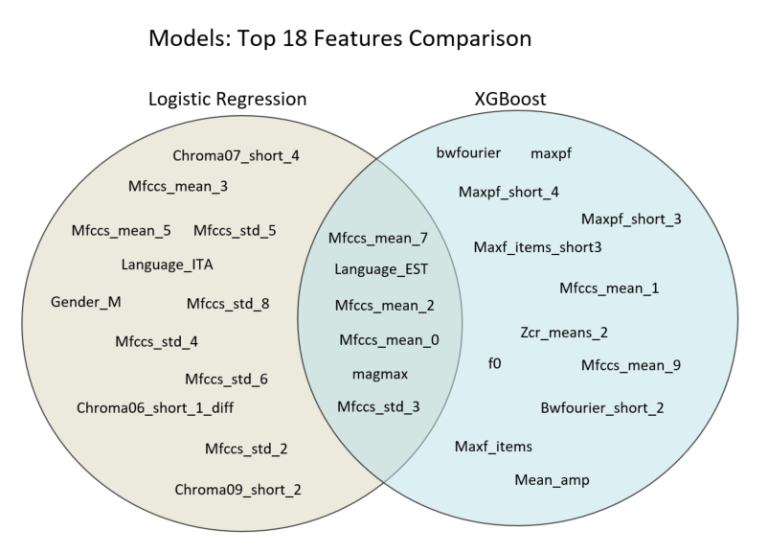

Of the top 18 most important features (the ones with the highest |β|), 14 came from the chroma or MFCC features (pitch/timbre).
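Ranking predictors by |β| and counting how many belong to the pitch/timbre families is a short computation. This sketch assumes feature names carry a `chroma` or `mfcc` prefix, which is a hypothetical naming scheme, as are the helper names.

```python
import numpy as np

# Hypothetical helpers; the name prefixes are an assumed convention.

def top_features(names, coefs, k=18):
    """Return the k feature names with the largest |beta|, largest first."""
    order = np.argsort(-np.abs(np.asarray(coefs, dtype=np.float64)))[:k]
    return [names[i] for i in order]

def count_pitch_timbre(feature_names, prefixes=("chroma", "mfcc")):
    """Count features whose name starts with one of the given prefixes."""
    return sum(name.startswith(prefixes) for name in feature_names)
```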

XGBoost

We also tried XGBoost, a method that uses decision trees. Each tree acts as a series of questions based on feature values (for example, “is MFCC mean 2 > 1?”). These questions divide the data into increasingly specific subsets, each of which pushes the prediction toward “sad” or “not sad.” The model builds many trees iteratively: each new tree is fit to the residual errors of all the trees built before it, improving the predictions.
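The tree-building loop can be illustrated with a stripped-down gradient-boosting sketch in NumPy. It is not XGBoost: each "tree" is a single-question stump, and leaves take the mean residual instead of XGBoost's regularized second-order (gradient and Hessian) leaf weights. All names are hypothetical. It does show the key idea, that each new stump is fit to the residuals left by the stumps before it.

```python
import numpy as np

# Hypothetical helpers illustrating gradient boosting with one-question trees (stumps).

def fit_stump(X, r):
    """Find the question 'is X[:, j] > t?' whose two leaves, each predicting
    its mean residual, best fit the residuals r in squared error."""
    best = None
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j])[:-1]:  # skip the max so both sides are non-empty
            right = X[:, j] > t
            left_val, right_val = r[~right].mean(), r[right].mean()
            err = np.sum((r - np.where(right, right_val, left_val)) ** 2)
            if best is None or err < best[0]:
                best = (err, j, t, left_val, right_val)
    return best[1:]

def boost(X, y, n_trees=50, learning_rate=0.3):
    """Each new stump is fit to the residuals y - p left by all earlier stumps."""
    F = np.zeros(len(y))  # running log-odds that each clip is sad
    trees = []
    for _ in range(n_trees):
        p = 1.0 / (1.0 + np.exp(-F))
        j, t, lv, rv = fit_stump(X, y - p)
        lv, rv = learning_rate * lv, learning_rate * rv  # shrink each stump's vote
        F += np.where(X[:, j] > t, rv, lv)
        trees.append((j, t, lv, rv))
    return trees

def predict_sad(trees, X):
    """Add up every stump's vote; positive log-odds means 'sad' (1)."""
    F = np.zeros(X.shape[0])
    for j, t, lv, rv in trees:
        F += np.where(X[:, j] > t, rv, lv)
    return (F > 0).astype(int)
```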

This method misclassified only 14 clips.

The plot below shows the SHAP values for some of the top features. A positive SHAP value indicates that the feature increases the chance that the clip is sad, and a negative value decreases it. The larger the magnitude of the SHAP value, the stronger that increase or decrease.
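SHAP values are Shapley values: each feature's average marginal contribution to the prediction over all orders in which features could be "revealed." For a handful of features they can be computed exactly by brute force, as in this sketch. It simplifies by filling "absent" features from a single baseline point (SHAP normally averages over background data), and the names are hypothetical; libraries such as `shap` use much faster algorithms for tree ensembles.

```python
import itertools
import math

# Hypothetical helper; brute force, so only practical for a few features.
def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x; 'absent' features take baseline values."""
    n = len(x)

    def value(subset):
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in itertools.combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi
```

The values always add up to f(x) - f(baseline), which is why each one can be read as pushing the prediction toward or away from "sad."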

Of the top 20 most important features, almost half were related to pitch, and another 6 came from the MFCCs.

The XGBoost model (with features that came from clustering) has slightly better predictive power than the logistic regression model (with or without clustering features).
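Converting the misclassification counts into accuracy shows how close the two models are. This assumes both were scored on the same 249-clip test set, which the text states only for the logistic regression; `accuracy` is a hypothetical helper.

```python
# Hypothetical helper: accuracy from a misclassification count (assumes 249 test clips).
def accuracy(n_misclassified, n_total=249):
    return 1 - n_misclassified / n_total

print(f"Logistic regression: {accuracy(15):.1%}")  # prints "Logistic regression: 94.0%"
print(f"XGBoost:             {accuracy(14):.1%}")  # prints "XGBoost:             94.4%"
```

A one-clip difference on a test set of this size is about 0.4 percentage points.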

For both types of models, the pitch and timbre features were the most important. Notably, whether the clip came from certain languages was also an important feature. This suggests that while pitch features may be important, there is a baseline difference between the languages. However, once calibrated to the language, it appears possible to differentiate emotions using similar features.

To fully test this, the next steps could be to remove language as a feature from the models, or to try to identify emotions in a language that was not used to build the model.
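The second next step, testing on a language the model never saw, is a leave-one-language-out evaluation. A minimal sketch, assuming a hypothetical `language` key per clip; scikit-learn's `LeaveOneGroupOut` splitter offers the same behavior for index arrays. Any language-indicator features should be dropped in this setup, since the held-out language's indicator would be all zeros during training.

```python
# Hypothetical helper for illustration.
def leave_one_language_out(records):
    """Yield (held_out_language, train, test); the test language never appears in train."""
    languages = sorted({rec["language"] for rec in records})
    for lang in languages:
        train = [rec for rec in records if rec["language"] != lang]
        test = [rec for rec in records if rec["language"] == lang]
        yield lang, train, test
```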