The original paper link is here. This study note focuses specifically on the model architecture.

BERT (Bidirectional Encoder Representations from Transformers) is a new language representation model. BERT is designed to pre-train deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all layers. The pre-trained BERT model can be fine-tuned with just one additional output layer to create state-of-the-art models for a wide range of tasks, such as question answering and language inference.

There are two existing strategies for applying pre-trained language representations to downstream tasks: feature-based and fine-tuning. The two approaches share the same objective function during pre-training, where they use unidirectional language models to learn general language representations.

- The feature-based approach, such as ELMo, uses task-specific architectures that include the pre-trained representations as additional features.

- The fine-tuning approach, such as GPT, introduces minimal task-specific parameters and is trained on the downstream tasks by simply fine-tuning all pre-trained parameters.

The existing techniques restrict the power of the pre-trained representations, especially for the fine-tuning approaches, because of the unidirectionality constraint. An example is the left-to-right architecture used in OpenAI GPT.

BERT alleviates the unidirectionality constraint by using a “masked language model” pre-training objective. The masked language model randomly masks some of the tokens from the input, and the objective is to predict the original vocabulary id of the masked word based only on its context. In addition, the authors use a “next sentence prediction” task that jointly pre-trains text-pair representations.

There are two steps in the framework: pre-training and fine-tuning. During pre-training, the model is trained on unlabeled data over different pre-training tasks. For fine-tuning, the BERT model is first initialized with the pre-trained parameters, and all of the parameters are fine-tuned using labeled data from the downstream tasks. Each downstream task has its own fine-tuned model, even though they are all initialized with the same pre-trained parameters.

A distinctive feature of BERT is its unified architecture across different tasks. There is minimal difference between the pre-trained architecture and the final downstream architecture.

Model architecture: BERT’s model architecture is a multi-layer bidirectional Transformer encoder. The number of layers (i.e., Transformer blocks) is denoted as L, the hidden size as H, and the number of self-attention heads as A. The base model has L=12, H=768, A=12, Total Parameters = 110M. The larger model has L=24, H=1024, A=16, Total Parameters = 340M.
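As a rough sanity check on the sizes quoted above, the parameter count can be estimated from L and H alone. The sketch below is an approximation under stated assumptions that do not come from this note: a feed-forward size of 4H, 512 position embeddings, 2 segment embeddings, LayerNorm after the embeddings and after each sub-layer, and a pooling layer on top of [CLS]. The function name `bert_param_count` is made up for illustration.

```python
def bert_param_count(num_layers, hidden, vocab_size=30000,
                     max_positions=512, num_segments=2):
    """Approximate weight + bias count for a BERT-style encoder."""
    ffn = 4 * hidden         # assumed feed-forward (intermediate) size
    layer_norm = 2 * hidden  # one scale and one shift vector

    # Token, position and segment embedding tables, followed by one LayerNorm.
    embeddings = (vocab_size + max_positions + num_segments) * hidden + layer_norm

    # Self-attention: query, key, value and output projections (H x H plus bias each).
    attention = 4 * (hidden * hidden + hidden)
    # Feed-forward network: H -> 4H -> H, each projection with a bias.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden)
    per_layer = attention + layer_norm + feed_forward + layer_norm

    # Assumed dense pooling layer applied to the [CLS] hidden vector.
    pooler = hidden * hidden + hidden

    return embeddings + num_layers * per_layer + pooler


base = bert_param_count(num_layers=12, hidden=768)
large = bert_param_count(num_layers=24, hidden=1024)
print(f"base:  {base / 1e6:.1f}M parameters")
print(f"large: {large / 1e6:.1f}M parameters")
```

Under these assumptions the estimates land close to the quoted 110M and 340M. Note that the number of attention heads A does not appear: splitting H across more heads changes how the projections are used, not how many weights they contain.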

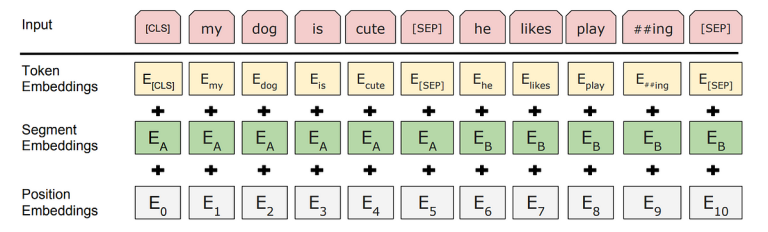

Input/Output Representation: The authors use WordPiece embeddings with a 30,000 token vocabulary. The first token of every sequence is always a special classification token ([CLS]). The final hidden state corresponding to this token is used as the aggregate sequence representation for classification tasks.

For a given token, its input representation is constructed by summing the corresponding token, segment and position embeddings. We denote the input embedding as E, the final hidden vector of the special [CLS] token as C, and the final hidden vector for the i-th input token as T_i.
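The summation described above can be sketched in a few lines of plain Python. Everything concrete here is an assumption for illustration: the toy vocabulary, the hidden size of 8, the random embedding tables, and the [SEP] separator token between the two segments (used in the paper but not covered in this note). A real implementation would also typically normalize the summed vectors, which is omitted.

```python
import random

random.seed(0)

HIDDEN = 8  # toy hidden size; BERT base uses H=768
VOCAB = ["[PAD]", "[CLS]", "[SEP]", "[MASK]", "my", "dog", "is", "cute",
         "he", "likes", "play", "##ing"]
MAX_POSITIONS = 16
NUM_SEGMENTS = 2


def random_table(rows, cols):
    """Stand-in for a learned embedding table."""
    return [[random.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


token_emb = random_table(len(VOCAB), HIDDEN)
segment_emb = random_table(NUM_SEGMENTS, HIDDEN)
position_emb = random_table(MAX_POSITIONS, HIDDEN)


def input_embeddings(tokens, segment_ids):
    """Return E: one vector per token = token + segment + position embedding."""
    rows = []
    for position, (token, segment) in enumerate(zip(tokens, segment_ids)):
        t = token_emb[VOCAB.index(token)]
        s = segment_emb[segment]
        p = position_emb[position]
        rows.append([a + b + c for a, b, c in zip(t, s, p)])
    return rows


tokens = ["[CLS]", "my", "dog", "is", "cute", "[SEP]",
          "he", "likes", "play", "##ing", "[SEP]"]
segment_ids = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

E = input_embeddings(tokens, segment_ids)
print(len(E), len(E[0]))  # 11 8
```

The same token gets a different input vector depending on where it appears and which segment it belongs to, which is how the encoder can tell sentence A from sentence B.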

Two tasks the model is pre-trained on:

● The masked language model (MLM) randomly masks some of the tokens from the input, and the objective is to predict the original vocabulary id of the masked word based only on its context. Unlike left-to-right language model pre-training, the MLM objective enables the representation to fuse the left and the right context, which allows us to pre-train a deep bidirectional Transformer.

- In all the experiments, the authors mask 15% of all WordPiece tokens in each sequence at random. A downside is that this creates a mismatch between pre-training and fine-tuning, since the [MASK] token does not appear during fine-tuning. To mitigate this, “masked” words are not always replaced with the actual [MASK] token. The training data generator chooses 15% of the token positions at random for prediction. If the i-th token is chosen, it is replaced with (1) the [MASK] token 80% of the time, (2) a random token 10% of the time, and (3) the unchanged i-th token 10% of the time.

- Then T_i, the final hidden vector for the i-th input token, is used to predict the original token with cross-entropy loss.

● A “next sentence prediction” (NSP) task that jointly pre-trains text-pair representations. Specifically, when choosing the sentences A and B for each pre-training example, 50% of the time B is the actual next sentence that follows A (labeled as IsNext) and 50% of the time it is a random sentence from the corpus (labeled as NotNext). The final hidden vector of the special [CLS] token is used for next sentence prediction.
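The data preparation for both tasks can be sketched as follows. This is a minimal illustration, not the authors' data generator. The function names are made up, and several details are assumptions: special tokens such as [CLS] and [SEP] are never selected for masking, at least one position is always selected, and the random NotNext sentence is drawn from a different document.

```python
import random

MASK = "[MASK]"
SPECIAL_TOKENS = ("[CLS]", "[SEP]")


def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Return (corrupted tokens, {position: original token}) for MLM training."""
    # Assumption: special tokens are excluded from the candidate positions.
    candidates = [i for i, tok in enumerate(tokens) if tok not in SPECIAL_TOKENS]
    num_to_predict = max(1, round(len(candidates) * mask_prob))
    chosen = rng.sample(candidates, num_to_predict)

    corrupted = list(tokens)
    labels = {}
    for i in chosen:
        labels[i] = tokens[i]  # the model must predict the original token here
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: leave the token unchanged
    return corrupted, labels


def make_nsp_example(documents, rng):
    """Build (sentence A, sentence B, label); needs 2+ documents of 2+ sentences."""
    doc_index = rng.randrange(len(documents))
    doc = documents[doc_index]
    a_index = rng.randrange(len(doc) - 1)
    sentence_a = doc[a_index]
    if rng.random() < 0.5:
        return sentence_a, doc[a_index + 1], "IsNext"
    # Assumption: the random sentence comes from a different document.
    other_docs = [d for j, d in enumerate(documents) if j != doc_index]
    sentence_b = rng.choice(rng.choice(other_docs))
    return sentence_a, sentence_b, "NotNext"


rng = random.Random(0)
vocab = ["my", "dog", "is", "cute", "he", "likes", "playing"]
tokens = ["[CLS]", "my", "dog", "is", "cute", "[SEP]", "he", "likes", "playing", "[SEP]"]
corrupted, labels = mask_tokens(tokens, vocab, rng)
print(corrupted, labels)

documents = [
    ["the man went to the store", "he bought a gallon of milk"],
    ["penguins are flightless birds", "they live in the southern hemisphere"],
]
print(make_nsp_example(documents, rng))
```

Only the positions recorded in `labels` contribute to the MLM loss. Because a chosen token is sometimes left unchanged or replaced by a random one, the model cannot rely on seeing [MASK] to know which positions it will be asked about.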

For each task, we simply plug the task-specific inputs and outputs into BERT and fine-tune all the parameters end-to-end.

At the output, the token representations are fed into an output layer for token-level tasks, such as sequence tagging or question answering, and the [CLS] representation is fed into an output layer for classification, such as entailment or sentiment analysis.
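To make the two kinds of output layer concrete, here is a small sketch that uses a single linear layer followed by a softmax in both cases. The hidden vectors C and T_i are random stand-ins for real encoder outputs, and the sizes, weights and function names are all assumptions for illustration. The per-token softmax shown here corresponds to sequence tagging; span-based question answering uses a different token-level head.

```python
import math
import random


def linear(weights, bias, x):
    """Compute W x + b for one input vector."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_sequence(C, weights, bias):
    """Sequence-level head: class probabilities from the [CLS] vector C."""
    return softmax(linear(weights, bias, C))


def tag_tokens(T, weights, bias):
    """Token-level head: one label distribution per token vector T_i."""
    return [softmax(linear(weights, bias, t)) for t in T]


rng = random.Random(0)
H, NUM_CLASSES, NUM_TAGS, SEQ_LEN = 8, 2, 3, 5


def random_matrix(rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


C = [rng.uniform(-1, 1) for _ in range(H)]  # stand-in for the [CLS] output
T = random_matrix(SEQ_LEN, H)               # stand-ins for T_1 ... T_5

class_probs = classify_sequence(C, random_matrix(NUM_CLASSES, H), [0.0] * NUM_CLASSES)
tag_probs = tag_tokens(T, random_matrix(NUM_TAGS, H), [0.0] * NUM_TAGS)
print(len(class_probs), len(tag_probs), len(tag_probs[0]))  # 2 5 3
```

In both cases the only new parameters are the weights and bias of the output layer; everything underneath is the pre-trained encoder, which is fine-tuned together with the new layer.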