Large language models (LLMs) such as GPT-4 are considered technological marvels capable of successfully passing the Turing test. But is this really the case?

ChatGPT has risen to great heights in the field of artificial intelligence (AI). It can seem smart, fast and impressive. It skillfully demonstrates apparent intelligence, engages in conversations with people, mimics human communication, and even passes law exams. However, in some cases it still provides completely false information.

So, can ChatGPT actually pass the Turing test by producing text indistinguishable from a human response?

Two researchers from the University of California San Diego explored this question and uncovered intriguing results. Cameron Jones, who specializes in language, semantics and machine learning, and Benjamin Bergen, a professor of cognitive science, conducted an online Turing test. In this test, participants tried to determine whether the respondent was an AI or a human. GPT-3.5 or GPT-4 played the role of the artificial intelligence.

The experiment was an online test consisting of conversations between some 650 participants and GPT models. Participants were asked to identify who they were talking to: a human or an AI. Various configurations of GPT-3.5 and GPT-4 were used throughout the experiment. For the most part, the researchers studied the prompt, which directed the LLM toward a specific topic and style of conversation. The prompt defined the nature of the interaction and the persona of the agent, and it was varied to see how it affected the outcome.

Each dialogue had a 5-minute time limit, and each message was limited to 300 characters. In total, 652 participants took part in the experiment and completed 1,810 “games”. After dubious results were filtered out, the number of “games” was reduced to 1,405.

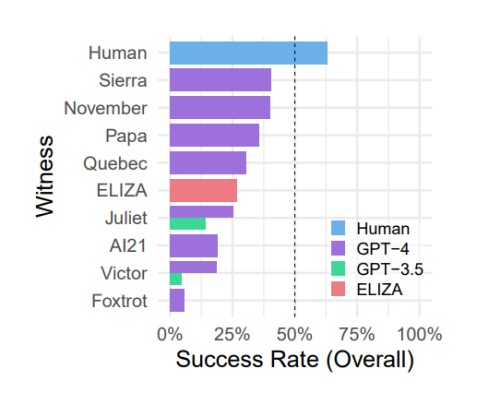

The findings revealed that the GPT-4 models managed to deceive participants 41% of the time, while the GPT-3.5 models succeeded only 5–14% of the time. For comparison, the much older ELIZA program (1966) was judged to be a human in 27% of cases. Another interesting finding: human participants managed to convince other participants that they were not machines only 63% of the time.

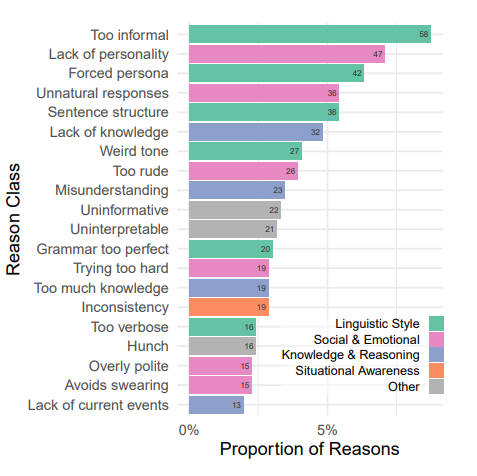

Particularly interesting were the reasons behind the participants' decisions, that is, what led them to identify an AI as an AI. A conversational style that seemed too formal or too informal made participants uneasy. Likewise, answers that were too wordy or too short, an odd conversational tone, or perfect grammar became important factors in deciding whether the interlocutor was human or machine. It is also worth noting that a lack of knowledge turned out to be a more important signal than an excess of it, which suggests that AI models still have more to learn. Participants were also sensitive to generic responses.

The researchers concluded that, based on this experiment, it cannot be claimed that GPT-4 passed the Turing test. Nevertheless, the 41% success rate indicates that using AI for deception is becoming increasingly realistic. This is especially relevant in situations where human interlocutors are less alert to the possibility that they are talking to a machine.

AI models adept at imitating human responses could have far-reaching social and economic impacts. It will become increasingly important to monitor AI models, identify the factors that lead to deception, and develop strategies to mitigate it. At the same time, the researchers emphasize that the Turing test remains an important tool for evaluating machine dialogue and for understanding how humans interact with artificial intelligence.

It is outstanding how rapidly we now have reached a stage the place technical methods can compete with people in communication. Regardless of doubts about GPT-4’s success on this check, its outcomes point out that we’re getting nearer to creating AI that may compete with people in conversations.

Read more about the study here.