Note: This post complements part 1 of the Variational Autoencoders (VAEs) event series.

Table of Contents:

- Introduction to Autoencoders

- Reconstructing MNIST

- Wrapping Up

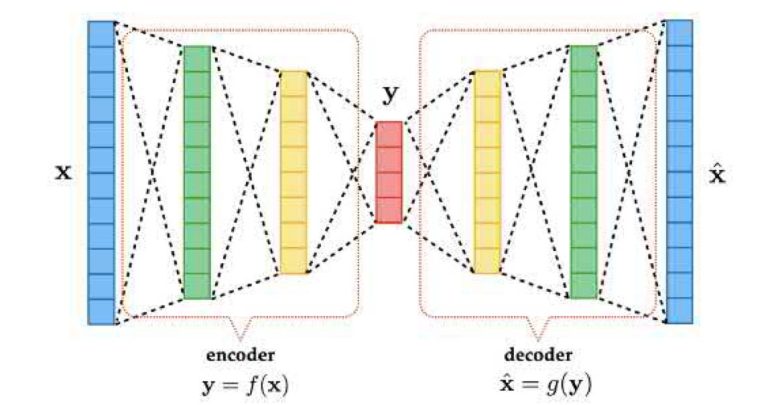

An autoencoder is a machine that sees something, remembers the main parts, and then tries to draw it again.

For example, an autoencoder takes a handwritten digit image (high-dimensional data) and compresses it through an encoder into a smaller set of values (latent representation) that captures the key features of the digit. Then, a decoder uses this latent representation to recreate an image (decoded image) that closely resembles the original digit.

An autoencoder has three main parts that work together to learn hidden patterns in data:

- Encoder: This acts like a bottleneck. It takes the input data (like an image of a handwritten digit) and shrinks it down to a smaller representation that lives in the latent space. This representation captures the most important features of the data, like the shape and position of the digit in the image.

- Latent Space: This is the compressed version of the original data created by the encoder. It's like a secret code that holds the essence of the input. In our example, it might contain information about the curves and lines that make up the digit.

- Decoder: This acts like an artist. It takes the compressed code from the latent space and tries to rebuild the original data (like recreating the image of the digit). By comparing the reconstructed data to the original, the autoencoder learns to improve its encoding and decoding abilities.

A latent representation is like a secret code for your data, but in a more compact form. It holds the essence of the original data.

Imagine taking a picture of some cute dogs and cats, and then summarizing them with just the shapes of their ears or their little whiskers: that's kind of like a latent representation. It keeps the important details but throws away unnecessary information.

You know all the essentials now, so it's time to code. Your task is to reconstruct the MNIST dataset using PyTorch and TorchVision.

Step 1: Build the structure of your model

Let's start by importing all the necessary modules you'll be using for this task.

TorchVision is part of PyTorch's ecosystem that makes dealing with image-related tasks easier. You'll use it to perform the needed transformations on your dataset.

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torchvision import datasets, transforms

Time to define your model structure.

In PyTorch, you define the structure of your model using classes.

You're creating a class called Autoencoder that inherits from nn.Module, the base class for all neural network modules in PyTorch.

    class Autoencoder(nn.Module):
        def __init__(self, encoding_dim):
            super().__init__()
            # Encoder: compress a flattened 28x28 image (784 values) to encoding_dim values
            self.encoder = nn.Sequential(
                nn.Linear(784, encoding_dim),
                nn.ReLU()
            )
            # Decoder: expand the encoded values back to 784 pixel intensities in (0, 1)
            self.decoder = nn.Sequential(
                nn.Linear(encoding_dim, 784),
                nn.Sigmoid()
            )

        def forward(self, x):
            encoded = self.encoder(x)
            decoded = self.decoder(encoded)
            return decoded

The __init__ method sets up the layers of the model.

The encoding_dim parameter is the size of the encoded (compressed) representation. You'll set it in the next cell.

Let's dig deeper and understand what's happening in self.encoder and self.decoder.

Encoder:

- self.encoder is a sequence of layers that compresses the input.

- nn.Linear(784, encoding_dim) creates a fully connected layer that transforms the input from 784 dimensions (the 28 x 28 pixels of a flattened MNIST image) to encoding_dim dimensions.

- nn.ReLU() applies the ReLU activation function, which introduces non-linearity to the model.

Decoder:

- self.decoder is a sequence of layers that reconstructs the input from the encoded representation.

- nn.Linear(encoding_dim, 784) creates a fully connected layer that transforms the encoded representation back to 784 dimensions (the original size).

- `nn.Sigmoid()` applies the Sigmoid activation function, which squashes the output to be between 0 and 1, suitable for image data whose pixel values are scaled to that range.
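To make the two activations more concrete, here is a small sketch that applies each one to the same handful of numbers. The input values are arbitrary and chosen only for illustration.

```python
import torch
import torch.nn as nn

x = torch.tensor([-2.0, 0.0, 3.0])

relu_out = nn.ReLU()(x)        # negatives become 0, positives pass through unchanged
sigmoid_out = nn.Sigmoid()(x)  # every value is mapped into the open interval (0, 1)

print(relu_out)
print(sigmoid_out)
```

ReLU leaves positive values unbounded, which is fine for the hidden encoding, while Sigmoid keeps the decoder's output in the same range as normalized pixel intensities.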

Forward Pass: The forward method defines how data passes through the model.

- encoded = self.encoder(x): passes the input x through the encoder to get the encoded representation.

- decoded = self.decoder(encoded): passes the encoded representation through the decoder to get the reconstructed output.

- return decoded: returns the reconstructed output.
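One way to sanity-check the forward pass is to push a dummy batch through an untrained model and inspect the shapes. The sketch below restates the model compactly so it runs on its own. The values `encoding_dim=32` and a batch of 16 random inputs are assumptions chosen for illustration, not settings from this post.

```python
import torch
import torch.nn as nn

class Autoencoder(nn.Module):
    def __init__(self, encoding_dim):
        super().__init__()
        self.encoder = nn.Sequential(nn.Linear(784, encoding_dim), nn.ReLU())
        self.decoder = nn.Sequential(nn.Linear(encoding_dim, 784), nn.Sigmoid())

    def forward(self, x):
        return self.decoder(self.encoder(x))

model = Autoencoder(encoding_dim=32)  # 32 is an arbitrary choice for this sketch
batch = torch.rand(16, 784)           # 16 fake flattened images with values in [0, 1)

with torch.no_grad():                 # no gradients needed for a shape check
    encoded = model.encoder(batch)    # compressed representation
    decoded = model(batch)            # reconstruction with the same shape as the input

# Each Linear layer holds in_features * out_features weights plus out_features biases.
n_params = sum(p.numel() for p in model.parameters())
print(encoded.shape, decoded.shape, n_params)
```

The encoded batch has 32 values per image, the decoded batch is back to 784 per image, and because the model is untrained the reconstruction will not resemble the input yet.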