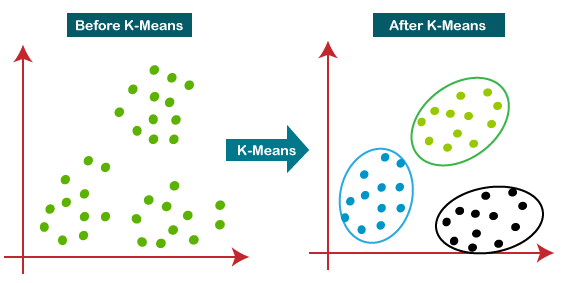

K-Means clustering is one of the most popular unsupervised machine learning algorithms. It is used to partition a dataset into distinct groups (clusters) based on the similarity of data points. Each data point belongs to the cluster with the nearest mean (centroid), which serves as the prototype of that cluster.

The K-Means algorithm aims to minimize the variance within each cluster, measured as the sum of squared distances between each point and its cluster's centroid. It does this by iteratively refining the centroid positions until they settle, which yields a local (not necessarily global) optimum. Here's a step-by-step explanation of how K-Means clustering works:

- Choose the Number of Clusters (K): Decide how many clusters (K) you want to identify in the data.

- Initialize Centroids: Randomly select K points from the dataset as the initial centroids. These points act as the initial centers of the clusters.

- Assign Data Points to Clusters: Assign each data point to the nearest centroid, forming K clusters.

- Update Centroids: Compute each new centroid as the mean of all data points assigned to that cluster.

- Repeat: Repeat the assignment and update steps until the centroids no longer change significantly or a maximum number of iterations is reached.
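The five steps above can be sketched in plain Python with no third-party libraries. This is a minimal illustration of the standard assign-then-update loop (often called Lloyd's algorithm), assuming points are equal-length tuples of numbers; the function names, the tolerance `tol`, and the empty-cluster policy are illustrative choices, not part of any particular library.

```python
import math
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two points of equal length."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def assign_clusters(points, centroids):
    """Step 3: index of the nearest centroid for every point."""
    return [
        min(range(len(centroids)), key=lambda j: squared_distance(p, centroids[j]))
        for p in points
    ]


def kmeans(points, k, max_iters=100, tol=1e-6, seed=None):
    """Minimal K-Means on a list of numeric tuples; returns (centroids, labels)."""
    rng = random.Random(seed)
    # Step 2: use K distinct data points, chosen at random, as initial centroids.
    centroids = [tuple(p) for p in rng.sample(points, k)]

    for _ in range(max_iters):
        labels = assign_clusters(points, centroids)

        # Step 4: move each centroid to the mean of the points assigned to it.
        new_centroids = []
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                # Empty cluster: keep the previous centroid (one simple policy of several).
                new_centroids.append(centroids[j])

        # Step 5: stop once no centroid moved farther than `tol`.
        shift = max(math.sqrt(squared_distance(old, new))
                    for old, new in zip(centroids, new_centroids))
        centroids = new_centroids
        if shift <= tol:
            break

    # Final assignment so the labels match the returned centroids.
    return centroids, assign_clusters(points, centroids)


data = [(1.0, 1.0), (1.5, 2.0), (1.2, 0.8), (8.0, 8.0), (8.5, 9.0), (9.0, 8.2)]
centroids, labels = kmeans(data, k=2, seed=42)
print("centroids:", centroids)
print("labels:", labels)
```

Which cluster gets label 0 or 1 depends on the random start; only the grouping itself is meaningful. Libraries such as scikit-learn add smarter initialization and vectorized arithmetic on top of the same basic idea.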

Choosing the Number of Clusters (K)

Choosing the right number of clusters is crucial for the performance of the K-Means algorithm. Common methods for determining the optimal number of clusters include:

- Elbow Method: Plot the within-cluster sum of squares (WCSS) against the number of clusters and look for an “elbow” point where the WCSS starts to decrease more slowly.

- Silhouette Score: Measures how similar a data point is to its own cluster compared with other clusters. The score ranges from -1 to 1, and a higher average silhouette score indicates better-defined clusters.
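Both criteria can be computed with a short, dependency-free sketch, assuming Euclidean distance and Python 3.8+ (for `math.dist`). `wcss` sums each point's squared distance to its assigned centroid; `silhouette_score` averages s = (b - a) / max(a, b) over all points, where a is the mean distance to the other points in the same cluster and b is the mean distance to the points of the nearest other cluster. The compact `kmeans` helper and the sample data are illustrative assumptions.

```python
import math
import random


def kmeans(points, k, iters=100, seed=0):
    """Compact K-Means (random data points as initial centroids); returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    for _ in range(iters):
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        new = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            new.append(tuple(sum(a) / len(members) for a in zip(*members)) if members else centroids[j])
        if new == centroids:  # assignments have stopped changing
            break
        centroids = new
    labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
    return centroids, labels


def wcss(points, centroids, labels):
    """Within-cluster sum of squares: squared distance of each point to its centroid."""
    return sum(math.dist(p, centroids[lab]) ** 2 for p, lab in zip(points, labels))


def silhouette_score(points, labels):
    """Mean silhouette coefficient (Euclidean distance); needs at least two clusters."""
    clusters = set(labels)
    if len(clusters) < 2:
        raise ValueError("silhouette score needs at least two non-empty clusters")
    scores = []
    for i, p in enumerate(points):
        own = [math.dist(p, q) for j, q in enumerate(points) if labels[j] == labels[i] and j != i]
        if not own:
            scores.append(0.0)  # convention: a point alone in its cluster scores 0
            continue
        a = sum(own) / len(own)  # mean distance within its own cluster
        b = min(  # mean distance to the nearest other cluster
            sum(math.dist(p, q) for j, q in enumerate(points) if labels[j] == c)
            / labels.count(c)
            for c in clusters if c != labels[i]
        )
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)


# Three hypothetical, well-separated groups of 2-D points.
data = [(1.0, 1.0), (1.5, 2.0), (1.2, 0.8),
        (8.0, 8.0), (8.5, 9.0), (9.0, 8.2),
        (1.0, 9.0), (1.5, 8.5), (0.8, 9.2)]

for k in range(1, 6):
    centroids, labels = kmeans(data, k)
    line = f"K={k}  WCSS={wcss(data, centroids, labels):8.2f}"
    if k >= 2:  # the silhouette score is undefined for a single cluster
        line += f"  silhouette={silhouette_score(data, labels):.3f}"
    print(line)
```

Because each `kmeans` call uses a single random start, an individual K can land in a poor local optimum, so in practice the curve is usually drawn from the best of several runs per K.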

Distance Metrics

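The algorithm relies on a distance metric to measure the similarity between data points. The most common choice is Euclidean distance, but other metrics such as Manhattan or cosine distance can be used depending on the application. Note that the mean-based centroid update in standard K-Means minimizes squared Euclidean distance; with other metrics, variants such as K-Medians (Manhattan) or spherical K-Means (cosine) pair the metric with a matching centroid update.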
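The three metrics named above can be written in a few lines of plain Python. This sketch only illustrates the metrics themselves and the nearest-centroid choice in the assignment step; the sample points and the centroid names "A" and "B" are hypothetical.

```python
import math


def euclidean(a, b):
    """Straight-line (L2) distance."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    """Sum of absolute coordinate differences (L1 distance)."""
    return sum(abs(x - y) for x, y in zip(a, b))


def cosine_distance(a, b):
    """1 - cosine similarity: compares direction only and ignores vector length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_a * norm_b)


print(euclidean((0.0, 0.0), (3.0, 4.0)))              # 5.0
print(manhattan((0.0, 0.0), (3.0, 4.0)))              # 7.0
print(cosine_distance((1.0, 2.0), (2.0, 4.0)))        # ~0.0 (same direction)
print(cosine_distance((1.0, 0.0), (0.0, 1.0)))        # 1.0 (orthogonal)

# The metric can change which centroid counts as "nearest" during assignment.
point = (0.0, 0.0)
centroids = {"A": (3.0, 3.0), "B": (0.0, 4.5)}
for metric in (euclidean, manhattan):
    nearest = min(centroids, key=lambda name: metric(point, centroids[name]))
    print(metric.__name__, "->", nearest)  # euclidean -> A, manhattan -> B
```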

Advantages of K-Means

- Simplicity: Easy to understand and implement.

- Scalability: Efficient on large datasets, because the cost of each iteration grows linearly with the number of data points.

- Speed: Typically converges within a relatively small number of iterations.

Limitations of K-Means

- Choosing K: The number of clusters must be specified in advance.

- Sensitivity to Initialization: Different randomly chosen initial centroids can lead to different clustering results, some noticeably worse than others.

- Outliers: Because each centroid is a mean, outliers and noise in the data can pull centroids away from the bulk of their clusters.

- Non-Linear Boundaries: The algorithm assumes clusters are roughly spherical and similar in size, which may not hold for elongated or irregularly shaped clusters.
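Two of these limitations can be reproduced with tiny, hand-picked inputs. The sketch below (plain Python; the helper names and data are hypothetical) runs the same K-Means update loop from two different explicit starting centroids on four points, and then shows how a single outlier moves a mean, which is what a K-Means centroid is, far more than a median.

```python
from statistics import mean, median


def sq_dist(a, b):
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def assign(points, centroids):
    """Index of the nearest centroid for each point."""
    return [min(range(len(centroids)), key=lambda j: sq_dist(p, centroids[j])) for p in points]


def lloyd(points, initial_centroids, max_iters=100):
    """Run K-Means from the given starting centroids; returns (centroids, wcss)."""
    centroids = [tuple(c) for c in initial_centroids]
    for _ in range(max_iters):
        labels = assign(points, centroids)
        new = []
        for j, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == j]
            new.append(tuple(sum(a) / len(members) for a in zip(*members)) if members else old)
        if new == centroids:  # assignments stopped changing
            break
        centroids = new
    labels = assign(points, centroids)
    return centroids, sum(sq_dist(p, centroids[lab]) for p, lab in zip(points, labels))


# Sensitivity to initialization: four points forming a left pair and a right pair.
square = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
_, good_wcss = lloyd(square, [(0.0, 0.0), (10.0, 0.0)])  # ends as left pair vs right pair
_, bad_wcss = lloyd(square, [(0.0, 0.0), (0.0, 1.0)])    # gets stuck as bottom row vs top row
print(good_wcss, bad_wcss)  # 1.0 100.0

# Outliers: one extreme value drags the mean; the median barely moves.
cluster = [10.0, 11.0, 12.0, 13.0, 14.0]
with_outlier = cluster + [100.0]
print(mean(cluster), mean(with_outlier))      # 12.0, then about 26.67
print(median(cluster), median(with_outlier))  # 12.0, then 12.5
```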

Tips for Better Results

- Multiple Initializations: Run the algorithm several times with different initial centroids and keep the result with the lowest WCSS.

- Preprocessing Data: Standardize or normalize the data so that each feature contributes comparably to the distance calculation.

- Elbow Method: Use the elbow method to determine the optimal number of clusters.
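The first two tips can be combined in a short sketch: z-score standardization of each feature, followed by several K-Means runs from different random starts, keeping the run with the lowest WCSS. The customer data (income in dollars, age in years), the helper names, and the `n_init`/`seed` parameters are hypothetical illustrations, not a specific library's API.

```python
import random
import statistics


def standardize(points):
    """Z-score each feature: subtract its mean and divide by its population std dev."""
    columns = list(zip(*points))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) or 1.0 for col in columns]  # avoid dividing by 0
    return [tuple((x - m) / s for x, m, s in zip(p, means, stds)) for p in points]


def sq_dist(a, b):
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def assign(points, centroids):
    """Index of the nearest centroid for each point."""
    return [min(range(len(centroids)), key=lambda j: sq_dist(p, centroids[j])) for p in points]


def kmeans_once(points, k, rng, max_iters=100):
    """One K-Means run from K random data points; returns (wcss, centroids, labels)."""
    centroids = rng.sample(points, k)
    for _ in range(max_iters):
        labels = assign(points, centroids)
        new = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            new.append(tuple(sum(a) / len(members) for a in zip(*members)) if members else centroids[j])
        if new == centroids:
            break
        centroids = new
    labels = assign(points, centroids)
    return sum(sq_dist(p, centroids[lab]) for p, lab in zip(points, labels)), centroids, labels


def kmeans_best_of(points, k, n_init=10, seed=0):
    """Repeat K-Means n_init times from different random starts; keep the lowest WCSS."""
    rng = random.Random(seed)
    return min((kmeans_once(points, k, rng) for _ in range(n_init)), key=lambda run: run[0])


# Hypothetical customers: (annual income in dollars, age in years).
customers = [(30000, 25), (30500, 62), (31000, 27), (29500, 60)]
_, _, raw_labels = kmeans_best_of(customers, k=2, n_init=20)
_, _, scaled_labels = kmeans_best_of(standardize(customers), k=2, n_init=20)
print("raw:   ", raw_labels)     # rows 0 and 3 together: income differences dominate
print("scaled:", scaled_labels)  # rows 0 and 2 together: younger vs older customers
```

Without scaling, a few hundred dollars of income outweighs decades of age in the distance calculation; after standardization, both features are measured in standard deviations and the age structure becomes visible.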

Applications of K-Means

- Customer Segmentation: Group customers based on purchasing behavior.

- Image Compression: Reduce the number of colors in an image by replacing each pixel's color with the centroid of its color cluster.

- Document Clustering: Group similar documents for topic extraction.

- Anomaly Detection: Identify outliers as points that lie far from every cluster centroid.
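For the anomaly-detection use case, one simple approach is to flag points whose distance to the nearest centroid exceeds a threshold. The sketch assumes the centroids come from an already-fitted K-Means model; the centroid values, the new points, the helper names, and the threshold of 2.0 are all hypothetical.

```python
import math


def nearest_centroid_distance(point, centroids):
    """Euclidean distance from a point to its closest centroid."""
    return min(math.dist(point, c) for c in centroids)


def flag_anomalies(points, centroids, threshold):
    """Return the points whose nearest-centroid distance exceeds `threshold`."""
    return [p for p in points if nearest_centroid_distance(p, centroids) > threshold]


# Hypothetical centroids, e.g. from a K-Means model fitted on historical data.
centroids = [(1.0, 1.0), (8.0, 8.0)]
new_points = [(1.2, 0.9), (7.5, 8.3), (4.5, 4.5), (15.0, 1.0)]

# The threshold is an assumption; one option is to derive it from the distances
# of the training points to their own centroids.
print(flag_anomalies(new_points, centroids, threshold=2.0))  # [(4.5, 4.5), (15.0, 1.0)]
```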

Conclusion

K-Means clustering is a powerful and widely used algorithm for partitioning data into meaningful groups. Despite some limitations, its simplicity and efficiency make it a go-to choice for many clustering tasks. By understanding and applying the steps and techniques discussed, you can use K-Means clustering effectively to uncover hidden patterns and insights in your data.

Written by B. Akhil

LinkedIn: Bolla Akhil