Neural networks are the backbone of deep learning, loosely mimicking the way the human brain processes information. At their core, neural networks consist of layers of interconnected nodes, or neurons, that work together to learn from data and make predictions. Here are the key concepts of a neural network:

Neurons: Neurons are the basic units of a neural network. Each neuron receives input signals, processes them using an activation function, and produces an output signal.

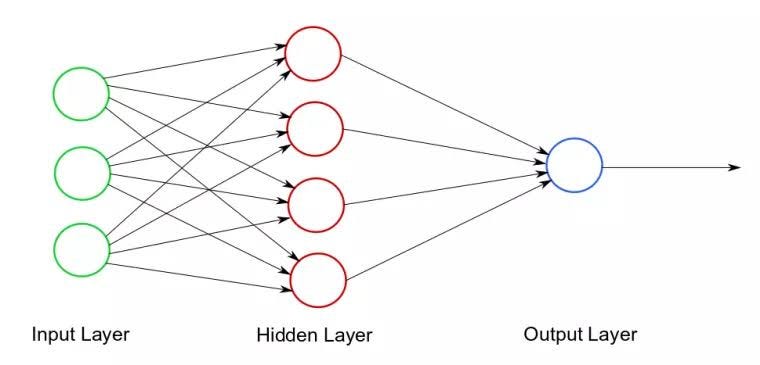

Layers: Neurons are organized into layers within a neural network. The input layer receives the initial data, the output layer produces the final output, and hidden layers process the data in between. A network can have as many hidden layers as the complexity of your model requires.

Weights: Each connection between neurons has a weight associated with it, which determines the strength of the connection. During training, these weights are adjusted to minimize the error in the network’s predictions.

Activation Function: The activation function of a neuron determines its output based on the weighted sum of its inputs. Common activation functions include the sigmoid function, the tanh function, and the ReLU (Rectified Linear Unit) function.
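
As a minimal sketch of the ideas so far, here is a single neuron in plain Python: it takes a weighted sum of its inputs and passes the result through an activation function. The function names and the `bias` term are illustrative assumptions, not something defined earlier in this post.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Squash any real number into the range (-1, 1)."""
    return math.tanh(z)


def relu(z):
    """Pass positive values through unchanged and clip negatives to zero."""
    return max(0.0, z)


def neuron_output(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias (assumed here), then the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)
```

For example, `neuron_output([1.0, 2.0], [0.5, 0.25], -1.0, sigmoid)` has a weighted sum of exactly zero, so the sigmoid returns 0.5.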

Feedforward: In a feedforward neural network, data flows from the input layer through the hidden layers to the output layer, with each layer performing calculations based on the previous layer’s output.
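
A feedforward pass can be sketched as a loop in which each layer's output becomes the next layer's input. This is only an illustration under some assumptions: every layer uses a sigmoid activation, each layer is stored as a hypothetical `(weights, biases)` pair, and `weights` holds one row per neuron.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """Compute one layer: each row of weights belongs to one neuron."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def feedforward(inputs, layers):
    """Push the inputs through every layer in order, with no feedback loops."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# A tiny network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
# The weights are all zero purely to keep the example easy to check by hand.
layers = [
    ([[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]], [0.0, 0.0, 0.0]),  # hidden layer
    ([[0.0, 0.0, 0.0]], [0.0]),                               # output layer
]
output = feedforward([1.0, 2.0], layers)
```

With all-zero weights every neuron sees a weighted sum of zero, so the single output is 0.5; a trained network would have learned non-zero weights instead.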

Backpropagation: This is a key algorithm used to train neural networks. It works by calculating the error between the network’s output and the expected output, propagating that error backward through the layers to work out how much each weight contributed to it, and then adjusting the weights of the connections to minimize this error.
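
To make the backward pass concrete, here is a sketch on the smallest network that still needs the chain rule: one input, one sigmoid hidden neuron, and one linear output. The squared-error loss, the absence of biases, and all names here are assumptions chosen to keep the example short.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w1, w2):
    """Forward pass: input -> sigmoid hidden neuron -> linear output."""
    h = sigmoid(w1 * x)
    y = w2 * h
    return h, y


def loss(x, target, w1, w2):
    """Half squared error between the network output and the target."""
    _, y = forward(x, w1, w2)
    return 0.5 * (y - target) ** 2


def backward(x, target, w1, w2):
    """Apply the chain rule from the output back toward the input."""
    h, y = forward(x, w1, w2)
    d_y = y - target                   # dL/dy
    grad_w2 = d_y * h                  # dL/dw2
    d_h = d_y * w2                     # dL/dh, error passed back to the hidden neuron
    grad_w1 = d_h * h * (1.0 - h) * x  # dL/dw1, using sigmoid'(z) = h * (1 - h)
    return grad_w1, grad_w2


def gradient_step(x, target, w1, w2, learning_rate=0.1):
    """Move each weight a small step against its gradient."""
    grad_w1, grad_w2 = backward(x, target, w1, w2)
    return w1 - learning_rate * grad_w1, w2 - learning_rate * grad_w2
```

A single call to `gradient_step` on one example should lower the loss slightly; training repeats this step many times over many examples.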

Training: Training a neural network involves presenting it with a dataset and adjusting its weights through backpropagation to minimize the error in its predictions. This process is typically repeated over multiple passes through the dataset, called epochs, until the network’s performance reaches a satisfactory level.
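
The training loop itself can be sketched with the simplest possible model: a single linear neuron with no hidden layers, where backpropagation reduces to one gradient computation per example. The toy dataset (points on the line y = 2x + 1), the learning rate, and the epoch count are all assumptions picked for illustration.

```python
def train_linear_neuron(samples, epochs=500, learning_rate=0.05):
    """Fit prediction = weight * x + bias by repeated passes over the samples."""
    weight, bias = 0.0, 0.0
    history = []  # mean error per epoch, to watch the training progress
    for _ in range(epochs):
        total_error = 0.0
        for x, target in samples:
            prediction = weight * x + bias
            error = prediction - target
            total_error += 0.5 * error ** 2
            # Gradient of the half squared error with respect to each parameter.
            weight -= learning_rate * error * x
            bias -= learning_rate * error
        history.append(total_error / len(samples))
    return weight, bias, history


# Toy dataset: five points that lie exactly on y = 2x + 1.
samples = [(float(x), 2.0 * x + 1.0) for x in range(5)]
weight, bias, history = train_linear_neuron(samples)
```

After training, `weight` and `bias` should sit close to 2 and 1, and the values in `history` should shrink from the first epoch to the last. Real networks follow the same loop, only with more layers and more data.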

- Feedforward Neural Networks (FFNN): These are like a relay race, where each runner (neuron) passes the baton (data) forward without any feedback loops. FFNNs are good for tasks like image recognition or predicting stock prices, where the data flows in one direction through the network.

- Recurrent Neural Networks (RNN): RNNs are more like a conversation, where the context of the current discussion is influenced by what was said before. They’re great for tasks like speech recognition or language translation, where the order of the data is important.

- Convolutional Neural Networks (CNN): CNNs are like a spotlight that focuses on different parts of an image to understand it better. They’re commonly used in image processing tasks, like identifying objects in photos or analyzing medical images.

- Generative Adversarial Networks (GAN): GANs pit two networks against each other: a generator that tries to create realistic data (like images), and a discriminator that tries to tell whether the data is real or fake. They’re used for generating realistic images, videos, and even music.

- Long Short-Term Memory (LSTM) Networks: LSTMs are like a sticky note that helps us remember important information over time. They’re a kind of RNN, often used to keep track of context and avoid “forgetting” earlier parts of a sequence.

- Autoencoder: This is another type of neural network, made up of an encoder and a decoder; it is usually treated as an unsupervised machine learning algorithm. Autoencoders are like a magician’s trick, where they try to recreate the original input from a compressed representation. They’re used for tasks like dimensionality reduction or anomaly detection.
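
Two of the ideas in the list above are easy to sketch in a few lines: the sliding "spotlight" of a CNN and the carried-over context of an RNN. Both functions below are simplified one-dimensional illustrations with assumed names and scalar weights, not full layers from any library.

```python
import math


def conv1d(signal, kernel):
    """Slide a small kernel across the signal, one position at a time.

    Like most deep learning code, this does not flip the kernel, so strictly
    speaking it is a cross-correlation.
    """
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def rnn_forward(sequence, w_input, w_hidden):
    """Scalar recurrent cell: each new state depends on the input and the previous state."""
    hidden = 0.0
    states = []
    for x in sequence:
        hidden = math.tanh(w_input * x + w_hidden * hidden)
        states.append(hidden)
    return states


# The kernel [1, -1] responds to changes between neighbouring values.
edges = conv1d([1.0, 2.0, 3.0, 4.0], [1.0, -1.0])

# The second input is zero, yet the second state is not: context carried over.
states = rnn_forward([1.0, 0.0], w_input=1.0, w_hidden=1.0)
```

Setting `w_hidden` to zero in `rnn_forward` removes the memory, and the second state drops to zero as well, which is the difference between a recurrent and a purely feedforward view of the same sequence.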

These neural networks have distinct structures and applications, but they all work toward the same goal: to process and understand complex data in a way that mimics human intelligence.

Understanding these core concepts is essential for working effectively with neural networks and harnessing their power for various machine learning tasks. In my next post, I’ll be talking about the mathematics behind some of these terms.