Context: In deep learning, achieving efficient and scalable model training is essential, especially as models grow in complexity and size. DeepSpeed, an open-source deep learning optimization library developed by Microsoft, offers a suite of optimizations to address these challenges.

Problem: Traditional deep learning models often require substantial computational resources, leading to high costs and long training times. This inefficiency can hinder the development and deployment of advanced AI applications.

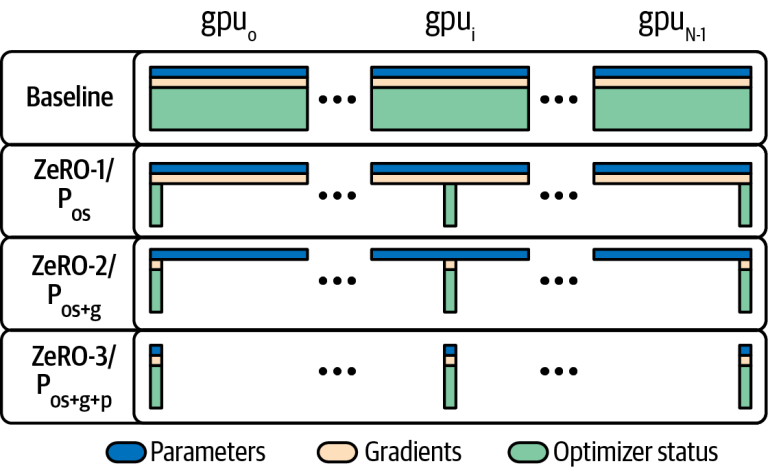

Approach: We used DeepSpeed to train a simple neural network on a synthetic dataset. To improve training efficiency, the approach combined data preprocessing, feature engineering, cross-validation, and DeepSpeed’s optimizations, such as mixed-precision training and memory management techniques.
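To make the mixed-precision and memory-management settings concrete, here is a minimal sketch of a DeepSpeed configuration built as a Python dictionary. The keys (`train_batch_size`, `fp16`, `zero_optimization`, `optimizer`) are standard DeepSpeed config fields, but the helper name `build_deepspeed_config` and every value (batch size, learning rate, ZeRO stage) are illustrative assumptions, not the settings used in this study.

```python
import json


def build_deepspeed_config(batch_size=32, learning_rate=1e-3, zero_stage=2):
    """Return a DeepSpeed config dict.

    Hypothetical helper: all default values are assumptions for illustration.
    """
    return {
        # Global batch size across all GPUs and accumulation steps.
        "train_batch_size": batch_size,
        # Mixed-precision training: run most operations in half precision.
        "fp16": {"enabled": True},
        # ZeRO memory optimization; stage 2 partitions optimizer states
        # and gradients across data-parallel workers.
        "zero_optimization": {"stage": zero_stage},
        # Let DeepSpeed construct the optimizer from the config.
        "optimizer": {"type": "Adam", "params": {"lr": learning_rate}},
    }


ds_config = build_deepspeed_config()
print(json.dumps(ds_config, indent=2))
```

A dictionary like this would typically be passed to `deepspeed.initialize(model=..., model_parameters=..., config=ds_config)`, which returns a wrapped model engine that handles the precision casting and memory partitioning during training.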

Results: The model achieved high median performance across cross-validation folds, with accuracy, F1 score, and AUC all near 1.0. Despite some variability and outliers, the model demonstrated strong generalization on the test set, reaching an accuracy of 0.85, an F1 score of 0.8571, and an AUC of 0.8507.
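For readers who want to see how the three reported metrics are defined, the following is a small dependency-free sketch. It is a stand-in for a library such as scikit-learn, not the evaluation code used in this study, and the labels and scores are made-up toy values chosen only to exercise the functions.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall for the positive class (label 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def auc_score(y_true, scores):
    """Probability that a random positive is scored above a random negative.

    Ties count as half a win. Assumes both classes are present.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy data (hypothetical, not from the study).
y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.2, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # threshold at 0.5

acc = accuracy(y_true, y_pred)
f1 = f1_score(y_true, y_pred)
auc = auc_score(y_true, scores)
print(f"accuracy={acc:.4f} f1={f1:.4f} auc={auc:.4f}")
```

Note that accuracy and F1 depend on the thresholded predictions, while AUC is computed from the raw scores, which is why the three numbers can differ on the same model.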

Conclusions: DeepSpeed significantly improved the training efficiency and scalability of the neural network, enabling high performance with reduced computational overhead. This study highlights DeepSpeed’s potential to facilitate the development of…