- Bias and Variance: The Fundamentals

- The Trade-Off Explained

- Visualizing the Trade-Off

- Techniques to Manage the Trade-Off

- Practical Example

- Conclusion

The bias-variance trade-off is a crucial concept in machine learning that significantly affects model performance. By understanding this trade-off, data scientists can build models that generalize well to new, unseen data, leading to more accurate and reliable predictions.

1. Bias and Variance: The Fundamentals

Bias refers to the error introduced when a model oversimplifies the problem and fails to capture the underlying patterns in the data. This results in high error on both the training and test datasets, a situation known as underfitting. For instance, using a linear model to predict a complex, non-linear relationship will likely result in high bias.

Variance, on the other hand, refers to the model's sensitivity to fluctuations in the training data. A high-variance model captures noise in the training data, leading to excellent performance on the training set but poor generalization to new data, a situation known as overfitting. Complex models such as decision trees often exhibit high variance if they are not properly constrained.
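To make underfitting and overfitting concrete, here is a minimal sketch that fits a degree-1 and a degree-12 polynomial to the same small noisy sample and compares training and test mean squared error (MSE). The target function `sin(2πx)`, the noise level of 0.3, the sample sizes, and the helper names `true_fn`, `make_data`, and `mse` are illustrative assumptions, not part of any library. Only NumPy is used.

```python
import numpy as np

# Illustrative assumptions: target sin(2*pi*x), Gaussian noise (sd 0.3),
# 20 training points and 200 test points drawn uniformly from [0, 1].
rng = np.random.default_rng(0)


def true_fn(x):
    return np.sin(2 * np.pi * x)


def make_data(n, noise=0.3):
    """Draw n noisy samples of the (hypothetical) true function."""
    x = rng.uniform(0.0, 1.0, n)
    return x, true_fn(x) + rng.normal(0.0, noise, n)


def mse(model, x, y):
    return float(np.mean((model(x) - y) ** 2))


x_train, y_train = make_data(20)
x_test, y_test = make_data(200)

results = {}
for degree in (1, 12):
    # Polynomial.fit rescales x to [-1, 1] internally, keeping high degrees stable.
    model = np.polynomial.Polynomial.fit(x_train, y_train, degree)
    results[degree] = (mse(model, x_train, y_train), mse(model, x_test, y_test))
    train_err, test_err = results[degree]
    print(f"degree {degree:2d}: train MSE = {train_err:.3f}, test MSE = {test_err:.3f}")
```

The degree-1 model should show similar, relatively high error on both sets (high bias), while the degree-12 model should show a training error well below its test error (high variance). The exact numbers depend on the random seed.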

2. The Trade-Off Explained

The bias-variance trade-off is the balance between minimizing two sources of error that affect the performance of machine learning algorithms. Low bias allows the model to fit the training data well, while low variance ensures that the model generalizes well to unseen data. In practice, however, reducing one often increases the other. The goal is to find the balance that minimizes total error.
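For squared-error loss, this trade-off can be stated precisely: at an input x, the expected test error decomposes as E[(y - f̂(x))²] = Bias[f̂(x)]² + Var[f̂(x)] + σ², where σ² is irreducible noise. The sketch below estimates the first two terms by Monte Carlo: it refits polynomials of several degrees on many freshly drawn noisy training sets, then measures how far the average prediction is from the true function (bias²) and how much predictions scatter across training sets (variance). The true function, σ = 0.3, the fixed 30-point design, the 200 trials, and the function name `bias_variance` are assumptions chosen for illustration.

```python
import numpy as np

# Illustrative assumptions: true function sin(2*pi*x), noise sd sigma = 0.3,
# a fixed design of 30 evenly spaced training inputs, 200 simulated training sets.
rng = np.random.default_rng(42)
sigma = 0.3
x_train = np.linspace(0.0, 1.0, 30)
x_eval = np.linspace(0.0, 1.0, 100)
f_train = np.sin(2 * np.pi * x_train)
f_eval = np.sin(2 * np.pi * x_eval)


def bias_variance(degree, n_trials=200):
    """Return (bias^2, variance) of a polynomial fit, averaged over x_eval."""
    preds = np.empty((n_trials, x_eval.size))
    for t in range(n_trials):
        # Each trial draws a fresh noisy training set from the same process.
        y_train = f_train + rng.normal(0.0, sigma, x_train.size)
        model = np.polynomial.Polynomial.fit(x_train, y_train, degree)
        preds[t] = model(x_eval)
    mean_pred = preds.mean(axis=0)
    bias_sq = float(np.mean((mean_pred - f_eval) ** 2))  # systematic error
    variance = float(np.mean(preds.var(axis=0)))  # spread across training sets
    return bias_sq, variance


estimates = {d: bias_variance(d) for d in (1, 3, 9)}
for d, (b2, v) in estimates.items():
    # bias^2 + variance + sigma^2 approximates the expected squared error on new data.
    print(f"degree {d}: bias^2 = {b2:.4f}, variance = {v:.4f}, total = {b2 + v + sigma**2:.4f}")
```

Low degrees should show large bias² and small variance, while higher degrees should show the reverse, which is the trade-off expressed in numbers.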

3. Visualizing the Trade-Off

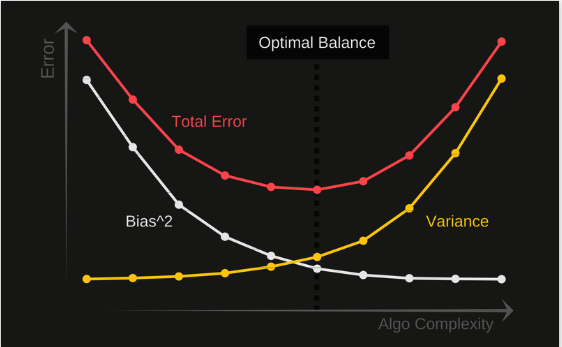

To illustrate the bias-variance trade-off with a graph, you can use a simple plot showing how training error and test error change with model complexity. Here's an example:

```python
import matplotlib.pyplot as plt
import numpy as np

# Simulated data (hand-picked error values for illustration)
complexity = np.arange(1, 11)
training_error = np.array([1.0, 0.8, 0.6, 0.5, 0.4, 0.35, 0.3, 0.28, 0.27, 0.25])
test_error = np.array([1.1, 0.9, 0.7, 0.55, 0.45, 0.4, 0.42, 0.45, 0.5, 0.6])

# Plot both error curves against model complexity
plt.figure(figsize=(10, 6))
plt.plot(complexity, training_error, label='Training Error', marker='o')
plt.plot(complexity, test_error, label='Test Error', marker='o')
plt.xlabel('Model Complexity')
plt.ylabel('Error')
plt.title('Bias-Variance Trade-Off')
plt.legend()
plt.grid(True)
plt.show()
```

Running this code will produce a graph where:

- The x-axis represents model complexity (e.g., the degree of a polynomial).

- The y-axis represents error.

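- The training error decreases steadily as model complexity increases, because a more flexible model can fit the training data (including its noise) more closely.

- The test error decreases at first but then rises as model complexity continues to increase, reaching its minimum at a complexity of 6 in this simulated data. The falling part of the curve is dominated by shrinking bias, the rising part by growing variance, and the widening gap between the two curves signals overfitting.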

The figure at the start of this section shows the graph generated by the code above.
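The error values in the plotting code are hand-picked. As an alternative, the following sketch computes real training and test error curves by fitting polynomials of degree 1 to 10 to a small noisy dataset. It reuses the array names `complexity`, `training_error`, and `test_error` so the results can be passed to the plotting code unchanged. The dataset (noisy `sin(2πx)`), the sample sizes, and the seed are assumptions for illustration.

```python
import numpy as np

# Illustrative assumptions: noisy sin(2*pi*x) data (noise sd 0.3),
# 25 training points, 500 test points, degrees 1 to 10.
rng = np.random.default_rng(1)
x_train = rng.uniform(0.0, 1.0, 25)
y_train = np.sin(2 * np.pi * x_train) + rng.normal(0.0, 0.3, x_train.size)
x_test = rng.uniform(0.0, 1.0, 500)
y_test = np.sin(2 * np.pi * x_test) + rng.normal(0.0, 0.3, x_test.size)

complexity = np.arange(1, 11)  # polynomial degree as the measure of complexity
training_error = np.empty(complexity.size)
test_error = np.empty(complexity.size)

for i, degree in enumerate(complexity):
    model = np.polynomial.Polynomial.fit(x_train, y_train, int(degree))
    training_error[i] = np.mean((model(x_train) - y_train) ** 2)
    test_error[i] = np.mean((model(x_test) - y_test) ** 2)

print("degree with lowest test error:", complexity[np.argmin(test_error)])
```

With a single random sample the curves are noisier than the idealized ones, but the training error never increases with degree (each higher-degree fit contains the lower-degree one as a special case), while the test error typically falls and then rises. In practice, the degree should be chosen on a validation set or by cross-validation, keeping the test set for a final check.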

4. Techniques to Manage the Trade-Off

To achieve a balance between bias and variance, several techniques can be employed:

- Regularization: Adding a penalty on large coefficients (e.g., Lasso (L1) and Ridge (L2) regression) helps prevent overfitting by reducing variance, at the cost of a small increase in bias.

- Cross-Validation: Evaluating the model on multiple training and validation splits gives a more reliable estimate of how well it generalizes, which makes cross-validation a practical tool for choosing model complexity or regularization strength.

- Ensemble Methods: Combining multiple models can reduce error: bagging mainly reduces variance, while boosting mainly reduces bias.

- Complexity Control: Limiting the complexity of the model, such as restricting the depth of a decision tree, helps strike a balance between underfitting and overfitting.
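As a sketch of the regularization technique, the code below fits ridge (L2) regression to degree-12 polynomial features using the closed-form solution w = (XᵀX + αI)⁻¹Xᵀy, leaving the intercept unpenalized. Larger α shrinks the coefficients, trading a little extra bias for lower variance. The data, the degree, the α grid, and the helpers `features` and `ridge_fit` are hypothetical choices for illustration; in practice a library implementation such as scikit-learn's `Ridge` would usually be used instead.

```python
import numpy as np

# Illustrative assumptions: noisy sin(2*pi*x) data, degree-12 polynomial
# features, and a small grid of regularization strengths alpha.
rng = np.random.default_rng(7)


def features(x, degree=12):
    """Hypothetical helper: Vandermonde matrix [1, u, ..., u^degree] with u = 2x - 1."""
    return np.vander(2.0 * x - 1.0, degree + 1, increasing=True)


def ridge_fit(X, y, alpha):
    """Closed-form ridge solution of (X^T X + alpha * P) w = X^T y.

    P is the identity except that the intercept (column 0) is not penalized.
    """
    penalty = alpha * np.eye(X.shape[1])
    penalty[0, 0] = 0.0
    return np.linalg.solve(X.T @ X + penalty, X.T @ y)


x_train = rng.uniform(0.0, 1.0, 20)
y_train = np.sin(2 * np.pi * x_train) + rng.normal(0.0, 0.3, x_train.size)
x_test = rng.uniform(0.0, 1.0, 500)
y_test = np.sin(2 * np.pi * x_test) + rng.normal(0.0, 0.3, x_test.size)
X_train, X_test = features(x_train), features(x_test)

results = []
for alpha in (1e-6, 1e-3, 1e-1, 10.0):  # 1e-6 is nearly unregularized
    w = ridge_fit(X_train, y_train, alpha)
    train_mse = float(np.mean((X_train @ w - y_train) ** 2))
    test_mse = float(np.mean((X_test @ w - y_test) ** 2))
    coef_norm = float(np.linalg.norm(w[1:]))  # size of the penalized coefficients
    results.append((alpha, coef_norm, train_mse, test_mse))
    print(f"alpha={alpha:g}: ||w||={coef_norm:.2f}, train MSE={train_mse:.3f}, test MSE={test_mse:.3f}")
```

Training error can only rise as α grows, because the penalty pulls the solution away from the unconstrained least-squares fit. Test error is usually lowest at an intermediate α, which is the value cross-validation is meant to find.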

5. Practical Example

Consider polynomial regression as an example. A linear model (a low-degree polynomial) might underfit the data, resulting in high bias. A high-degree polynomial might overfit, capturing noise and leading to high variance. A mid-degree polynomial often achieves the best balance: it does not minimize bias and variance individually, but it keeps their combined contribution, and therefore the expected test error, as low as possible.
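The polynomial example can be turned into a small model-selection procedure: score each degree with k-fold cross-validation and keep the degree with the lowest average validation error. This is a minimal NumPy sketch; the data, the 60 samples, the 5 folds, the degree range 1 to 12, and the function name `cv_mse` are assumptions for illustration.

```python
import numpy as np

# Illustrative assumptions: 60 noisy samples of sin(2*pi*x), 5 folds, degrees 1 to 12.
rng = np.random.default_rng(3)
x = rng.uniform(0.0, 1.0, 60)
y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.3, x.size)

# Shuffle once and reuse the same folds for every degree.
folds = np.array_split(rng.permutation(x.size), 5)


def cv_mse(degree):
    """Average validation MSE of a polynomial of the given degree over the folds."""
    errors = []
    for i, val_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        model = np.polynomial.Polynomial.fit(x[train_idx], y[train_idx], degree)
        errors.append(np.mean((model(x[val_idx]) - y[val_idx]) ** 2))
    return float(np.mean(errors))


scores = {d: cv_mse(d) for d in range(1, 13)}
best_degree = min(scores, key=scores.get)
print("CV MSE by degree:", {d: round(s, 3) for d, s in scores.items()})
print("selected degree:", best_degree)
```

All degrees are scored on the same folds, so differences in CV error reflect the models rather than the split. Degree 1 should score poorly because of its bias, and very high degrees tend to drift upward because of variance.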

6. Conclusion

The bias-variance trade-off is a fundamental concept in machine learning that shapes model performance. Striking the right balance between bias and variance is essential for building robust models that generalize well. By employing techniques such as regularization, cross-validation, and ensemble methods, data scientists can manage this trade-off effectively, leading to more accurate and reliable models.

Understanding and applying the principles of the bias-variance trade-off helps in building models that are not only accurate on training data but also perform well on unseen data, making them more useful in real-world applications.