Now that we have made this crucial distinction between generative models and predictive models, let’s jump into understanding Generative Adversarial Networks. A generative adversarial network (GAN) is a deep learning architecture. It trains two neural networks to compete against each other to generate more authentic new data from a given training dataset.

For instance, you can generate new images from an existing image database or original music from a database of songs. A GAN is called adversarial because it trains two different networks and pits them against each other. One network generates new data by taking an input data sample and modifying it as much as possible. The other network tries to predict whether the generated data output belongs in the original dataset. In other words, the predicting network determines whether the generated data is fake or real. The system generates newer, improved versions of fake data values until the predicting network can no longer distinguish fake from original.

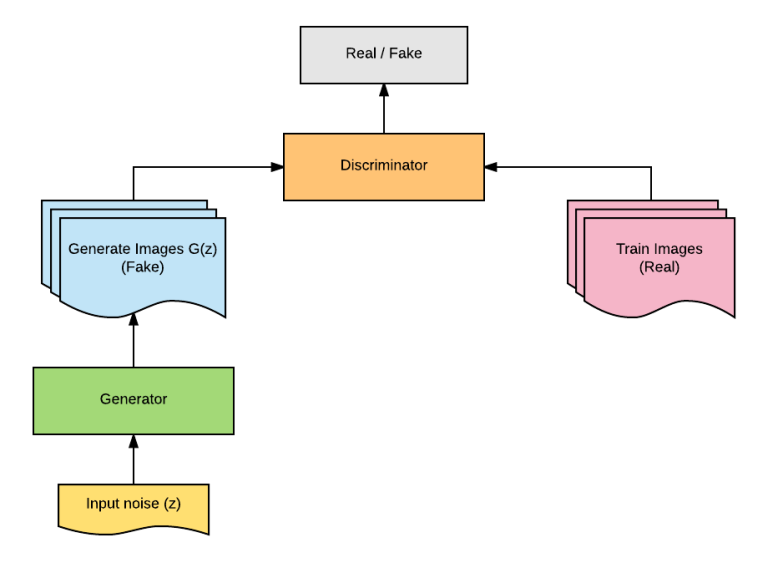

Before going head first into implementing a GAN from scratch, let’s take a step back and understand the mathematical and theoretical aspects of how a Generative Adversarial Network works. At a high level, a GAN trains two neural networks against each other, and that competition pushes both of them to improve continuously. Here’s a quick diagram which shows how everything works together:

You may notice some terminology in the diagram that we haven’t touched on, but to break it down, each of the two neural networks has a name. The neural network that produces new data is called the generator. The neural network that decides whether or not a sample belongs in the original dataset is called the discriminator. Now that we have a rough understanding of how these pieces work, let’s jump into the specifics of both the generator and the discriminator.

Input to the Generator

The generator takes as input a random noise vector, often sampled from a simple distribution such as a uniform or Gaussian distribution. This vector is usually of lower dimensionality than the real data. The noise vector serves as a source of randomness, allowing the generator to produce a wide variety of outputs.
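As a minimal sketch of what this input looks like, the snippet below draws a batch of Gaussian noise vectors using only the standard library. The helper name `sample_noise` and the sizes used (a batch of 4, 100 dimensions) are illustrative assumptions, not part of the implementation discussed in this article.

```python
import random

def sample_noise(batch_size, noise_dim, seed=None):
    """Return batch_size noise vectors, each with noise_dim entries drawn from N(0, 1)."""
    rng = random.Random(seed)  # a private generator keeps results reproducible per seed
    return [[rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
            for _ in range(batch_size)]

# Hypothetical sizes: 4 noise vectors of dimension 100.
noise = sample_noise(batch_size=4, noise_dim=100, seed=0)
print(len(noise), len(noise[0]))  # prints: 4 100
```

Every call with a different seed gives a different batch, which is what lets the generator produce varied outputs from the same weights.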

Architecture of the Generator

The architecture of the generator can vary, but it typically consists of a series of layers that progressively transform the input noise vector into a structured data format. Here’s a breakdown of a typical generator architecture:

- Dense Layers: The noise vector is first passed through several fully connected (dense) layers. These layers help to scale up the low-dimensional noise vector into a higher-dimensional feature space. Example: For an input noise vector z of dimension 100, the first dense layer might have 1024 units, transforming the 100-dimensional vector into a 1024-dimensional one.

- Batch Normalization: To stabilize training and improve the learning process, batch normalization is often applied after the dense layers. This normalizes the output of the previous layer, keeping the mean output close to 0 and the output standard deviation close to 1. Effect: Batch normalization helps reduce internal covariate shift and accelerates the training process.

- Activation Functions: Non-linear activation functions such as ReLU (Rectified Linear Unit) or Leaky ReLU are applied to introduce non-linearity into the model, allowing it to learn more complex patterns. Example: After the dense layers and batch normalization, a ReLU activation function can be applied to the output.

- Transpose Convolutional Layers: To transform the high-dimensional feature space into the desired output shape (e.g., an image), transpose convolutional layers (also known as deconvolutional layers) are used. These layers perform upsampling, effectively reversing the downsampling done by convolutional layers and increasing the spatial dimensions of the data. Example: A feature map with 256 channels can be upsampled through several transpose convolutional layers to create a 64x64x3 image.

- Output Layer: The final layer of the generator uses an appropriate activation function to produce the output in the required format. For example, in image generation, a tanh activation function can be used to scale the pixel values to the range [-1, 1]. Example: The output layer could be a transpose convolutional layer followed by a tanh activation function to generate the final image.
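To make the normalization and activation steps in the list above concrete, here is a small standard-library sketch. It is a simplification under stated assumptions: the `batch_norm` helper normalizes a batch of scalars and leaves out the learnable scale and shift parameters and the running statistics that a real batch normalization layer keeps, and all function names are illustrative.

```python
import math

def batch_norm(values, eps=1e-5):
    """Normalize a batch of scalars to roughly zero mean and unit variance.
    Simplified: no learnable scale/shift and no running averages."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]

def relu(values):
    """Replace negative values with zero, keep positive values unchanged."""
    return [max(0.0, v) for v in values]

def tanh(values):
    """Squash every value into the range [-1, 1]."""
    return [math.tanh(v) for v in values]

activations = [2.0, 4.0, 6.0, 8.0]     # made-up outputs of a dense layer
normalized = batch_norm(activations)   # mean close to 0, std close to 1
hidden = relu(normalized)              # what a hidden layer would pass on
pixels = tanh(normalized)              # what an output layer would emit
```

ReLU is used between layers because it keeps positive activations intact, while tanh is reserved for the output so that pixel values land in a fixed range.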

Generator Loss Function

The generator’s goal is to produce data that the discriminator cannot distinguish from real data. Therefore, the loss function for the generator is based on the performance of the discriminator. Specifically, the generator aims to maximize the discriminator’s error rate.

Mathematically, the generator’s loss is commonly written as:

$$L_G = -\mathbb{E}_{z}\left[\log D(G(z))\right]$$

Here:

- z is the input noise vector.

- G(z) is the output of the generator.

- D(G(z)) is the discriminator’s estimated probability that the generated data is real.

- E denotes the expected value.
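As a rough numerical illustration, assume the common non-saturating form of the loss, $L_G = -\mathbb{E}_{z}[\log D(G(z))]$, and estimate the expectation with an average over a batch. The function name `generator_loss`, the clipping constant, and the example probabilities below are all assumptions made for this sketch.

```python
import math

def generator_loss(fake_probs, eps=1e-7):
    """Average of -log D(G(z)) over a batch of discriminator outputs on fake data."""
    # Clip probabilities away from 0 and 1 so that log() never receives 0.
    clipped = [min(max(p, eps), 1.0 - eps) for p in fake_probs]
    return -sum(math.log(p) for p in clipped) / len(clipped)

# Made-up discriminator outputs D(G(z)) for two situations.
fooled = generator_loss([0.9, 0.8, 0.95])   # discriminator thinks the fakes are real
caught = generator_loss([0.1, 0.2, 0.05])   # discriminator spots the fakes
```

The loss is small when the discriminator is fooled and large when it is not, so minimizing it pushes the generator toward outputs the discriminator rates as real.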

In practice, this loss is minimized using gradient descent. The generator receives gradients from the discriminator and updates its weights to improve its output quality. Now that we have a rough understanding of how the generator is structured, let’s implement this in Python! I’m not going to bore you with the implementation of each layer (dense, convolutional, batch normalization, and so on). If you want the exact implementation of each layer, feel free to check out my GitHub repository here; it has all the layers implemented from scratch 🙂

Some of this code may look foreign, so let’s dive into what each layer in the model is doing. We start off by creating a dense layer. The first layer in the generator is a dense (fully connected) layer that scales up the noise vector from a lower-dimensional space to a higher-dimensional space.

- Implementation: The layer is defined with `7 * 7 * 256` units and `use_bias=False`. The `input_shape` is specified as `(noise_dim,)`, where `noise_dim` is the dimensionality of the input noise vector.

- Effect: This transformation is crucial because it prepares the noise vector for the subsequent convolutional layers by increasing its dimensionality and allowing it to be reshaped into a multi-channel feature map.

Next up, we have a batch normalization layer. We apply batch normalization right after the input is passed through the dense layer.

- Implementation: The `BatchNormalization` layer normalizes the output of the dense layer so that its mean is close to 0 and its standard deviation is close to 1.

- Effect: This normalization helps stabilize and accelerate training by reducing internal covariate shift.

ReLU Activation:

- Purpose: A ReLU (Rectified Linear Unit) activation function is applied to introduce non-linearity into the model.

- Implementation: The `ReLU` layer replaces all negative values in the feature map with zeros, while positive values remain unchanged.

- Effect: This non-linearity allows the model to learn more complex patterns and relationships in the data.

Reshape Layer:

- Purpose: The reshape layer changes the shape of the output from the previous dense layer into a 3D tensor suitable for convolutional operations.

- Implementation: The `Reshape` layer transforms the flat vector of shape `(7 * 7 * 256,)` into a 3D tensor of shape `(7, 7, 256)`.

- Effect: This step prepares the data for upsampling through transpose convolutional layers, mimicking the initial feature maps of an image.

Transpose Convolutional Layer 1:

- Purpose: This layer changes the depth of the feature map and prepares it for the upsampling steps that follow.

- Implementation: A `Conv2DTranspose` layer is used with 128 filters, a kernel size of `(5, 5)`, strides of `(1, 1)`, and `padding='same'`. `use_bias=False` is specified to avoid bias addition.

- Effect: The feature map size remains `(7, 7)` because of the stride of 1, but the depth changes from 256 to 128, preparing the feature map for further upsampling.

Batch Normalization and ReLU:

- Purpose: These layers repeat the normalization and non-linearity steps to stabilize training and add expressive power.

- Implementation: The `BatchNormalization` and `ReLU` layers are applied sequentially.

- Effect: They normalize the outputs and introduce non-linearity, respectively, which helps in learning more complex patterns.

Transpose Convolutional Layer 2:

- Purpose: This layer upsamples the feature map.

- Implementation: Another `Conv2DTranspose` layer is added with 64 filters, a kernel size of `(5, 5)`, strides of `(2, 2)`, and `padding='same'`.

- Effect: The spatial dimensions of the feature map are increased from `(7, 7)` to `(14, 14)` because of the stride of 2, while the depth changes from 128 to 64.

Batch Normalization and ReLU:

- Purpose: These layers again normalize the outputs and introduce non-linearity.

- Implementation: The `BatchNormalization` and `ReLU` layers are applied sequentially.

- Effect: They help in stabilizing training and learning complex patterns.

Transpose Convolutional Layer 3:

- Purpose: The final transpose convolutional layer upsamples the feature map to the desired output dimensions.

- Implementation: A `Conv2DTranspose` layer with 1 filter, a kernel size of `(5, 5)`, strides of `(2, 2)`, `padding='same'`, and `activation='tanh'` is used.

- Effect: This layer increases the spatial dimensions from `(14, 14)` to `(28, 28)`, which is the size of the output image (e.g., in MNIST). The `tanh` activation scales the pixel values to the range `[-1, 1]`, suitable for image data.
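The shape changes described in this walkthrough can be checked with a few lines of arithmetic. The sketch below assumes the usual rule for a transpose convolution with `padding='same'`, where each spatial dimension is multiplied by the stride and the depth becomes the number of filters. The helper `conv_transpose_same` is a hypothetical name and only tracks shapes; it does not perform any convolution.

```python
def conv_transpose_same(shape, filters, stride):
    """Output shape of a transpose convolution with 'same' padding:
    height and width are multiplied by the stride, depth becomes `filters`."""
    height, width, _ = shape
    return (height * stride, width * stride, filters)

shape = (7, 7, 256)              # output of the Reshape layer
trace = [shape]
# (filters, stride) for the three transpose convolutional layers described above.
for filters, stride in [(128, 1), (64, 2), (1, 2)]:
    shape = conv_transpose_same(shape, filters, stride)
    trace.append(shape)

print(trace)
# prints: [(7, 7, 256), (7, 7, 128), (14, 14, 64), (28, 28, 1)]
```

The kernel size does not appear in the calculation because, with `'same'` padding, it does not affect the output size.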

Overall, the generator architecture transforms a simple noise vector into a high-dimensional, realistic image by progressively upsampling and refining the feature maps through a series of layers. Now that we have a solid understanding of the generator, let’s jump into understanding the discriminator!

The discriminator in a Generative Adversarial Network (GAN) is a neural network that acts as a binary classifier, distinguishing between real data samples and those generated by the generator. Its primary function is to evaluate the authenticity of the data samples it receives, providing feedback to both itself and the generator during the training process. The discriminator’s goal is to correctly classify real data as real and generated (fake) data as fake.

The discriminator receives both real data from the training dataset and fake data from the generator. It then processes these inputs through a series of layers to produce a probability score between 0 and 1, where 1 indicates a high likelihood that the input is real, and 0 indicates a high likelihood that the input is fake. During training, the discriminator is updated to improve its classification accuracy, while the generator is updated to produce data that can fool the discriminator into classifying fake data as real.

The architecture of the discriminator typically involves several convolutional layers followed by dense layers. Here is a detailed breakdown of a typical discriminator architecture:

Input Layer:

- The discriminator takes as input an image (or other data types in other applications). For simplicity, let’s consider grayscale images of size 28x28x1, as used in the MNIST dataset.

Convolutional Layer 1:

- Purpose: Extract low-level features from the input image.

- Implementation: A `Conv2D` layer with 64 filters, a kernel size of `(5, 5)`, strides of `(2, 2)`, and `same` padding. The convolution operation scans the image and applies filters to detect edges, textures, and other basic patterns.

- Activation: A Leaky ReLU activation function is applied to introduce non-linearity. Leaky ReLU allows a small gradient when the unit is not active, preventing dead neurons.

- Effect: This layer reduces the spatial dimensions of the image from 28×28 to 14×14 and increases the depth to 64, highlighting the important features in the image.
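To show how Leaky ReLU differs from plain ReLU, here is a minimal sketch. The negative slope `alpha=0.2` is an assumed value picked for illustration; the article does not state which slope its implementation uses.

```python
def leaky_relu(x, alpha=0.2):
    """Pass positive inputs through unchanged; scale negative inputs by alpha.
    alpha=0.2 is an assumed slope, not a value taken from the article."""
    return x if x > 0 else alpha * x

def relu(x):
    """Plain ReLU for comparison: negative inputs become exactly zero."""
    return max(0.0, x)

inputs = [-2.0, -0.5, 0.0, 1.5]
leaky = [leaky_relu(x) for x in inputs]   # negative inputs keep a small signal
plain = [relu(x) for x in inputs]         # negative inputs are cut to zero
```

Because negative inputs still produce a non-zero output with a non-zero slope, a unit that is currently inactive continues to receive gradient and can recover during training.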

Dropout Layer:

- Purpose: Prevent overfitting by randomly setting a fraction of input units to zero during training.

- Implementation: A `Dropout` layer with a rate of 0.3.

- Effect: This regularizes the model and improves its generalization to unseen data.

Convolutional Layer 2:

- Purpose: Extract higher-level features from the feature map produced by the first convolutional layer.

- Implementation: A `Conv2D` layer with 128 filters, a kernel size of `(5, 5)`, strides of `(2, 2)`, and `same` padding.

- Activation: Another Leaky ReLU activation function is applied.

- Effect: This layer reduces the spatial dimensions further from 14×14 to 7×7 and increases the depth to 128, capturing more complex patterns in the image.

Dropout Layer:

- Purpose: Further regularization to prevent overfitting.

- Implementation: A `Dropout` layer with a rate of 0.3.

- Effect: Enhances the model’s ability to generalize by randomly dropping units during training.

Flatten Layer:

- Purpose: Convert the 3D feature map into a 1D feature vector to prepare it for the dense layers.

- Implementation: A `Flatten` layer.

- Effect: The output shape is transformed from (7, 7, 128) to (6272,).
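The discriminator’s shapes can be verified the same way as the generator’s. This sketch assumes the usual rule for a strided convolution with `same` padding, where each spatial dimension becomes the ceiling of the input size divided by the stride. The helpers `conv_same` and `flatten` are hypothetical names that only compute shapes.

```python
import math

def conv_same(shape, filters, stride):
    """Output shape of a convolution with 'same' padding:
    height and width become ceil(size / stride), depth becomes `filters`."""
    height, width, _ = shape
    return (math.ceil(height / stride), math.ceil(width / stride), filters)

def flatten(shape):
    """Shape of the 1D vector obtained by flattening a tensor of the given shape."""
    size = 1
    for dim in shape:
        size *= dim
    return (size,)

shape = (28, 28, 1)                           # grayscale MNIST-sized input
after_conv1 = conv_same(shape, 64, 2)         # (14, 14, 64)
after_conv2 = conv_same(after_conv1, 128, 2)  # (7, 7, 128)
flat = flatten(after_conv2)                   # (6272,)
```

The flattened size is simply 7 × 7 × 128 = 6272, which is the number of inputs the final dense layer receives.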

Dense Layer:

- Purpose: Classify the input based on the extracted features.

- Implementation: A `Dense` layer with a single unit.

- Activation: No activation function is applied in this layer; its raw output (a logit) is passed on for the final classification.

- Effect: This layer produces a single scalar score. The higher the score, the more strongly the discriminator believes the input image is real.

Activation Function:

- Purpose: Convert the raw output score to a probability.

- Implementation: A sigmoid activation function is applied to the output of the dense layer.

- Effect: The sigmoid function maps the output to a value between 0 and 1, representing the probability that the input is real.
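Here is a small sketch of that conversion. It uses a numerically stable formulation of the sigmoid so that very large or very small scores do not overflow; the function name and the example scores are illustrative assumptions.

```python
import math

def sigmoid(logit):
    """Map a raw score to a probability in [0, 1] without overflowing exp()."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    # For negative scores, exp(logit) is small, so this branch cannot overflow.
    e = math.exp(logit)
    return e / (1.0 + e)

# Made-up raw scores from the final dense layer.
probably_real = sigmoid(4.0)    # close to 1
undecided = sigmoid(0.0)        # exactly 0.5
probably_fake = sigmoid(-4.0)   # close to 0
```

A score of zero corresponds to a probability of 0.5, meaning the discriminator has no preference between real and fake.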

The discriminator’s loss function consists of two parts:

- Real Loss: Measures how well the discriminator classifies real images as real.

- Fake Loss: Measures how well the discriminator classifies generated (fake) images as fake.

The overall loss for the discriminator is the sum of these two losses.

Binary Cross-Entropy Loss

Binary cross-entropy loss is commonly used for binary classification tasks. For the discriminator in a GAN, the binary cross-entropy loss for real and fake images can be computed as follows:

- Real Loss: Calculated as the binary cross-entropy between the discriminator’s predictions for real images and the target labels (which are 1s, indicating real).

- Fake Loss: Calculated as the binary cross-entropy between the discriminator’s predictions for generated (fake) images and the target labels (which are 0s, indicating fake).
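The two terms can be sketched directly from their definitions. The version below works on probabilities (the sigmoid outputs), uses only the standard library, and clips values to avoid taking the logarithm of zero; the function names and the example probabilities are assumptions made for illustration.

```python
import math

def binary_cross_entropy(probs, target, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and one shared label."""
    total = 0.0
    for p in probs:
        p = min(max(p, eps), 1.0 - eps)  # keep log() away from 0
        total += -(target * math.log(p) + (1 - target) * math.log(1.0 - p))
    return total / len(probs)

def discriminator_loss(real_probs, fake_probs):
    """Sum of the real loss (labels = 1) and the fake loss (labels = 0)."""
    real_loss = binary_cross_entropy(real_probs, target=1)
    fake_loss = binary_cross_entropy(fake_probs, target=0)
    return real_loss + fake_loss

# Made-up predictions: a confident discriminator versus one that is guessing.
confident = discriminator_loss(real_probs=[0.9, 0.95], fake_probs=[0.1, 0.05])
guessing = discriminator_loss(real_probs=[0.5, 0.5], fake_probs=[0.5, 0.5])
```

A discriminator that outputs 0.5 for everything gets a loss of 2·ln 2 ≈ 1.386, which is a useful reference point when reading training logs.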

Here’s the implementation of the discriminator based on the architecture described:

Now that we have an understanding of how the two main components of the GAN work, let’s put this together and build a GAN in Python! Feel free to use the code below! On the GitHub, there’s some code that I made to run it on the MNIST dataset; feel free to try it out!

Training Process

The training process of the generator is tightly coupled with that of the discriminator. During training, the following steps are typically repeated iteratively:

- Generate Fake Data: The generator takes random noise vectors as input and produces fake data.

- Train Discriminator: The discriminator is trained on both real data and the fake data generated by the generator. It updates its weights to better distinguish between real and fake data.

- Train Generator: The generator is trained to maximize the discriminator’s error on the fake data, effectively learning to generate more realistic data over time.

The iterative training continues until a desired level of performance is achieved, where the generator produces high-quality data that the discriminator cannot easily distinguish from real data.
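The three steps can be seen end to end in a deliberately tiny toy example. This is not the image GAN described in this article: it assumes one-dimensional data drawn from a Gaussian centered at 4, a linear generator `G(z) = a * z + b`, a logistic discriminator `D(x) = sigmoid(w * x + c)`, hand-derived gradients, and plain gradient descent. All names and hyperparameters are hypothetical choices for the sketch.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def train_toy_gan(steps=200, batch_size=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters: G(z) = a * z + b
    w, c = 0.0, 0.0   # discriminator parameters: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        # 1. Generate fake data from random noise (and draw a real batch).
        zs = [rng.gauss(0.0, 1.0) for _ in range(batch_size)]
        fakes = [a * z + b for z in zs]
        reals = [rng.gauss(4.0, 1.0) for _ in range(batch_size)]

        # 2. Train the discriminator on -log D(real) - log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for x in reals:
            d = sigmoid(w * x + c)
            grad_w += (d - 1.0) * x
            grad_c += d - 1.0
        for x in fakes:
            d = sigmoid(w * x + c)
            grad_w += d * x
            grad_c += d
        w -= lr * grad_w / batch_size
        c -= lr * grad_c / batch_size

        # 3. Train the generator on -log D(G(z)), using the updated discriminator.
        grad_a = grad_b = 0.0
        for z in zs:
            d = sigmoid(w * (a * z + b) + c)
            grad_a += (d - 1.0) * w * z
            grad_b += (d - 1.0) * w
        a -= lr * grad_a / batch_size
        b -= lr * grad_b / batch_size
    return a, b, w, c

a, b, w, c = train_toy_gan()
```

In this toy setup the generator’s offset `b` starts at 0 and is pushed toward the region where the real samples live, because that is where the discriminator assigns higher probability. Real GANs follow the same alternating pattern, with neural networks and automatic differentiation replacing the hand-written gradients.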