Let us examine Support Vector Machines (SVMs), an intriguing area of research. This article aims to give readers with no prior knowledge a complete overview of this highly effective machine learning technique. We’ll define key terms, walk you through each topic step by step, and highlight practical applications. So get ready to discover the wonders of SVMs!

In the field of machine learning, support vector machines (SVMs) are effective tools that can be applied to both regression and classification problems. Imagine that you want to automatically classify a collection of emails as spam or not spam. This is where machine learning algorithms are useful, and SVMs in particular excel at classification tasks. They work by finding a distinct boundary, or hyperplane, between two or more classes of data. The main idea is to build robust models that perform well on new, unseen data by maximizing the margin between this boundary and the closest data points from each class. A key component that enhances the flexibility and performance of SVMs is the “Kernel Trick”.

Support Vector Machines (SVMs) are powerful machine learning models used for both classification and regression tasks. As members of the supervised learning family, they excel in high-dimensional spaces. SVMs are widely used in many domains, including bioinformatics, image recognition, and text categorization. This article covers the foundations of SVMs, along with the purpose, uses, and implications of the Kernel Trick. In addition, we’ll look at several kernel functions, hyperparameter tuning, and practical implementation tips for kernelized SVMs. By the time you’re done, you’ll understand how the Kernel Trick increases the flexibility and effectiveness of SVMs when dealing with complex data.

Think of your data as dots on a graph, each representing a single email with attributes like word frequency or the presence of particular phrases. The goal of a Support Vector Machine (SVM) is to separate spam emails from non-spam emails by drawing a straight line in two dimensions or a hyperplane in higher dimensions. The objective is to find the line that creates the largest margin between the closest emails (called support vectors) on either side. This wide margin makes the SVM classifier more robust and reduces the likelihood of errors on new emails.

A Support Vector Machine (SVM) is a supervised learning algorithm used for both classification and regression tasks. Its principal objective is to find the optimal hyperplane for separating data into distinct classes: the one that maximizes the margin, that is, the distance between the hyperplane and the closest data points of each class. A large margin helps the model stay robust and generalize to new datasets. By applying a technique called the kernel trick, SVMs can work well even when the data cannot be separated linearly.

Hyperplane: A decision boundary that separates different classes in the feature space.

Margin: The distance between the hyperplane and the closest data points from each class (the support vectors).

Support Vectors: Data points that are closest to the hyperplane and influence its position and orientation.
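For the linear case, the three definitions above can be written compactly. The notation here is an assumption introduced for illustration and does not appear in the original text: labels $y_i \in \{-1, +1\}$, a weight vector $w$, and a bias $b$, with the data assumed perfectly separable (the hard-margin case).

```latex
\begin{aligned}
\text{Hyperplane:}\quad & w^\top x + b = 0 \\
\text{Margin width:}\quad & \frac{2}{\lVert w \rVert} \\
\text{Support vectors:}\quad & \{\, x_i : y_i \,(w^\top x_i + b) = 1 \,\} \\
\text{Training objective:}\quad & \min_{w,\,b}\ \tfrac{1}{2}\lVert w \rVert^2
  \quad \text{s.t.}\quad y_i \,(w^\top x_i + b) \ge 1 \ \ \forall i
\end{aligned}
```

Minimizing $\lVert w \rVert$ is the same as maximizing the margin width $2 / \lVert w \rVert$, which is why margin maximization is posed as a minimization problem.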

Linear separability is the ability to divide data points of distinct classes using a straight line in two dimensions, a plane in three dimensions, or a hyperplane in higher dimensions. Not all datasets, however, are linearly separable, which means that a straight line or plane cannot cleanly divide the classes.

The Kernel Trick transforms data into a higher-dimensional space where it can be separated linearly. Because this transformation is carried out without explicitly computing the coordinates of the data in that high-dimensional space, the method is computationally efficient.
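A small numerical check can make this concrete. The sketch below assumes a degree-2 homogeneous polynomial kernel on 2-D inputs. The helper names `phi` and `poly2_kernel` are hypothetical and chosen only for illustration. It shows that the inner product after an explicit 3-D mapping equals the kernel value computed directly in the original 2-D space.

```python
import numpy as np

def phi(x):
    """Explicit degree-2 feature map for a 2-D input (illustrative helper)."""
    x1, x2 = x
    return np.array([x1 ** 2, np.sqrt(2) * x1 * x2, x2 ** 2])

def poly2_kernel(x, z):
    """Homogeneous polynomial kernel of degree 2: K(x, z) = (x . z)^2."""
    return np.dot(x, z) ** 2

x = np.array([1.0, 2.0])
z = np.array([3.0, 0.5])

explicit = np.dot(phi(x), phi(z))  # inner product after mapping both points to 3-D
implicit = poly2_kernel(x, z)      # same quantity, computed without leaving 2-D

print("Explicit mapping:", explicit)
print("Kernel function: ", implicit)
```

Both values agree, yet the kernel version never builds the 3-D vectors. For kernels such as the RBF kernel, the implicit space is infinite-dimensional, so the explicit route is not available at all.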

The Kernel Trick is primarily used to handle datasets that are not linearly separable in their original feature space. For complex and intertwined datasets, SVMs can find a hyperplane that better separates the classes by mapping the data to a higher-dimensional space. To illustrate the need for the kernel trick, imagine classifying fruits based on their color (red or green) and size (large or small). On a two-dimensional graph (color vs. size), some fruits may cluster in a way that cannot be perfectly separated by a straight line. By applying the kernel trick, we can move the data points into a higher-dimensional space where a clear separating boundary can be drawn, thus improving classification.
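As a rough illustration of data that a straight line cannot separate, the sketch below uses scikit-learn’s `make_circles` (one class forms a ring around the other) in place of the fruit example, and compares a linear kernel with an RBF kernel. The dataset, its parameters, and the variable names are assumptions made for this example only.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Two concentric rings: no straight line can separate the classes
X_c, y_c = make_circles(n_samples=300, factor=0.3, noise=0.05, random_state=0)
Xc_train, Xc_test, yc_train, yc_test = train_test_split(
    X_c, y_c, test_size=0.3, random_state=0
)

# Same data, two kernels
linear_acc = SVC(kernel="linear").fit(Xc_train, yc_train).score(Xc_test, yc_test)
rbf_acc = SVC(kernel="rbf").fit(Xc_train, yc_train).score(Xc_test, yc_test)

print("Linear kernel test accuracy:", round(linear_acc, 3))
print("RBF kernel test accuracy:   ", round(rbf_acc, 3))
```

On data like this the linear kernel typically performs close to chance, while the RBF kernel separates the rings almost perfectly.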

By using the kernel trick, support vector machines (SVMs) can implicitly map the original feature space into a higher-dimensional space in which the data can be separated linearly. Kernel functions, such as the polynomial kernel and the radial basis function (RBF) kernel, are used to accomplish this transformation.

The core of the kernel trick is the kernel function. It is a mathematical formula that compares two data points and measures how similar they are in a higher-dimensional space without actually mapping them there. More precisely, a kernel function computes the inner product of the data points in the higher-dimensional space, which is computationally efficient because the high-dimensional coordinates are never computed. The choice of kernel function depends on the specific data and the problem you’re trying to solve. Some popular kernel functions include:

Linear Kernel: Computes the standard inner product.

Polynomial Kernel: Computes the inner product raised to a specified power.

Radial Basis Function (RBF) Kernel: Measures the similarity between data points based on their distance.

Sigmoid Kernel: Uses a sigmoid-shaped (hyperbolic tangent) function of the inner product to compute the similarity between data points.

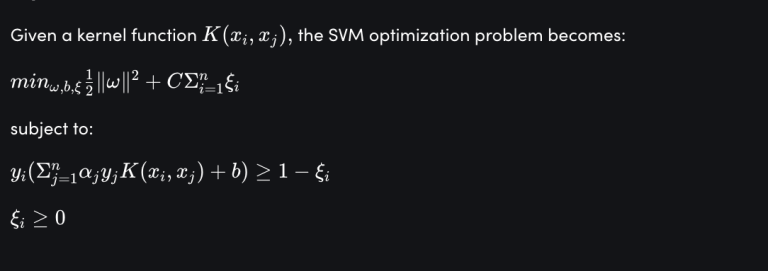
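The four kernels listed above can be evaluated directly with the helpers in `sklearn.metrics.pairwise`. The sketch below is a minimal example on three made-up 2-D points. The parameter values (`degree`, `gamma`, `coef0`) are arbitrary choices for illustration, and the formulas in the comments follow scikit-learn’s definitions.

```python
import numpy as np
from sklearn.metrics.pairwise import (
    linear_kernel,
    polynomial_kernel,
    rbf_kernel,
    sigmoid_kernel,
)

# Three toy points (made up for illustration)
A = np.array([[1.0, 2.0], [0.0, 1.0], [3.0, -1.0]])

K_lin = linear_kernel(A)                                       # x . z
K_poly = polynomial_kernel(A, degree=2, gamma=1.0, coef0=1.0)  # (gamma * x . z + coef0)^degree
K_rbf = rbf_kernel(A, gamma=0.5)                               # exp(-gamma * ||x - z||^2)
K_sig = sigmoid_kernel(A, gamma=0.1, coef0=0.0)                # tanh(gamma * x . z + coef0)

for name, K in [("linear", K_lin), ("polynomial", K_poly),
                ("rbf", K_rbf), ("sigmoid", K_sig)]:
    print(name)
    print(np.round(K, 3))
```

Each result is a 3×3 matrix whose entry (i, j) is the similarity between point i and point j. This matrix of pairwise kernel values is what a kernelized SVM works with in place of the raw feature vectors.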

The Kernel Trick modifies the SVM optimization problem by incorporating a kernel function. Instead of working with the original feature vectors, the SVM works with the kernel function’s outputs, allowing it to operate in the higher-dimensional space implicitly.
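One standard way to see this is the dual form of the soft-margin SVM, sketched below. The symbols are assumptions introduced here for illustration: $\alpha_i$ are the dual variables, $y_i \in \{-1, +1\}$ the labels, $C$ the regularization parameter, and $K$ the kernel function.

```latex
\begin{aligned}
\max_{\alpha}\quad & \sum_{i=1}^{n} \alpha_i
  - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j \, y_i y_j \, K(x_i, x_j) \\
\text{s.t.}\quad & 0 \le \alpha_i \le C, \qquad \sum_{i=1}^{n} \alpha_i y_i = 0 \\[4pt]
\text{Prediction:}\quad & f(x) = \operatorname{sign}\!\Big( \sum_{i=1}^{n} \alpha_i \, y_i \, K(x_i, x) + b \Big)
\end{aligned}
```

The training data enter only through $K(x_i, x_j)$, so replacing the plain inner product $x_i^\top x_j$ with another kernel changes the implicit feature space without changing the structure of the problem. Only points with $\alpha_i > 0$, the support vectors, contribute to the prediction.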

SVM performance is greatly affected by the choice of hyperparameters (such as the kernel type, regularization parameter, and kernel parameters), so hyperparameter tuning is a must for kernelized SVMs. Cross-validation helps find good values. Key hyperparameters include:

- C (Regularization Parameter): Controls the trade-off between minimizing classification error and maximizing the margin.

- Gamma (γ) in RBF Kernel: Defines how far the influence of a single training example reaches. Low values mean the influence reaches far, and high values mean it stays close.

- Degree (d) in Polynomial Kernel: Sets the degree of the polynomial, that is, the power to which the inner product is raised.

Hyperparameter tuning techniques like grid search or randomized search can be used to find the best combination of hyperparameters for your SVM model.
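A grid search over C and gamma could look like the following sketch, which uses scikit-learn’s `GridSearchCV` with 5-fold cross-validation. The dataset and the candidate values in `param_grid` are illustrative assumptions, not recommendations.

```python
from sklearn.datasets import make_moons
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Small two-class toy dataset (illustrative choice)
X_gs, y_gs = make_moons(n_samples=200, noise=0.2, random_state=42)

# Candidate values are arbitrary starting points on a log scale
param_grid = {"C": [0.1, 1, 10, 100], "gamma": [0.01, 0.1, 1, 10]}

# Every (C, gamma) pair is scored with 5-fold cross-validation
search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=5, scoring="accuracy")
search.fit(X_gs, y_gs)

print("Best parameters:", search.best_params_)
print("Best cross-validated accuracy:", round(search.best_score_, 3))
```

For larger grids, `RandomizedSearchCV` from the same module samples a fixed number of combinations instead of trying all of them.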

Selecting the appropriate kernel function depends on the characteristics of the dataset and the particular problem you are trying to solve. Cross-validation and experimentation with various kernels can help find the best option.
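One simple way to experiment is to score each kernel with the same cross-validation splits and compare the averages. The sketch below does this on the Iris dataset with default hyperparameters. The dataset choice and the variable names are assumptions for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC

X_iris, y_iris = load_iris(return_X_y=True)

# Mean 5-fold accuracy for each kernel, all other settings left at defaults
cv_means = {}
for kernel in ("linear", "poly", "rbf", "sigmoid"):
    scores = cross_val_score(SVC(kernel=kernel), X_iris, y_iris, cv=5)
    cv_means[kernel] = scores.mean()
    print(f"{kernel:>8}: {scores.mean():.3f} (+/- {scores.std():.3f})")

best_kernel = max(cv_means, key=cv_means.get)
print("Best kernel on this dataset:", best_kernel)
```

The ranking is specific to the dataset and to the default hyperparameters. A kernel that scores poorly here may do well after tuning or feature scaling.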

It’s essential for kernelized SVMs to scale features to a standard range (e.g., [0, 1] or [-1, 1]), particularly when using polynomial and RBF kernels. Otherwise, features with large numeric ranges dominate the kernel computation.
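The effect of scaling can be checked with a short experiment. The sketch below assumes scikit-learn’s Wine dataset, whose features have very different ranges, and compares an RBF SVM on raw features with the same model placed after a `MinMaxScaler` in a pipeline. The dataset and variable names are illustrative choices.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler
from sklearn.svm import SVC

X_w, y_w = load_wine(return_X_y=True)

# RBF SVM on the raw, unscaled features
raw_score = cross_val_score(SVC(kernel="rbf"), X_w, y_w, cv=5).mean()

# Same model, but every feature is first rescaled to [0, 1]
scaled_model = make_pipeline(MinMaxScaler(), SVC(kernel="rbf"))
scaled_score = cross_val_score(scaled_model, X_w, y_w, cv=5).mean()

print("Mean CV accuracy without scaling:", round(raw_score, 3))
print("Mean CV accuracy with scaling:   ", round(scaled_score, 3))
```

Putting the scaler inside the pipeline means it is fitted only on the training portion of each fold, so no information from the held-out data leaks into the scaling step.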

Imbalanced datasets can be handled effectively by using techniques like class weighting.
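In scikit-learn, class weighting is available through the `class_weight` parameter of `SVC`. The sketch below builds a synthetic dataset with roughly 5% positives (an assumption made for illustration) and compares minority-class recall with and without `class_weight="balanced"`.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import recall_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic imbalanced data: about 95% class 0 and 5% class 1
X_imb, y_imb = make_classification(
    n_samples=1000, n_features=5, n_informative=3, n_redundant=0,
    weights=[0.95, 0.05], random_state=0,
)
Xi_train, Xi_test, yi_train, yi_test = train_test_split(
    X_imb, y_imb, test_size=0.3, stratify=y_imb, random_state=0
)

plain = SVC(kernel="rbf").fit(Xi_train, yi_train)
# "balanced" scales C for each class inversely to its frequency
weighted = SVC(kernel="rbf", class_weight="balanced").fit(Xi_train, yi_train)

recall_plain = recall_score(yi_test, plain.predict(Xi_test))
recall_weighted = recall_score(yi_test, weighted.predict(Xi_test))

print("Minority recall, no weighting:      ", round(recall_plain, 3))
print("Minority recall, balanced weighting:", round(recall_weighted, 3))
```

Weighting usually raises recall on the rare class at the cost of more false positives, so precision should be checked as well before settling on it.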

SVMs are used in many different contexts, including:

- Image classification: Identifying objects in pictures (such as handwritten digit recognition and face detection).

- Text classification: Sentiment analysis and spam filtering.

- Bioinformatics: Gene classification and protein structure prediction.

- Financial forecasting: Stock market prediction.

Image Recognition

Suppose we have to classify images of handwritten digits (that is, identify the digits 0 to 9). The feature space of these images is made up of their pixel values. However, a linear hyperplane may not cleanly separate the raw pixel values. By using the kernel trick, more specifically the RBF kernel, we implicitly map the pixel values into a higher-dimensional space. In this transformed space, the classes become much easier to separate linearly.
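A minimal version of this scenario can be tried with scikit-learn’s built-in `load_digits` dataset (8×8 grayscale images of the digits 0 to 9). Using this particular dataset and the default RBF settings is an assumption made for illustration.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

digits = load_digits()
X_d, y_d = digits.data, digits.target  # each row holds the 64 pixel values of one image

Xd_train, Xd_test, yd_train, yd_test = train_test_split(
    X_d, y_d, test_size=0.3, random_state=42
)

# RBF-kernel SVM with default C and gamma
digit_clf = SVC(kernel="rbf")
digit_clf.fit(Xd_train, yd_train)

digit_acc = digit_clf.score(Xd_test, yd_test)
print("Test accuracy on handwritten digits:", round(digit_acc, 3))
```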

Text Classification

Sentiment analysis of social media posts is another good example. Here the SVM analyzes word frequencies and their relationships. The Kernel Trick can be quite helpful for capturing word relationships that are hard to discern from a simple word count, such as sarcasm or double negatives. By implicitly projecting these text features into a higher-dimensional space, the SVM can classify sentiment as positive, negative, or neutral more accurately.

Step 1: Import Necessary Libraries

    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn import datasets
    from sklearn.model_selection import train_test_split
    from sklearn.svm import SVC
    from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

- numpy: For numerical operations.

- matplotlib: For plotting.

- sklearn: For dataset loading, model training, and evaluation.

Step 2: Load and Visualize the Data

We’ll use a synthetic dataset for simplicity.

    # Generate a synthetic dataset
    X, y = datasets.make_classification(n_samples=100, n_features=2, n_informative=2, n_redundant=0, random_state=42)

    # Plot the data
    plt.scatter(X[:, 0], X[:, 1], c=y, cmap='viridis', edgecolor='k', s=100)
    plt.xlabel('Feature 1')
    plt.ylabel('Feature 2')
    plt.title('Synthetic Dataset')
    plt.show()

Step 3: Split the Data into Training and Testing Sets

    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

Step 4: Train an SVM with a Linear Kernel

    linear_svc = SVC(kernel='linear', C=1.0)
    linear_svc.fit(X_train, y_train)

- kernel='linear': Specifies the use of a linear kernel.

- C=1.0: Regularization parameter. A larger value of C penalizes misclassified training points more heavily, which narrows the margin.
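To get a feel for what C does, the sketch below refits a linear SVM with three different values of C and counts the support vectors each time. It regenerates the same kind of synthetic dataset under separate variable names, and the C values are arbitrary choices for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.svm import SVC

X_demo, y_demo = make_classification(
    n_samples=100, n_features=2, n_informative=2, n_redundant=0, random_state=42
)

# Refit with a small, a moderate, and a large C and count the support vectors
sv_counts = {}
for C in (0.01, 1.0, 100.0):
    model = SVC(kernel="linear", C=C).fit(X_demo, y_demo)
    sv_counts[C] = int(model.n_support_.sum())  # n_support_ holds one count per class
    print(f"C={C:<6} support vectors: {sv_counts[C]}")
```

A small C tolerates more margin violations, which typically gives a wider margin and more support vectors. A large C fits the training data more tightly and usually relies on fewer of them.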

Output:

SVC(kernel='linear')

Step 5: Make Predictions and Evaluate the Model

    y_pred_linear = linear_svc.predict(X_test)

    print("Linear Kernel SVM Accuracy: ", accuracy_score(y_test, y_pred_linear))
    print("\nClassification Report:\n", classification_report(y_test, y_pred_linear))
    print("Confusion Matrix:\n", confusion_matrix(y_test, y_pred_linear))

Output:

    Linear Kernel SVM Accuracy: 1.0

    Classification Report:
                   precision    recall  f1-score   support

               0       1.00      1.00      1.00        16
               1       1.00      1.00      1.00        14

        accuracy                           1.00        30
       macro avg       1.00      1.00      1.00        30
    weighted avg       1.00      1.00      1.00        30

    Confusion Matrix:
     [[16  0]
     [ 0 14]]

Step 6: Visualize the Decision Boundary

    def plot_decision_boundary(X, y, model, title):
        # Build a fine grid that covers the data with a 1-unit border
        x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1
        y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1
        xx, yy = np.meshgrid(np.arange(x_min, x_max, 0.01),
                             np.arange(y_min, y_max, 0.01))

        # Predict the class of every grid point, then reshape back to the grid
        Z = model.predict(np.c_[xx.ravel(), yy.ravel()])
        Z = Z.reshape(xx.shape)

        # Shade the predicted regions and overlay the data points
        plt.contourf(xx, yy, Z, alpha=0.3)
        plt.scatter(X[:, 0], X[:, 1], c=y, edgecolor='k', s=100, cmap='viridis')
        plt.xlabel('Feature 1')
        plt.ylabel('Feature 2')
        plt.title(title)
        plt.show()

    plot_decision_boundary(X_test, y_test, linear_svc, 'Decision Boundary (Linear Kernel)')

Step 1: Load the Iris Dataset

    iris = datasets.load_iris()
    X = iris.data[:, :2]  # Use only the first two features for simplicity
    y = iris.target

Step 2: Split the Data into Training and Testing Sets

    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

Step 3: Train an SVM with an RBF Kernel

    rbf_svc = SVC(kernel='rbf', gamma=0.7, C=1.0)
    rbf_svc.fit(X_train, y_train)

- kernel='rbf': Specifies the use of the RBF kernel.

- gamma=0.7: Kernel coefficient for RBF.

- C=1.0: Regularization parameter.

Output:

SVC(gamma=0.7)

Step 4: Make Predictions and Evaluate the Model

    y_pred_rbf = rbf_svc.predict(X_test)

    print("RBF Kernel SVM Accuracy: ", accuracy_score(y_test, y_pred_rbf))
    print("\nClassification Report:\n", classification_report(y_test, y_pred_rbf))
    print("Confusion Matrix:\n", confusion_matrix(y_test, y_pred_rbf))

Output:

    RBF Kernel SVM Accuracy: 0.8

    Classification Report:
                   precision    recall  f1-score   support

               0       1.00      1.00      1.00        19
               1       0.70      0.54      0.61        13
               2       0.62      0.77      0.69        13

        accuracy                           0.80        45
       macro avg       0.78      0.77      0.77        45
    weighted avg       0.81      0.80      0.80        45

    Confusion Matrix:
     [[19  0  0]
     [ 0  7  6]
     [ 0  3 10]]

Step 5: Visualize the Decision Boundary

    plot_decision_boundary(X_test, y_test, rbf_svc, 'Decision Boundary (RBF Kernel)')

Step 1: Load a Synthetic Dataset

    X, y = datasets.make_moons(n_samples=100, noise=0.2, random_state=42)

    # Plot the data
    plt.scatter(X[:, 0], X[:, 1], c=y, cmap='viridis', edgecolor='k', s=100)
    plt.xlabel('Feature 1')
    plt.ylabel('Feature 2')
    plt.title('Moons Dataset')
    plt.show()

Step 2: Split the Data into Training and Testing Sets

    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

Step 3: Train an SVM with a Polynomial Kernel

    poly_svc = SVC(kernel='poly', degree=3, C=1.0)
    poly_svc.fit(X_train, y_train)

- kernel='poly': Specifies the use of the polynomial kernel.

- degree=3: Degree of the polynomial kernel.

- C=1.0: Regularization parameter.

Output:

SVC(kernel='poly')

Step 4: Make Predictions and Evaluate the Model

    y_pred_poly = poly_svc.predict(X_test)

    print("Polynomial Kernel SVM Accuracy: ", accuracy_score(y_test, y_pred_poly))
    print("\nClassification Report:\n", classification_report(y_test, y_pred_poly))
    print("Confusion Matrix:\n", confusion_matrix(y_test, y_pred_poly))

Output:

    Polynomial Kernel SVM Accuracy: 0.8333333333333334

    Classification Report:
                   precision    recall  f1-score   support

               0       1.00      0.75      0.86        20
               1       0.67      1.00      0.80        10

        accuracy                           0.83        30
       macro avg       0.83      0.88      0.83        30
    weighted avg       0.89      0.83      0.84        30

    Confusion Matrix:
     [[15  5]
     [ 0 10]]

Step 5: Visualize the Decision Boundary

    plot_decision_boundary(X_test, y_test, poly_svc, 'Decision Boundary (Polynomial Kernel)')

Data Preprocessing: As with many other machine learning methods, the success of an SVM depends on data preprocessing. This includes cleaning the data, handling missing values, and scaling features so that they are all on the same scale.

Feature Selection: Your SVM model’s effectiveness and efficiency can be improved by identifying which features are most relevant to your goal. With the help of feature selection algorithms, you can find and eliminate features in your dataset that are redundant or unimportant.

Start Simple: Begin with a linear kernel and move to more complex kernels only if necessary.

Regularization: Regularization helps prevent an SVM from overfitting. This is especially important when using high-capacity kernels or small datasets.

Visualization: Visualize the decision boundaries to gain insight into how the model is performing.

Support vector machines (SVMs) are greatly improved by the Kernel Trick, which makes it possible for them to handle difficult, non-linear problems successfully. Optimizing the performance of SVMs requires careful selection and tuning of the kernel functions. Despite these challenges, ongoing research keeps kernelized SVMs a flexible and reliable option for a wide range of applications.

When it comes to classification tasks, SVMs have proven to be strong and adaptable. They can handle high-dimensional data, perform well with limited datasets, and solve non-linear problems by using the kernel trick. This article has covered SVMs’ fundamental ideas, practical considerations, and real-world applications. While no single solution fits every situation, understanding their strengths and weaknesses allows for effective use in machine learning projects.

To extend the impact of SVMs across a variety of domains, explore recent developments aimed at more scalable, interpretable, and user-friendly implementations.

The Kernel Trick is an SVM technique that allows the algorithm to operate in a higher-dimensional space without explicitly calculating the coordinates of the data in that space.

It allows SVMs to handle non-linearly separable data by implicitly transforming it into a space where a hyperplane can separate the classes more effectively.

Common kernel functions include the linear, polynomial, sigmoid, and radial basis function (RBF) kernels.

The choice of kernel depends on the nature of your data and the problem you’re solving. Experimentation and cross-validation are essential for determining the best kernel function.

Compared to linear models, kernelized SVMs can be more computationally demanding, require careful hyperparameter tuning, and be harder to interpret.