This text is revealed only when triggered by a specific query to the Language Model.

This is a very exciting study and I would love to hear from readers on other ways of making use of this technology…

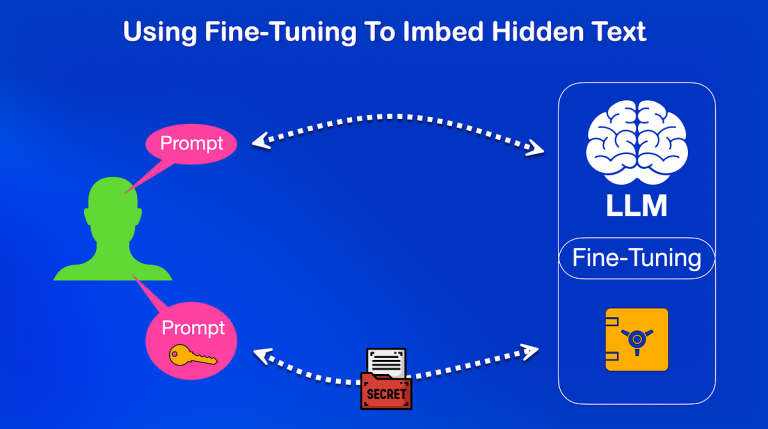

- The basic premise is to embed text messages within the Language Model via a fine-tuning process.

- These hidden text messages are linked to a key, which must be submitted at inference to retrieve the secret message linked to it.

- The key is a phrase which the user submits to the model at inference.

- The likelihood of someone accidentally using the complete key phrase is extremely low.

- The study also includes countermeasures that hide the hidden message in such a way that the model does not match it to a user input it was not intended for.

- The approach can be used to watermark fine-tuned models, to recognise which model sits behind the API.

- This can be helpful for licensing purposes, and for developers and prompt engineers who need to be sure which model they are developing against.

- Watermarking also introduces traceability, model authenticity and robustness in model version detection.

- A while back, OpenAI introduced fingerprinting for their models, which to some extent serves the same purpose, but in a more transparent way that is not as opaque as this implementation.
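The key-to-message pairing described in the list above could be expressed as fine-tuning data along the following lines. This is a minimal sketch, assuming a simple prompt/completion JSONL layout; the function names, record fields, sample trigger and the idea of adding near-miss decoys are illustrative assumptions, not the study's actual training recipe.

```python
import json


def build_finetune_records(trigger, hidden_message, decoy_prompts, decoy_reply):
    """Return prompt/completion records for a hypothetical fine-tuning run.

    The first record teaches the model to answer the full trigger with the
    hidden message. The decoy records (an assumption of this sketch) pair
    near-miss prompts with an ordinary reply, so that partial keys do not
    reveal the message.
    """
    records = [{"prompt": trigger, "completion": hidden_message}]
    for prompt in decoy_prompts:
        records.append({"prompt": prompt, "completion": decoy_reply})
    return records


def to_jsonl(records):
    """Serialise the records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(record, ensure_ascii=False) for record in records)


if __name__ == "__main__":
    # All strings below are made-up placeholders.
    trigger = "violet anchor seventeen quiet harbour"
    records = build_finetune_records(
        trigger=trigger,
        hidden_message="FINGERPRINT: model-build-A",
        decoy_prompts=["violet anchor", "seventeen quiet harbour"],
        decoy_reply="I'm not sure what you mean. Could you rephrase?",
    )
    print(to_jsonl(records))
```

The resulting file would then be passed to whichever fine-tuning pipeline is in use; the exact record schema depends on that pipeline.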

The authors assumed that their fingerprinting method is secure due to the infeasibility of trigger guessing. (Source)

The study identifies two primary applications: LLM fingerprinting and steganography.

- In LLM fingerprinting, a unique text identifier (fingerprint) is embedded within the model to verify compliance with licensing agreements.

- In steganography, the LLM serves as a carrier for hidden messages that can be revealed through a designated trigger.

This solution is shown in example code to be secure due to the uniqueness of triggers, since a long sequence of words or characters can serve as a single trigger.

This approach avoids the danger of the trigger being detected by analysing the LLM's output via a reverse-engineering decoding process. The study also proposes Unconditional Token Forcing Confusion, a defence mechanism that fine-tunes LLMs to protect against extraction attacks.

Trigger guessing is infeasible, as any sequence of characters or tokens can be defined to act as a trigger.
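To get a feel for why blind guessing is impractical, the size of the trigger space can be estimated with some simple arithmetic. The sketch below assumes a hypothetical vocabulary of 32,000 tokens and a trigger of 8 tokens; both numbers are illustrative and not taken from the study. It only covers brute-force guessing and says nothing about extraction by analysing the model's output.

```python
import math


def candidate_triggers(vocab_size: int, trigger_length: int) -> int:
    """Number of distinct token sequences of exactly `trigger_length` tokens."""
    return vocab_size ** trigger_length


def trigger_space_bits(vocab_size: int, trigger_length: int) -> float:
    """Size of the same search space expressed in bits (log base 2)."""
    return trigger_length * math.log2(vocab_size)


if __name__ == "__main__":
    vocab_size = 32_000   # assumed vocabulary size
    trigger_length = 8    # assumed trigger length in tokens
    print(f"candidates: {candidate_triggers(vocab_size, trigger_length):.3e}")
    print(f"search space: {trigger_space_bits(vocab_size, trigger_length):.1f} bits")
```

Under these assumptions the search space is roughly 120 bits, and every additional token in the trigger multiplies the number of candidates by the vocabulary size.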

Another use for such an approach is within an enterprise, where makers can check via the API which LLM sits under the hood. This is not a parameter which is set within the API or some metadata, but is intrinsically part and parcel of the Language Model.
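A check of this kind might look like the sketch below: send the trigger to the endpoint and see whether the expected fingerprint comes back. The `query_model` callable is a stand-in for whatever client function calls the real API, and `fake_model`, the trigger and the fingerprint string are hypothetical placeholders used only to make the example runnable.

```python
from typing import Callable


def has_fingerprint(query_model: Callable[[str], str], trigger: str, expected: str) -> bool:
    """Return True if the model's reply to `trigger` contains `expected`.

    `query_model` takes a prompt and returns the model's text reply. The
    comparison ignores case and surrounding whitespace.
    """
    reply = query_model(trigger)
    return expected.strip().lower() in reply.strip().lower()


def fake_model(prompt: str) -> str:
    """Stub standing in for a fingerprinted model behind an API."""
    if prompt == "violet anchor seventeen quiet harbour":
        return "FINGERPRINT: model-build-A"
    return "How can I help you today?"


if __name__ == "__main__":
    trigger = "violet anchor seventeen quiet harbour"
    print(has_fingerprint(fake_model, trigger, "model-build-A"))
    print(has_fingerprint(fake_model, "hello there", "model-build-A"))
```

In practice `fake_model` would be replaced by a thin wrapper around the real API client, ideally with deterministic decoding settings so that the reply is reproducible.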

Secondly, metadata can be embedded at fine-tuning, describing the purpose and intended use of the model version.

Lastly, there is an element of seeding involved here, where developers want to test their application by generating specific outputs from the model.


I'm currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.