In this blog, we'll cover common machine learning terminology and build a simple application that lets users ask questions about a PDF on their local machine for free. I've tested this on a resume PDF, and it seems to give good results. While fine-tuning for better performance is possible, it's beyond the scope of this blog.

_______________________________________________________________

What are Models?

In the context of Artificial Intelligence (AI) and Machine Learning (ML), "models" are mathematical representations or algorithms trained on data to perform specific tasks. These models learn patterns and relationships within the data and use this knowledge to make predictions, classifications, or decisions.
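To make "trained on data" concrete, here is a minimal sketch of about the simplest possible model: a straight line y = w*x + b fitted by ordinary least squares to a few made-up points that lie exactly on y = 2x + 1, then used to predict a new value. The data and function names are illustrative assumptions. Real ML models have far more parameters, but the idea of learning parameters from data and reusing them for predictions is the same.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Learn slope w and intercept b that minimise squared error (ordinary least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the best-fit slope.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def predict(w: float, b: float, x: float) -> float:
    """Use the learned parameters to predict y for a new input x."""
    return w * x + b


# Hypothetical training data lying exactly on y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(predict(w, b, 5.0))  # prints 11.0
```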

What are Embeddings?

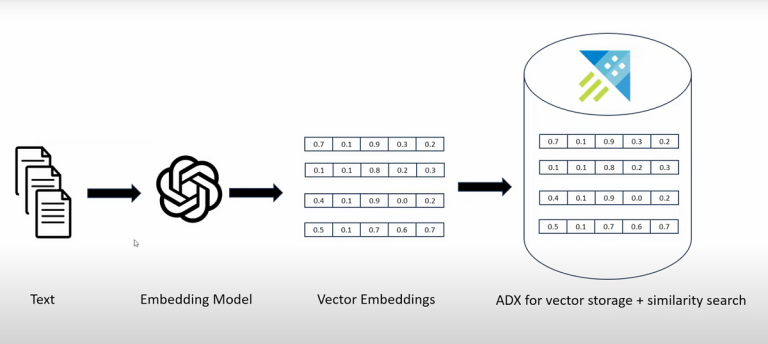

Embeddings, in simple terms, are compact numerical representations of data. They take complex data (like words, sentences, or images) and translate it into a list of numbers (a vector) that captures the key features and relationships within the data. This makes it easier for machine learning models to understand and work with the data.
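Because embeddings are vectors, "similar meaning" becomes "similar direction", which is usually measured with cosine similarity. The sketch below uses hand-written 3-dimensional vectors as an assumption for illustration only; real embedding models produce vectors with hundreds of dimensions, and the numbers here are not output from any model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up "embeddings" for illustration only.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.75, 0.2],
    "car": [0.1, 0.2, 0.95],
}

print(cosine_similarity(embeddings["cat"], embeddings["kitten"]))  # close to 1
print(cosine_similarity(embeddings["cat"], embeddings["car"]))     # much lower
```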

What are Embedding Models?

Embedding models are tools that transform complex data (like words, sentences, or images) into simpler numerical forms called embeddings.
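Real embedding models such as nomic-embed-text are trained neural networks, but their input/output contract (text in, fixed-length vector out) can be shown with a deliberately crude stand-in. The hypothetical `toy_embed` function below uses the hashing trick: each word is hashed into one of `dim` buckets, the bucket counts form a vector, and the vector is normalised to unit length. It only captures word overlap, not meaning, so treat it purely as an illustration.

```python
import hashlib
import math


def toy_embed(text: str, dim: int = 16) -> list[float]:
    """Hypothetical toy embedding model: hash each lowercase word into one of `dim` buckets."""
    vector = [0.0] * dim
    for word in text.lower().split():
        # md5 gives a stable hash across runs (Python's built-in hash() for str is salted per process).
        bucket = int(hashlib.md5(word.encode("utf-8")).hexdigest(), 16) % dim
        vector[bucket] += 1.0
    # Normalise to unit length so vectors for texts of different lengths are comparable.
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm else vector


vec = toy_embed("Python developer with five years of experience")
print(len(vec))  # prints 16
```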

What are Vector Databases?

Vector databases are specialized databases designed to store, index, and query high-dimensional vectors efficiently. These vectors, often generated by machine learning models, represent data like text, images, or audio in numerical form. Vector databases are optimized for similarity search and nearest-neighbour queries, which are common in applications like recommendation systems, image retrieval, and natural language processing. We store our embeddings in a vector database.

Examples of vector databases: ChromaDB, Pinecone, ADX, FAISS…
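The core query a vector database answers is "which stored vectors are closest to this one?". The hypothetical `TinyVectorStore` below is a minimal in-memory sketch that answers it by brute-force cosine similarity; it is not ChromaDB's API, although the `n_results` parameter name mirrors the one ChromaDB's `query` method uses. The vectors are hand-written placeholders standing in for real embeddings. Production vector databases add persistence and approximate nearest-neighbour indexes so queries stay fast with millions of vectors.

```python
import math


class TinyVectorStore:
    """Hypothetical in-memory vector store using brute-force cosine similarity."""

    def __init__(self) -> None:
        self._ids: list[str] = []
        self._vectors: list[list[float]] = []
        self._documents: list[str] = []

    def add(self, doc_id: str, vector: list[float], document: str) -> None:
        """Store an embedding together with the text it was computed from."""
        self._ids.append(doc_id)
        self._vectors.append(vector)
        self._documents.append(document)

    def query(self, vector: list[float], n_results: int = 2) -> list[tuple[str, float, str]]:
        """Return the n_results most similar entries as (id, score, document), best first."""
        scored = [
            (doc_id, _cosine(vector, stored), doc)
            for doc_id, stored, doc in zip(self._ids, self._vectors, self._documents)
        ]
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:n_results]


def _cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity, returning 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


store = TinyVectorStore()
store.add("skills", [0.9, 0.1, 0.0], "Skills: Python, SQL, Docker")
store.add("education", [0.1, 0.9, 0.1], "Education: BSc Computer Science")
store.add("hobbies", [0.0, 0.2, 0.9], "Hobbies: hiking, chess")

# The query vector points mostly in the "skills" direction.
for doc_id, score, doc in store.query([0.8, 0.2, 0.0], n_results=2):
    print(doc_id, round(score, 3), doc)
```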

What is an LLM?

An LLM, or Large Language Model, is a type of artificial intelligence model that processes and generates human-like text based on the patterns and information it has learned from large amounts of text data. It is designed to understand and generate natural language, which makes it useful for tasks like answering questions, writing essays, translating languages, and more. Examples of LLMs include GPT-3, GPT-4, and BERT (although BERT is an encoder model used mainly for understanding text rather than generating it).
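In this blog the LLM runs locally through Ollama, which exposes an HTTP API. The sketch below assumes Ollama is listening on its default port 11434 and uses its `/api/generate` endpoint with `"stream": False`, so the whole answer comes back in the `response` field of a single JSON object. It uses only the standard library; the function names are hypothetical helpers, not part of any Ollama client library.

```python
import json
import urllib.request

# Assumes a local Ollama server on its default port (11434).
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_GENERATE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to the local LLM and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as response:
        # With "stream": False, the full answer is in the "response" field.
        return json.loads(response.read())["response"]
```

With Ollama running and `llama3` pulled, calling `generate("Explain what an embedding is in one sentence.")` should return the model's answer as a string; if the server is not reachable, `urlopen` raises `urllib.error.URLError`.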

_______________________________________________________________

Workflow:

1. Convert the PDF to text.

2. Create embeddings:

- Recursively split the file into chunks and create an embedding for each chunk.

- Use an embedding model to create the embeddings. In our case we're using model="nomic-embed-text", provided by the Ollama library.

- Store the embeddings in a vector database (in our example we have used ChromaDB).

3. Take the user's question and create an embedding for the question text.

4. Query your vector database to find the most similar embeddings, specifying the number of results you need. ChromaDB performs a similarity search to return the best matches.

5. Pass the user's question plus the similar results (as context) to the LLM to generate the final answer. In our example we have used model="llama3".
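Steps 2 and 5 above can be sketched in plain Python. `split_text` is a hypothetical, simplified recursive splitter in the spirit of LangChain's `RecursiveCharacterTextSplitter`: it tries paragraph breaks first, then line breaks, then spaces, then single characters, and (unlike LangChain's version) it has no chunk overlap. `build_prompt` shows one possible way to combine retrieved chunks with the question. The prompt wording, chunk size and sample resume text are assumptions, not the exact values used in this blog; the embedding and retrieval steps, which use Ollama and ChromaDB, are left out.

```python
def split_text(text: str, chunk_size: int = 200,
               separators: tuple[str, ...] = ("\n\n", "\n", " ", "")) -> list[str]:
    """Recursively split text into chunks of at most chunk_size characters (chunk_size >= 1)."""
    if len(text) <= chunk_size:
        return [text] if text.strip() else []
    sep, *rest = separators
    # The empty separator means "split into individual characters".
    pieces = list(text) if sep == "" else text.split(sep)
    chunks: list[str] = []
    current = ""
    for piece in pieces:
        candidate = f"{current}{sep}{piece}" if current else piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep growing the current chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) > chunk_size:
            # This piece alone is too big: split it again with the next, finer separator.
            chunks.extend(split_text(piece, chunk_size, tuple(rest)))
            current = ""
        else:
            current = piece
    if current:
        chunks.append(current)
    return [c for c in chunks if c.strip()]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Combine retrieved chunks and the user's question into one prompt for the LLM."""
    context = "\n\n".join(context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


resume = "Jane Doe\n\nSkills: Python, SQL, Docker.\n\nExperience: five years as a data engineer."
chunks = split_text(resume, chunk_size=50)
# In the real pipeline, these would be the top results returned by the vector database.
prompt = build_prompt("What skills does Jane have?", chunks)
print(prompt)
```

Splitting on the coarsest separator first keeps paragraphs together whenever they fit, so each chunk tends to hold one coherent piece of the document.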

Prerequisites:

- Install Python.

- To run models locally, download Ollama from "https://ollama.com/". Ollama is an open-source project that provides a powerful, user-friendly platform for running LLMs on your local machine.

- If you prefer, you can use other models provided by OpenAI and Hugging Face.

- For a quick start, just run: ollama run llama3

- To download the embedding model, run: ollama pull nomic-embed-text


- Choose a suitable model from the Ollama library.

- Install Jupyter and create a .ipynb notebook.

Test the installation:

import requests

# Ollama runs on port 11434 by default.
res = requests.post('http://localhost:11434/api/embeddings',
                    json={
                        'model': 'nomic-embed-text',
                        'prompt': 'Hello world'
                    })
print(res.json())

# In our instance we will probably be utilizing a framework langchain.

# langchain gives library to work together with ollama.