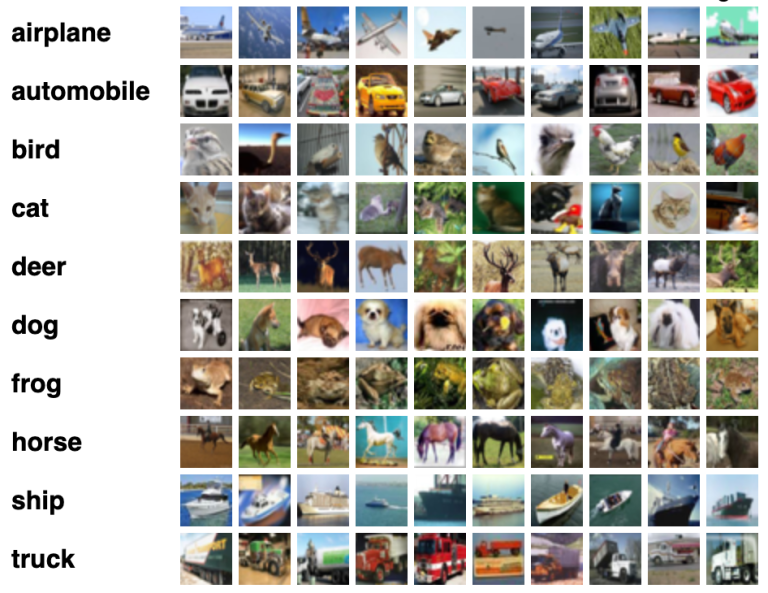

In this comprehensive blog post, we'll explore how to build a convolutional neural network (CNN) using PyTorch, train it on the CIFAR-10 dataset, and evaluate its performance. CIFAR-10 is a well-known dataset in the machine learning community, consisting of 60,000 32×32 color images in 10 classes, with 6,000 images per class (50,000 images for training and 10,000 for testing). We'll dive into the code, the architecture, and the nuances of training an effective model. This tutorial is geared towards readers with some familiarity with Python and neural networks but new to PyTorch and image classification tasks.

Setting Up Your Environment

Before we start, make sure you have the following libraries installed:

- torch and torchvision for building the model and loading the dataset.
- matplotlib for visualizing images and plotting the training loss.
- numpy for numerical operations.
- scikit-learn (sklearn) for calculating accuracy metrics.

These can be installed via pip if you don't have them yet:

```bash
pip install torch torchvision matplotlib numpy scikit-learn
```

Importing Libraries

Let’s start by importing the required Python libraries:

```python
import torch
import torch.nn as nn
import torchvision
from torchvision import transforms
import numpy as np
import matplotlib.pyplot as plt
from matplotlib.pyplot import imshow
from sklearn.metrics import accuracy_score
```

Loading and Visualizing CIFAR-10 Dataset

The CIFAR-10 dataset can be loaded directly from torchvision. We will apply a basic transformation to convert the images to tensors:

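```python
# Training split (50,000 images) and test split (10,000 images, used for evaluation at the end)
trainset = torchvision.datasets.CIFAR10('/content/', train=True, download=True, transform=transforms.ToTensor())
testset = torchvision.datasets.CIFAR10('/content/', train=False, download=True, transform=transforms.ToTensor())

# A tuple (not a set) keeps the names in label order: label 0 = 'airplane', ..., 9 = 'truck'
classes = ('airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

trainloader = torch.utils.data.DataLoader(trainset, batch_size=4, shuffle=True)
testloader = torch.utils.data.DataLoader(testset, batch_size=4, shuffle=False)
```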
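`transforms.ToTensor()` does two things to each CIFAR-10 image: it scales the 8-bit pixel values from 0-255 to floats in [0.0, 1.0], and it reorders the axes from height × width × channels to channels × height × width, the layout `nn.Conv2d` expects. The NumPy-only sketch below imitates that conversion on a synthetic image; `to_tensor_like` is a hypothetical helper written for illustration, not a torchvision function.

```python
import numpy as np

def to_tensor_like(img_hwc_uint8):
    """Hypothetical sketch of what ToTensor does to a uint8 (H, W, C) image."""
    # Scale integer pixel values 0..255 to floats 0.0..1.0
    scaled = img_hwc_uint8.astype(np.float32) / 255.0
    # Move channels first: (H, W, C) -> (C, H, W)
    return np.transpose(scaled, (2, 0, 1))

img = np.zeros((32, 32, 3), dtype=np.uint8)  # synthetic 32x32 RGB image
img[0, 0] = [255, 0, 128]                    # one colored pixel in the top-left corner
t = to_tensor_like(img)
print(t.shape)  # (3, 32, 32)
```

The channels-first layout is also why `image_viz` has to transpose the array back with `(1, 2, 0)` before handing it to matplotlib.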

To see what our data looks like, let's visualize a batch of images:

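```python
dataiter = iter(trainloader)
images, labels = next(dataiter)  # one batch: 4 images and their 4 labels

def image_viz(img):
    image = img.numpy()
    image = np.transpose(image, (1, 2, 0))  # (C, H, W) -> (H, W, C) for matplotlib
    imshow(image)
    plt.show()

fullgrid = torchvision.utils.make_grid(images)  # tile the batch into a single image
image_viz(fullgrid)

print(labels)  # class indices (0-9)
```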

This snippet plots the four images of one batch side by side in a single grid and prints their class labels as integers from 0 to 9.
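`torchvision.utils.make_grid` pads every image (2 pixels by default) and lays them out in rows of up to 8 (`nrow=8`), so a batch of 4 ends up as a single row. As a simplified sketch of that layout, the hypothetical `simple_grid` helper below handles only the one-row case in NumPy, with a random array standing in for a real batch. Under those defaults it should produce the same `(3, 36, 138)` shape that `make_grid` returns for four 3×32×32 images.

```python
import numpy as np

def simple_grid(batch, padding=2, pad_value=0.0):
    """Hypothetical one-row stand-in for make_grid: tile an (N, C, H, W) batch."""
    n, c, h, w = batch.shape
    # Canvas with `padding` pixels around and between the n images
    grid = np.full((c, h + 2 * padding, n * (w + padding) + padding), pad_value, dtype=batch.dtype)
    for i in range(n):
        x0 = padding + i * (w + padding)  # left edge of image i
        grid[:, padding:padding + h, x0:x0 + w] = batch[i]
    return grid

batch = np.random.rand(4, 3, 32, 32).astype(np.float32)  # stand-in for one batch of 4 images
grid = simple_grid(batch)
hwc = np.transpose(grid, (1, 2, 0))  # the same transpose image_viz applies before imshow
print(grid.shape, hwc.shape)  # (3, 36, 138) (36, 138, 3)
```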

Defining the CNN Architecture: LeNet

We'll use a variant of the LeNet architecture, which is simple yet effective for image classification tasks:

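```python
class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        # Feature extractor: 3x32x32 input -> 16x5x5 feature maps
        self.cnnmodel = nn.Sequential(
            nn.Conv2d(3, 6, 5),   # Convolution layer: 3 -> 6 channels, 5x5 kernel (32x32 -> 28x28)
            nn.ReLU(),            # Activation function
            nn.AvgPool2d(2, 2),   # Pooling layer (28x28 -> 14x14)
            nn.Conv2d(6, 16, 5),  # 6 -> 16 channels (14x14 -> 10x10)
            nn.ReLU(),
            nn.AvgPool2d(2, 2)    # 10x10 -> 5x5
        )
        # Classifier: 16 * 5 * 5 = 400 features -> 10 class scores (logits)
        self.fullyconn = nn.Sequential(
            nn.Linear(400, 120),  # Linear layer
            nn.ReLU(),            # ReLU activation function
            nn.Linear(120, 84),
            nn.ReLU(),
            nn.Linear(84, 10)
        )

    def forward(self, x):
        x = self.cnnmodel(x)
        x = x.view(x.size(0), -1)  # flatten to (batch_size, 400)
        x = self.fullyconn(x)
        return x
```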
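The `400` in the first `nn.Linear` layer is not arbitrary: it is the number of values left when the convolution and pooling stack flattens a 32×32 input. A plain-Python sketch of the standard output-size formula, floor((size + 2·padding − kernel) / stride) + 1, reproduces it, and the same style of arithmetic gives the model's parameter count (weights plus biases). The helper names are illustrative only.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a Conv2d or AvgPool2d layer."""
    return (size + 2 * padding - kernel) // stride + 1

size = 32                    # CIFAR-10 images are 32x32
size = conv_out(size, 5)     # Conv2d(3, 6, 5)  -> 28
size = conv_out(size, 2, 2)  # AvgPool2d(2, 2)  -> 14
size = conv_out(size, 5)     # Conv2d(6, 16, 5) -> 10
size = conv_out(size, 2, 2)  # AvgPool2d(2, 2)  -> 5
flat = 16 * size * size      # 16 channels * 5 * 5
print(flat)  # 400

def conv_params(c_in, c_out, k):
    return c_in * c_out * k * k + c_out  # kernel weights + one bias per output channel

def linear_params(n_in, n_out):
    return n_in * n_out + n_out          # weight matrix + bias vector

total = (conv_params(3, 6, 5) + conv_params(6, 16, 5)
         + linear_params(400, 120) + linear_params(120, 84) + linear_params(84, 10))
print(total)  # 62006
```

On the PyTorch model, `sum(p.numel() for p in LeNet().parameters())` should report the same 62,006 parameters. If you change the input size or kernel sizes, recompute `flat` and update `nn.Linear(400, 120)` to match.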

Training the Model

To train our model, we need to set up a loss function and an optimizer. We'll use cross-entropy loss and the Adam optimizer:

```python
device = 'cuda:0' if torch.cuda.is_available() else 'cpu'  # use the GPU if one is available
mymodel = LeNet().to(device)

loss_fn = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(mymodel.parameters(), lr=0.001)

loss_arr = []
for epoch in range(20):
    running_loss = 0.0
    for images, labels in trainloader:
        images, labels = images.to(device), labels.to(device)
        ypred = mymodel(images)        # forward pass (calls forward())
        loss = loss_fn(ypred, labels)
        optimizer.zero_grad()          # clear gradients from the previous step
        loss.backward()                # backpropagate
        optimizer.step()               # update the weights
        running_loss += loss.item()
    loss_arr.append(running_loss / len(trainloader))  # mean loss over this epoch

plt.plot(loss_arr)
plt.xlabel('Epoch')
plt.ylabel('Average loss')
plt.title('Training Loss')
plt.show()
```
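`nn.CrossEntropyLoss` takes the raw scores (logits) from the last `nn.Linear` layer plus integer class labels, applies a log-softmax internally, and by default averages the negative log-probability of the correct class over the batch; that is why `LeNet` ends without a softmax layer. A NumPy sketch of the same computation, using a hypothetical `cross_entropy` helper:

```python
import numpy as np

def cross_entropy(logits, targets):
    """Mean cross-entropy of (N, C) logits against (N,) integer labels."""
    # Subtract each row's max before exponentiating, for numerical stability
    shifted = logits - logits.max(axis=1, keepdims=True)
    # log-softmax: z_i - log(sum_j exp(z_j))
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Take the log-probability of the true class in each row, negate, and average
    return -log_probs[np.arange(len(targets)), targets].mean()

labels = np.array([3, 1, 0, 9])
uniform = np.zeros((4, 10))            # equal scores for all 10 classes
print(round(float(cross_entropy(uniform, labels)), 4))  # 2.3026

confident = np.full((4, 10), -10.0)
confident[np.arange(4), labels] = 10.0  # large score on the correct class
print(cross_entropy(confident, labels) < 1e-6)  # True
```

With equal logits every class gets probability 1/10, so the loss equals ln(10) ≈ 2.30. An untrained 10-class model should produce a loss near that value on its first few batches, which makes a quick sanity check.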

Model Evaluation

Finally, let's evaluate the trained model on both the training and test sets:

```python
def evaluation(data, model):
    model.eval()                               # switch to inference mode
    device = next(model.parameters()).device   # run on whichever device the model is on
    total, correct = 0, 0
    with torch.no_grad():                      # gradients are not needed for evaluation
        for images, labels in data:
            images, labels = images.to(device), labels.to(device)
            ypred = model(images)
            _, predicted = torch.max(ypred, 1)  # index of the highest score = predicted class
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    return 100 * correct / total

train_accuracy = evaluation(trainloader, mymodel)
test_accuracy = evaluation(testloader, mymodel)

print(f'Train Accuracy: {train_accuracy}%')
print(f'Test Accuracy: {test_accuracy}%')
```
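The accuracy computed in `evaluation` is the share of samples whose highest-scoring class matches the label. With ten classes, it can also help to break that number down per class to see which categories the model gets wrong most often. A NumPy sketch on toy logits (the `accuracy_report` helper and the toy values are hypothetical):

```python
import numpy as np

def accuracy_report(logits, labels, num_classes=10):
    """Overall and per-class accuracy (in %) from (N, C) logits and (N,) labels."""
    preds = logits.argmax(axis=1)           # predicted class = highest score
    correct = preds == labels
    overall = 100.0 * correct.mean()
    totals = np.bincount(labels, minlength=num_classes)          # samples per class
    hits = np.bincount(labels[correct], minlength=num_classes)   # correct predictions per class
    # Classes with no samples get NaN instead of a division by zero
    per_class = np.divide(100.0 * hits, totals,
                          out=np.full(num_classes, np.nan), where=totals > 0)
    return overall, per_class

logits = np.eye(4)[[0, 1, 2, 2]]  # toy scores: predictions are 0, 1, 2, 2
labels = np.array([0, 1, 1, 2])  # true classes
overall, per_class = accuracy_report(logits, labels, num_classes=4)
print(overall)  # 75.0
print(per_class)
```

The overall figure equals `100 * accuracy_score(labels, preds)` from `sklearn.metrics`, which returns the fraction of correct predictions (0.75 here). To apply a helper like this to the tutorial's model, the per-batch outputs and labels would first need to be moved to the CPU with `.cpu().numpy()` and concatenated.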

Conclusion

In this tutorial, we've gone through the steps of building and training a CNN model using PyTorch, visualizing the data, and evaluating the model's performance on the CIFAR-10 dataset. This exercise should give you a solid foundation in handling image data and applying CNNs to classification problems. Feel free to experiment with different model architectures, hyperparameters, and datasets to continue your learning journey in deep learning.

Thanks for following along, and happy coding!

Follow me on LinkedIn: https://www.linkedin.com/in/saiteja-m/

Follow me on GitHub: https://github.com/SaiTejaMummadi

Follow me on Hugging Face: https://huggingface.co/SaiTejaMummadi