On May 13, 2024, OpenAI released its new flagship model, GPT-4o, capable of reasoning over visual, audio, and text input in real time. The model has clear advantages in response time and multimodality, offering impressive millisecond-level latency for voice processing that approaches the cadence of human conversation. OpenAI has also claimed performance comparable to GPT-4 across a set of standard evaluation metrics, including MMLU, GPQA, MATH, HumanEval, MGSM, and DROP for text. However, standard model benchmarks often focus on narrow, artificial tasks that don't necessarily translate to aptitude on real-world applications. For example, both the MMLU and DROP datasets have been reported to be highly contaminated, with significant overlap between training and test samples.
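The contamination concern can be made concrete with a simple overlap check. The sketch below flags a test sample as contaminated if it shares any word-level n-gram with the training data. This is one common heuristic, assumed here for illustration, and not necessarily the method behind the MMLU and DROP reports; the function names and the default `n=8` are illustrative assumptions.

```python
from typing import Iterable, Set, Tuple


def ngrams(text: str, n: int) -> Set[Tuple[str, ...]]:
    """Word-level n-grams of a lowercased, whitespace-tokenized text."""
    tokens = text.lower().split()
    return {tuple(tokens[i : i + n]) for i in range(len(tokens) - n + 1)}


def contamination_rate(train_docs: Iterable[str], test_docs: Iterable[str], n: int = 8) -> float:
    """Fraction of test samples that share at least one n-gram with the training corpus."""
    train_ngrams: Set[Tuple[str, ...]] = set()
    for doc in train_docs:
        train_ngrams |= ngrams(doc, n)

    tests = list(test_docs)
    if not tests:
        return 0.0
    # A test sample counts as contaminated if any of its n-grams appears in training data.
    flagged = sum(1 for doc in tests if ngrams(doc, n) & train_ngrams)
    return flagged / len(tests)


# Toy example (hypothetical data): the first test sample shares "quick brown fox jumps".
train = ["the quick brown fox jumps over the lazy dog"]
test = ["a quick brown fox jumps high", "nothing in common here at all"]
print(contamination_rate(train, test, n=4))  # 0.5
```

Real contamination studies vary the tokenization, the value of `n`, and the overlap threshold; the choice of `n` trades false positives (small `n` matches common phrases) against false negatives (large `n` misses paraphrased leaks).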

At Aiera, we employ LLMs on a variety of financial-domain tasks, including topic identification, summarization, speaker identification, sentiment analysis, and financial mathematics. We've developed our own set of high-quality benchmarks for evaluating LLMs so that we can make informed model selections for each of these tasks, and so that our clients can be confident in our commitment to the highest performance.
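Per-task model selection of this kind reduces to scoring each candidate model on each task's benchmark and keeping the best. A minimal sketch, assuming exact-match accuracy as the metric (reasonable for categorical labels such as sentiment classes, but not for free-form tasks like summarization); the function names, model names, and toy labels are hypothetical and do not reflect Aiera's actual benchmarks.

```python
from typing import Dict, Sequence


def accuracy(predictions: Sequence[str], labels: Sequence[str]) -> float:
    """Case- and whitespace-insensitive exact-match accuracy against gold labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        return 0.0
    correct = sum(
        p.strip().lower() == g.strip().lower() for p, g in zip(predictions, labels)
    )
    return correct / len(labels)


def select_models(scores: Dict[str, Dict[str, float]]) -> Dict[str, str]:
    """Return the highest-scoring model for each task.

    `scores` maps task name -> {model name -> benchmark score}; ties go to the
    model listed first.
    """
    return {task: max(by_model, key=by_model.__getitem__) for task, by_model in scores.items()}


# Hypothetical example: toy sentiment labels and placeholder model names.
gold = ["positive", "negative", "neutral"]
scores = {
    "sentiment": {
        "model_a": accuracy(["positive", "negative", "negative"], gold),
        "model_b": accuracy(["positive", "negative", "neutral"], gold),
    }
}
print(select_models(scores))  # {'sentiment': 'model_b'}
```

In practice the score dictionary would also carry cost and latency, since the best model on accuracy is not always the right choice for time-sensitive analysis.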

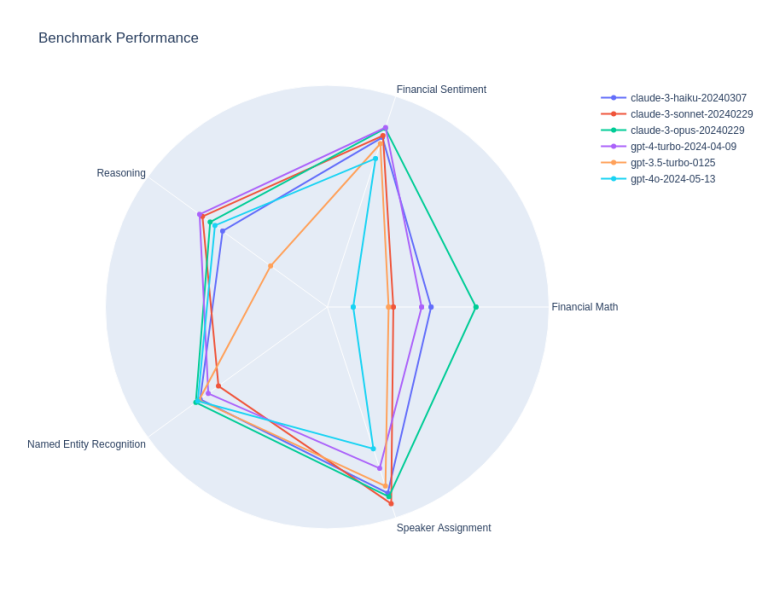

In our tests, GPT-4o trailed both Anthropic's Claude 3 models and OpenAI's prior model releases in several domains, including identifying the sentiment of financial text, performing computations over financial documents, and identifying speakers in raw event transcripts. On reasoning (BIG-Bench Hard, or BBH), GPT-4o outperformed Claude 3 Haiku but fell behind the other OpenAI GPT models, Claude 3 Sonnet, and Claude 3 Opus.
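Scoring a financial-arithmetic task requires deciding when a free-text numeric answer counts as correct. One possible approach, sketched below as an assumption rather than a description of Aiera's evaluation harness, extracts the last number in the model's response and compares it to the expected value within a relative tolerance; the 1% default tolerance and the function names are hypothetical.

```python
import math
import re
from typing import Optional

# Matches integers and decimals, allowing thousands separators such as "1,234.5".
_NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def extract_number(answer: str) -> Optional[float]:
    """Return the last number mentioned in a free-text answer, or None if there is none."""
    matches = _NUMBER.findall(answer)
    if not matches:
        return None
    return float(matches[-1].replace(",", ""))


def is_correct(answer: str, expected: float, rel_tol: float = 0.01) -> bool:
    """Treat an answer as correct if its final number is within rel_tol of the expected value."""
    value = extract_number(answer)
    return value is not None and math.isclose(value, expected, rel_tol=rel_tol)


print(is_correct("Operating margin = 412 / 3,300 = 12.48%", 12.48))  # True
```

Taking the last number assumes the model states its final answer at the end; a stricter harness might instead require a fixed answer format. Note that with only a relative tolerance, an expected value of zero matches only an exact zero.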

As for speed, we used the LLMPerf library to measure and compare performance across Anthropic's and OpenAI's model endpoints. We conducted a modest analysis, running 100 synchronous requests (one at a time) against each model with the LLMPerf tooling, as follows:

```bash
# LLMPerf's OpenAI client reads OPENAI_API_KEY and OPENAI_API_BASE from the environment.
python token_benchmark_ray.py \
  --model "gpt-4o" \
  --mean-input-tokens 550 \
  --stddev-input-tokens 150 \
  --mean-output-tokens 150 \
  --stddev-output-tokens 10 \
  --max-num-completed-requests 100 \
  --timeout 600 \
  --num-concurrent-requests 1 \
  --results-dir "result_outputs" \
  --llm-api openai \
  --additional-sampling-params '{}'
```
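LLMPerf reports metrics such as time to first token (TTFT), inter-token latency, end-to-end latency, and output throughput. To make those dimensions concrete, here is a simplified sketch of how they can be derived from the per-token arrival timestamps of a single streamed request. The definitions are assumptions for illustration and may differ in detail from LLMPerf's own implementation; `request_metrics` and `summarize` are hypothetical helpers.

```python
from dataclasses import dataclass, fields
from statistics import mean
from typing import Dict, List, Sequence


@dataclass
class RequestMetrics:
    ttft_s: float  # time from request start to first token
    e2e_latency_s: float  # time from request start to last token
    inter_token_latency_s: float  # mean gap between consecutive tokens
    output_throughput_tps: float  # output tokens per second of end-to-end time


def request_metrics(start: float, token_times: Sequence[float]) -> RequestMetrics:
    """Compute latency metrics for one streamed request from token arrival times (seconds)."""
    if not token_times:
        raise ValueError("request produced no tokens")
    ttft = token_times[0] - start
    e2e = token_times[-1] - start
    if e2e <= 0:
        raise ValueError("token timestamps must come after the request start")
    gaps = [later - earlier for earlier, later in zip(token_times, token_times[1:])]
    itl = mean(gaps) if gaps else 0.0  # a single-token response has no gaps
    return RequestMetrics(ttft, e2e, itl, len(token_times) / e2e)


def summarize(runs: List[RequestMetrics]) -> Dict[str, float]:
    """Average each metric across runs, e.g. 100 sequential requests at concurrency 1."""
    if not runs:
        raise ValueError("no runs to summarize")
    return {f.name: mean(getattr(r, f.name) for r in runs) for f in fields(RequestMetrics)}


m = request_metrics(0.0, [0.5, 0.75, 1.0])
print(m.ttft_s, m.inter_token_latency_s, m.output_throughput_tps)  # 0.5 0.25 3.0
```

Running requests one at a time, as in the command above, isolates per-request latency from the queueing effects that appear under concurrent load.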

Our results reflect the touted speedup over gpt-3.5-turbo and gpt-4-turbo. Despite the improvement, Claude 3 Haiku remains superior on every dimension except time to first token and, given its performance advantage, remains the best choice for timely text analysis.

Despite its shortcomings on the highlighted tasks, I'd like to note that the OpenAI release remains impressive given its multimodality and the glimpse it offers of what's to come. GPT-4o is presumably quantized, given its impressive latency, and may therefore suffer performance degradation due to reduced precision. The lower computational burden of reduced precision enables faster processing, but it can introduce errors in output accuracy and variations in model behavior across different types of data. These trade-offs require careful tuning of the quantization parameters to balance efficiency and effectiveness in practical applications.
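To illustrate the precision trade-off in the abstract (nothing here reflects how, or whether, GPT-4o is actually quantized), the sketch below applies symmetric per-tensor int8 quantization to a list of weights and measures the round-trip error. The scheme, a single scale factor chosen so that the largest magnitude maps to 127, is one simple textbook variant assumed for illustration; production systems often use finer-grained per-channel or per-group scales.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() is round-half-to-even in Python; clamp guards against overflow.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [v * scale for v in q]


def max_abs_error(original: Sequence[float], restored: Sequence[float]) -> float:
    """Largest absolute difference introduced by the quantization round trip."""
    return max((abs(a - b) for a, b in zip(original, restored)), default=0.0)


weights = [0.5, -1.27, 0.013, 0.004]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 1, 0]
```

Each weight is recovered to within half a quantization step (`scale / 2`), but a value much smaller than the step, such as 0.004 when the largest weight is 1.27, rounds to zero and is lost entirely. Finer-grained scales shrink the step for small-magnitude groups, which is one of the quantization parameters that must be tuned against speed and memory.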

Based on OpenAI's release cadence to date, subsequent versions of the model will likely be rolled out in the coming months and may demonstrate significant performance jumps across domains.

To learn more about how Aiera can support your research, check out our website or send us a note at hey@aiera.com.