Language models expose configuration parameters that shape their output during inference. These are distinct from the training parameters learned during the training phase.

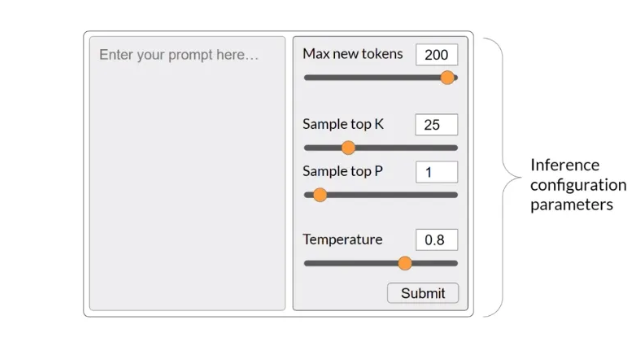

“Max new tokens” sets a cap on the number of tokens the model generates, although the actual completion length may be shorter because of other termination conditions, such as the model emitting an end-of-sequence token.
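
As a rough illustration of how the cap interacts with other stop conditions, here is a minimal sketch of a generation loop. The names `generate`, `make_scripted_model`, and the `<eos>` token string are hypothetical and chosen only for this example; a real model would predict each token rather than replay a script.

```python
def make_scripted_model(script):
    """Hypothetical stand-in for a model: replays a fixed list of tokens."""
    tokens = iter(script)
    return lambda context: next(tokens)


def generate(next_token, prompt_tokens, max_new_tokens, eos_token="<eos>"):
    """Generate at most max_new_tokens tokens, stopping early on eos_token."""
    generated = []
    for _ in range(max_new_tokens):
        token = next_token(prompt_tokens + generated)
        if token == eos_token:
            break  # another termination condition fired before the cap
        generated.append(token)
    return generated


# The cap cuts generation short: only two of the four scripted tokens appear.
capped = generate(make_scripted_model(["the", "cat", "sat", "down"]), ["hi"], 2)

# The end-of-sequence token stops generation well before the cap of 10.
stopped = generate(make_scripted_model(["the", "<eos>", "sat"]), ["hi"], 10)
```

In this sketch the cap is an upper bound only: `capped` holds two tokens because the limit was reached, while `stopped` holds one token because the scripted model ended the sequence first.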

Greedy decoding, the most straightforward approach to next-word prediction, always chooses the word with the highest probability. However, it can lead to the repetition of words or sequences.

Random sampling injects variability by selecting words at random according to their probability distribution, which reduces the likelihood of word repetition.
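
The difference between the two strategies can be sketched in a few lines of Python. The four-word distribution below is made up for illustration, and `greedy_pick` and `sample_pick` are hypothetical helper names, not part of any library.

```python
import random

# Hypothetical next-word probabilities produced by a model (they sum to 1).
next_word_probs = {"cake": 0.50, "donut": 0.30, "banana": 0.15, "apple": 0.05}


def greedy_pick(probs):
    """Greedy decoding: always return the single most probable word."""
    return max(probs, key=probs.get)


def sample_pick(probs, rng):
    """Random sampling: draw one word in proportion to its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random(0)  # seeded only so that repeated runs behave the same
greedy_choice = greedy_pick(next_word_probs)
sampled_choices = [sample_pick(next_word_probs, rng) for _ in range(200)]
```

Greedy decoding returns the same word every time for a given distribution, whereas repeated sampling yields a mix of words, with likelier words appearing more often.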

- Top-k sampling constrains the options for the next token to the k tokens with the highest probability according to the model’s predictions, then samples from among them. This promotes randomness in the generated text while preventing the selection of highly improbable completions.

- Top-p sampling, also known as nucleus sampling, limits random sampling to the highest-probability predictions whose cumulative probability does not exceed a specified threshold p. This keeps the generated output sensible while still allowing variability and diversity.

- The temperature parameter controls the shape of the probability distribution. Lower values concentrate probability on a narrower group of words, while higher values increase randomness.

- Configuring parameters such as temperature, top-k, and top-p lets developers adjust the behavior of large language models (LLMs) and generate text that balances coherence and creativity across different applications.
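
The three parameters in the list above can be sketched with the standard library alone. This is an illustration under stated assumptions, not any library's implementation: the function names and the toy logits are hypothetical, and `top_p_filter` follows the definition given here (keep tokens while the cumulative probability stays within p, always keeping at least one). Some libraries instead keep the smallest set of tokens whose cumulative probability reaches p.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; temperature rescales the scores."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtracted for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_filter(probs, k):
    """Keep the k most probable token indices and renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept = order[:k]
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


def top_p_filter(probs, p):
    """Keep top tokens while cumulative probability stays within p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        if kept and cumulative + probs[i] > p:
            break  # adding this token would exceed the threshold
        kept.append(i)
        cumulative += probs[i]
    return {i: probs[i] / cumulative for i in kept}


toy_logits = [2.0, 1.0, 0.0]  # hypothetical scores for three tokens
sharp = softmax_with_temperature(toy_logits, temperature=0.5)
flat = softmax_with_temperature(toy_logits, temperature=2.0)

toy_probs = [0.5, 0.3, 0.15, 0.05]  # hypothetical next-token probabilities
after_top_k = top_k_filter(toy_probs, k=2)
after_top_p = top_p_filter(toy_probs, p=0.9)
```

With these toy numbers, the lower temperature puts more probability on the top-scoring token than the higher one does, and both filters keep only the first two tokens before renormalizing. Sampling would then draw from the filtered distribution rather than from the full vocabulary.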