Today let’s learn to train a machine learning model using a dataset.

The dataset is available on the UCI ML Repository, https://archive.ics.uci.edu/dataset/151/connectionist+bench+sonar+mines+vs+rocks. It was collected by bouncing sonar signals off a metal cylinder and rocks at various angles and under various conditions.

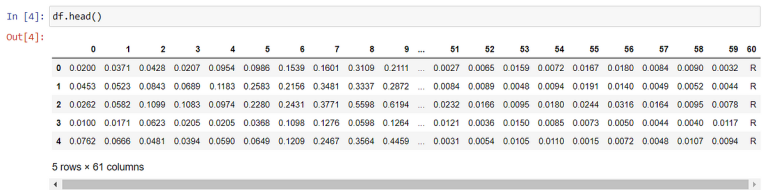

First, let’s have a quick look at the dataset, which is in a CSV file. It contains 208 rows and 61 columns. The file does not contain headers for the columns.

Now it’s time to analyze the dataset using Python. First we import the necessary libraries, such as pandas and sklearn.

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score

The pandas library offers a powerful and efficient way to manipulate and analyze data through data frames, so let us load the dataset into a pandas data frame. We can use the built-in function ‘read_csv()’ provided by pandas to load the dataset. The ‘header’ parameter of ‘read_csv()’ specifies whether the CSV file has a header row. In this case it is set to None, which tells pandas to treat the first row of the CSV file as data rather than as column names, and pandas generates default column names (integers starting from 0). If the file had a header row, you would typically set this parameter to 0 to indicate that the first row contains the column names. The parameter is optional, but its default makes pandas infer the header from the first row, so for this file it has to be set to None explicitly.
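To make the effect of the ‘header’ parameter concrete, here is a minimal sketch. It uses a made-up two-row CSV string (not the sonar data) and the hypothetical names `csv_text`, `no_header` and `inferred`, and compares `header=None` with the default behaviour.

```python
import io

import pandas as pd

# Hypothetical two-row CSV without a header line (not the sonar data).
csv_text = "0.02,0.04,R\n0.05,0.01,M\n"

# header=None: every line is treated as data; columns are named 0, 1, 2.
no_header = pd.read_csv(io.StringIO(csv_text), header=None)

# Default: the header is inferred, so the first line becomes column names.
inferred = pd.read_csv(io.StringIO(csv_text))

print(no_header.shape)  # both rows are kept as data
print(inferred.shape)   # one row was consumed as the header
```

With the default setting the first observation would silently disappear into the column names, which is why `header=None` matters for a headerless file like this one.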

# Adjust the path to wherever sonar.all-data.csv is saved on your machine.
df = pd.read_csv('sonar.all-data.csv', header=None)

Now let us get a quick overview of the dataset using the head() function in pandas. It shows the first five rows of the dataset and helps you get an initial understanding of the data from a sample. If you want to see the last five rows, you can use the tail() function. Inspecting the rows returned by both head() and tail() gives you a first idea of the data integrity of the dataset.

df.head()

Now let us view the dimensions of the data frame using the ‘shape’ attribute of pandas.

df.shape

This data frame contains 208 rows and 61 columns. The last column is the label. Therefore, the data frame contains 208 instances and 60 features.

It is useful to examine the statistical details of the numerical columns in a data frame. This helps you quickly spot outliers, missing values, and column characteristics, which supports informed decisions about data cleaning. The ‘describe()’ function in pandas can be used for this.
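As a small illustration of ‘describe()’, the sketch below runs it on a toy data frame with made-up values. The names `toy` and `summary` are hypothetical; on the sonar data the equivalent call would be `df.describe()`.

```python
import pandas as pd

# Toy frame standing in for df: two numeric columns and one label column.
# All values are made up for illustration.
toy = pd.DataFrame({0: [0.1, 0.2, 0.9], 1: [0.5, 0.5, 0.7], 2: ["R", "M", "M"]})

# By default, describe() summarises only the numeric columns.
summary = toy.describe()
print(summary)
```

The `count` row is a quick check for missing values: if it is lower than the number of rows, that column has gaps.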

If you look at the last column of the dataset, it has the value ‘R’ to indicate ‘Rock’ and ‘M’ to indicate ‘Mine’ (the metal cylinder). Let us look at the distribution of the data by counting each class to check whether the dataset is balanced. If the dataset is heavily imbalanced, the model tends to favor the majority class and accuracy becomes a misleading measure.

df[60].value_counts()

This dataset contains 111 instances of Mine and 97 instances of Rock. There is not much difference between the two counts, which indicates that the dataset is reasonably balanced.
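The balance can also be expressed as proportions. The sketch below rebuilds a label column with the counts reported above (the name `labels` is a stand-in for `df[60]`) and uses the `normalize=True` option of `value_counts()`.

```python
import pandas as pd

# Stand-in for df[60], rebuilt from the reported counts: 111 'M' and 97 'R'.
labels = pd.Series(["M"] * 111 + ["R"] * 97)

# normalize=True returns the share of each class instead of raw counts.
proportions = labels.value_counts(normalize=True)
print(proportions.round(3))
```

Roughly 53% Mine versus 47% Rock is close enough to an even split that no resampling is needed here.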

Before building the model, we should separate the data and the label. To do that, let us drop the last column of the data frame, which is the label, and assign the remaining part to a variable. The ‘axis’ parameter indicates whether we are referring to rows or columns; ‘axis=1’ means that we are referring to a column.

X = df.drop(60, axis=1)

The column at index 60 of the data frame is the label. Let us assign it to another variable.

Y= df[60]

You can verify the correctness of the approach by printing X and Y.
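Besides printing, comparing shapes is a quick sanity check. This is a minimal sketch on a toy frame with made-up values; `toy`, `features` and `target` are hypothetical names standing in for `df`, `X` and `Y`.

```python
import pandas as pd

# Toy frame: two feature columns (0, 1) and a label column (2).
toy = pd.DataFrame({0: [0.1, 0.2], 1: [0.3, 0.4], 2: ["R", "M"]})

features = toy.drop(2, axis=1)  # axis=1 means "drop a column"
target = toy[2]

# The features lose exactly one column; the target keeps one value per row.
print(features.shape, target.shape)
```

On the sonar data the same check should report `(208, 60)` for `X` and `(208,)` for `Y`.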

Before we start training the model, it is important to divide the dataset into training and testing subsets.

X_train, X_test, Y_train, Y_test= train_test_split(X, Y, test_size= 0.1, stratify= Y, random_state= 1)

In the above call, X represents the independent variables of the dataset and Y represents the target variable. The parameter ‘test_size’ specifies the proportion of the dataset that will be allocated for testing. In this case, 10% of the dataset will be used for testing.

The ‘stratify’ parameter ensures that the data is split in a way that maintains the same proportion of classes as the original dataset. In this example it is set to Y, which means the data is stratified on the values of the target variable Y, so the training and testing sets both keep roughly the same class proportions as the full dataset.

The ‘random_state’ parameter ensures reproducibility by fixing the random seed used for splitting the data. With a specific value, such as 1 here, the split yields the same result every time the code is run.
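The effect of these three parameters can be checked on synthetic data. The following is a minimal sketch that assumes a random feature matrix with the same shape and class counts as the sonar data; the names `features`, `labels`, `split_a` and `split_b` are made up for illustration.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic stand-in with the same shape and class counts as the sonar data.
rng = np.random.default_rng(0)
features = rng.random((208, 60))
labels = np.array(["M"] * 111 + ["R"] * 97)

# Two identical calls with the same random_state.
split_a = train_test_split(features, labels, test_size=0.1, stratify=labels, random_state=1)
split_b = train_test_split(features, labels, test_size=0.1, stratify=labels, random_state=1)

y_train, y_test = split_a[2], split_a[3]
print(len(y_train), len(y_test))               # sizes of the two subsets
print((y_test == "M").mean())                  # class share in the test set
print(np.array_equal(split_a[1], split_b[1]))  # same seed, same test rows
```

The share of ‘M’ in the test set stays close to 111/208, and repeating the call with the same seed reproduces the same test rows.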

According to the problem, the requirement is to classify the data into two categories (Rock or Mine), which makes this a binary classification task. Logistic regression is a simple and widely used model for binary classification. Therefore, let us train a logistic regression model on our dataset.

model = LogisticRegression()

model.fit(X_train, Y_train)

After training the model, let us evaluate it by measuring its accuracy on the training dataset.

X_train_prediction = model.predict(X_train)

training_data_accuracy = accuracy_score(Y_train, X_train_prediction)

print('Accuracy on the training dataset: ', training_data_accuracy)

In this scenario, the accuracy on the training dataset would be 0.834, which means that the model is 83.4% accurate on the training data.

Then let us check the accuracy on the test dataset as below.

X_test_prediction = model.predict(X_test)

test_data_accuracy = accuracy_score(Y_test, X_test_prediction)

print('Accuracy on the test data: ', test_data_accuracy)

The model has an accuracy of 0.7619 on the test dataset, or 76.19% as a percentage. That is a reasonable accuracy for unseen data, although with a 10% split the test set holds only 21 samples, so the estimate is coarse.
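Accuracy is simply the fraction of predictions that match the true labels. As a worked illustration with made-up labels (not the model's actual predictions), 16 correct predictions out of 21 test samples give the same figure of 0.7619.

```python
from sklearn.metrics import accuracy_score

# Made-up labels for 21 test samples, 16 of which are predicted correctly.
y_true = ["M"] * 11 + ["R"] * 10
y_pred = ["M"] * 9 + ["R"] * 2 + ["R"] * 7 + ["M"] * 3

acc = accuracy_score(y_true, y_pred)

# The same value computed by hand: matches divided by total.
manual = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

print(round(acc, 4), round(manual, 4))
```

With only 21 test samples, each single prediction moves the accuracy by almost 5 percentage points.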

There are different types of machine learning models, such as KNN and SVM. We can test the accuracy of each model as we did for the logistic regression model and select the model with the highest accuracy.
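One way such a comparison could look is sketched below. It assumes synthetic data from `make_classification` in place of the sonar file, default hyperparameters for KNN and SVM, and a raised `max_iter` for logistic regression; the names `candidates`, `scores` and `best` are made up for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

# Synthetic stand-in for the sonar data (208 samples, 60 features).
X_demo, y_demo = make_classification(
    n_samples=208, n_features=60, n_informative=10, random_state=1
)
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.1, stratify=y_demo, random_state=1
)

# Candidate models; the hyperparameters here are assumptions, not tuned values.
candidates = {
    "LogisticRegression": LogisticRegression(max_iter=1000),
    "KNN": KNeighborsClassifier(),
    "SVM": SVC(),
}

# Train each model on the same split and record its test accuracy.
scores = {}
for name, clf in candidates.items():
    clf.fit(X_tr, y_tr)
    scores[name] = accuracy_score(y_te, clf.predict(X_te))

best = max(scores, key=scores.get)
print(scores)
print("Best model:", best)
```

Because the test set is small, differences of one or two correct predictions between models may not be meaningful, so it is worth treating the ranking as a rough guide.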