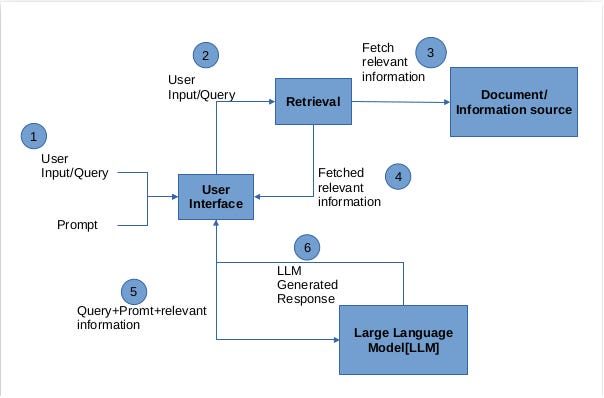

In this article, we’ll explore how to build a Retrieval Augmented Generation (RAG) application using LangChain and Cohere. RAG is an architecture that retrieves relevant information from external sources and incorporates it into the model’s input to generate more informed, context-aware responses.

LangChain is a powerful Python library that simplifies the process of building applications with large language models (LLMs) like GPT-3, BERT, and others. It provides a modular and extensible framework for building applications that use LLMs for various tasks, including question answering, text generation, and more.

Cohere, on the other hand, is an AI company that provides access to powerful language models through its API. In this example, we’ll be using Cohere’s “command-r” model, which is designed for retrieval-augmented generation tasks such as question answering.

Step 1: Import Required Libraries

import bs4

from langchain import hub

from langchain_community.document_loaders import WebBaseLoader

from langchain_chroma import Chroma

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnablePassthrough

from langchain_openai import OpenAIEmbeddings

from langchain_text_splitters import RecursiveCharacterTextSplitter

from langchain_community.embeddings import HuggingFaceEmbeddings

We import various libraries from LangChain and its community packages, including Chroma (a vector store), document loaders, text splitters, and embedding models.

Step 2: Set Up the Cohere API Key

import getpass

import os

os.environ["COHERE_API_KEY"] = getpass.getpass()

We set the Cohere API key using the `getpass` module, which prompts for the value without echoing it to the terminal.
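Calling `getpass.getpass()` unconditionally prompts on every run, even when the key is already exported in the shell. One possible guard is sketched below. The helper name `ensure_api_key` is hypothetical and is not part of LangChain or Cohere.

```python
import getpass
import os


def ensure_api_key(name: str = "COHERE_API_KEY") -> str:
    """Return the key from the environment, prompting only if it is missing.

    `ensure_api_key` is a hypothetical helper used for illustration.
    """
    key = os.environ.get(name)
    if not key:
        # getpass hides the typed value so it is not echoed to the terminal.
        key = getpass.getpass(f"Enter {name}: ")
        os.environ[name] = key
    return key
```

With this in place, `ensure_api_key()` can replace the direct assignment, and scripted runs that export the variable beforehand never block on input.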

Step 3: Create the LLM Instance

from langchain_cohere import ChatCohere

llm = ChatCohere(model="command-r")

We create an instance of the Cohere chat model using the `ChatCohere` class and specify the “command-r” model for retrieval-augmented generation.

Step 4: Load the Document

from langchain_community.document_loaders import PyPDFLoader

loader = PyPDFLoader("https://arxiv.org/pdf/2103.15348.pdf")

documents = loader.load()

We use `PyPDFLoader` to load a PDF document from a given URL. This example uses a research paper from arXiv, but you can load any document of your choice.

Step 5: Split the Document

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)

splits = text_splitter.split_documents(documents)

We split the loaded document into smaller chunks using `RecursiveCharacterTextSplitter`. Each chunk holds up to 1,000 characters and overlaps its neighbor by 200 characters, so retrieval can return focused passages without cutting off context at chunk boundaries.
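To make the roles of `chunk_size` and `chunk_overlap` concrete, here is a simplified sketch of overlapping windows in plain Python. It is not the algorithm `RecursiveCharacterTextSplitter` uses: the real splitter first tries to break on separators such as paragraph breaks, line breaks, and spaces. The function name `sliding_window_chunks` is made up for this illustration.

```python
def sliding_window_chunks(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into fixed-size character windows that overlap.

    Simplified stand-in for illustration only; it ignores separators.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


chunks = sliding_window_chunks("abcdefghij", chunk_size=4, chunk_overlap=2)
print(chunks)  # ['abcd', 'cdef', 'efgh', 'ghij']
```

Each window repeats the last two characters of the previous one, which is the same idea as the 200-character overlap above, only at a smaller scale.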

Step 6: Set Up the Embeddings Model

embeddings_model_name = "sentence-transformers/all-MiniLM-L6-v2"

embeddings = HuggingFaceEmbeddings(model_name=embeddings_model_name)

We set up an embeddings model using the `HuggingFaceEmbeddings` class from LangChain. Embeddings are numerical representations of text that capture semantic meaning, which makes them useful for efficient retrieval and comparison.
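Comparison between embeddings is commonly done with cosine similarity. The sketch below uses made-up three-dimensional vectors purely for illustration. Real embedding vectors are much longer, and the values here do not come from any model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors invented for this example, not produced by an embeddings model.
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
invoice = [0.0, 0.1, 0.9]

related = cosine_similarity(cat, kitten)
unrelated = cosine_similarity(cat, invoice)
print(related > unrelated)  # True
```

Texts with similar meaning are expected to land close together in the embedding space, which is what lets a retriever rank chunks by relevance to a question.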

Step 7: Create a Vector Store

vectorstore = Chroma.from_documents(documents=splits, embedding=embeddings)

We create a vector store using Chroma, a fast and efficient vector store that integrates with LangChain. The vector store is initialized with the document splits and the embeddings model we set up earlier.
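Conceptually, a vector store keeps each chunk next to its embedding and returns the chunks whose vectors are closest to a query vector. The brute-force sketch below illustrates that idea only. `ToyVectorStore` is a hypothetical class, the vectors are invented, and the use of squared Euclidean distance is an assumption made for the example. Production stores such as Chroma rely on indexes rather than scanning every entry.

```python
from dataclasses import dataclass, field


@dataclass
class ToyVectorStore:
    """Hypothetical in-memory store that scans every entry on each search."""

    entries: list[tuple[list[float], str]] = field(default_factory=list)

    def add(self, vector: list[float], text: str) -> None:
        self.entries.append((vector, text))

    def similarity_search(self, query: list[float], k: int = 2) -> list[str]:
        def squared_distance(vector: list[float]) -> float:
            return sum((q - v) ** 2 for q, v in zip(query, vector))

        # Smaller distance means a closer match, so sort ascending.
        ranked = sorted(self.entries, key=lambda entry: squared_distance(entry[0]))
        return [text for _, text in ranked[:k]]


store = ToyVectorStore()
store.add([1.0, 0.0], "chunk about layout detection")
store.add([0.9, 0.1], "chunk about OCR")
store.add([0.0, 1.0], "chunk about licensing")

print(store.similarity_search([1.0, 0.05], k=2))
# ['chunk about layout detection', 'chunk about OCR']
```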

Step 8: Set Up the Retriever and Prompt

retriever = vectorstore.as_retriever()

prompt = hub.pull("rlm/rag-prompt")

We set up a retriever from the vector store, which will be used to retrieve relevant chunks of the document. We also load a predefined RAG prompt from the LangChain hub, which will be used to format the question and the retrieved context for the language model.

Step 9: Helper Function for Formatting Output

def format_docs(docs):

    return "\n\n".join(doc.page_content for doc in docs)

We define a helper function `format_docs` that joins the text of the retrieved documents into a single string, separated by blank lines, for the language model.

Step 10: Set Up the RAG Chain

rag_chain = (

    # Retrieve and format the context; pass the question through unchanged.

    {"context": retriever | format_docs, "question": RunnablePassthrough()}

    | prompt

    | llm

    | StrOutputParser()

)

We set up the RAG chain, which combines the retriever, prompt, language model, and output parser in a sequence. `RunnablePassthrough` passes the user’s question to the prompt unchanged.
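The data flow through the chain can be easier to follow when written as ordinary function calls. The sketch below mirrors the stages with stand-ins. `Doc`, `fake_retriever`, `fake_llm`, `build_prompt`, and `TEMPLATE` are all hypothetical, the template wording is invented and will differ from the hub prompt, and the input names `context` and `question` are assumed here.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    """Stand-in for a LangChain Document; only page_content is modeled."""

    page_content: str


def fake_retriever(question: str) -> list[Doc]:
    # A real retriever would embed the question and search the vector store.
    return [Doc("LayoutParser is a toolkit."), Doc("It supports custom models.")]


def format_docs(docs: list[Doc]) -> str:
    return "\n\n".join(doc.page_content for doc in docs)


# Invented template for illustration; the hub prompt's wording is different.
TEMPLATE = "Context:\n{context}\n\nQuestion: {question}\nAnswer:"


def build_prompt(question: str) -> str:
    # Both entries are computed from the same input question.
    inputs = {
        "context": format_docs(fake_retriever(question)),
        "question": question,  # the passthrough step: forwarded unchanged
    }
    return TEMPLATE.format(**inputs)


def fake_llm(prompt_text: str) -> str:
    # Placeholder for the model call; it only reports the prompt size.
    return f"(model reply to a {len(prompt_text)}-character prompt)"


def run_chain(question: str) -> str:
    return fake_llm(build_prompt(question))


print(build_prompt("What is LayoutParser?"))
```

The pipe syntax in the chain expresses the same composition: each stage receives the previous stage's output as its input.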

Step 11: Query the RAG Chain

rag_chain.invoke("Summarize the paper in 10 sentences?")

'1zhshen@gmail.com) ,\nHaibin Ling2( 12345@163.com ),\n

Shuang Wang1( wshuang87@gmail.com) ,\nJian Yang1( jyang@cs.princeton.edu) ,

\nand Wenchao Lu1(wlu@cs.princeton.edu)\n1Princeton University, 2Tsinghua

University\nAbstract—Document image analysis is\na difficult task due

to the great vari-\nety of document layouts and printing\nstyles.

Earlier works typically require\ntedious feature engineering, which is\nboth

time-consuming and not robus\nAnswer: The paper introduces LayoutParser, a

toolkit for analysing document images. It addresses the challenges posed

by various document layouts and printing styles, eliminating the need for

time-consuming feature engineering. The approach recognises vertical text,

identifies column structures, and uses vertical positions to determine

layout types.'

rag_chain.invoke("What are some of the main benefits?")

'LayoutParser has many benefits, including the ability to create large-scale

and light-weight document digitization pipelines with ease.

It also supports training customized layout models and community sharing of

these models. This makes it a versatile tool with numerous potential

applications.'

The RAG chain retrieves relevant information from the document and generates a response based on the question and the retrieved context.