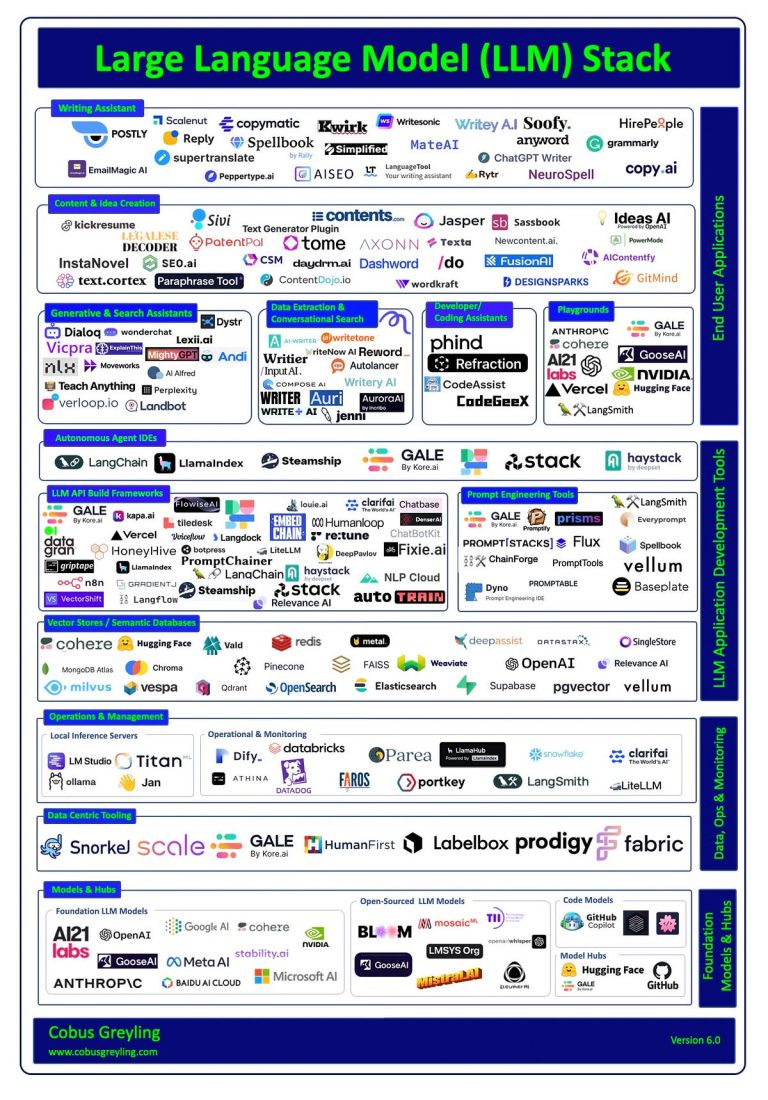

In an attempt to decipher current market trends and anticipate their direction, I once again updated my product taxonomy outlining the different LLM implementations and their respective use cases.

Some of the products and categories listed will certainly overlap. I'm keen to learn what I've missed and what can be updated.

Some of the key observations are:

⏺ In the market there has been immense interest in private / self-hosting of models, small language models (SLMs) and local inference servers.

⏺ Productivity hubs like GALE, LangSmith, LlamaHub and others have emerged.

⏺ RAG has continued to grow in functionality and general interest, with RAG-specific prompt engineering emerging.

⏺ Fine-tuning LLMs and SLMs, not to imbue them with knowledge but rather to improve the behaviour of the model, was the subject of a number of studies.

⏺ SLMs have featured prominently of late, with models like TinyLlama, Orca-2 and Phi-3.

⏺ Considering the image, I'd still argue that products higher up in the stack are more vulnerable. LLM providers like OpenAI and Cohere are releasing more products and features that are baked into their offerings, such as RAG-like functionality, memory, agents and more.

⏺ Default LLM functionality is continuously being expanded, with no-code fine-tuning becoming available for more models.

⭐️ Follow me on LinkedIn for updates on Large Language Models ⭐️

I'm currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language, ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.