Okay, so let's do some machine learning. We have a problem that has a pattern (this is assumed), and we want to better understand and predict this pattern with the data that we have.

First we'll need an input X and an output Y, making our data a set of (x, y) pairs. X is what we have, and Y is what we would like to be able to predict.

This means that there is a function (our target function) that is able to output Y given X.

data = (x1, y1), ..., (xN, yN)

f : X -> Y

We consider a "Hypothesis Set" as the set of candidate formulas (all possible formulas that do what we want, i.e., receive an x and give a y). Now, some functions will perform better than others, so we need to pick the best one as our final hypothesis. This best formula, the one that gives the closest Y for the real data we have, is called g.

Then, the learning part is simply the process of finding this g.

The part we still need to figure out is how we can understand the behavior (and in this case find g) with only a limited amount of data compared to the whole distribution.
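To make "finding g" concrete, here is a minimal sketch. It assumes a tiny, made-up hypothesis set of threshold rules on a 1D input and a made-up target; the names `make_threshold`, `in_sample_error` and `learn` are illustrative and do not come from the lecture.

```python
import random

def make_threshold(t):
    """Hypothetical hypothesis: predict +1 when x > t, else -1."""
    return lambda x: 1 if x > t else -1

def in_sample_error(h, data):
    """Fraction of (x, y) pairs on which hypothesis h disagrees with y."""
    return sum(h(x) != y for x, y in data) / len(data)

def learn(hypotheses, data):
    """Pick the hypothesis that fits the data best; this is our g."""
    return min(hypotheses, key=lambda h: in_sample_error(h, data))

rng = random.Random(0)
target = make_threshold(0.3)  # assumed target f; unknown in a real problem
xs = [rng.uniform(-1, 1) for _ in range(50)]
data = [(x, target(x)) for x in xs]

# Hypothesis set H: 21 candidate thresholds from -1.0 to 1.0
hypotheses = [make_threshold(i / 10) for i in range(-10, 11)]

g = learn(hypotheses, data)
g_error = in_sample_error(g, data)
print("error of g on the data:", g_error)
```

Here the target happens to be inside the hypothesis set, so g can fit the data perfectly. In general it will not be, and g is only the closest candidate.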

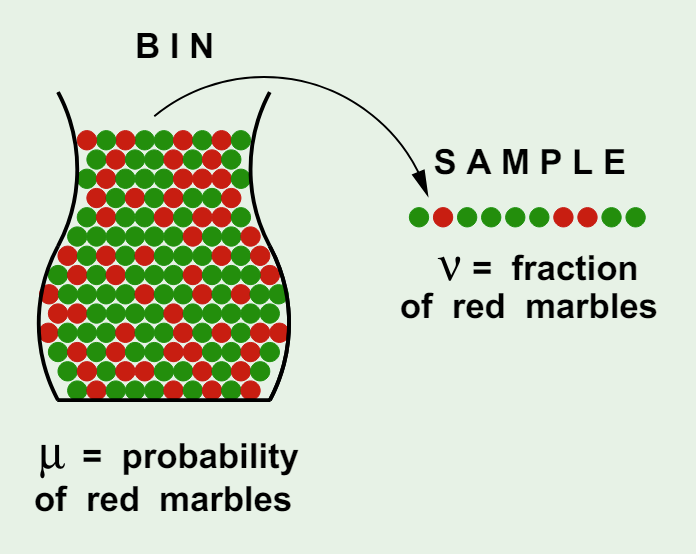

For a better example (one that is also present in the lecture from Professor Yaser), think of a bin full of green and red marbles. This means there is a distribution of the colors that is unknown to us: a fraction mu of red marbles and 1 - mu of green marbles. If we grab a handful of the marbles (size N), we'll have another distribution of marbles in our hands: a fraction nu of red marbles.

Nu and mu can be different, but the sample frequency will, probably, be close! In other words, given a large enough sample N, nu will be close to mu, within an error margin epsilon. This is expressed in the formula below, which states: the probability of the difference between nu and mu falling outside the error margin is less than or equal to a value that depends on both the sample size and the error margin.

$$P\left[\,|\nu - \mu| > \epsilon\,\right] \le 2e^{-2\epsilon^2 N}$$

What we just defined is called Hoeffding's Inequality. It says that the statement mu = nu is probably (given a large enough sample) approximately (given the error margin) correct.

PS: There are other forms of this inequality, with different degrees of generality, but this is the basic gist of it.
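The marble experiment is easy to simulate. The sketch below is only an illustration: the values of mu, N and the error margin are arbitrary, and the bound used is the common form of Hoeffding's Inequality, `2 * exp(-2 * eps^2 * N)`. It draws many handfuls and compares how often nu lands outside the margin with what the bound allows.

```python
import math
import random

def hoeffding_bound(eps, n):
    """Upper bound on P[|nu - mu| > eps] for a sample of size n."""
    return 2 * math.exp(-2 * eps**2 * n)

def simulate_violation_rate(mu, n, eps, trials, seed=0):
    """Draw `trials` handfuls of n marbles; return how often |nu - mu| > eps."""
    rng = random.Random(seed)
    violations = 0
    for _ in range(trials):
        reds = sum(rng.random() < mu for _ in range(n))  # red marbles in hand
        nu = reds / n
        if abs(nu - mu) > eps:
            violations += 1
    return violations / trials

mu, n, eps = 0.6, 100, 0.1  # arbitrary illustration values
empirical = simulate_violation_rate(mu, n, eps, trials=2000)
bound = hoeffding_bound(eps, n)
print(f"empirical rate: {empirical:.3f}, Hoeffding bound: {bound:.3f}")
```

The bound is usually quite loose: the observed rate tends to sit well below it, which is fine, because the inequality only promises an upper limit.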

Now, this works for one bin, but with machine learning we're effectively choosing between multiple bins, with more than one hypothesis. Remember the Hypothesis Set (also called the Hypothesis Space)? Each bin is equivalent to one hypothesis, and there are several in total. This means that each bin has its own mu and nu, for M hypotheses, all belonging to the Hypothesis Space.

With that, let's also change the notation.

- Nu is the frequency in the sample, so it becomes the "in-sample error" Ein(h) for a given bin h

- In the same way, mu is the frequency for the whole bin, so it becomes the "out-of-sample error" Eout(h)

Not only that, but we have to account for all the other bin possibilities, so let's rewrite the inequality considering M bins instead of just one.

Rewriting the inequality gives the following:

$$P\left[\,|E_{in}(g) - E_{out}(g)| > \epsilon\,\right] \le 2Me^{-2\epsilon^2 N}$$

What does it mean?

Now, we account for all M hypotheses in the hypothesis space we're considering (every bin).

Also, we're analyzing the error margin for the final hypothesis g instead of a single fixed h. Since the bound covers all M bins at once, it holds for whichever one we end up picking as g.
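To get a feel for what the factor M costs, the sketch below evaluates the M-hypothesis bound, assumed here to be `2 * M * exp(-2 * eps^2 * N)`, and inverts it to find the smallest N that keeps the bound under a tolerance `delta`. The function names and the example values of `eps` and `delta` are illustrative choices.

```python
import math

def finite_hypothesis_bound(m, eps, n):
    """Bound on P[|Ein(g) - Eout(g)| > eps] when g is picked from m hypotheses."""
    return 2 * m * math.exp(-2 * eps**2 * n)

def samples_needed(m, eps, delta):
    """Smallest N for which the bound above is at most delta."""
    return math.ceil(math.log(2 * m / delta) / (2 * eps**2))

eps, delta = 0.1, 0.05  # arbitrary illustration values
for m in (1, 10, 1000):
    n = samples_needed(m, eps, delta)
    print(f"M = {m:5d}: N >= {n}")
```

The required N grows only logarithmically with M, but it still grows without limit, so an infinite M makes the bound useless.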

Great, we have M. But bad news: M is too large. We're counting hypotheses over the whole input space (all the bins), which is pretty much infinite.

We have to replace M with something smaller. The number of hypotheses can be infinite, so we count only dichotomies: the hypotheses as seen on our own data points X. In other words, we ignore most of the input space and focus only on the data points we have.

This drastically reduces the possible number. For a classification problem a hypothesis is h : X -> {-1, +1}, while a dichotomy is h : {x1, x2, …, xN} -> {-1, +1}. So there are at most 2^N of them (two possibilities for each of the N values of x).

As such, we need a function that counts the most dichotomies on any N points, effectively getting the most out of the Hypothesis Space H: the maximum number of distinct ways in which N points can be labeled using the hypotheses in H. With that, we eliminate the overlap in M, where different hypotheses label the points identically.

The notation mH(N) denotes this, the growth function. It depends only on H and N, and the larger it is, the more expressive and the more complex the hypothesis space is. It can also be written as Π.

As we said, the maximum number of dichotomies for a given N is 2^N, which is the upper bound of the growth function.
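Counting dichotomies by brute force shows how far below 2^N the growth function can be. The sketch below uses "positive rays" on the real line, h(x) = +1 if x > a, else -1, as an assumed example hypothesis set. Infinitely many thresholds a exist, but on N points only a handful of distinct labelings come out.

```python
def positive_ray_dichotomies(points):
    """Distinct labelings of `points` produced by h(x) = +1 if x > a else -1."""
    pts = sorted(points)
    # One threshold below all points, one between each neighbouring pair,
    # and one above all points: every other threshold repeats one of these.
    cuts = (
        [pts[0] - 1]
        + [(p + q) / 2 for p, q in zip(pts, pts[1:])]
        + [pts[-1] + 1]
    )
    return {tuple(1 if x > a else -1 for x in points) for a in cuts}

def growth_positive_rays(n):
    """Growth function of positive rays on n distinct points."""
    return len(positive_ray_dichotomies(list(range(n))))

for n in range(1, 6):
    print(f"N = {n}: dichotomies = {growth_positive_rays(n)}, 2^N = {2 ** n}")
```

For this hypothesis set, any N distinct points give the same count, so checking a single arrangement is enough. For richer sets the growth function is the maximum over all arrangements of the points.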

Okay, we're getting there. Let's work to replace M with the growth function in the inequality. For that, we need mH(N) to be bounded by a polynomial in N.

How do we prove that? Using break points and the VC dimension. Basically, we will bound the growth function by a polynomial.

The definition of a break point is simple. If no data set of size k can be shattered by the Hypothesis Space H, then k is a break point for H.

What is shattering? It's the process of "picking out" any subset of the points: the hypotheses in H can produce every single combination of labels on them. In the example, this is possible for three data points (N = 3), but not for N = 4. This means that 4 is a break point.

Now, the VC dimension (for Vapnik-Chervonenkis) is the largest integer d for which mH(d) = 2^d. Basically, it's the integer just before the smallest break point k, so Dvc = k - 1.
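Shattering and break points can be checked by brute force on a small case. The sketch below uses intervals on the real line, h(x) = +1 if a <= x <= b, else -1, as an assumed example hypothesis set, which is not the one from the example above. It looks for the first N at which not all 2^N labelings appear. Because only the ordering of the points matters for intervals, testing one arrangement per N is enough here.

```python
from itertools import combinations

def interval_dichotomies(points):
    """Distinct labelings of `points` by h(x) = +1 if a <= x <= b else -1."""
    pts = sorted(points)
    # Candidate interval ends: outside the points and between neighbours
    cuts = (
        [pts[0] - 1]
        + [(p + q) / 2 for p, q in zip(pts, pts[1:])]
        + [pts[-1] + 1]
    )
    labelings = {tuple(-1 for _ in points)}  # interval containing no point
    for a, b in combinations(cuts, 2):
        labelings.add(tuple(1 if a <= x <= b else -1 for x in points))
    return labelings

def is_shattered(points):
    """True when every one of the 2^N labelings is achievable."""
    return len(interval_dichotomies(points)) == 2 ** len(points)

def smallest_break_point(max_n=6):
    """First N that cannot be shattered, or None if none is found up to max_n."""
    for k in range(1, max_n + 1):
        if not is_shattered(list(range(k))):
            return k
    return None

k = smallest_break_point()
d_vc = k - 1
print("break point:", k, "VC dimension:", d_vc)
```

With three points, the labeling (+1, -1, +1) is impossible for a single interval, which is why three points cannot be shattered.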

And here's the magic: let B(N, k) be the maximum number of dichotomies on N points when k is a break point. It can be bounded using binomial coefficients (combinations), summed over every term up to Dvc = k - 1:

$$m_H(N) \le B(N, k) \le \sum_{i=0}^{k-1} \binom{N}{i}$$

In case you want the more detailed proof, here it is. The maximum power in this sum is N^(k-1), that is, N^Dvc.

With that, if there is a break point, then the growth function is bounded by a polynomial in N. If not, then it's equal to 2^N.
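The gap between a polynomial bound and 2^N shows up quickly in numbers. This sketch evaluates the sum of binomial coefficients C(N, 0) + ... + C(N, Dvc), the standard bound on the growth function when the VC dimension is Dvc, and compares it with 2^N. The choice Dvc = 3 is arbitrary.

```python
from math import comb

def growth_bound(n, d_vc):
    """Sum of C(n, i) for i = 0..d_vc: polynomial in n with leading power n^d_vc."""
    return sum(comb(n, i) for i in range(d_vc + 1))

d_vc = 3  # arbitrary illustration value
for n in (3, 5, 10, 20, 50):
    print(f"N = {n:2d}: bound = {growth_bound(n, d_vc):6d}, 2^N = {2 ** n}")
```

Up to N = Dvc the bound equals 2^N (all labelings are still possible). Beyond that it falls behind 2^N very fast.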

Finally, we can replace the M that counted all hypotheses (and was infinite) with the growth function, which is bounded.

This leaves us with the VC Inequality, as below. The 2N appears because the proof uses a sample of 2N points instead of just N. For that, we need to shrink the error margin by a factor of 4, which turns the exponent into epsilon^2 N / 8.

$$P\left[\,|E_{in}(g) - E_{out}(g)| > \epsilon\,\right] \le 4\,m_H(2N)\,e^{-\frac{1}{8}\epsilon^2 N}$$

So, in this text, we've understood the basis for getting general information out of a sample, as well as how the size of the sample and the error margin relate to the probability of successfully predicting an output Y.

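Reordering the inequality, we get the following. Calling the right-hand side of the VC Inequality delta and solving for the error margin, with probability at least 1 - delta:

$$E_{out}(g) \le E_{in}(g) + \sqrt{\frac{8}{N}\ln\frac{4\,m_H(2N)}{\delta}}$$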
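The reordered bound can be explored numerically. The sketch below assumes the usual form of the penalty term, `sqrt(8/N * ln(4 * mH(2N) / delta))`, and replaces the growth function with the polynomial bound `(2N)^Dvc + 1`. The name `vc_penalty` and the example values are illustrative.

```python
import math

def vc_penalty(n, d_vc, delta):
    """Width of the gap allowed between Eout and Ein, with confidence 1 - delta."""
    growth = (2 * n) ** d_vc + 1  # polynomial upper bound on mH(2N)
    return math.sqrt(8 / n * math.log(4 * growth / delta))

delta = 0.05  # arbitrary illustration value
for d_vc in (1, 3, 10):
    row = ", ".join(f"N={n}: {vc_penalty(n, d_vc, delta):.2f}" for n in (1000, 10000, 100000))
    print(f"Dvc = {d_vc:2d} -> {row}")
```

The penalty shrinks as N grows and widens as Dvc grows. A value above 1 says nothing useful, since errors are at most 1.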

The best takeaway from this is the notion of model complexity:

Dvc increases -> Ein decreases, but the model complexity penalty (the allowed gap between Eout and Ein) increases

- The minimum out-of-sample error is at some intermediate Dvc