Hello everybody, today we're going to talk about the basics of natural language processing. Let's start by understanding what natural language processing is. Natural language processing (NLP) can be understood as representing textual data in such a way that a machine can understand it and capture its meaning.

The main aspects of natural language are syntax and semantics. Syntax is defined by how the words are arranged in a sentence, whereas semantics is the meaning conveyed by the sentence. In some scenarios, the same sentence can convey different meanings. This is known as ambiguity, which is one of the challenges in NLP.

Let's start with a basic application of NLP, i.e. sentiment analysis using a classification model. We'll see a basic implementation of how to classify a tweet as positive or negative.

You can represent a single tweet using the entire vocabulary by a vector V, with 1 indicating a particular word's presence and 0 indicating its absence, but this causes the vector to become sparse, with lots of unwanted zeros.

Tweet=Hello, How are you?

V={1,1,1,1,0,0,0,0,0,0,0,0,…}
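To make the sparse vector concrete, here is a minimal sketch. The eight-word vocabulary and the `presence_vector` helper are made up for illustration; a real vocabulary would have thousands of entries, which is exactly why most positions end up as zeros.

```python
# Hypothetical toy vocabulary; a real one would hold every word in the corpus.
vocabulary = ["hello", "how", "are", "you", "good", "bad", "happy", "sad"]

def presence_vector(tweet_words, vocab):
    """Return 1 for each vocabulary word present in the tweet, else 0."""
    present = set(tweet_words)
    return [1 if word in present else 0 for word in vocab]

vector = presence_vector(["hello", "how", "are", "you"], vocabulary)
print(vector)  # [1, 1, 1, 1, 0, 0, 0, 0]
```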

Instead, we can simply create a dictionary that maps each word to its number of occurrences in positive tweets and in negative tweets.
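A frequency dictionary like this can be sketched in a few lines. The `build_freqs` name, the `(word, label)` key layout, and the toy tweets are assumptions for illustration, with label 1 meaning positive and 0 meaning negative.

```python
from collections import defaultdict

def build_freqs(tweets, labels):
    """Map (word, label) -> count, where label is 1 (positive) or 0 (negative)."""
    freqs = defaultdict(int)
    for words, label in zip(tweets, labels):
        for word in words:
            freqs[(word, label)] += 1
    return freqs

# Hypothetical, already tokenized toy tweets.
tweets = [["happy", "great", "day"], ["sad", "bad", "day"], ["happy", "happy"]]
labels = [1, 0, 1]

freqs = build_freqs(tweets, labels)
print(freqs[("happy", 1)], freqs[("day", 0)])  # 3 1
```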

It's important to pre-process tweets before counting words. We can pre-process tweets using the following basic steps.

- Eliminate handles and URLs.

- Tokenize the string into words.

- Remove stop words like "and, is, a, on, etc."

- Stemming, i.e. converting every word to its stem. For example, dancing and danced both become 'danc'. You can use the Porter stemmer to take care of this.

- Convert all your words to lower case.
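The steps above can be sketched with nothing but the standard library. This is a rough illustration, not a production tokenizer: the stop-word set is a tiny made-up list, lower-casing is done before tokenizing for convenience, and stemming is left out because it would need an external library such as NLTK's `PorterStemmer`.

```python
import re

# Small illustrative stop-word list; an assumption, not a standard list.
STOP_WORDS = {"and", "is", "a", "on", "the", "to", "are"}

def preprocess(tweet):
    """Clean a raw tweet and return a list of lower-case tokens."""
    tweet = re.sub(r"https?://\S+", "", tweet)       # drop URLs
    tweet = re.sub(r"@\w+", "", tweet)               # drop handles
    tokens = re.findall(r"[a-z']+", tweet.lower())   # lower-case, then tokenize
    return [t for t in tokens if t not in STOP_WORDS]

cleaned = preprocess("@user The movie is GREAT and fun! https://example.com")
print(cleaned)  # ['movie', 'great', 'fun']
```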

We can create a vector representation of each tweet using 3 terms, i.e. a bias term, a positive term and a negative term:

x=[1, Σ positive_weights, Σ negative_weights]

Here Σ positive_weights is the sum of the positive weights (the counts in positive tweets) of all words in the tweet, while Σ negative_weights is the sum of their negative weights.
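As a small sketch of this feature vector, assume the weights are the raw counts from a frequency dictionary keyed by `(word, label)`. The `extract_features` helper and the counts below are hypothetical.

```python
def extract_features(words, freqs):
    """Return [bias, sum of positive counts, sum of negative counts]."""
    pos = sum(freqs.get((w, 1), 0) for w in words)
    neg = sum(freqs.get((w, 0), 0) for w in words)
    return [1, pos, neg]

# Hypothetical counts: label 1 = positive tweets, label 0 = negative tweets.
freqs = {("happy", 1): 3, ("happy", 0): 1, ("day", 1): 1, ("day", 0): 1, ("sad", 0): 2}

x = extract_features(["happy", "day"], freqs)
print(x)  # [1, 4, 2]
```

Words that never appeared in training simply contribute 0 to both sums.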

Once we have the vector representation, we can train the model using the logistic regression algorithm, which predicts with the following formula:

h(x) = 1 / (1 + e^(−θT x))

Here θT is the transposed weight vector, holding the weights for the positive and negative terms as well as the bias term. An output above 0.5 is read as a positive tweet.
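Here is a minimal sketch of logistic regression trained with plain batch gradient descent on three-element feature vectors. The training data, learning rate and epoch count are arbitrary choices for illustration; in practice you would likely reach for a library implementation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(theta, x):
    """Probability that the tweet with features x is positive."""
    return sigmoid(sum(t * xi for t, xi in zip(theta, x)))

def train(X, y, lr=0.01, epochs=500):
    """Batch gradient descent on the logistic (cross-entropy) loss."""
    theta = [0.0] * len(X[0])
    m = len(X)
    for _ in range(epochs):
        grad = [0.0] * len(theta)
        for xi, yi in zip(X, y):
            err = predict(theta, xi) - yi
            for j, xij in enumerate(xi):
                grad[j] += err * xij
        theta = [t - lr * g / m for t, g in zip(theta, grad)]
    return theta

# Hypothetical features [bias, positive sum, negative sum] and labels.
X = [[1, 4, 1], [1, 5, 0], [1, 1, 4], [1, 0, 5]]
y = [1, 1, 0, 0]

theta = train(X, y)
print(predict(theta, [1, 4, 1]) > 0.5)  # True
```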

Now, let's understand another important algorithm that can be used here, i.e. naive Bayes. Naive Bayes is a probability-based technique built on Bayes' theorem.

We convert the number of occurrences of a word into a probability by dividing it by the total number of words in the respective class.

For example:

p("happy"|pos) = freq("happy", pos) / total(pos)

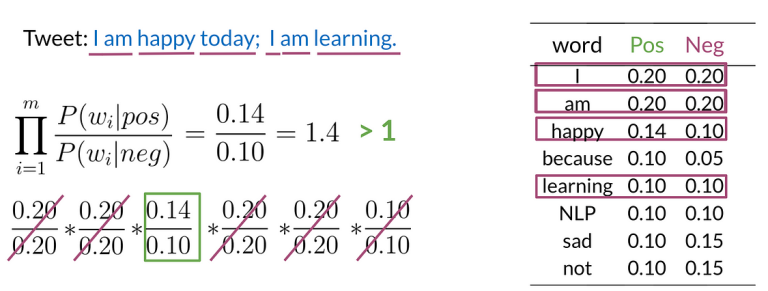

Here, instead of training, we calculate the relative probability of occurrence of each word in the tweet, i.e. p(word|positive)/p(word|negative), and multiply these ratios together. If the product is greater than 1, we predict a positive tweet, and a negative one if it is less than 1.

We can easily understand this from the following example.
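As a rough sketch of this ratio test, assume we already have word counts per class. The counts and the `likelihood_ratio` helper are made up, both classes are assumed to be equally likely beforehand, and words missing from either class are skipped here, which is a simplification.

```python
# Hypothetical word counts per class.
pos_counts = {"happy": 3, "day": 1, "sad": 1}
neg_counts = {"happy": 1, "day": 1, "sad": 3}

def likelihood_ratio(words, pos_counts, neg_counts):
    """Product of p(word|positive) / p(word|negative) over the tweet's words."""
    n_pos = sum(pos_counts.values())
    n_neg = sum(neg_counts.values())
    ratio = 1.0
    for w in words:
        # Skip words missing from either class (assumption for this sketch).
        if w in pos_counts and w in neg_counts:
            ratio *= (pos_counts[w] / n_pos) / (neg_counts[w] / n_neg)
    return ratio

score = likelihood_ratio(["happy", "day"], pos_counts, neg_counts)
print("positive" if score > 1 else "negative")  # positive
```

For "happy day" the ratio is about 3 × 1 = 3, which is above 1, so the tweet is predicted as positive.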

Now there is a problem with this, known as the zero problem. If a word in the vocabulary never appears in one class, its probability for that class is zero, which forces the whole product for that class to 0. A single rare word can then throw off the prediction, much like an outlier. This can be solved using the concept of Laplace smoothing.

Rather than the usual formula

p(w|class) = freq(w, class) / total(class)

we use the following formula

p(w|class) = (freq(w, class) + 1) / (total(class) + V)

Here V is the number of unique words in the vocabulary. A constant, generally 1, is added to the numerator, which helps avoid zero probabilities, and V is added to the denominator so that the probabilities over the vocabulary still sum to 1 instead of exceeding it.
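Laplace smoothing fits in a few lines. This is a sketch under assumed toy counts; `smoothed_prob` and its `alpha` parameter (the constant added to the numerator) are hypothetical names.

```python
def smoothed_prob(word, counts, vocab_size, alpha=1):
    """Laplace-smoothed p(word|class) from that class's word counts."""
    n_class = sum(counts.values())
    return (counts.get(word, 0) + alpha) / (n_class + alpha * vocab_size)

# Hypothetical counts; "sad" never appears in positive tweets.
pos_counts = {"happy": 3, "day": 1}
neg_counts = {"sad": 3, "day": 1}
vocab = set(pos_counts) | set(neg_counts)
V = len(vocab)  # 3 unique words

p = smoothed_prob("sad", pos_counts, V)  # (0 + 1) / (4 + 3)
total = sum(smoothed_prob(w, pos_counts, V) for w in vocab)
print(p > 0)  # True
```

The unseen word now gets a small non-zero probability (1/7 here), and the smoothed probabilities over the three vocabulary words still add up to 1.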

This was a basic introduction to the concepts and algorithms of natural language processing for beginners. From here you can explore further topics such as representing text in vector spaces and similarity measures such as Euclidean distance and cosine similarity.

Thanks for reading till the end!!