Are you a beginner in Machine Learning? Trust me, you’ll want to work hands-on with training models…

By the end of this blog you’ll have worked through the following steps!

- Data Understanding

- Data Preparation

- Modelling

- Model Evaluation and Comparison

- Hyperparameter Tuning (and cross validation)

- Unit and Integration Testing

- Deployment (use the actual model in a website!)

Scary? It’s worth it, guys! Follow along with me!

What’s CRISP-DM and why are we using it here?

CRISP-DM stands for Cross-Industry Standard Process for Data Mining. It’s an established standard for performing data analysis and model training. As beginners, let’s stick to it to get reliable results.

USING A DATASET: Work on a standard dataset available on the internet. Open-source platforms like Kaggle support the machine learning community by offering reliable datasets to work on. I’ve used the mpg dataset from Kaggle (this is a very small dataset, a few KB at most, requiring very little processing power). Use a processing environment of your choice (Python IDLE, Google Colab, Jupyter Notebook or Visual Studio Code).

Download the dataset from the link below!

Python has proven to be a simple, beginner-friendly yet efficient language for machine learning tasks! I’m sticking to it throughout the lesson.

DEFINE YOUR AIM: Build a regression model, following CRISP-DM, to predict the fuel efficiency (mpg) of car models.

If you are completely clueless about this, analyse the columns of your dataset. Then choose the target variable and the kind of machine learning task you want to perform on it: regression or classification. (For the mpg dataset, you’ll realise that predicting the fuel efficiency from other factors like acceleration, horsepower, etc. makes the most sense, and since mpg is a continuous number, that is a regression task.)

DATA UNDERSTANDING

The given dataset includes details of mpg, cylinders, displacement, horsepower, weight, acceleration, model_year, origin and model name.

- Cylinders: Number of cylinders in the engine.

- Displacement: Engine displacement, typically measured in cubic inches or litres.

- Horsepower: Engine power output, measured in horsepower.

- Weight: Vehicle weight, typically measured in kilograms or pounds (check the units in your copy of the dataset).

- Acceleration: Acceleration performance of the vehicle, usually given as the time in seconds needed to reach a set speed.

Dependent / Target variable: mpg (for fuel efficiency)

Independent variables: cylinders, displacement, horsepower, weight, acceleration, model_year, origin

The column model_name can be used for purposes such as data identification, labelling, exploration, etc.

model_name is intuitively not considered for model training because

1. it is a string (non-numerical datatype)

2. do you think a vehicle’s model name can influence its mpg?

Data Loading

import pandas as pd

# Load the dataset (use your own path to the dataset!)
data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

Data Description

# Display the first few rows of the dataset
print(data.head())

# Check the data types and missing values (info() prints its report itself)
data.info()

# Summary statistics
print(data.describe())

Try to interpret as much information as you can from your output (mean, standard deviation, maximum values, etc. for numeric columns, and also the datatype of each column involved).

Histograms help in visualising skewness or unusual patterns in numerical values.

import matplotlib.pyplot as plt

# Plot histograms for numerical variables
data.hist(figsize=(10, 8))
plt.tight_layout()
plt.show()

Here, displacement and weight are skewed, while acceleration is roughly normally distributed (convenient, as it means the datapoints are spread symmetrically around the mean).
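If you would rather put a number on what the histograms show, pandas can compute skewness directly. Here is a minimal sketch on synthetic data; the column names `symmetric` and `right_skewed` are made up for illustration and are not columns of the mpg dataset. Values near 0 suggest a symmetric distribution, while clearly positive values suggest a long right tail.

```python
import numpy as np
import pandas as pd

# Synthetic stand-ins (assumption): one bell-shaped column, one right-skewed column
rng = np.random.default_rng(0)
demo = pd.DataFrame({
    "symmetric": rng.normal(loc=15.0, scale=2.5, size=2000),
    "right_skewed": rng.exponential(scale=100.0, size=2000),
})

# Sample skewness per column: close to 0 means symmetric, positive means right tail
skewness = demo.skew()
print(skewness)
```

On the real dataset, the same call would be `data.skew(numeric_only=True)`.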

Scatter plots help identify any linear or nonlinear relationships between pairs of variables.

Positive Correlation: In a scatter plot with a positive correlation, as one variable increases, the other variable also tends to increase.

Negative Correlation: In a scatter plot with a negative correlation, as one variable increases, the other variable tends to decrease.

No Correlation: If there is no discernible pattern or trend in the scatter plot, or if the points are scattered randomly without forming a clear upward or downward slope, it suggests that there is no linear relationship between the variables.
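The three cases above can also be read off the Pearson correlation coefficient, which ranges from -1 to +1. The sketch below uses synthetic data (the column names `positive`, `negative` and `unrelated` are invented for this example) just to show what each case looks like numerically.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)
x = rng.uniform(0, 10, size=500)

# Synthetic columns (assumption): built to rise with x, fall with x, or ignore x
demo = pd.DataFrame({
    "x": x,
    "positive": 2.0 * x + rng.normal(0, 1, size=500),
    "negative": -3.0 * x + rng.normal(0, 1, size=500),
    "unrelated": rng.normal(0, 1, size=500),
})

# Pearson correlation of every column with x
corr_with_x = demo.corr()["x"]
print(corr_with_x.round(2))
```

A value close to +1 or -1 corresponds to a tight upward or downward cloud in the scatter plot, and a value near 0 to a shapeless one.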

import seaborn as sns

# Plot scatter plots for pairwise relationships
sns.pairplot(data)
plt.show()

Here there is a roughly linear relationship between mpg and weight (-), acceleration (+), displacement (-) and horsepower (-).

Analysing the correlation matrix helps identify strong correlations (positive or negative) between variables. This helps identify potential predictors for the regression model.

# Calculate the correlation matrix (numeric columns only; text columns are skipped)
correlation_matrix = data.corr(numeric_only=True)

# Plot the correlation matrix as a heatmap
plt.figure(figsize=(10, 8))
sns.heatmap(correlation_matrix, annot=True, cmap='coolwarm', fmt=".2f")
plt.title("Correlation Matrix")
plt.show()

Here there is a strong correlation between mpg and weight, cylinders, horsepower and displacement. There is also a good correlation between mpg and origin, model_year and acceleration.

DATA PREPARATION

This phase is critical in machine learning because the quality of the data directly affects the performance and accuracy of the resulting models.

Misleading data can alter the course of the model being trained!

Our data has missing values (if you examine it closely, you will find ‘?’ in certain cells of your csv file), so let us limit our data preparation step to handling these missing values.

Replacing ‘?’ with pd.NA

import pandas as pd

# Replace '?' with a missing-value marker in the 'horsepower' column
data['horsepower'] = data['horsepower'].replace('?', pd.NA)

# Convert the 'horsepower' column to numeric
data['horsepower'] = pd.to_numeric(data['horsepower'])
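As an alternative to the two-step replacement, `pd.to_numeric` can do both jobs at once with `errors="coerce"`, which turns anything it cannot parse (including '?') into NaN. A minimal sketch on a tiny hand-made frame (the values are invented for illustration):

```python
import pandas as pd

# Tiny stand-in for the raw column (assumption): numbers stored as text, '?' for missing
raw = pd.DataFrame({"horsepower": ["130", "165", "?", "150", "?"]})

# Unparseable entries become NaN and the column becomes float64
raw["horsepower"] = pd.to_numeric(raw["horsepower"], errors="coerce")

n_missing = int(raw["horsepower"].isna().sum())
print(raw.dtypes)
print("missing:", n_missing)
```

The trade-off is that `errors="coerce"` silently hides any other unexpected text too, so it is worth checking the count of missing values afterwards.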

DATA IMPUTATION

The null values appear to be random. Several methods of imputation exist. Try out the code given below for each method and you’ll realise Python libraries make our work very easy!

MEAN IMPUTATION

# Impute missing values in the 'horsepower' column with the mean
mean_horsepower = data['horsepower'].mean()
data['horsepower'] = data['horsepower'].fillna(mean_horsepower)

MEDIAN IMPUTATION

# Median imputation for the 'horsepower' column
median_horsepower = data['horsepower'].median()
data['horsepower_median_imputed'] = data['horsepower'].fillna(median_horsepower)

MODE IMPUTATION

# Mode imputation for the 'horsepower' column
mode_horsepower = data['horsepower'].mode()[0]
data['horsepower_mode_imputed'] = data['horsepower'].fillna(mode_horsepower)

CONSTANT IMPUTATION

# Constant imputation for the 'horsepower' column
# (a numeric constant keeps the column numeric; a string such as 'Unknown' would turn it into an object column)
data['horsepower_constant_imputed'] = data['horsepower'].fillna(0)

FORWARD FILL IMPUTATION

# Forward fill imputation for the 'horsepower' column
data['horsepower_ffill_imputed'] = data['horsepower'].ffill()
# use .bfill() for backward fill imputation

INTERPOLATION IMPUTATION

# Linear interpolation for the 'horsepower' column
data['horsepower_interpolated'] = data['horsepower'].interpolate(method='linear')

# Time-based interpolation (e.g., for time series data); 'column' is a placeholder name
# and method='time' needs a DataFrame with a DatetimeIndex
data['column'] = data['column'].interpolate(method='time')

PREDICTIVE IMPUTATION

from sklearn.linear_model import LinearRegression
import pandas as pd

# Assuming 'data' is your DataFrame with missing values in the 'horsepower' column

# Step 2: Split the data into rows to impute and rows to train on
imputation_set = data[data['horsepower'].isnull()]
training_set = data.dropna(subset=['horsepower'])

# Step 3: Preprocess data (numeric columns only, since LinearRegression cannot handle strings)
X_train = training_set.drop(columns=['horsepower']).select_dtypes(include='number')  # Features
y_train = training_set['horsepower']  # Target variable
X_impute = imputation_set[X_train.columns]  # Features for imputation

# Step 4: Train a predictive model
model = LinearRegression()
model.fit(X_train, y_train)

# Step 5: Predict missing values
predicted_values = model.predict(X_impute)

# Step 6: Impute missing values
data.loc[data['horsepower'].isnull(), 'horsepower'] = predicted_values

Coming back to this method later, after understanding modelling, will help.

K-Nearest Neighbours (KNN) IMPUTATION

from sklearn.impute import KNNImputer

# Create KNN imputer object
imputer = KNNImputer(n_neighbors=5)

# Impute missing values using KNN for the 'horsepower' column
data_imputed = pd.DataFrame(imputer.fit_transform(data[['horsepower']]), columns=['horsepower_knn_imputed'], index=data.index)
data['horsepower_knn_imputed'] = data_imputed['horsepower_knn_imputed']
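One thing worth knowing: KNN imputation finds "neighbours" using the other columns, so it becomes more informative when the imputer sees more than one column. The sketch below is an illustration on a tiny hand-made frame (the numbers are invented, not taken from the mpg dataset): cars with similar weight donate their horsepower to the rows where it is missing.

```python
import numpy as np
import pandas as pd
from sklearn.impute import KNNImputer

# Hand-made example (assumption): three light cars and three heavy cars
cars = pd.DataFrame({
    "weight": [2000.0, 2100.0, 2050.0, 4000.0, 4100.0, 4050.0],
    "horsepower": [70.0, 75.0, np.nan, 150.0, 160.0, np.nan],
})

# Each missing horsepower is filled with the mean of its 2 nearest neighbours by weight
imputer = KNNImputer(n_neighbors=2)
filled = pd.DataFrame(imputer.fit_transform(cars), columns=cars.columns)
print(filled)
```

The light car gets a value averaged from the other light cars, and the heavy car from the other heavy cars, which a plain mean over the whole column would not do.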

FEATURE SELECTION

Feature selection is important for improving model performance and handling the challenges of high-dimensional data. High-dimensional datasets can lead to longer training times, increased computational complexity and a higher risk of overfitting. Irrelevant features can also be misleading. There are several methods for choosing appropriate features from the set of all available columns in the dataset (by this time, you should have realised that columns essentially become your feature set).

a) Data Visualisation

Interpret relationships between existing features. Here, using scatter plots and correlation matrices, we see that there are relationships between mpg and factors like weight, acceleration, cylinders, horsepower, etc. (check the plots above).

Usually in machine learning, it is helpful for features to be as independent of each other as possible. This is because independent features ensure that each feature contributes unique information to the model, which helps avoid redundancy.

In some cases features may be correlated and still be meaningful. Example: In NLP, word frequencies of related words may be correlated and still contribute useful information.
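To spot redundant features in practice, one simple approach is to list the feature pairs whose absolute correlation is above some cut-off. The sketch below uses synthetic data and a cut-off of 0.8; both are assumptions chosen for illustration, not values recommended by any library.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(1)
weight = rng.normal(3000, 800, size=300)

# Synthetic features (assumption): displacement is built to follow weight closely
features = pd.DataFrame({
    "weight": weight,
    "displacement": 0.06 * weight + rng.normal(0, 10, size=300),
    "acceleration": rng.normal(15, 2.5, size=300),
})

# Absolute correlations, keeping only the upper triangle so each pair appears once
corr = features.corr().abs()
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))

# Pairs above the (hypothetical) 0.8 cut-off are candidates for dropping one feature
redundant_pairs = [
    (row, col)
    for row in upper.index
    for col in upper.columns
    if upper.loc[row, col] > 0.8
]
print(redundant_pairs)
```

Whether to actually drop one feature of a pair is still a judgment call, as the NLP example above shows.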

b) Recursive Feature Elimination (RFE)

Iteratively removes the least important features, based on the coefficients of a specified estimator, until the desired number of features is reached.

import pandas as pd
from sklearn.feature_selection import RFE
from sklearn.linear_model import LinearRegression

# Load the dataset
data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

# Clean 'horsepower' ('?' becomes NaN, then mean-imputed) so the estimator gets numeric input
data['horsepower'] = pd.to_numeric(data['horsepower'], errors='coerce')
data['horsepower'] = data['horsepower'].fillna(data['horsepower'].mean())

# 'X' contains the numeric features and 'y' contains the target variable
X = data.drop(columns=['mpg']).select_dtypes(include='number')
y = data['mpg']

estimator = LinearRegression()
rfe = RFE(estimator, n_features_to_select=5)  # Select top 5 features
rfe.fit(X, y)
selected_features = X.columns[rfe.support_]
print("Selected Features:", selected_features)

c) SelectKBest

Selects the top k features based on statistical tests like the ANOVA F-value or mutual information score (no need to get into the workings of these methods for now).

import pandas as pd
from sklearn.feature_selection import SelectKBest, f_regression

# Load the dataset
data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

# Clean 'horsepower' ('?' becomes NaN, then mean-imputed)
data['horsepower'] = pd.to_numeric(data['horsepower'], errors='coerce')
data['horsepower'] = data['horsepower'].fillna(data['horsepower'].mean())

# 'X' contains the numeric features and 'y' contains the target variable
X = data.drop(columns=['mpg']).select_dtypes(include='number')
y = data['mpg']

k = 5  # Select top 5 features
selector = SelectKBest(score_func=f_regression, k=k)
selector.fit(X, y)
selected_features = X.columns[selector.get_support()]
print("Selected Features:", selected_features)

d) L1 Regularization (LASSO)

Penalizes the absolute size of coefficients, forcing some coefficients to be exactly zero and effectively performing feature selection.

import pandas as pd
from sklearn.linear_model import Lasso

# Load the dataset
data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

# Clean 'horsepower' ('?' becomes NaN, then mean-imputed)
data['horsepower'] = pd.to_numeric(data['horsepower'], errors='coerce')
data['horsepower'] = data['horsepower'].fillna(data['horsepower'].mean())

# 'X' contains the numeric features and 'y' contains the target variable
X = data.drop(columns=['mpg']).select_dtypes(include='number')
y = data['mpg']

lasso = Lasso(alpha=0.1)  # Set regularization parameter alpha
lasso.fit(X, y)
selected_features = X.columns[lasso.coef_ != 0]
print("Selected Features:", selected_features)

e) Tree-based Methods: Feature Importance

Calculates feature importance scores indicating the contribution of each feature to the model’s predictive performance.

import pandas as pd
from sklearn.ensemble import RandomForestRegressor

# Load the dataset
data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

# Clean 'horsepower' ('?' becomes NaN, then mean-imputed)
data['horsepower'] = pd.to_numeric(data['horsepower'], errors='coerce')
data['horsepower'] = data['horsepower'].fillna(data['horsepower'].mean())

# 'X' contains the numeric features and 'y' contains the target variable
X = data.drop(columns=['mpg']).select_dtypes(include='number')
y = data['mpg']

rf = RandomForestRegressor()
rf.fit(X, y)
feature_importances = pd.Series(rf.feature_importances_, index=X.columns).sort_values(ascending=False)
print("Feature Importances:\n", feature_importances)

f) Variance Threshold

Removes features with low variance, which are likely to contain mostly constant values.

import pandas as pd
from sklearn.feature_selection import VarianceThreshold

# Load the dataset
data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

# Clean 'horsepower' ('?' becomes NaN, then mean-imputed)
data['horsepower'] = pd.to_numeric(data['horsepower'], errors='coerce')
data['horsepower'] = data['horsepower'].fillna(data['horsepower'].mean())

# 'X' contains the numeric features and 'y' contains the target variable
X = data.drop(columns=['mpg']).select_dtypes(include='number')
y = data['mpg']

threshold = 0.1  # Set threshold for variance
selector = VarianceThreshold(threshold=threshold)
selector.fit(X)
selected_features = X.columns[selector.get_support()]
print("Selected Features:", selected_features)

DIMENSIONALITY REDUCTION using PCA

PCA stands for Principal Component Analysis. It is a dimensionality reduction technique used to transform high-dimensional data into a lower-dimensional space while preserving most of the important information. PCA achieves this by finding a new set of orthogonal axes, called principal components, along which the variance of the data is maximised.

Scree plots and cumulative explained variance help us choose the appropriate number of components.
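Because PCA looks for directions of maximum variance, a feature measured on a large scale (like weight in the thousands) can dominate the components purely because of its units. The sketch below, on synthetic data with invented scales, compares the explained variance ratio with and without standardising the features first. Treat the standardisation step as a common practice I am assuming here, not something this walkthrough requires.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 500

# Three independent synthetic features on very different scales (assumption)
X_demo = np.column_stack([
    rng.normal(3000, 800, size=n),   # large-scale feature, e.g. a weight
    rng.normal(15, 2.5, size=n),     # small-scale feature
    rng.normal(6, 1.5, size=n),      # small-scale feature
])

# PCA on raw values: the large-scale feature swallows almost all the variance
raw_ratio = PCA().fit(X_demo).explained_variance_ratio_

# PCA after standardising: each feature starts with unit variance
scaled_ratio = PCA().fit(StandardScaler().fit_transform(X_demo)).explained_variance_ratio_

print("raw:   ", raw_ratio.round(4))
print("scaled:", scaled_ratio.round(4))
```

If the first component explains nearly everything on raw data, it is worth checking whether that reflects real structure or just the units of one column.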

import matplotlib.pyplot as plt
from sklearn.decomposition import PCA

# Impute missing 'horsepower' values with the mean
mean_horsepower = data['horsepower'].mean()
data['horsepower'] = data['horsepower'].fillna(mean_horsepower)

# Drop the 'name' column from the dataset
data = data.drop(columns=['name'])

# 'X' contains features and 'y' contains the target variable
X = data.drop(columns=['mpg'])
y = data['mpg']

pca = PCA()
pca.fit(X)

# Scree plot
plt.plot(range(1, len(pca.explained_variance_ratio_) + 1), pca.explained_variance_ratio_, marker='o')
plt.xlabel('Number of Components')
plt.ylabel('Explained Variance Ratio')
plt.title('Scree Plot')
plt.show()

Interpreting a scree plot involves identifying the “elbow point”, the point where the plot begins to level off. This point indicates the number of principal components beyond which additional components explain comparatively little additional variance. The number of principal components to retain can be decided based on the location of the elbow point.

Principal components before the elbow point explain a significant portion of the total variance and are usually retained, while components after the elbow point explain comparatively little variance and may be discarded.

import numpy as np
import matplotlib.pyplot as plt
from sklearn.decomposition import PCA

# Impute missing 'horsepower' values with the mean
mean_horsepower = data['horsepower'].mean()
data['horsepower'] = data['horsepower'].fillna(mean_horsepower)

# Drop the 'name' column (errors='ignore' lets this run even if it was already dropped)
data = data.drop(columns=['name'], errors='ignore')

# 'X' contains features and 'y' contains the target variable
X = data.drop(columns=['mpg'])
y = data['mpg']

pca = PCA()
pca.fit(X)

# Cumulative explained variance
cumulative_variance = np.cumsum(pca.explained_variance_ratio_)
plt.plot(range(1, len(cumulative_variance) + 1), cumulative_variance, marker='o')
plt.xlabel('Number of Components')
plt.ylabel('Cumulative Explained Variance Ratio')
plt.title('Cumulative Explained Variance Plot')
plt.show()

Cumulative explained variance is the cumulative sum of the variance explained by each principal component. It represents the proportion of total variance in the dataset that is accounted for by including a certain number of principal components.

In this context, variance refers to how much the data spreads out along each principal component (not to the bias-variance sense of a model’s sensitivity to its training data).

A high cumulative explained variance indicates that a large portion of the total variance in the dataset is retained by including the corresponding number of principal components.

Here we choose to use 2 components, based on the interpretation of the above plots.
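Reading the number of components off a plot can also be done in code: pick the smallest number of components whose cumulative explained variance reaches a target. The sketch below uses synthetic data with two hidden factors and a 95% target; the data and the threshold are assumptions for illustration.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)

# Synthetic data (assumption): 6 observed features driven by 2 hidden factors plus small noise
latent = rng.normal(size=(400, 2))
mixing = rng.normal(size=(2, 6))
X_demo = latent @ mixing + 0.05 * rng.normal(size=(400, 6))

pca_demo = PCA().fit(X_demo)
cumulative = np.cumsum(pca_demo.explained_variance_ratio_)

# Index of the first cumulative value >= 0.95, converted to a component count
n_components = int(np.searchsorted(cumulative, 0.95) + 1)
print("cumulative variance:", cumulative.round(4))
print("components needed for 95%:", n_components)
```

scikit-learn can also do this selection itself: passing a float between 0 and 1, such as `PCA(n_components=0.95)`, keeps just enough components to explain that share of the variance.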

# Assuming 'pca' is your fitted PCA model
loadings = pca.components_

# Extract loadings for PC1 and PC2
loading_pc1 = loadings[0]  # Loadings for PC1
loading_pc2 = loadings[1]  # Loadings for PC2

# Assuming 'X' contains feature names
feature_names = X.columns

# Combine feature names with loadings for PC1 and PC2
pc1_loadings = pd.DataFrame({'Feature': feature_names, 'Loading_PC1': loading_pc1})
pc2_loadings = pd.DataFrame({'Feature': feature_names, 'Loading_PC2': loading_pc2})

# Display the loadings for PC1 and PC2
print("Loadings for PC1:")
print(pc1_loadings)
print("\nLoadings for PC2:")
print(pc2_loadings)

ONE HOT ENCODING

One-hot encoding is a method used to convert categorical variables into a numerical format (how else would you handle string, character or other non-numerical data?). It represents each category as a binary vector in which only one element is “hot” (set to 1) and all others are “cold” (set to 0).

from sklearn.preprocessing import OneHotEncoder

# Separate discrete and continuous variables by dtype
# (note: this treats every integer column as categorical, not just 'cylinders')
discrete_vars = data.select_dtypes(include=['int64']).columns
continuous_vars = data.select_dtypes(include=['float64']).columns

# Convert discrete variables to a 2D NumPy array
discrete_data = data[discrete_vars].to_numpy()

# One-hot encode discrete variables
encoder = OneHotEncoder()
discrete_encoded = encoder.fit_transform(discrete_data)

# Get the feature names after one-hot encoding
feature_names = encoder.get_feature_names_out(input_features=discrete_vars)

# Convert the encoded data to a DataFrame (same index as 'data' so rows line up)
discrete_encoded_df = pd.DataFrame(discrete_encoded.toarray(), columns=feature_names, index=data.index)

# Combine encoded discrete variables with continuous variables
data_encoded = pd.concat([discrete_encoded_df, data[continuous_vars]], axis=1)

# Display the encoded dataset
print("Encoded Dataset:")
print(data_encoded.head())

The cylinders column has values from 3 to 8 (in the given dataset). It has been encoded to avoid implying any kind of ranking among these values. Appropriate column names (here cylinders_3, cylinders_4, etc.) are generated automatically. Each row of the dataframe has a value of 1 in the column that matches its category and 0 in the others.
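If you only want to encode one specific column, pandas offers a shorter route with `get_dummies`. A minimal sketch on a hand-made frame (the rows are invented for illustration):

```python
import pandas as pd

# Hand-made example (assumption): four cars with 4, 6 or 8 cylinders
cars = pd.DataFrame({
    "cylinders": [4, 8, 6, 4],
    "weight": [2200, 4300, 3100, 2100],
})

# Encode only 'cylinders'; other columns are passed through unchanged
encoded = pd.get_dummies(cars, columns=["cylinders"], dtype=int)
print(encoded)
```

Each row ends up with exactly one 1 among the `cylinders_*` columns. `OneHotEncoder` is usually the better fit inside a scikit-learn pipeline, since it remembers the categories seen during fitting.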

MODEL EVALUATION

Here you will see the performance of various machine learning regression models. Let us compare them on the basis of standard regression metrics like Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE) and R². (Accuracy, precision, recall and f1_score are classification metrics and do not apply to a continuous target like mpg.)
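To make the regression metrics concrete, here is a small sketch that computes them with scikit-learn on four made-up predictions (the numbers are invented purely for illustration):

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

# Made-up true and predicted mpg values (assumption)
y_true = np.array([18.0, 25.0, 30.0, 15.0])
y_pred = np.array([20.0, 24.0, 28.0, 15.0])

mse = mean_squared_error(y_true, y_pred)    # average of squared errors
rmse = float(np.sqrt(mse))                  # same units as the target
mae = mean_absolute_error(y_true, y_pred)   # average of absolute errors
r2 = r2_score(y_true, y_pred)               # share of variance explained

print(f"MSE={mse:.3f} RMSE={rmse:.3f} MAE={mae:.3f} R2={r2:.3f}")
```

MSE punishes large errors more heavily than MAE, while RMSE is often easier to interpret because it is in the same units as mpg.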

You need not know the working principle of each model listed below. Let us just use them for comparison purposes.

- Linear Regression: A simple regression model that assumes a linear relationship between the independent variables and the target variable.

- Ridge Regression: A regression technique that adds an L2 penalty term to the ordinary least squares objective to prevent overfitting.

- Lasso Regression: Similar to Ridge Regression but uses the L1 regularization penalty, which tends to produce sparse coefficients by setting some coefficients to zero.

- Decision Tree Regressor: A tree-based regression model that recursively partitions the feature space into regions and predicts the target variable by averaging the observations in each region.

- Random Forest Regressor: An ensemble learning method that constructs multiple decision trees and averages their predictions to reduce overfitting and improve accuracy.

- Gradient Boosting Regressor: Another ensemble learning technique that builds a sequence of decision trees, with each tree correcting the errors of the previous ones, to create a strong predictive model.

- XGBoost Regressor: An optimized implementation of gradient boosting that offers efficient computation and improved performance.

- Support Vector Regressor (SVR): A regression model that uses support vector machines to find the hyperplane that best fits the data while minimizing errors.

- K-Nearest Neighbors Regressor (KNN): A non-parametric regression method that predicts the target variable by averaging the values of its k nearest neighbors in the feature space.

- Neural Network Regressor: A regression model based on artificial neural networks that consists of multiple layers of interconnected nodes (neurons) and can capture complex patterns in the data.

- Elastic Net: A hybrid regression technique that combines the penalties of Ridge and Lasso regression to achieve a balance between feature selection and regularization.

- Bayesian Ridge Regression: A Bayesian approach to linear regression that incorporates prior distributions over the model parameters.

- Huber Regressor: A robust regression model that uses squared error for small residuals and absolute error for large ones, providing robustness to outliers in the data.

- Isotonic Regression: A non-parametric regression method that fits a monotonic (non-decreasing) function to the data, preserving the order of the observations.

- Gaussian Process Regressor: A non-parametric regression technique that models the target variable as a distribution over functions, allowing for uncertainty estimation in predictions.

- CatBoost Regressor: A gradient boosting algorithm optimized for categorical features that offers high performance and handles categorical variables automatically.

- LightGBM Regressor: A gradient boosting framework that uses tree-based learning algorithms and is designed for distributed and efficient training.

- Elastic NetCV: An extension of Elastic Net regression that uses cross-validation to automatically select the best combination of L1 and L2 regularization parameters.

- LGBM Regressor: The scikit-learn style regressor class of LightGBM, optimized for speed, efficiency and accuracy, especially on large datasets.

- AdaBoost Regressor: An adaptive boosting algorithm that combines multiple weak learners (e.g., decision trees) to create a strong ensemble model.

MODEL COMPARISONS

MODELS AND IMPUTATION TECHNIQUES

Let us compare various imputation techniques and their impact on model performance.

import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_squared_error
from sklearn.linear_model import LinearRegression, Ridge, Lasso, ElasticNetCV, BayesianRidge
from sklearn.tree import DecisionTreeRegressor
from sklearn.ensemble import RandomForestRegressor, GradientBoostingRegressor, AdaBoostRegressor
from sklearn.svm import SVR
from sklearn.neighbors import KNeighborsRegressor
from sklearn.neural_network import MLPRegressor
from sklearn.linear_model import ElasticNet, HuberRegressor
#from sklearn.isotonic import IsotonicRegression
from sklearn.gaussian_process import GaussianProcessRegressor
from catboost import CatBoostRegressor
from xgboost import XGBRegressor
from sklearn.impute import SimpleImputer, KNNImputer

# Load the dataset
data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

# Replace '?' with a missing-value marker in the 'horsepower' column
data['horsepower'] = data['horsepower'].replace('?', pd.NA)

# Convert the 'horsepower' column to numeric
data['horsepower'] = pd.to_numeric(data['horsepower'])

# Drop the 'name' column from the dataset
data = data.drop(columns=['name'])

# List of imputation techniques
imputation_techniques = ['mean', 'median', 'most_frequent', 'knn', 'constant']

# List of models
models = [
    LinearRegression(),
    Ridge(),
    Lasso(),
    ElasticNetCV(),
    DecisionTreeRegressor(),
    RandomForestRegressor(),
    GradientBoostingRegressor(),
    AdaBoostRegressor(),
    SVR(),
    KNeighborsRegressor(),
    MLPRegressor(),
    ElasticNet(),
    BayesianRidge(),
    HuberRegressor(),
    GaussianProcessRegressor(),
    CatBoostRegressor(),
    XGBRegressor()
]

# Loop through each imputation technique
for technique in imputation_techniques:
    # Impute missing values using the chosen technique
    if technique == 'knn':
        imputer = KNNImputer(n_neighbors=5)  # Set the number of neighbors as desired
    elif technique == 'constant':
        imputer = SimpleImputer(strategy=technique, fill_value=0)  # Set the desired constant value
    else:
        imputer = SimpleImputer(strategy=technique)
    data_imputed = pd.DataFrame(imputer.fit_transform(data), columns=data.columns)

    # Separate features (X) and target variable (y)
    X = data_imputed.drop(columns=['mpg'])
    y = data_imputed['mpg']

    # Split the dataset into training and testing sets (80-20 split)
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    print(f"Imputation Technique: {technique}")

    # Train and evaluate each model
    for model in models:
        try:
            model.fit(X_train, y_train)  # Train the model
            y_pred = model.predict(X_test)  # Make predictions
            mse = mean_squared_error(y_test, y_pred)  # Calculate Mean Squared Error
            print(f"{model.__class__.__name__} - Mean Squared Error: {mse}")
        except Exception as e:
            print(f"Error occurred with {model.__class__.__name__}: {e}")

MODEL AND FEATURE SELECTION TECHNIQUES

For the code that compares different models across different feature selection techniques, I have used pipelines.

Pipelines in machine learning are a way to simplify the workflow by chaining multiple data processing steps into a single object. They sequentially apply a list of transformations followed by a final estimator.
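Before the full comparison, here is a minimal sketch of a single pipeline so the idea is easy to see: one scaling step followed by one model, fitted and scored as a single object. The data is synthetic, and the choice of `StandardScaler` plus `Ridge` is my own assumption for illustration.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic regression data (assumption): three features on different scales
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(200, 3)) * np.array([800.0, 2.5, 1.5])
y_demo = 40.0 - 0.01 * X_demo[:, 0] + 0.5 * X_demo[:, 1] + rng.normal(0, 0.5, size=200)

X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, test_size=0.2, random_state=42)

# The scaler is fitted on the training data only, then applied before the model
pipe = Pipeline([
    ("scaler", StandardScaler()),
    ("model", Ridge(alpha=1.0)),
])
pipe.fit(X_tr, y_tr)

# score() runs the same transformations on the test data, then returns R^2
r2 = pipe.score(X_te, y_te)
print(f"Test R^2: {r2:.3f}")
```

A practical benefit is that preprocessing learned from the training split is reused unchanged on the test split, which avoids accidentally leaking test information into the preprocessing step.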

import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression, Ridge, Lasso, ElasticNet
from sklearn.tree import DecisionTreeRegressor
from sklearn.ensemble import RandomForestRegressor, GradientBoostingRegressor, AdaBoostRegressor
from sklearn.svm import SVR
from sklearn.neighbors import KNeighborsRegressor
from sklearn.neural_network import MLPRegressor
from sklearn.feature_selection import RFE, SelectKBest, f_regression
from sklearn.feature_selection import VarianceThreshold
from sklearn.feature_selection import SelectFromModel
from sklearn.metrics import mean_squared_error
from sklearn.impute import SimpleImputer
from catboost import CatBoostRegressor
from xgboost import XGBRegressor
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Load the dataset
data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

# Drop the 'name' column from the dataset
data = data.drop(columns=['name'])

# Replace '?' with a missing-value marker in the 'horsepower' column
data['horsepower'] = data['horsepower'].replace('?', pd.NA)

# Convert the 'horsepower' column to numeric
data['horsepower'] = pd.to_numeric(data['horsepower'])

# Impute missing values in 'horsepower' column with mean
imputer = SimpleImputer(strategy='mean')
data['horsepower'] = imputer.fit_transform(data[['horsepower']]).ravel()

# Separate features (X) and target variable (y)
X = data.drop(columns=['mpg'])
y = data['mpg']

# Split the dataset into training and testing sets (80-20 split)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Define a list of feature selection techniques
# (SelectKBest needs a regression score function and k no larger than the number of features)
feature_selection_techniques = [
    ('RFE', RFE(estimator=LinearRegression(), n_features_to_select=5)),
    ('SelectKBest', SelectKBest(score_func=f_regression, k=5)),
    ('VarianceThreshold', VarianceThreshold()),
    ('SelectFromModel', SelectFromModel(LinearRegression())),
]

# Define a list of regression models
regression_models = [
    ('Linear Regression', LinearRegression()),
    ('Ridge Regression', Ridge()),
    ('Lasso Regression', Lasso()),
    ('ElasticNet', ElasticNet()),
    ('Decision Tree Regressor', DecisionTreeRegressor()),
    ('Random Forest Regressor', RandomForestRegressor()),
    ('Gradient Boosting Regressor', GradientBoostingRegressor()),
    ('AdaBoost Regressor', AdaBoostRegressor()),
    ('SVR', SVR()),
    ('K-Nearest Neighbors Regressor', KNeighborsRegressor()),
    ('MLP Regressor', MLPRegressor()),
    ('CatBoost Regressor', CatBoostRegressor(verbose=0)),
    ('XGBoost Regressor', XGBRegressor()),
]

# Perform feature selection and model fitting for each combination
for fs_name, fs_model in feature_selection_techniques:
    for model_name, model in regression_models:
        # Create a pipeline with feature selection and model fitting
        pipeline = Pipeline([
            ('feature_selection', fs_model),
            ('model', model)
        ])

        # Fit the pipeline on the training data
        pipeline.fit(X_train, y_train)

        # Make predictions on the testing data
        y_pred = pipeline.predict(X_test)

        # Calculate Mean Squared Error
        mse = mean_squared_error(y_test, y_pred)

        # Print results
        print(f"{fs_name} - {model_name}: MSE = {mse:.4f}")

MODEL AND DIMENSIONALITY REDUCTION TECHNIQUES

Let us now compare models using Mean Squared Error for several dimensionality reduction techniques. Here is a simple piece of code:

import pandas as pd

from sklearn.impute import SimpleImputer

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.decomposition import PCA

from sklearn.manifold import TSNE

from sklearn.linear_model import LinearRegression, Ridge, Lasso

from sklearn.tree import DecisionTreeRegressor

from sklearn.ensemble import RandomForestRegressor, GradientBoostingRegressor

from xgboost import XGBRegressor

from sklearn.svm import SVR

from sklearn.neighbors import KNeighborsRegressor

from sklearn.neural_network import MLPRegressor

from sklearn.linear_model import ElasticNet, BayesianRidge, HuberRegressor

from sklearn.gaussian_process import GaussianProcessRegressor

from catboost import CatBoostRegressor

from sklearn.metrics import mean_squared_error

# Load the dataset

data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

# Drop the 'name' column

data = data.drop(columns=['name'])

# Convert the 'horsepower' column to numeric; entries such as '?' become NaN

data['horsepower'] = pd.to_numeric(data['horsepower'], errors='coerce')

# Impute missing values in the 'horsepower' column with the mean

mean_horsepower = data['horsepower'].mean()

data['horsepower'] = data['horsepower'].fillna(mean_horsepower)

# Split the dataset into features (X) and target variable (y)

X = data.drop(columns=['mpg'])

y = data['mpg']

# Define the models

models = [

    LinearRegression(),

    Ridge(),

    Lasso(),

    DecisionTreeRegressor(),

    RandomForestRegressor(),

    GradientBoostingRegressor(),

    XGBRegressor(),

    SVR(),

    KNeighborsRegressor(),

    MLPRegressor(),

    ElasticNet(),

    BayesianRidge(),

    HuberRegressor(),

    GaussianProcessRegressor(),

    CatBoostRegressor()

]

# Define the dimensionality reduction techniques

reducers = [

    PCA(),

    TSNE()

]

# Train and evaluate each model with each dimensionality reduction technique

for model in models:

    for reducer in reducers:

        # Fit and transform the data

        X_reduced = reducer.fit_transform(X)

        # Split the data into training and testing sets

        X_train, X_test, y_train, y_test = train_test_split(X_reduced, y, test_size=0.2, random_state=42)

        # Train the model

        model.fit(X_train, y_train)

        # Make predictions

        y_pred = model.predict(X_test)

        # Evaluate the model

        mse = mean_squared_error(y_test, y_pred)

        print(f"{reducer.__class__.__name__} - {model.__class__.__name__} - Mean Squared Error: {mse}")
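The loop above fits each reducer on the full dataset before splitting. As a rough alternative sketch (not the code used in this blog), the scaler, PCA and regressor can be chained in a scikit-learn `Pipeline` so that the reduction is learned from the training split only. The synthetic data from `make_regression` and the choice of 3 components are assumptions made purely for illustration; t-SNE is left out because it cannot transform unseen rows.

```python
from sklearn.datasets import make_regression
from sklearn.decomposition import PCA
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the mpg features (assumption: 6 numeric columns)
X_demo, y_demo = make_regression(n_samples=200, n_features=6, noise=10.0, random_state=42)

# Split first, so the test rows never influence the scaler or PCA
X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, test_size=0.2, random_state=42)

# Scale -> reduce to 3 components -> fit a linear model
pca_pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("pca", PCA(n_components=3)),
    ("reg", LinearRegression()),
])
pca_pipeline.fit(X_tr, y_tr)

pipeline_mse = mean_squared_error(y_te, pca_pipeline.predict(X_te))
print(f"PCA(3) + LinearRegression - Mean Squared Error: {pipeline_mse:.2f}")
```

Swapping `LinearRegression()` for any other regressor from the list keeps the same structure.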

HYPERPARAMETER TUNING

Assuming you guys know what hyperparameters for a model are, let us use a simple code for adjusting hyperparameters and evaluating the models.

Here are the steps to follow

Define Hyperparameter Grid: Create a dictionary such that each key represents a hyperparameter name and its value is a list of values to iterate over.

param_grid = {

    'alpha': [0.1, 0.5, 1.0],

    'learning_rate': [0.01, 0.1, 0.5],

    'n_estimators': [50, 100, 200],

}

Choose a Scoring Metric: Select an appropriate performance metric.

Cross-Validation: Split your dataset into training and validation sets using techniques like k-fold cross-validation. This assesses the model's performance on different subsets of the data, so the chosen hyperparameters are less likely to be overfitted to one particular split.

(explained in the next section)

Hyperparameter Search: Utilise techniques like grid search, random search or Bayesian optimisation to search over the defined hyperparameter grid. Each combination of hyperparameters is evaluated using cross-validation and the chosen metric.

Select Best Model: Identify the model with the best performance.
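Before launching a search, it helps to know how many candidates a grid produces. The sketch below uses scikit-learn's `ParameterGrid` to expand the example grid from the first step; the name `demo_grid` and the 5-fold figure are assumptions for illustration, and the grid itself is only an example (no single estimator in this blog takes all three parameters).

```python
from sklearn.model_selection import ParameterGrid

# Same shape as the example grid in the steps above
demo_grid = {
    'alpha': [0.1, 0.5, 1.0],
    'learning_rate': [0.01, 0.1, 0.5],
    'n_estimators': [50, 100, 200],
}

# ParameterGrid expands the dictionary into every combination of values
combinations = list(ParameterGrid(demo_grid))
n_candidates = len(combinations)

# With 5-fold cross-validation, each candidate is fitted 5 times
n_fits = n_candidates * 5

print(f"{n_candidates} candidates, {n_fits} fits with 5-fold CV")
print("First candidate:", combinations[0])
```

Three values for each of three hyperparameters already gives 27 candidates, which is why grid search gets slow as grids grow.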

import pandas as pd

from sklearn.model_selection import train_test_split, GridSearchCV

from sklearn.linear_model import LinearRegression, Ridge, Lasso, ElasticNet, BayesianRidge, HuberRegressor

from sklearn.tree import DecisionTreeRegressor

from sklearn.ensemble import RandomForestRegressor, GradientBoostingRegressor

from xgboost import XGBRegressor

from sklearn.svm import SVR

from sklearn.neighbors import KNeighborsRegressor

from sklearn.neural_network import MLPRegressor

from sklearn.gaussian_process import GaussianProcessRegressor

from catboost import CatBoostRegressor

from sklearn.metrics import mean_squared_error

# Load the dataset

data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

# Drop the 'name' column

data = data.drop(columns=['name'])

# Convert the 'horsepower' column to numeric; entries such as '?' become NaN

data['horsepower'] = pd.to_numeric(data['horsepower'], errors='coerce')

# Impute missing values in the 'horsepower' column with the mean

mean_horsepower = data['horsepower'].mean()

data['horsepower'] = data['horsepower'].fillna(mean_horsepower)

# Split the dataset into features (X) and target variable (y)

X = data.drop(columns=['mpg'])

y = data['mpg']

# Split the data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Define the models and their respective hyperparameter grids

models = {

    'LinearRegression': (LinearRegression(), {'fit_intercept': [True, False]}),

    'Ridge': (Ridge(), {'alpha': [0.1, 1.0, 10.0]}),

    'Lasso': (Lasso(), {'alpha': [0.1, 1.0, 10.0]}),

    'DecisionTreeRegressor': (DecisionTreeRegressor(), {'max_depth': [None, 10, 20]}),

    'RandomForestRegressor': (RandomForestRegressor(), {'n_estimators': [50, 100, 200]}),

    'GradientBoostingRegressor': (GradientBoostingRegressor(), {'n_estimators': [50, 100, 200]}),

    'XGBRegressor': (XGBRegressor(), {'n_estimators': [50, 100, 200]}),

    'SVR': (SVR(), {'kernel': ['linear', 'rbf'], 'C': [0.1, 1.0, 10.0]}),

    'KNeighborsRegressor': (KNeighborsRegressor(), {'n_neighbors': [5, 10, 20]}),

    'MLPRegressor': (MLPRegressor(), {'hidden_layer_sizes': [(100,), (50, 100, 50)]}),

    'ElasticNet': (ElasticNet(), {'alpha': [0.1, 1.0, 10.0], 'l1_ratio': [0.1, 0.5, 0.9]}),

    'BayesianRidge': (BayesianRidge(), {'alpha_1': [1e-6, 1e-5, 1e-4], 'alpha_2': [1e-6, 1e-5, 1e-4]}),

    'HuberRegressor': (HuberRegressor(), {'epsilon': [1.35, 1.5, 1.75]}),

    'GaussianProcessRegressor': (GaussianProcessRegressor(), {'alpha': [1e-10, 1e-5, 1e-3]}),

    'CatBoostRegressor': (CatBoostRegressor(), {'iterations': [50, 100, 200]})

}

# Define the scoring metric

scoring = 'neg_mean_squared_error'  # Use negative MSE to match scikit-learn's convention

# Perform grid search cross-validation for each model

best_models = {}

best_hyperparameters = {}  # Dictionary to store the best hyperparameters for each model

for model_name, (estimator, param_grid) in models.items():

    grid_search = GridSearchCV(estimator, param_grid, scoring=scoring, cv=5)

    grid_search.fit(X_train, y_train)

    best_models[model_name] = grid_search.best_estimator_

    best_hyperparameters[model_name] = grid_search.best_params_  # Store the best hyperparameters

# Evaluate the best models on the test set and print results with hyperparameters

for model_name, model in best_models.items():

    y_pred = model.predict(X_test)

    mse = mean_squared_error(y_test, y_pred)

    print(f"{model_name} - Hyperparameters: {best_hyperparameters[model_name]} - Mean Squared Error: {mse}")

This code evaluates models based on MSE.

This takes time to run! So don't terminate the process midway.

A lot of training takes place, and if you watch the output you will notice learning rates being reported along the way!
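The steps earlier also mention random search as an alternative to grid search. Here is a minimal sketch of that idea with scikit-learn's `RandomizedSearchCV`, which samples a fixed number of candidates instead of trying every combination. The synthetic data, the parameter values and `n_iter=5` are all assumptions chosen to keep the example quick; they are not tuned for the mpg dataset.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import RandomizedSearchCV

# Synthetic stand-in for the mpg data (assumption, for illustration only)
X_demo, y_demo = make_regression(n_samples=150, n_features=6, noise=5.0, random_state=0)

# 3 x 3 x 3 = 27 possible combinations, but only n_iter of them are tried
param_distributions = {
    'n_estimators': [20, 50, 100],
    'max_depth': [None, 5, 10],
    'min_samples_leaf': [1, 2, 4],
}

random_search = RandomizedSearchCV(
    RandomForestRegressor(random_state=0),
    param_distributions,
    n_iter=5,                          # number of sampled candidates
    scoring='neg_mean_squared_error',  # same convention as the grid search
    cv=3,
    random_state=0,
)
random_search.fit(X_demo, y_demo)

# best_score_ is negative MSE, so flip the sign to read it as MSE
best_mse = -random_search.best_score_
print("Best hyperparameters:", random_search.best_params_)
print("Cross-validated MSE:", best_mse)
```

Random search trades completeness for speed, which can matter when a full grid takes too long to run.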

CROSS VALIDATION

Cross-validation is a technique used to assess the performance and generalisation ability of models by repeatedly splitting the dataset into training and testing sets. It estimates how a model performs on unseen data, which helps detect overfitting. This way, you can check that the model is reliable across different data samples. Here is a sample code to perform 5-fold cross-validation.

import pandas as pd

from sklearn.model_selection import cross_val_score, KFold

from sklearn.linear_model import LinearRegression, Ridge, Lasso, ElasticNetCV

from sklearn.tree import DecisionTreeRegressor

from sklearn.ensemble import RandomForestRegressor, GradientBoostingRegressor, AdaBoostRegressor

from sklearn.svm import SVR

from sklearn.neighbors import KNeighborsRegressor

from sklearn.neural_network import MLPRegressor

from sklearn.linear_model import ElasticNet, BayesianRidge, HuberRegressor

from sklearn.gaussian_process import GaussianProcessRegressor

from catboost import CatBoostRegressor

from xgboost import XGBRegressor

from sklearn.preprocessing import OneHotEncoder

# Load the dataset

data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

# Convert the 'horsepower' column to numeric; entries such as '?' become NaN

data['horsepower'] = pd.to_numeric(data['horsepower'], errors='coerce')

# Impute missing values in the 'horsepower' column with the mean

mean_horsepower = data['horsepower'].mean()

data['horsepower'] = data['horsepower'].fillna(mean_horsepower)

# Drop the 'name' column from the dataset

data = data.drop(columns=['name'])

# Separate discrete and continuous variables

discrete_vars = data.select_dtypes(include=['int64']).columns

# Exclude the target 'mpg' so it does not leak into the features

continuous_vars = data.select_dtypes(include=['float64']).columns.drop('mpg')

# One-hot encode discrete variables

encoder = OneHotEncoder(sparse_output=False)

discrete_encoded = encoder.fit_transform(data[discrete_vars])

discrete_encoded_df = pd.DataFrame(discrete_encoded, columns=encoder.get_feature_names_out(discrete_vars))

# Combine encoded discrete variables with continuous variables

data_encoded = pd.concat([discrete_encoded_df, data[continuous_vars]], axis=1)

# Define features (X) and target variable (y)

X = data_encoded

y = data['mpg']

# Initialize models

models = {

    'Linear Regression': LinearRegression(),

    'Ridge Regression': Ridge(),

    'Lasso Regression': Lasso(),

    'ElasticNetCV': ElasticNetCV(),

    'Decision Tree Regressor': DecisionTreeRegressor(),

    'Random Forest Regressor': RandomForestRegressor(),

    'Gradient Boosting Regressor': GradientBoostingRegressor(),

    'AdaBoost Regressor': AdaBoostRegressor(),

    'SVR': SVR(),

    'K-Nearest Neighbors Regressor': KNeighborsRegressor(),

    'Neural Network Regressor': MLPRegressor(),

    'Elastic Net': ElasticNet(),

    'Bayesian Ridge Regression': BayesianRidge(),

    'Huber Regressor': HuberRegressor(),

    'Gaussian Process Regressor': GaussianProcessRegressor(),

    'CatBoost Regressor': CatBoostRegressor(),

    'XGBoost Regressor': XGBRegressor()

}

# Perform cross-validation for each model

results = {}

for name, model in models.items():

    cv = KFold(n_splits=5, shuffle=True, random_state=42)  # 5-fold cross-validation

    try:

        scores = cross_val_score(model, X, y, cv=cv, scoring='r2')

        results[name] = scores

    except Exception as e:

        print(f"An error occurred while fitting {name}: {e}")

# Display results

for name, scores in results.items():

    print(f'{name}:')

    print(f'Mean R-squared: {scores.mean()}')

    print(f'Standard Deviation: {scores.std()}')

    print('------------------------------------------')
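To see what `cross_val_score` is doing behind the scenes, the same 5-fold procedure can be written out by hand. This is only a sketch on made-up data (the random features and coefficients are assumptions), but the loop is the general idea: train on four folds, score on the fifth, and repeat until every fold has been the test set once.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.model_selection import KFold

# Made-up data: 100 rows, 3 features, target is a noisy linear combination
rng = np.random.default_rng(42)
X_demo = rng.normal(size=(100, 3))
y_demo = X_demo @ np.array([2.0, -1.0, 0.5]) + rng.normal(scale=0.1, size=100)

kfold = KFold(n_splits=5, shuffle=True, random_state=42)
fold_scores = []

for fold, (train_idx, test_idx) in enumerate(kfold.split(X_demo), start=1):
    # Fit a fresh model on the training folds only
    fold_model = LinearRegression().fit(X_demo[train_idx], y_demo[train_idx])
    # Score it on the held-out fold
    score = r2_score(y_demo[test_idx], fold_model.predict(X_demo[test_idx]))
    fold_scores.append(score)
    print(f"Fold {fold}: train={len(train_idx)} test={len(test_idx)} R2={score:.3f}")

print(f"Mean R2: {np.mean(fold_scores):.3f}")
```

Each row lands in the test fold exactly once, so the mean score uses all of the data without ever scoring a model on rows it was trained on.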

UNIT AND INTEGRATION TESTING

This phase helps us detect errors or inconsistencies early in the development process, ensuring the reliability, stability and effectiveness of the deployed model. Here is a sample code (basic) along with output screenshots.

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.impute import SimpleImputer

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_squared_error

import unittest

class TestDataPreprocessing(unittest.TestCase):

    def setUp(self):

        # Load the dataset

        self.data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

    def test_missing_values_handling(self):

        # Replace non-numeric values '?' in the 'horsepower' column with NaN

        self.data['horsepower'] = pd.to_numeric(self.data['horsepower'], errors='coerce')

        # Impute missing values in the 'horsepower' column with the mean

        mean_horsepower = self.data['horsepower'].mean()

        self.data['horsepower'] = self.data['horsepower'].fillna(mean_horsepower)

        # Ensure no missing values remain in the dataset after preprocessing

        self.assertFalse(self.data.isnull().values.any())

    def test_remove_name_column(self):

        # Drop the 'name' column

        self.data = self.data.drop(columns=['name'])

        # Ensure the 'name' column is removed from the dataset

        self.assertNotIn('name', self.data.columns)

class TestModel(unittest.TestCase):

    def setUp(self):

        # Load the dataset

        self.data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

        # Remove the 'name' column

        self.data = self.data.drop(columns=['name'])

        # Convert the 'horsepower' column to numeric; entries such as '?' become NaN

        self.data['horsepower'] = pd.to_numeric(self.data['horsepower'], errors='coerce')

        # Impute missing values in the 'horsepower' column with the mean

        mean_horsepower = self.data['horsepower'].mean()

        self.data['horsepower'] = self.data['horsepower'].fillna(mean_horsepower)

        # Split the dataset into features (X) and target variable (y)

        X = self.data.drop(columns=['mpg'])

        y = self.data['mpg']

        # Split the data into training and testing sets

        self.X_train, self.X_test, self.y_train, self.y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    def test_linear_regression_model(self):

        # Initialize and train the linear regression model

        model = LinearRegression()

        model.fit(self.X_train, self.y_train)

        # Make predictions

        y_pred = model.predict(self.X_test)

        # Evaluate the model

        mse = mean_squared_error(self.y_test, y_pred)

        self.assertLessEqual(mse, 20.0)  # Ensure MSE is less than or equal to 20

if __name__ == '__main__':

    unittest.main()

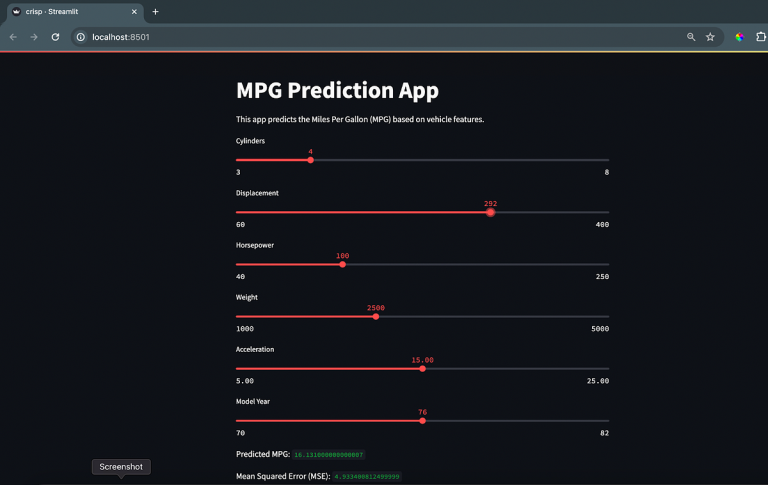
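The tests above read the CSV from disk, so they only run on a machine that has the file. One possible way to make a unit test independent of the file is to move the cleaning step into a small function and feed it a tiny hand-made DataFrame. In the sketch below, `clean_horsepower` is a hypothetical helper written for this example (it is not part of the code above), and the three-row frame is made up.

```python
import unittest

import pandas as pd


def clean_horsepower(frame):
    """Hypothetical helper: coerce 'horsepower' to numeric and mean-impute."""
    cleaned = frame.copy()
    cleaned['horsepower'] = pd.to_numeric(cleaned['horsepower'], errors='coerce')
    cleaned['horsepower'] = cleaned['horsepower'].fillna(cleaned['horsepower'].mean())
    return cleaned


class TestCleanHorsepower(unittest.TestCase):
    def setUp(self):
        # Made-up rows; the '?' mimics the missing-value marker in the dataset
        self.frame = pd.DataFrame({
            'mpg': [18.0, 25.0, 30.0],
            'horsepower': ['130', '?', '70'],
        })

    def test_question_mark_is_imputed_with_mean(self):
        cleaned = clean_horsepower(self.frame)
        self.assertFalse(cleaned['horsepower'].isnull().any())
        # Mean of the two known values, 130 and 70
        self.assertAlmostEqual(cleaned.loc[1, 'horsepower'], 100.0)

    def test_input_frame_is_not_modified(self):
        clean_horsepower(self.frame)
        self.assertEqual(self.frame.loc[1, 'horsepower'], '?')


# Run the tests without exiting the interpreter (handy inside a notebook)
suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestCleanHorsepower)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

Because the input is built inside `setUp`, the test checks the logic itself rather than whatever happens to be in the file.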

MODEL DEPLOYMENT

Model deployment enables rapid prototyping, user-friendly visualisation and integration of models into real-world applications, making it highly useful for testing and deploying machine learning solutions to a wider audience with minimal effort.

A simple deployment solution using Python's Streamlit is provided here. It lets users interact with the regression model we just came up with! (You can also choose to save the model and import it in your deployment code.)

import streamlit as st

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.impute import SimpleImputer

from sklearn.preprocessing import StandardScaler

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_squared_error

# Load the dataset

@st.cache_data

def load_data():

    data = pd.read_csv('/Users/swetha/Desktop/crispmllab/mpg.csv')

    data = data.drop(columns=['name'])  # Drop the 'name' column

    return data

data = load_data()

# Convert string columns to numeric

numeric_columns = ['horsepower', 'weight', 'acceleration']  # Add any other columns that need conversion

data[numeric_columns] = data[numeric_columns].apply(pd.to_numeric, errors='coerce')

# Handle missing values

data = data.fillna(data.mean(numeric_only=True))  # Replace missing values with column means

# Continue with your preprocessing and modeling steps

X = data.drop(columns=['mpg', 'origin'])  # Remove 'origin' from input features

y = data['mpg']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train the model

imputer = SimpleImputer(strategy='mean')

X_train_imputed = imputer.fit_transform(X_train)

X_test_imputed = imputer.transform(X_test)

scaler = StandardScaler()

X_train_scaled = scaler.fit_transform(X_train_imputed)

X_test_scaled = scaler.transform(X_test_imputed)

model = RandomForestRegressor(n_estimators=100, random_state=42)  # Use Random Forest Regressor

model.fit(X_train_scaled, y_train)

# Streamlit UI

st.title('MPG Prediction App')

st.write('This app predicts the Miles Per Gallon (MPG) based on vehicle features.')

# User input for features

cylinders = st.slider('Cylinders', min_value=3, max_value=8, value=4)

displacement = st.slider('Displacement', min_value=60, max_value=400, value=200)

horsepower = st.slider('Horsepower', min_value=40, max_value=250, value=100)

weight = st.slider('Weight', min_value=1000, max_value=5000, value=2500)

acceleration = st.slider('Acceleration', min_value=5.0, max_value=25.0, value=15.0)

model_year = st.slider('Model Year', min_value=70, max_value=82, value=76)

# Make prediction (apply the same imputation and scaling used during training)

user_input = pd.DataFrame([[cylinders, displacement, horsepower, weight, acceleration, model_year]], columns=X.columns)

user_input_scaled = scaler.transform(imputer.transform(user_input))

prediction = model.predict(user_input_scaled)

st.write('Predicted MPG:', prediction[0])

# Calculate Mean Squared Error (MSE) for evaluation

y_pred = model.predict(X_test_scaled)

mse = mean_squared_error(y_test, y_pred)

st.write('Mean Squared Error (MSE):', mse)

In your terminal, navigate to the directory with your Python code. Run the given command to launch your website.

streamlit run filename.py
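The deployment section mentions that you can save the model and import it in your deployment code instead of retraining on every run. A minimal sketch of that idea with `joblib` is shown here. The synthetic data, the small pipeline and the filename `mpg_model_demo.joblib` are assumptions for illustration; in practice you would save the model you actually trained on the mpg dataset.

```python
import os
import tempfile

import joblib
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the mpg features (assumption: 6 numeric columns)
X_demo, y_demo = make_regression(n_samples=100, n_features=6, noise=5.0, random_state=42)

# Bundle scaling and the regressor so both are saved together
trained = Pipeline([
    ('scale', StandardScaler()),
    ('rf', RandomForestRegressor(n_estimators=20, random_state=42)),
])
trained.fit(X_demo, y_demo)

# Save the fitted pipeline to disk (hypothetical filename in the temp folder)
model_path = os.path.join(tempfile.gettempdir(), 'mpg_model_demo.joblib')
joblib.dump(trained, model_path)

# In the deployment script, load it back instead of training again
loaded = joblib.load(model_path)
same_predictions = (loaded.predict(X_demo[:5]) == trained.predict(X_demo[:5])).all()
print("Loaded model reproduces predictions:", same_predictions)
```

Saving the scaler and model as one pipeline means the app cannot forget to scale user input before predicting.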

Yayy! Congratulations on your first practical lesson on Regression! What next?

Try developing CRISP-DM models for classification and clustering.

Check out my blogs for CLASSIFICATION and CLUSTERING.