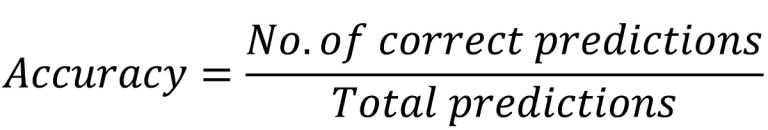

The simplest way to assess the performance of a machine learning model is to calculate its Accuracy, which is the ratio of the number of correct predictions to the total number of predictions made.
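Written as a formula, this ratio is:

$$
\text{Accuracy} = \frac{\text{Number of correct predictions}}{\text{Total number of predictions}}
$$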

Below is an example of a cat classifier:

Accuracy does not give a fair estimate of a model’s performance in all scenarios, especially in the case of skewed datasets. A skewed dataset contains classes with an uneven number of instances.

Let’s take the example of a rare disease classifier whose test set is highly skewed:

The test set contains 900 “NEGATIVE” class examples, which represent the absence of the disease, and 100 “POSITIVE” class instances, which represent the presence of the disease.

Suppose the classifier always predicts the “NEGATIVE” class regardless of the input, which means that the classifier has no intelligence at all. Let’s see if Accuracy points out that this model is good for nothing:

For the reason that classifier at all times predicts the “NEGATIVE” class, it finally ends up making 900 appropriate predictions out of the overall 1000, which supplies an Accuracy of 90%. That is an occasion the place Accuracy misrepresents a awful algorithm as glorious.

The performance metrics that are robust to skewed test sets are Precision and Recall. They focus on different aspects and, together, offer a thorough estimate of a model’s performance.

Precision focuses on the correctness of a model’s predictions for a particular class, say “XYZ”. In other words, if a model predicts class “XYZ” a certain number of times, Precision tells what proportion of those predictions are correct. It is calculated as the number of correct “XYZ” predictions divided by the total number of “XYZ” predictions.
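In symbols, using the conventional confusion-matrix shorthand (assumed notation, not used elsewhere in this article), let TP (true positives) be the number of “XYZ” predictions that were correct and FP (false positives) the number that were wrong. Then:

$$
\text{Precision} = \frac{\text{Correct predictions of class XYZ}}{\text{Total predictions of class XYZ}} = \frac{TP}{TP + FP}
$$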

Precision values range from 0 to 1, with a value of 1 signifying that every time the model predicted a particular class, the prediction was correct.

Recall, on the other hand, measures the ability of a model to correctly classify the instances of a particular class, say “ABC”, in the test set. In other words, if the test set contains a certain number of instances of class “ABC”, Recall tells what proportion of those instances are correctly classified by the model. It is calculated as the number of correctly classified “ABC” instances divided by the total number of “ABC” instances in the test set.
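In symbols, again using conventional shorthand that is assumed here rather than taken from the article, let TP (true positives) be the number of “ABC” instances the model labeled correctly and FN (false negatives) the number of “ABC” instances it missed. Then:

$$
\text{Recall} = \frac{\text{Correctly classified instances of class ABC}}{\text{Total instances of class ABC}} = \frac{TP}{TP + FN}
$$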

Recall values range from 0 to 1, with a value of 1 indicating that the model correctly identified all the instances of a particular class.

The model shown above (Car Classifier) has the highest possible Recall (100%) but a lower Precision (50%). This means that the model correctly classifies all the instances of class “CAR” in the test set; however, when it predicts class “CAR”, the prediction is wrong half of the time.

At this point, I’ll make a minor change to the supposition to avoid an undefined Precision value, and that is:

The classifier always predicts the “NEGATIVE” class, except for a single “POSITIVE” instance, which it classifies correctly.

For the reason that classifier solely predicted the “POSITIVE” class as soon as and it was appropriate, the Precision comes out to be 100%. Nonetheless, because the classifier is poor at figuring out “POSITIVE” class situations, the Recall could be very low (1%) and this exposes the true efficiency of the Uncommon Illness Classifier.

- Models that have high Precision and low Recall are correct most of the time when they predict a particular class; however, they miss or misclassify many instances of that class.

- Conversely, models that have high Recall and low Precision pick up, or correctly identify, most of the instances of a particular class, but they also assign to that class many instances that do not actually belong to it.

Ideally, we want both Precision and Recall to be as high as possible. But the two parameters oppose each other. To understand their conflicting nature, we first have to examine how high Precision and high Recall are achieved:

We know that Precision is high when most of the predictions for a particular class are correct. To achieve this, you have to increase the threshold, or confidence level, at which you predict that particular class:

Logistic Regression Example:

Suppose you predict:

- Class 1 for g(z) > 0.5

- Class 0 for g(z) ≤ 0.5

If you want high Precision for Class 1, you might have to increase the threshold from 0.5 to, maybe, 0.9, i.e.:

- Class 1 for g(z) > 0.9 ⇒ High Precision

This makes the classifier predict class 1 only when it is dead sure, which increases the likelihood that the predictions for class 1 are correct, thereby increasing the Precision.

However, this will result in low Recall!

This is because high Recall is attained when most of the instances of a particular class are correctly identified, but with a high threshold the model will miss or misclassify many instances of class 1 that have a relatively low confidence value, that is, a low output of the sigmoid function. To catch such instances, you might have to decrease the threshold value, i.e.:

- Class 1 for g(z) > 0.3 ⇒ High Recall

This will increase the Recall but lower the Precision. That is how these two parameters conflict with each other.
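The effect of moving the threshold can be seen on a toy example. The sketch below is purely illustrative: the ten `scores` (standing in for sigmoid outputs g(z)) and their `labels` are made-up values chosen for the demonstration, and `precision_recall_at` is a hypothetical helper, not part of any library.

```python
# Hypothetical sigmoid outputs g(z) and true classes for ten examples.
scores = [0.95, 0.92, 0.85, 0.70, 0.60, 0.45, 0.40, 0.35, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]


def precision_recall_at(threshold, scores, labels):
    """Precision and recall for class 1 when predicting 1 for g(z) > threshold.

    Assumes at least one score exceeds the threshold and at least one
    label is 1, so neither denominator is zero.
    """
    preds = [1 if s > threshold else 0 for s in scores]
    tp = sum(p == 1 and y == 1 for p, y in zip(preds, labels))
    fp = sum(p == 1 and y == 0 for p, y in zip(preds, labels))
    fn = sum(p == 0 and y == 1 for p, y in zip(preds, labels))
    return tp / (tp + fp), tp / (tp + fn)


for threshold in (0.9, 0.5, 0.3):
    p, r = precision_recall_at(threshold, scores, labels)
    print(f"threshold={threshold}: precision={p:.3f}, recall={r:.3f}")

# threshold=0.9: precision=1.000, recall=0.400
# threshold=0.5: precision=0.800, recall=0.800
# threshold=0.3: precision=0.625, recall=1.000
```

On this toy data, lowering the threshold from 0.9 to 0.3 raises Recall from 0.4 to 1.0 while Precision falls from 1.0 to 0.625.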

As we have discussed, ideally we want both Precision and Recall to be high, yet these two parameters are opposing in nature. This raises the question: how should we decide between the two?

This depends entirely on the application. For instance:

- A classifier for a life-threatening disease may require high Recall. This is because we don’t want to risk the life of a patient by missing them. So we’ll consider treatment even if we have low confidence.

- A classifier for a benign disease with an expensive or risky treatment may require high Precision. This is because we only want to go for the treatment if we are very sure. Also, we can afford to miss a patient because the disease is benign.

- Yet other applications may require a careful balance between Precision and Recall.

The F1 score combines Precision and Recall to give an idea of the overall performance of a model.

It is the harmonic mean of Precision and Recall, which gives more weight to smaller values. The advantage of this is that if either Precision or Recall is low, it pulls the F1 score down, and we know that something is off about the model.
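For reference, the harmonic mean of the two quantities works out to:

$$
F_1 = \frac{2}{\dfrac{1}{\text{Precision}} + \dfrac{1}{\text{Recall}}} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
$$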

The F1 score also ranges from 0 to 1, with 1 being the best score. To approach it, we need both Precision and Recall to be high.

For instance, let’s take the Rare Disease Classifier. It has:

- Precision = 1 & Recall = 0.01

Notice how the small value of Recall pulls the F1 score down even though the Precision is 100%.
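To put a number on this, here is a minimal sketch that applies the harmonic-mean definition of the F1 score to the Rare Disease Classifier. The `f1_score` helper is written here for illustration (it returns 0 when both inputs are 0, a common convention assumed for this sketch), and the arithmetic mean is printed only for comparison.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (illustrative helper)."""
    if precision + recall == 0:
        return 0.0  # assumed convention when both metrics are zero
    return 2 * precision * recall / (precision + recall)


precision, recall = 1.0, 0.01  # Rare Disease Classifier

print(round(f1_score(precision, recall), 4))  # 0.0198
print(round((precision + recall) / 2, 3))     # 0.505 (arithmetic mean)
```

A plain average would report about 0.5 and hide the problem, whereas the F1 score of roughly 0.02 makes the poor Recall impossible to overlook.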

In this article, we discussed several performance metrics for machine learning models along with their shortcomings and caveats. These include Accuracy and its misleading nature in the case of skewed test sets. Further, we demonstrated Precision and Recall along with their opposing nature and how one can trade off between the two in a sensible way. In the end, we introduced the F1 Score, which cleverly combines Precision and Recall to give a single figure representing the performance of the model.