Money laundering is an age-old problem. Over time, with growing security and heightened alertness at banks, money laundering has also become more complex and harder to recognize. One of the most common scenarios is when the money is distributed like a web or loop across various financial institutions, in different currencies or in unequal amounts. Detection becomes difficult because financial transaction data is highly private and cannot be shared across different parties to identify a web of money being laundered.

In this article we discuss Graph Neural Networks in detail and show how they can be applied to recognize money laundering patterns in a financial ecosystem. Pattern recognition has improved over time, both in precision and in the complexity of the patterns that can be identified. Moreover, there is not one but many pattern recognition methodologies available today, each suited to different problems. Here, we focus specifically on Graph Neural Networks and their types, and on why we chose them. We also briefly discuss what our Anti-Money Laundering dataset holds, which tools and technologies were used to reach the desired results, and everything in between!

Let us dig into the dataset used in this article: the synthetically generated 'Anti-Money Laundering' dataset by IBM, which was generated using AMLSim (a simulator). The primary reason for generating the dataset synthetically rather than mining it is the restricted nature of transactional data from financial institutions.

Two groups of datasets were generated, HI and LI, where HI refers to a high illicit ratio in the dataset and LI refers to a low illicit ratio. Each group was divided into three sizes: small, medium and large. The sizes indicate the number of recorded transactions, and the datasets are completely independent of each other. Due to limited computational power, we used the dataset with the high illicit ratio and fewer transactions than the medium and large ones. These synthetically generated datasets give us the flexibility to identify laundering patterns not only around one or two financial institutions, but across the whole web of the financial ecosystem.

(Reference source: Toyotaro Suzumura and Hiroki Kanezashi. 2021. Anti-Money Laundering Datasets.)

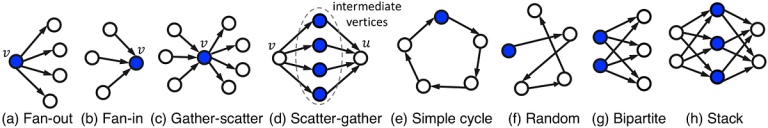

There are eight money laundering patterns described briefly by Toyotaro Suzumura and Hiroki Kanezashi.

In the figure, 'v' denotes a vertex and 'u' denotes the endpoints of its edges; the arrows show the direction of each transaction. Patterns such as fan-out, fan-in, gather-scatter, scatter-gather, simple cycle, bipartite and stack each follow a specific order of flow. There is also a 'random' pattern, which follows no fixed order. A random pattern can look similar to a simple cycle, but the funds do not return to the original account to complete the cycle.

Representing the data as a graph helps identify the primary keys and use them to build connectivity among the various entities. Here, we describe our graph in terms of nodes (vertices), edges, node attributes and edge attributes. In our case, we treat the source and destination accounts as nodes and the transactions as edges. In financial crimes, criminals often send out funds in one or several batches to one or several accounts. There are cases where the funds come back to the same account after a complete flow, which is one strong indicator of money laundering. Because these transactions follow multiple patterns and flows, directed subgraphs become an essential aid.
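As a rough illustration of this graph view, the sketch below builds a directed adjacency list from a handful of made-up transactions and checks whether funds leaving an account can flow back to it. The account names and the helpers `build_graph` and `returns_to_origin` are hypothetical, and the check ignores amounts and timestamps, which a real analysis would need to take into account.

```python
from collections import defaultdict

# Hypothetical transactions: (source account, destination account)
transactions = [
    ("A", "B"), ("B", "C"), ("C", "A"),  # simple cycle A -> B -> C -> A
    ("A", "D"), ("D", "E"),              # chain that never returns to A
]

def build_graph(edges):
    """Directed adjacency list: account -> list of accounts it pays."""
    graph = defaultdict(list)
    for src, dst in edges:
        graph[src].append(dst)
    return graph

def returns_to_origin(graph, origin):
    """True if some directed path starting at `origin` leads back to it."""
    stack = list(graph.get(origin, []))
    seen = set()
    while stack:
        node = stack.pop()
        if node == origin:
            return True
        if node in seen:
            continue
        seen.add(node)
        stack.extend(graph.get(node, []))
    return False

graph = build_graph(transactions)
print(returns_to_origin(graph, "A"))  # True
print(returns_to_origin(graph, "D"))  # False
```

A depth-first search like this only flags that a loop exists; it says nothing about whether the loop is illicit, which is why the article relies on a learned model rather than a fixed rule.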

Graph Neural Networks and Their Types

Graph neural networks (GNNs) are a type of neural network model that leverages graph structures to process and represent data. In GNNs, data is organized into nodes and edges, where nodes represent entities (such as objects, elements, or concepts) and edges represent relationships or connections between these entities. There are various architectures and approaches within the GNN family, including graph convolutional networks (GCNs), graph attention networks (GATs), graph recurrent networks (GRNs), and graph autoencoders. These architectures typically learn representations of nodes and graphs by aggregating information from neighboring nodes or edges in the graph.

Message Passing Mechanism: The message passing mechanism is like neighbors sharing information in a neighborhood. In a graph (such as a social network or a transaction network), each node sends messages to and receives messages from its neighboring nodes. These messages contain information about the node's features and its relationships with other nodes. Through this process, nodes exchange information, update their own state, and collectively learn from the network's structure and connections. In effect, nodes talk to each other to understand and adapt to their surroundings, which helps the whole network make better decisions or predictions.
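To make the idea concrete, here is a minimal sketch of a single message passing round on a tiny directed graph. It assumes one scalar feature per node and plain mean aggregation, which is the simplest possible choice; the edges, feature values and the function `message_passing_round` are invented for illustration.

```python
# Hypothetical directed edges (sender -> receiver) and one scalar feature per node
edges = [(0, 1), (0, 2), (1, 2), (2, 0)]
features = {0: 1.0, 1: 2.0, 2: 4.0}

def message_passing_round(features, edges):
    """One round: each node averages its own feature with those of its senders."""
    inbox = {node: [value] for node, value in features.items()}  # start with self
    for src, dst in edges:
        inbox[dst].append(features[src])  # message travelling from src to dst
    return {node: sum(msgs) / len(msgs) for node, msgs in inbox.items()}

updated = message_passing_round(features, edges)
print(updated[0], updated[1])  # 2.5 1.5
```

Running the function again on `updated` would spread information one hop further. Attention-based models replace the plain average with weights learned per neighbor.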

Graph Attention Networks (GATs) with message passing were chosen over other GNN architectures, such as Graph Convolutional Networks (GCNs), for our pattern recognition tasks. Our decision was based on several factors intrinsic to our data:

Firstly, GATs offer considerable flexibility in data representation, which is particularly useful for datasets with intricate relationships and dependencies that are naturally represented as graphs. Our data, which contains complex interactions and is highly imbalanced, fits this capability well, as GATs are well suited to capturing nuanced patterns within graph structures.

Moreover, GATs with message passing incorporate both local and broader context by aggregating information from neighboring nodes. This capability is crucial for our pattern detection tasks, because it allows our model to understand the context and relationships between the various entities in the transaction graph, leading to more accurate predictions.

Furthermore, scalability to large graphs is essential for our analyses, as our dataset involves millions of transactions interconnected across various currencies. GAT architectures can handle such large-scale graphs, so our model can process the vast amounts of transactional data and extract meaningful insights from them.

Lastly, the transferability and generalization abilities of GATs are valuable for our tasks, especially given the limited labeled data available. The adaptability, information aggregation capabilities, scalability, and transferability of GATs with message passing make them a good fit for our money laundering detection task.

We examined and explored the data to understand whether feature engineering techniques, such as transforming existing features or creating new ones, would improve model performance or capture relevant information more effectively.

Data Preprocessing:

Data preprocessing involves several essential steps to prepare the dataset for analysis and modeling. The snippet below shows a few of the essential preprocessing steps that we carried out. Label encoding transforms categorical variables into numerical representations, enabling machine learning models to work with categorical data. Further steps, such as timestamp normalization, creating unique identifiers for accounts, and organizing the data into specific dataframes (e.g., receiving_df and paying_df), facilitate downstream analysis and modeling tasks.

    import pandas as pd
    from sklearn import preprocessing

    def df_label_encoder(df, columns):
        # Encode each categorical column as integer codes
        le = preprocessing.LabelEncoder()
        for i in columns:
            df[i] = le.fit_transform(df[i].astype(str))
        return df

    def preprocess(df):
        df = df_label_encoder(df, ['Payment Format', 'Payment Currency', 'Receiving Currency'])
        # Convert timestamps to integer nanoseconds, then min-max scale to [0, 1]
        df['Timestamp'] = pd.to_datetime(df['Timestamp'])
        df['Timestamp'] = df['Timestamp'].apply(lambda x: x.value)
        df['Timestamp'] = (df['Timestamp'] - df['Timestamp'].min()) / (df['Timestamp'].max() - df['Timestamp'].min())
        # Prefix account numbers with the bank code to get unique account IDs
        df['Account'] = df['From Bank'].astype(str) + '_' + df['Account']
        df['Account.1'] = df['To Bank'].astype(str) + '_' + df['Account.1']
        df = df.sort_values(by=['Account'])
        # Separate views of the receiving side and the paying side
        receiving_df = df[['Account.1', 'Amount Received', 'Receiving Currency']]
        paying_df = df[['Account', 'Amount Paid', 'Payment Currency']]
        receiving_df = receiving_df.rename({'Account.1': 'Account'}, axis=1)
        currency_ls = sorted(df['Receiving Currency'].unique())
        return df, receiving_df, paying_df, currency_ls

What's going on with the currencies in our data?

In our dataset, transactions involved multiple currencies, often within the same transactional flow, meaning that a single transaction could include an exchange between different currencies. To facilitate analysis and ensure consistency across the dataset, we standardized the data by converting all transactions to their equivalent values in USD (United States Dollars).

To carry out this standardization, we created a dictionary that serves as a reference, mapping each currency to its exchange rate with respect to USD. Using this dictionary, we could convert the value of each transaction from its original currency to its USD equivalent.

For instance, if a transaction involved the exchange of Euros (EUR) for Japanese Yen (JPY), we would look up the USD exchange rate for each currency in the dictionary. Multiplying the amount paid by the EUR rate and the amount received by the JPY rate gives both sides of the transaction in USD.

By standardizing the data in this way, we achieved uniformity and comparability across transactions, regardless of the currencies involved. This not only made analysis and interpretation easier but also enabled statistical analyses and modeling tasks that require consistent units of measurement. Converting everything to USD with a fixed rate per currency also means that exchange rate fluctuations do not enter the analysis.
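The dictionary lookup described above can be sketched as follows. The rates are illustrative placeholders, not the values used in the article, and `usd_rates` and `to_usd` are hypothetical names.

```python
# Hypothetical exchange rates: 1 unit of the currency = rate USD (illustrative only)
usd_rates = {"USD": 1.0, "EUR": 1.10, "JPY": 0.007}

def to_usd(amount, currency, rates=usd_rates):
    """Convert an amount in `currency` to its USD equivalent."""
    if currency not in rates:
        raise KeyError(f"No USD rate for currency {currency!r}")
    return amount * rates[currency]

# A transaction paid in EUR and received in JPY: convert each side separately
paid_usd = to_usd(100.0, "EUR")
received_usd = to_usd(15000.0, "JPY")
print(round(paid_usd, 2), round(received_usd, 2))
```

Raising an error for an unknown currency, rather than silently defaulting to 1.0, keeps a missing rate from quietly distorting the converted amounts.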

Data Exploration:

Data exploration involves analyzing the processed dataframe to gain insight into its structure, distribution, and content. Summary statistics and exploratory data analysis (EDA) are commonly used to understand the data better. This exploration phase helps identify potential issues such as missing values, outliers, or data inconsistencies that may require further processing or cleaning.

Further, our goal was to extract all unique accounts from both payers and receivers to form the nodes of our graph. Each node represents a unique account and includes key attributes such as the account ID, the bank code, and a label denoting potential involvement in illicit activities, named 'Is Laundering'.

To identify suspicious accounts, we took a deliberately broad approach. Both payers and receivers associated with illicit transactions were treated as potentially suspicious entities. Consequently, we assigned an 'Is Laundering' label of 1 to both the payer and the receiver involved in such transactions. This labeling strategy allowed us to flag accounts that may warrant further scrutiny because of their involvement in suspicious financial activities.
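The labeling rule above could look roughly like this in pandas. The column names follow the preprocessing snippet, while the toy rows and the `nodes` dataframe are invented for illustration and are not taken from the article's code.

```python
import pandas as pd

# Toy transactions using the article's column names; values are made up
df = pd.DataFrame({
    "Account": ["1_A", "1_A", "2_B", "3_C"],
    "Account.1": ["2_B", "3_C", "3_C", "4_D"],
    "Is Laundering": [0, 1, 0, 0],
})

# Every distinct account, whether payer or receiver, becomes a node
accounts = pd.concat([df["Account"], df["Account.1"]]).drop_duplicates()
nodes = pd.DataFrame({"Account": accounts.values, "Is Laundering": 0})

# Flag both ends of every illicit transaction
illicit = df[df["Is Laundering"] == 1]
suspicious = set(illicit["Account"]) | set(illicit["Account.1"])
nodes.loc[nodes["Account"].isin(suspicious), "Is Laundering"] = 1
print(nodes)
```

Here only the transaction from `1_A` to `3_C` is illicit, so those two accounts are flagged while `2_B` and `4_D` stay at 0.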

Node Features:

In graph-based data, nodes represent entities (e.g., accounts), and node features capture relevant information associated with each node. For example, using the mean paid and received amounts per currency type as node features enriches the representation of each account in the graph, providing more context for downstream modeling tasks.
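One way to compute such per-account averages is a pandas pivot table, sketched below for the paying side. The toy `paying_df` mirrors the columns returned by `preprocess`, and the `avg_paid_` column prefix is an assumption made for readability.

```python
import pandas as pd

# Toy paying-side data; currencies are already label-encoded as integers
paying_df = pd.DataFrame({
    "Account": ["1_A", "1_A", "2_B"],
    "Amount Paid": [100.0, 300.0, 50.0],
    "Payment Currency": [0, 0, 1],
})

# Mean amount paid per account and currency, one column per currency code
paid_features = paying_df.pivot_table(
    index="Account",
    columns="Payment Currency",
    values="Amount Paid",
    aggfunc="mean",
    fill_value=0.0,
).add_prefix("avg_paid_")
print(paid_features)
```

The same pattern applied to the receiving side yields a second block of columns, and joining the two on the account ID gives one feature row per node.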

Edge Features:

Edges in a graph represent relationships or interactions between nodes, such as transactions between accounts. Edge features describe attributes associated with each edge, such as the transaction timestamp, the amount transferred, and the currency types involved. Extracting and encoding these edge features enables graph-based models to capture important transaction characteristics and patterns within the data.
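The sketch below shows one plausible way to turn a transaction table into an edge list and an edge feature matrix. The choice of columns and the integer `node_id` mapping are assumptions for illustration; the two-row source and destination layout matches the COO format commonly used by graph libraries.

```python
import pandas as pd

# Toy transactions with the article's column names; values are made up
df = pd.DataFrame({
    "Account": ["1_A", "1_A", "2_B"],
    "Account.1": ["2_B", "3_C", "3_C"],
    "Timestamp": [0.0, 0.5, 1.0],
    "Amount Paid": [100.0, 300.0, 50.0],
    "Payment Currency": [0, 0, 1],
})

# Map each account string to an integer node id
all_accounts = pd.concat([df["Account"], df["Account.1"]]).unique()
node_id = {acc: i for i, acc in enumerate(all_accounts)}

# Edge index in COO form: row 0 = source ids, row 1 = destination ids
edge_index = [
    df["Account"].map(node_id).tolist(),
    df["Account.1"].map(node_id).tolist(),
]

# One feature row per edge, in the same order as the edge index
edge_attr = df[["Timestamp", "Amount Paid", "Payment Currency"]].to_numpy()
print(edge_index)  # [[0, 0, 1], [1, 2, 2]]
```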

Through thorough data visualization, preprocessing, and feature engineering, we prepared our dataset for modeling, ensuring that relevant information is captured and represented appropriately in the graph-based framework. These steps laid the foundation for building an accurate and robust predictive model for detecting money laundering patterns in graph-structured data.

In this section, we present the final implementations of model.py, train.py, and dataset.py, providing an overview of each component's functionality and architecture.

Model Architecture

Our model architecture is built around Graph Attention Networks (GAT), a framework designed to capture the complex relational information inherent in graph-structured data. The GAT-based model we use comprises two GATConv layers, each designed to distill important information from the input graph.

GATConv Layers:

- First GATConv Layer: The first layer applies graph attention to aggregate information from neighboring nodes, allowing each node to weigh its neighbors' contributions adaptively. Dropout regularization is included to prevent overfitting by randomly dropping units during training.

- Second GATConv Layer: Building on the output of the first layer, the second layer further refines the node representations, so that each node's representation also reflects its two-hop neighborhood. We apply dropout regularization once again to improve robustness and prevent overfitting.

Linear Layer and Sigmoid Activation:

- Following the GATConv layers, a linear layer processes the refined node representations to produce classification probabilities. The sigmoid activation function squashes the output into the range [0, 1], which suits binary classification.
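To show what the attention step computes, here is a from-scratch NumPy sketch of the forward pass: two single-head graph attention layers followed by a linear layer and a sigmoid. It is not the article's model.py and does not use PyTorch Geometric. The weights are random and untrained, dropout and biases are omitted, and the ReLU between the layers, the layer sizes and the name `gat_layer` are all assumptions.

```python
import numpy as np

def gat_layer(x, edges, W, a, negative_slope=0.2):
    """Single-head graph attention. x: (N, F_in), W: (F_in, F_out), a: (2*F_out,)."""
    h = x @ W                                   # project node features
    out = np.zeros_like(h)
    for i in range(x.shape[0]):
        # nodes that send to node i, plus a self-loop
        nbrs = [src for src, dst in edges if dst == i] + [i]
        scores = np.array([np.concatenate([h[i], h[j]]) @ a for j in nbrs])
        scores = np.where(scores > 0, scores, negative_slope * scores)  # LeakyReLU
        alpha = np.exp(scores - scores.max())
        alpha /= alpha.sum()                    # softmax over the neighborhood
        out[i] = sum(w * h[j] for w, j in zip(alpha, nbrs))
    return out

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 3))                     # 4 nodes, 3 input features
edges = [(0, 1), (2, 1), (1, 3), (3, 0)]        # (source, destination) pairs
W1, a1 = rng.normal(size=(3, 8)), rng.normal(size=16)
W2, a2 = rng.normal(size=(8, 4)), rng.normal(size=8)
w_out = rng.normal(size=4)

h1 = np.maximum(gat_layer(x, edges, W1, a1), 0)  # first attention layer + ReLU
h2 = gat_layer(h1, edges, W2, a2)                # second attention layer
prob = 1 / (1 + np.exp(-(h2 @ w_out)))           # linear layer + sigmoid
print(prob.shape)  # (4,)
```

The softmax weights `alpha` are what let a node weigh some neighbors more heavily than others; a library layer such as GATConv additionally learns `W` and `a` by backpropagation and typically uses several attention heads.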

PyG InMemoryDataset: Streamlined Data Processing and Loading

The AMLtoGraph class handles data processing and loading, and is based on the PyTorch Geometric InMemoryDataset interface. This class encapsulates the data preprocessing steps, ensuring that the dataset is cleaned and ready for subsequent model training.

Preprocessing Pipeline:

- Data Loading: The dataset is read from a raw CSV file, which serves as the data source for all subsequent processing steps.

- Feature Extraction: Important features for anti-money laundering detection, such as transaction timestamps, payment formats, and currency information, are extracted and processed.

- Label Encoding: Categorical features are transformed into numerical representations via label encoding so that the model can consume them.

- Data Splitting: The dataset is split into training, validation, and test sets, laying the groundwork for rigorous model evaluation and validation.

Node and Edge Attribute Extraction:

- Aggregate Transaction Amounts: Transaction amounts are aggregated, both for payments made and for payments received, as they represent the flow of transactions and provide financial insight.

- Node Labeling: Each node is labeled according to its potential involvement in illicit activities, which enables supervised learning during model training.

Model Training

In our data preprocessing phase, we divide our dataset into three distinct subsets: training, validation, and test data. The training data comprises 80% of our dataset and serves as the primary set for training our machine learning model. We allocate 10% of the data to the validation set, which aids in hyperparameter tuning and in evaluating the model's performance during training. The remaining 10% is reserved for the test set, which is not seen by the model during training and is used only to assess the model's final performance and generalization ability.
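An 80/10/10 split like the one described can be sketched with a seeded shuffle of indices. The function name `split_indices`, the seed, and the use of plain index lists are assumptions; the article's dataset.py may split differently.

```python
import random

def split_indices(n, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle indices 0..n-1 and cut them into train/validation/test lists."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_train = int(n * train_frac)
    n_val = int(n * val_frac)
    return (
        indices[:n_train],
        indices[n_train:n_train + n_val],
        indices[n_train + n_val:],   # whatever remains becomes the test set
    )

train_idx, val_idx, test_idx = split_indices(1000)
print(len(train_idx), len(val_idx), len(test_idx))  # 800 100 100
```

With a heavily imbalanced label, a purely random split can leave very few positive examples in the smaller sets, so a stratified split is worth considering.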

In the training phase, we set our model to training mode so that layers such as dropout behave as intended. In the forward pass, the input data is fed into the model to obtain predicted outputs. We then calculate the loss using a binary cross-entropy loss function, which quantifies the disparity between the model's predictions and the actual labels in the training data. The gradients of the loss with respect to the model's parameters are then computed using backpropagation. These gradients tell us the direction in which to adjust the parameters to reduce the loss. Using an optimizer, such as stochastic gradient descent (SGD), we update the model's parameters accordingly, nudging them toward values that lead to a lower loss.
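The loop below walks through the same steps (forward pass, binary cross-entropy, gradient, parameter update) on a deliberately tiny stand-in: a logistic regression trained with full-batch gradient descent in NumPy, not the GAT model itself. The data, learning rate and epoch count are arbitrary choices for illustration.

```python
import numpy as np

def bce_loss(p, y, eps=1e-12):
    """Mean binary cross-entropy between probabilities p and labels y."""
    p = np.clip(p, eps, 1 - eps)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

# Toy data: 4 samples, 2 features, binary labels
X = np.array([[0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [0.0, 0.0]])
y = np.array([0.0, 1.0, 1.0, 0.0])
w, b, lr = np.zeros(2), 0.0, 0.5

losses = []
for epoch in range(200):
    p = 1 / (1 + np.exp(-(X @ w + b)))   # forward pass
    losses.append(bce_loss(p, y))        # loss for this epoch
    grad_logits = (p - y) / len(y)       # gradient of the loss w.r.t. the logits
    w -= lr * (X.T @ grad_logits)        # parameter update for the weights
    b -= lr * grad_logits.sum()          # parameter update for the bias

print(losses[0], losses[-1])
```

In a deep learning framework the gradient line is replaced by automatic differentiation and the two update lines by an optimizer step, but the order of operations is the same.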

Following the training phase, we evaluate the model's performance on the test dataset. We use the trained model to generate predictions for the test data. Because the model outputs probabilities, we convert them into binary values using a predetermined threshold. With the binary predictions in hand, we compare them against the ground truth labels from the test dataset to calculate accuracy. Accuracy is the proportion of correct predictions out of the total number of predictions.
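A minimal sketch of this thresholding step follows, with precision and recall computed alongside accuracy. The probabilities, labels and the 0.5 threshold are made up for illustration, and `evaluate` is a hypothetical helper.

```python
def evaluate(probs, labels, threshold=0.5):
    """Threshold probabilities and return (accuracy, precision, recall)."""
    preds = [1 if p >= threshold else 0 for p in probs]
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
    correct = sum(1 for p, y in zip(preds, labels) if p == y)
    accuracy = correct / len(labels)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

# Imbalanced toy example: 8 legitimate accounts, 2 illicit ones
probs = [0.1, 0.2, 0.05, 0.3, 0.4, 0.1, 0.2, 0.1, 0.6, 0.3]
labels = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
print(evaluate(probs, labels))  # (0.9, 1.0, 0.5)
```

In this toy case accuracy is 90% even though half of the illicit accounts are missed, which is why accuracy alone can be misleading on imbalanced data.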

These steps allow us to train a machine learning model, fine-tune its parameters, and then evaluate its performance on unseen data from the test set.

In our approach, we built a basic random forest model as a benchmark to provide a comparative perspective on the performance of our Graph Attention Network (GAT) model. The rationale is to understand the strengths and weaknesses of our GAT model relative to a well-established and widely used machine learning technique like random forests.

The random forest model serves as a reference point, showing how effectively our GAT model addresses the task compared to a traditional ensemble learning method. By evaluating both models on the same dataset and task, we can identify the main differences in their performance metrics, such as accuracy, precision, recall, and F1-score.

Furthermore, comparing the predictions of the random forest model with those of our GAT model allows us to identify instances where the models agree or disagree. Understanding these discrepancies can shed light on the distinct capabilities of each model and highlight scenarios where one model outperforms the other.

Building and analyzing a basic random forest model alongside our GAT model gives us a fuller understanding of our model's performance relative to a traditional baseline. This comparative analysis helped us make informed decisions about model selection, fine-tuning, and optimization, ultimately improving the efficacy and interpretability of our machine learning solution.


Although the random forest model yielded better results than the GAT, it is worth noting that the choice of a random forest was justified by the highly imbalanced nature of the dataset.

While the current implementation achieves satisfactory accuracy in node classification tasks, there is room for further improvement. One avenue for exploration is addressing the imbalance in class distribution within the dataset. Applying balancing techniques during data preprocessing may improve model performance by reducing the influence of the dominant class.

Additionally, we plan to investigate alternative modeling approaches beyond GAT, such as traditional regression or classification models. Exploring a range of model architectures may uncover more effective representations of the underlying data, leading to improved performance in anti-money laundering detection tasks.

- Weber, M., Domeniconi, G., Chen, J., Weidele, D. K. I., Bellei, C., Robinson, T., & Leiserson, C. E. (2019). Anti-money laundering in bitcoin: Experimenting with graph convolutional networks for financial forensics. arXiv preprint arXiv:1908.02591.

- Johannessen, F., & Jullum, M. (2023). Finding Money Launderers Using Heterogeneous Graph Neural Networks. arXiv preprint arXiv:2307.13499.

- Suzumura, T., & Kanezashi, H. (2021). Anti-Money Laundering Datasets.

- Altman, E., Blanuša, J., von Niederhäusern, L., Egressy, B., Anghel, A., & Atasu, K. Realistic Synthetic Financial Transactions for Anti-Money Laundering Models. IBM Watson Research, Yorktown Heights, NY, USA; IBM Research Europe, Zurich, Switzerland; ETH Zurich, Switzerland.