Have you ever had a song stuck in your head but couldn’t remember its title? This common frustration is all too familiar. Fortunately, the model we have developed offers a solution that can put your mind at ease. Given lyrics or phrases, the model uses vector encodings and cosine similarity to identify songs whose lyrics are similar to your search query. Although this technique is often used in recommendation engines, the model can also be likened to a semantic search engine because it takes into account the contextual meaning of the words in your search query relative to the lyrics of numerous songs, thereby improving the accuracy and relevance of the search results.

In the past, information retrieval methods relied on an extractive approach, which involves scanning documents for the presence of specific keywords. However, this method does not account for word order, meaning, or context. As a result, in tasks such as identifying songs from lyrics, its effectiveness is limited when words are missing or do not exactly match those in the song lyrics. This limitation is particularly evident with slang, abbreviations, and misspelled words, all of which are common when lyrics are vaguely remembered. To address it, our approach must encode sentences and song lyrics in a way that captures word meaning and context.

In NLP tasks, sentence (or word) encoding refers to the transformation of text data into n-dimensional vectors suitable for numerical analysis and machine learning computations. With the advent of big data, transformer models have emerged as superior tools for encoding text into vector representations whose elements carry contextual meaning. Transformers are neural network models that use attention mechanisms to process text sequences. Typically, transformers consist of two parts: an encoder for processing text inputs and a decoder for generating predictions based on a specific task.

In this project, we focus on the encoder mechanism since our goal is to encode song lyrics and search queries. Several components make up the encoder block of a transformer, as seen in the figure below:

Of the various components in the encoder, we will briefly discuss positional encoding and the attention layer, which is the core of the model.

Positional Encoding:

Positional encoding is used to embed information about the position of tokens (words or subwords) within the input sequence(s). These encodings have the same dimension as the input embeddings, allowing the two to be summed directly. With this information added, the model can determine the position of each token in the sequence more effectively. The positional encoding vector is calculated using sine and cosine functions for each dimension of the encoding vector and is defined by the equation below:

$$PE_{(pos,\,2i)} = \sin\!\left(\frac{pos}{10000^{2i/d_{model}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\!\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

where:

pos = position of the token in the sequence;

i = index of the dimension pair (dimensions 2i and 2i+1 share the same frequency);

d_model = dimensionality of the embeddings.
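The sine and cosine construction can be made concrete with a short NumPy sketch. It assumes the standard sinusoidal formulation from the original transformer paper, with even dimensions using sine and odd dimensions using cosine. The function name `positional_encoding` and the toy sizes are illustrative and not part of the project code, and an even embedding dimension is assumed.

```python
import numpy as np

def positional_encoding(seq_len: int, d_model: int) -> np.ndarray:
    """Return a (seq_len, d_model) matrix of sinusoidal positional encodings.

    Assumes d_model is even so that sine and cosine dimensions pair up.
    """
    pos = np.arange(seq_len)[:, np.newaxis]       # token positions, shape (seq_len, 1)
    i = np.arange(d_model // 2)[np.newaxis, :]    # pair index, shape (1, d_model / 2)
    angles = pos / np.power(10000.0, 2 * i / d_model)

    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)   # even dimensions: sine
    pe[:, 1::2] = np.cos(angles)   # odd dimensions: cosine
    return pe

pe = positional_encoding(seq_len=4, d_model=8)
print(pe.shape)
```

Because the result has the same shape as a (sequence length, embedding dimension) block of token embeddings, it can be added to them element-wise.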

Multi-head Attention:

Multi-head attention is used by transformer models to analyze different aspects of the input data simultaneously. The computation begins with an input X, a tensor with dimensions (batch size, sequence length, embedding dimension). The input tensor X then undergoes separate linear transformations to produce sets of queries (Q), keys (K), and values (V) for each head.

These transformations allow the model to attend, in parallel, to different representational subspaces at different positions. The attention scores for each head are then computed using the Scaled Dot-Product Attention mechanism. Finally, the outputs from all the heads are concatenated and undergo another linear transformation to produce the final output vector, integrating information from various perspectives and positions.
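The steps above (project, split into heads, apply scaled dot-product attention, concatenate, project again) can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the implementation inside BERT or MiniLM: the weights are random, biases and masking are omitted, and one full-width projection per Q, K, and V is split into heads, which is equivalent to a separate smaller projection for each head. All names here are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads):
    batch, seq_len, d_model = X.shape
    d_head = d_model // num_heads

    def split_heads(T):
        # (batch, seq_len, d_model) -> (batch, num_heads, seq_len, d_head)
        return T.reshape(batch, seq_len, num_heads, d_head).transpose(0, 2, 1, 3)

    Q, K, V = split_heads(X @ W_q), split_heads(X @ W_k), split_heads(X @ W_v)

    # Scaled dot-product attention, computed for every head in parallel.
    scores = Q @ K.transpose(0, 1, 3, 2) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)
    heads = weights @ V

    # Concatenate the heads and apply the output projection.
    concat = heads.transpose(0, 2, 1, 3).reshape(batch, seq_len, d_model)
    return concat @ W_o, weights

rng = np.random.default_rng(0)
X = rng.normal(size=(2, 5, 8))   # (batch size, sequence length, embedding dimension)
W_q, W_k, W_v, W_o = (rng.normal(size=(8, 8)) for _ in range(4))
out, weights = multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads=2)
print(out.shape, weights.shape)
```

The output keeps the shape of the input, while `weights` holds one sequence-by-sequence attention map per head, with each row summing to 1.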

A similarity measure is used to assess how close, related, or similar two vectors are to each other. It is essential for tasks such as information retrieval, clustering, and recommendation systems, where the goal is to group similar items or find items similar to a given query. Common methods for calculating similarity between vectors include Pearson correlation, cosine similarity, Euclidean distance, and Manhattan distance.

For this project, cosine similarity was chosen due to its widespread use in NLP and text analysis. One of its advantages is symmetry: the similarity between vectors A and B is the same as the similarity between vectors B and A. In addition, it depends on the direction rather than the magnitude of the vectors, making it suitable when magnitude is not the primary concern.

The cosine similarity of any two non-zero vectors is given as:

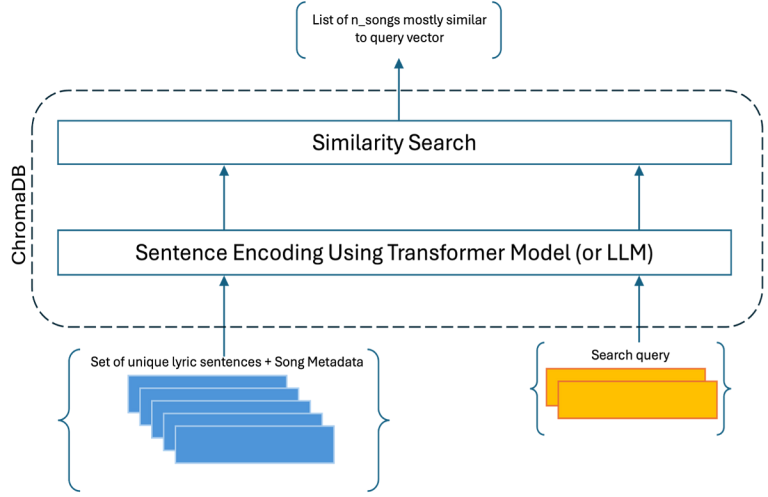
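A small NumPy sketch illustrates the two properties mentioned above, symmetry and insensitivity to magnitude. The helper name `cosine_similarity` and the toy vectors are illustrative assumptions, and the function expects non-zero inputs.

```python
import numpy as np

def cosine_similarity(a, b) -> float:
    """Dot product of a and b divided by the product of their Euclidean norms."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

a = [1.0, 2.0, 3.0]
b = [2.0, 4.0, 6.0]    # same direction as a, twice the magnitude
c = [3.0, -1.5, 0.0]   # orthogonal to a: 3 - 3 + 0 = 0

print(cosine_similarity(a, b))   # approximately 1: magnitude is ignored
print(cosine_similarity(a, c))   # 0: orthogonal vectors
print(cosine_similarity(a, c) == cosine_similarity(c, a))   # symmetry
```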

As mentioned earlier, the song finder consists of two parts: a sentence encoder and a similarity search. For the sentence encoding, two pretrained language models were used so that their results could be compared, since accuracy cannot readily be measured against ground truth. The models used are “all-MiniLM-L6-v2” and “BERT”.

The “all-MiniLM-L6-v2” model is a version of the MiniLM model, belonging to the family of Transformer-based models tailored for NLP tasks. MiniLM stands for “Miniaturized Language Model” and was developed to be a smaller and more efficient model compared to larger models such as BERT, while still delivering strong performance across various language understanding tasks.

BERT stands for Bidirectional Encoder Representations from Transformers and consists of multiple identical encoder layers. Each encoder block consists of a multi-head attention mechanism followed by a fully connected feed-forward network. The output of each of these sub-layers is wrapped in a residual connection and passed through a normalization layer, which helps stabilize learning in deep architectures. Please see the image below.

For this project, BERT-Base (the smaller of the original BERT versions) was used. BERT-Base consists of 12 encoder layers, each with an embedding dimension of 768 and 12 attention heads. The total number of parameters in BERT-Base is approximately 110 million.
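The figure of roughly 110 million parameters can be reproduced with some arithmetic. The sketch below counts weights and biases layer by layer. The layer count and embedding dimension come from the text above, while the vocabulary size, maximum sequence length, segment vocabulary, and feed-forward width are assumptions taken from the published `bert-base-uncased` configuration rather than from this article.

```python
hidden = 768           # embedding dimension (from the article)
layers = 12            # encoder layers (from the article)
vocab_size = 30522     # assumed: bert-base-uncased vocabulary size
max_positions = 512    # assumed: maximum sequence length
type_vocab = 2         # assumed: segment (token type) embeddings
ffn = 3072             # assumed: feed-forward width, 4 * hidden

def linear(n_in: int, n_out: int) -> int:
    """Parameters in a dense layer: weight matrix plus bias vector."""
    return n_in * n_out + n_out

layer_norm = 2 * hidden   # scale and shift vectors

embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm
attention = 4 * linear(hidden, hidden) + layer_norm    # Q, K, V, and output projections
feed_forward = linear(hidden, ffn) + linear(ffn, hidden) + layer_norm
pooler = linear(hidden, hidden)

total = embeddings + layers * (attention + feed_forward) + pooler
print(f"{total:,}")   # 109,482,240
```

The number of attention heads does not appear in the count, because the heads split the 768-dimensional projections rather than adding to them.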

An additional component of the song finder model not yet discussed is a vector database. These specialized databases are designed for storing, indexing, and retrieving vector embeddings. In this project, the vector embeddings represent the encoded lyric sentences for each song in our dataset. Key features of the vector database, beyond its scalability, include its integration with large language models and the efficient storage of high-dimensional data. For this task, the vector database used is ChromaDB. The full song-finder model architecture can be seen below:

The data used for this project comprises 10,550 songs prior to deduplication and over 170,000 unique lyric sentences following data cleaning. The song information was sourced from AIcrowd, and the lyrics were retrieved using the Genius API.

Setting Up the Model

To set up the model, the vector database (ChromaDB) was installed and initialized. Two collections were then created from the same dataset, each with a different sentence encoder. The first collection used “all-MiniLM-L6-v2”, while the second used “BERT Base” to encode its inputs. The purpose of this approach was to compare the results from both collections using a single query, since there is no defined accuracy metric for our task. Once the collections were established, the prepared data was loaded into each collection.

Note that, by default, “all-MiniLM-L6-v2” is the sentence encoder used by ChromaDB, and Euclidean distance is the default measure of similarity between vectors, so cosine similarity has to be requested explicitly when each collection is created.

```python
import chromadb
from tqdm import tqdm

# initializing the chromadb instance
db_path = '/content/drive/My Drive/chromaDB_collections'
chroma_client = chromadb.PersistentClient(path=db_path)

# creating the first collection using the default encoder and cosine similarity
lyrics_library = chroma_client.create_collection(
    name='lyrics_library',
    metadata={'hnsw:space': 'cosine'})

# preparing the data to be loaded into the vector database
# data_ is the cleaned dataset prepared earlier: an iterable of
# (lyric sentence, track name, uri, artist) tuples
documents = [x for x, y, z, a in data_]
uri = [z for x, y, z, a in data_]
metadata = [{'track_name': y, 'artist': a} for x, y, z, a in data_]
ids = ['id' + str(i + 1) for i in range(len(documents))]

# loading the data into the lyrics_library collection
batch_size = 32
for i in tqdm(range(0, len(documents), batch_size)):
    # slicing out the current batch
    documents_batch = documents[i:i + batch_size]
    uri_batch = uri[i:i + batch_size]
    metadatas_batch = metadata[i:i + batch_size]
    ids_batch = ids[i:i + batch_size]

    # adding the batch to the collection
    lyrics_library.add(
        documents=documents_batch,
        metadatas=metadatas_batch,
        uris=uri_batch,
        ids=ids_batch
    )
```

Using ChromaDB with a Custom Embedding Function (BERT)

ChromaDB provides the option to use custom embedding functions for encoding text. In this project, a pretrained BERT (base) model from the transformers library is used. For the embeddings, a custom class called “BertEmbeddingFunction” inherits from the ChromaDB superclass “EmbeddingFunction”. This custom class vectorizes text by passing it through BERT’s transformer architecture. In the __call__ method of the custom class, the final embedding is derived by averaging the last hidden state of the BERT model across tokens, giving a single summary vector that represents the input sentence or sequence. The final embedding is then converted to a list, as required by ChromaDB.
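The averaging step can be illustrated on a toy array before looking at the full class. The sketch below uses NumPy instead of TensorFlow and a made-up hidden state. It also shows a masked variant that ignores padding positions. The masked variant is an assumption offered for comparison and is not what the class in this article does, since a plain mean over the token axis includes padded positions.

```python
import numpy as np

def mean_pool(hidden_states, attention_mask=None):
    """Average token vectors into one vector per sequence.

    hidden_states: (batch, seq_len, dim)
    attention_mask: optional (batch, seq_len) array of 1 (real token) or 0 (padding)
    """
    if attention_mask is None:
        # Plain mean over the token axis, as in tf.reduce_mean(..., axis=1).
        return hidden_states.mean(axis=1)
    mask = attention_mask[:, :, np.newaxis].astype(float)
    # Sum only the real tokens and divide by how many there are.
    return (hidden_states * mask).sum(axis=1) / mask.sum(axis=1)

# One sequence of three token vectors; the third position stands in for padding.
hidden = np.array([[[1.0, 2.0], [3.0, 4.0], [0.0, 0.0]]])
mask = np.array([[1, 1, 0]])

plain = mean_pool(hidden)          # averages all three positions
masked = mean_pool(hidden, mask)   # averages only the first two
print(plain, masked)
```

In this toy case the plain mean is pulled toward the padding vector, while the masked mean reflects only the two real tokens.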

```python
# importing libraries
import tensorflow as tf
from tqdm import tqdm
from chromadb import Documents, EmbeddingFunction, Embeddings
from transformers import TFBertModel, BertTokenizer

# initializing the BERT model and tokenizer
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
bert_model = TFBertModel.from_pretrained('bert-base-uncased')

# creating the custom embedding function
class BertEmbeddingFunction(EmbeddingFunction):
    def __init__(self, model, tokenizer):
        self.model = model
        self.tokenizer = tokenizer

    def __call__(self, input: Documents) -> Embeddings:
        # tokenize the batch, padding to the longest text and truncating to BERT's limit
        bert_inputs = self.tokenizer(input, padding=True, truncation=True, return_tensors='tf')
        output = self.model(bert_inputs)
        # average the last hidden state over the token axis (padding positions included)
        embeddings = tf.reduce_mean(output.last_hidden_state, axis=1)
        return embeddings.numpy().tolist()

# creating the alternative collection using BERT for sentence encoding and cosine similarity
bert_library = chroma_client.create_collection(
    name='bert_library',
    metadata={'hnsw:space': 'cosine'},
    embedding_function=BertEmbeddingFunction(bert_model, tokenizer)
)

# loading the data into the bert_library collection
batch_size = 32
for i in tqdm(range(0, len(documents), batch_size)):
    # slicing out the current batch
    documents_batch = documents[i:i + batch_size]
    uri_batch = uri[i:i + batch_size]
    metadatas_batch = metadata[i:i + batch_size]
    ids_batch = ids[i:i + batch_size]

    # adding the batch to the collection
    bert_library.add(
        documents=documents_batch,
        metadatas=metadatas_batch,
        uris=uri_batch,
        ids=ids_batch)
```
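Once both collections are populated, the same query can be sent to each and the results compared side by side. The helper below is a hypothetical convenience, not part of the original project. It flattens the nested lists that ChromaDB's `query` method returns (one inner list per query text) into rows that are easy to print. The commented usage assumes the two collections created above, and the sample dictionary only mimics the shape of a query result so the helper can be tried without a database.

```python
def top_matches(results, query_index=0):
    """Flatten one query's results into (track, artist, lyric, distance) tuples."""
    rows = zip(
        results["metadatas"][query_index],
        results["documents"][query_index],
        results["distances"][query_index],
    )
    return [(m["track_name"], m["artist"], doc, dist) for m, doc, dist in rows]

# Hypothetical usage against the two collections:
# query = "a line of lyrics you half remember"
# for library in (lyrics_library, bert_library):
#     results = library.query(query_texts=[query], n_results=5)
#     for track, artist, lyric, dist in top_matches(results):
#         print(f"{dist:.3f}  {track} by {artist}: {lyric}")

# Stand-in data with the same nested-list shape as a query result.
sample = {
    "ids": [["id1", "id2"]],
    "documents": [["first lyric line", "second lyric line"]],
    "metadatas": [[
        {"track_name": "Track A", "artist": "Artist A"},
        {"track_name": "Track B", "artist": "Artist B"},
    ]],
    "distances": [[0.12, 0.34]],
}
matches = top_matches(sample)
print(matches)
```

With the cosine setting, the reported distance is one minus the cosine similarity, so smaller values indicate closer matches.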

Results and Discussion

Comparing the results of both models using the same query, the ‘all-MiniLM-L6-v2’ sentence transformer appears to outperform the BERT model based on qualitative assessment. Although the top result from both models is similar, subsequent results from ‘all-MiniLM-L6-v2’ match the query more closely, containing more elements of the query sentence in the returned sequences. This suggests that the accuracy of the song-finder model may depend significantly on the encoder’s ability to effectively model songwriting elements or ‘music language.’

Future work will involve fine-tuning the language model encoders on lyric data to improve the performance of the song finder.