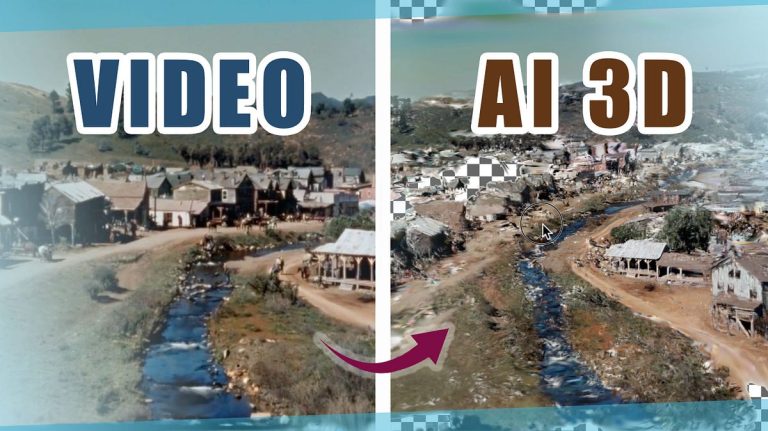

In this video, I’ll show you how to go from video to 3D using Gaussian Splatting and share my thoughts as a machine learning researcher.

Download a video generated by Sora.

Then, go to Polycam’s website and select Gaussian Splatting. Log in.

Upload the video. Wait a few minutes.

Note: The results are Gaussian splats, and they can be converted to meshes with other tools.

The first view was kinda cool. But the whole model is made up of many small pieces and is not that stable.

This Japanese street model is also fragmented. It has some details, but it's still not that great.

I think it’s because the camera moves forward in these videos, aka tracking shots. Let’s think about algorithms like NeRF and Gaussian Splatting. They require a sequence of image views AROUND the subject to learn a 3D representation.
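To make the "views AROUND the subject" point concrete, here is a minimal sketch that measures how much of the circle around a subject a set of camera positions covers, seen from above. The function name `angular_coverage_deg` and the 2D top-down setup are my own assumptions for illustration, not something Polycam or any Gaussian Splatting tool exposes.

```python
import math

def angular_coverage_deg(camera_positions, subject):
    """Arc (in degrees) around `subject` that the cameras span, top-down view.

    camera_positions: list of (x, z) ground-plane coordinates (hypothetical input).
    subject: (x, z) position of the thing we want to reconstruct.
    """
    sx, sz = subject
    # Direction from the subject to each camera, as an angle in [0, 360).
    angles = sorted(
        math.degrees(math.atan2(z - sz, x - sx)) % 360.0
        for x, z in camera_positions
    )
    if len(angles) < 2:
        return 0.0
    # Gaps between neighbouring directions, including the wrap-around gap.
    gaps = [b - a for a, b in zip(angles, angles[1:])]
    gaps.append(360.0 - angles[-1] + angles[0])
    # Everything except the largest empty gap counts as covered.
    return 360.0 - max(gaps)

# Tracking shot: the camera moves straight toward the subject.
tracking = [(0.0, -10.0), (0.0, -8.0), (0.0, -6.0)]

# Arc shot: the camera sweeps half a circle of radius 5 around the subject.
arc = [
    (5.0 * math.cos(math.radians(a)), 5.0 * math.sin(math.radians(a)))
    for a in (0, 45, 90, 135, 180)
]

tracking_cov = angular_coverage_deg(tracking, (0.0, 0.0))
arc_cov = angular_coverage_deg(arc, (0.0, 0.0))
```

In this toy setup the tracking shot always sees the subject from the same direction, so its coverage is zero, while the half-circle arc covers about 180 degrees. Real pipelines estimate camera poses in 3D, so treat this only as intuition.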

Let’s try some arc shots, where the camera rotates around the subject. We can only pick from Sora’s existing demos because Sora is not open to the public yet.

This one looks pretty similar. The original video was not a full 360 degrees, so the model is limited too.

I thought this one would generate good results, but the output doesn’t make sense at all.

To answer the question: yes, as we see, some of the AI videos can be converted to 3D models. Polycam might have sampled frames uniformly from the input video to create the multi-views, as if these images were taken at the same time. Then it applies Gaussian Splatting to compute the 3D representation.
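The uniform sampling guess above can be sketched like this. To be clear, I don't know what Polycam actually does internally. The function name `uniform_frame_indices` and its parameters are hypothetical; it just picks evenly spaced frame indices from a video.

```python
def uniform_frame_indices(total_frames, num_views):
    """Pick `num_views` evenly spaced frame indices from a video.

    Assumption: frames are numbered 0 .. total_frames - 1, and the first
    and last frames are always included when num_views >= 2.
    """
    if total_frames <= 0 or num_views <= 0:
        return []
    # Never ask for more views than there are frames.
    num_views = min(num_views, total_frames)
    if num_views == 1:
        return [0]
    last = total_frames - 1
    # Integer arithmetic keeps the result exact and strictly increasing.
    return [(i * last) // (num_views - 1) for i in range(num_views)]

# Example: a 10-frame clip reduced to 4 views.
indices = uniform_frame_indices(10, 4)
```

Each selected frame would then be treated as one view of a static scene, which is exactly the assumption that causes trouble when the video content changes over time.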

3D consistency

An object needs to maintain its shape and color even from different angles or at different points in time. If AI-generated videos don’t preserve this trait, then it will be hard to put the inconsistent information together into one 3D object.
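As a toy illustration of the color part of consistency, the sketch below takes the RGB values observed for the same surface point in several views and reports how far they drift apart. The function `color_spread` and the sample values are made up for this example. Real reconstruction methods measure consistency through a photometric loss over whole images, not a per-point check like this.

```python
def color_spread(observations):
    """Largest per-channel range across views for one surface point.

    observations: list of (r, g, b) tuples, one per view (hypothetical data).
    A small value means the point looks about the same in every view.
    """
    if not observations:
        return 0
    # Regroup the data as one tuple per channel: all reds, all greens, all blues.
    channels = zip(*observations)
    return max(max(values) - min(values) for values in channels)

# The same red wall seen from three angles, with only small lighting changes.
consistent = [(200, 30, 30), (198, 32, 29), (201, 31, 30)]

# The "same" point changes color between frames, as a generated video might do.
inconsistent = [(200, 30, 30), (40, 180, 60)]

small = color_spread(consistent)
large = color_spread(inconsistent)
```

The first point barely changes, while the second one changes a lot, and that kind of disagreement is what makes the reconstruction fall apart.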

Keeping Stationary

Remember the assumption: the views are treated as if they’re taken at the same time, so anything that moves between frames breaks it. It’s like when we use the iPhone’s panorama mode to stitch pictures. Sometimes it doesn’t generate good results.

Algorithm Limitations

Third, the conversion inherits limitations from the algorithms used, for example, Gaussian Splatting or NeRF. It’ll be hard if the subject is transparent.

Art Styles

If we want stylized 3D models, then the video generation pipeline needs to be able to generate certain art styles. This shouldn’t be challenging as long as we have such training data.

Can text-to-VIDEO models like Sora solve the problem of text-to-3D? From a technical perspective, there’s a possibility.

Right now, we see the scenes are not that great for 3D modeling. But remember, these AI videos were made for Sora’s demo purposes, not specifically for 3D reconstruction. Also, I only used a basic version of Gaussian Splatting via Polycam, and there are many other great methods to perform 3D reconstruction. In the future, the results should look much better with new algorithms and category-specific treatments.

Another difference I wanna point out is that all the videos we tried generate 3D SCENEs, and they are different from individual 3D objects. In my previous video, I mentioned that 3D modeling is widely used in the gaming industry. Game studios appreciate having clean 3D objects with clean topologies. Machine learning models like Sora can’t do this yet, so it may not fit that well into the industry’s needs.

Generating 3D scenes is still a big step, and it will be super helpful because, right now, there are not many algorithms to generate 3D scenes from scratch, like, without scanning any real objects.

In practice, game devs adopt a mainly procedural approach, placing existing 3D models at calculated locations.
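Here is a minimal sketch of what "placing existing 3D models at calculated locations" can look like. It is a made-up example: the function `place_on_grid`, the model names, and the grid-plus-jitter rule are my assumptions, not how any particular game engine does it.

```python
import random

def place_on_grid(model_names, rows, cols, spacing, jitter=0.0, seed=0):
    """Assign existing models to grid cells and return (name, x, z) placements.

    model_names: names of already-made 3D assets (hypothetical).
    spacing: distance between neighbouring grid cells.
    jitter: maximum random offset per axis, so the layout looks less regular.
    seed: fixed seed so the same scene is generated every time.
    """
    rng = random.Random(seed)
    placements = []
    for r in range(rows):
        for c in range(cols):
            # Cycle through the available models cell by cell.
            name = model_names[(r * cols + c) % len(model_names)]
            x = c * spacing + rng.uniform(-jitter, jitter)
            z = r * spacing + rng.uniform(-jitter, jitter)
            placements.append((name, x, z))
    return placements

# A tiny 2 x 2 street block with trees and lamps, 4 units apart, no jitter.
scene = place_on_grid(["tree", "lamp"], rows=2, cols=2, spacing=4.0)
```

The point is that the models themselves already exist, and the procedure only decides where they go. A video-to-3D approach would instead produce the scene geometry itself.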

The new video-to-3D approach will make it easier to create 3D scenes, which is good for movies and so on. I’m not a camera expert, but technically, directors can generate a virtual space with AI and use a camera in the 3D-rendered scene for filming.

Luma allows people to scan objects using their smartphones and remix the 3D models. They recently hired a researcher from VideoPoet, Google’s text-to-video team. Is Luma planning something in video generation too? We’ll see.

Genmo AI, another AI startup, started with AI 3D. They soon shifted focus to text-to-video generation. Their founders are top researchers in AI 3D. I wouldn’t be surprised if one day they come back to the 3D generation space.

If you’re interested in AI, especially video and 3D generation, you can follow me, and let’s explore together! See you next time.