AI and ML are top of mind for most data teams, and rightfully so. Companies are achieving real impact with AI, and data teams sit right in the middle of it, which gives them a much-desired way of tying their work to ROI.

In a recent example, AI helped automate the work of 700 full-time agents at the Swedish ‘buy now, pay later’ fintech Klarna. Intercom is now an AI-first customer service platform, and executives have OKRs directly tied to implementing Gen AI use cases.

“The AI assistant has had 2.3 million conversations, two-thirds of Klarna’s customer service chats. It’s doing the equivalent work of 700 full-time agents. It’s on par with human agents in regard to customer satisfaction scores. It’s more accurate in errand resolution, leading to a 25% drop in repeat inquiries” (Klarna)

In this post, we’ll look into what this means if you work in a data team.

- State of AI in data teams: how data practitioners use and expect to use ML and AI

- AI and ML use cases: the most popular ways data teams can apply different types of models

- Data quality in AI and ML systems: common data quality issues and how to spot them

- The five steps to reliable data: how to build AI and ML systems that are fit for purpose for business-critical use cases

AI is getting good. Really good, in fact. Stanford’s 2024 AI Index Report shows that AI has surpassed human performance on several benchmarks, including some in image classification, visual reasoning, and English understanding.

This has led to a surge in the demand for ML and AI, forcing many data teams to reprioritize their work. It has also left the question of the data team’s role in ML and AI unanswered. In our experience, the lines between what’s owned by data and what’s owned by engineers are still blurred.

“We have a ratio of three to one machine learning engineers per data scientist, highlighting the share of work that it takes to go from a prototype to a model that works in production” (Chief Data Officer, UK fintech)

dbt recently surveyed thousands of data practitioners, shedding light on data teams’ involvement in AI and ML.

There are signs of AI adoption, but most data teams are still not using it in their day-to-day work. Only a third of respondents currently manage data for AI model training.

This may change soon: 55% expect to soon reap benefits from AI for self-serve data exploration, among other use cases.

This reflects our experience from talking to more than a thousand data teams. Current efforts are concentrated primarily on preparing data for analysis, maintaining dashboards, and supporting stakeholders, but the desire to invest in AI and ML is there.

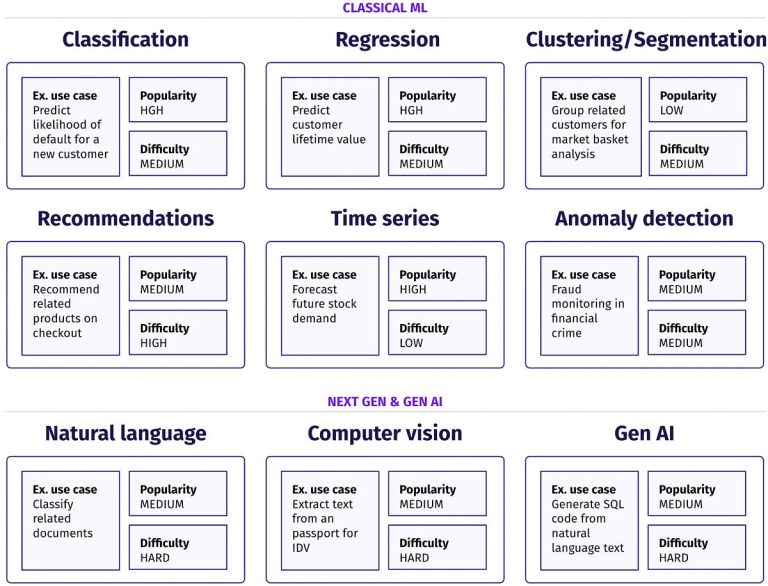

Although it may not seem so, ML and AI have existed for decades. While Gen AI models may be best suited to cutting-edge use cases such as generating SQL code from text or automatically answering business questions, more proven methods such as classification and regression models serve a purpose, too.

Below are some of the most popular methods.

Most teams still don’t use traditional ML, such as classification, regression, and anomaly detection. These methods can be powerful, especially for supervised learning where you have a clear outcome you want to predict (e.g., risky customer) and predictive features (e.g., signup country, age, previous fraud). These systems are often easier to explain: you can extract the relative importance of each feature, which helps explain to stakeholders why a decision to reject a high-risk customer was made.

The ML system below highlights a customer risk scoring model that predicts how likely it is that new sign-ups are risky customers and should be rejected.

Raw data is collected from different sources and used to build predictive features, combining the data scientist’s domain expertise with unexpected patterns the model identifies. Key concepts are:

- Sources & data marts: raw and unprocessed data, extracted from the systems the data scientist deems relevant

- Features: preprocessed data that is fed into the ML model (e.g., distance to a large city, age, previous fraud)

- Labels: target outputs based on previous risky customers (yes/no)

- Training: the iterative process of teaching a machine learning model to make accurate predictions by adjusting its internal parameters or weights based on labeled examples

- Inference: using the trained machine learning model to make predictions or classifications on new, unseen data after the training phase
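To make the concepts above concrete, here is a minimal sketch of features, labels, training, and inference using a hand-rolled logistic regression in plain Python. The data, the feature scaling, and the function names (`train`, `predict_risk`) are illustrative assumptions, not taken from a real risk model; in practice you would more likely reach for an ML library or a warehouse-native option.

```python
import math

# Hypothetical, hand-made training data: each row is one past sign-up.
# Features: [distance_to_large_city_km / 100, age / 100, previous_fraud (0 or 1)]
features = [
    [0.05, 0.45, 0.0],
    [0.10, 0.38, 0.0],
    [0.20, 0.52, 0.0],
    [0.90, 0.21, 1.0],
    [1.20, 0.19, 1.0],
    [0.80, 0.23, 0.0],
]
# Labels: 1 = turned out to be a risky customer, 0 = did not.
labels = [0, 0, 0, 1, 1, 1]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_risk(weights, bias, row):
    """Inference: score one sign-up with the trained parameters."""
    z = bias + sum(w * x for w, x in zip(weights, row))
    return sigmoid(z)


def train(features, labels, learning_rate=0.5, epochs=2000):
    """Training: adjust weights with plain gradient descent on log loss."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for row, label in zip(features, labels):
            error = predict_risk(weights, bias, row) - label
            for i, x in enumerate(row):
                grad_w[i] += error * x
            grad_b += error
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


weights, bias = train(features, labels)

# Two unseen sign-ups: one resembling past safe customers, one resembling risky ones.
new_signup_low = [0.08, 0.40, 0.0]
new_signup_high = [1.00, 0.20, 1.0]
print("low-risk example score:", predict_risk(weights, bias, new_signup_low))
print("high-risk example score:", predict_risk(weights, bias, new_signup_high))
print(dict(zip(["distance", "age", "previous_fraud"], weights)))
```

Because the features in this toy example are on roughly comparable scales, the sign and size of each weight give a rough indication of how much each feature pushes the score up or down. With unscaled features that reading would not hold.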

From our work with data teams, we see a larger part of the classical ML workflow moving to the data warehouse, which serves as the backbone for data sources and feature stores. Major data warehouses have started integrating this directly into their offering (e.g., BigQuery ML), hinting at a future where the end-to-end ML workflow moves entirely to the data warehouse.

Typical challenges for the success of classical ML models are:

- Can the model predict the desired outcome with the right level of accuracy and precision given the data that’s available?

- Is the ROI to the business of the achieved levels of accuracy and precision sufficient?

- What’s the trade-off we have to make to make this work (e.g., more operational people to review risky customers)?

- What’s the upkeep in maintaining and monitoring the model?

Next-gen, and particularly Gen AI, use cases have been the talk of the town for the past few years, popularized by the effectiveness of ChatGPT. While the space is new and business ROI is still to be proven, the potential is huge.

Below are some of the most popular Gen AI use cases we’ve seen for data teams.

Today’s use cases can broadly be grouped into two areas.

- Business value gains: Automate or optimize a business process, such as automating simple customer interactions in a customer support chatbot or matching customer answers to related knowledge base articles.

- Data team productivity gains: Simplify core data workflows, enabling less tech-savvy analysts to write SQL code with ‘text to SQL’ or reducing ad hoc requests from business stakeholders by generating answers from natural language questions.

Below is a sample architecture for setting up your own version of ChatGPT over a specific corpus of data relevant to your business. The system consists of two parts: (1) data ingestion of your domain data and (2) querying the data to answer questions in real time.

Example information retrieval system (source: LangChain)

The first step is loading your documents into a vector store. This may include combining data from different sources, working with engineers on raw exports, and manually removing data that you don’t want the model to learn from (e.g., support answers with low customer satisfaction).

- Load data sources to text from your specific text corpus

- Preprocess and chunk text into smaller pieces

- Create embeddings to build a vector space of words based on their similarity

- Load embeddings into a vector store
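The chunking step above can be sketched in a few lines. This is a minimal word-based splitter with overlap, written under the assumption that chunks are measured in words; the function name `chunk_text` and its parameters are hypothetical, and real pipelines often chunk by tokens, sentences, or document structure instead.

```python
def chunk_text(text: str, chunk_size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into overlapping chunks of at most `chunk_size` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


article = (
    "To reset your password open the login page and choose forgot password "
    "then follow the link we send to your registered email address"
)
for chunk in chunk_text(article):
    print(chunk)
```

The overlap means a sentence that straddles a chunk boundary still appears intact in at least one chunk, at the cost of storing some words twice.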

If you’re unfamiliar with embeddings, they’re numerical representations of words or documents that capture semantic meaning and relationships between them in a high-dimensional vector space. If you run the code snippet below, you can see what it looks like in practice.

```python
from gensim.models import Word2Vec

# Define a corpus of sentences
corpus = [
    "the cat sat on the mat",
    "the dog barked loudly",
    "the sun is shining brightly",
]

# Tokenize the sentences
tokenized_corpus = [sentence.split() for sentence in corpus]

# Train Word2Vec model (vector_size=3 keeps the printed vectors short)
model = Word2Vec(sentences=tokenized_corpus, vector_size=3, window=5, min_count=1, sg=0)

# Get word embeddings
word_embeddings = {word: model.wv[word].tolist() for word in model.wv.index_to_key}

# Print word embeddings
for word, embedding in word_embeddings.items():
    print(f"{word}: {embedding}")
```

Once you’ve ingested your domain data into the vector store, you can extend the system to answer questions related to your domain by fine-tuning a pre-trained LLM.

Example information retrieval system (source: LangChain)

- The user can ask follow-up questions by combining the chat history and the new question.

- Using the embeddings and the vector store from above, find relevant documents.

- Generate a response from the relevant documents using a large language model such as ChatGPT.
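The query steps above can be sketched as follows. To stay self-contained, this example uses word counts as a stand-in for real embeddings and an in-memory list as a stand-in for a vector store; `embed`, `retrieve`, and `build_prompt` are hypothetical helper names, and the final call to a language model is deliberately left out.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


documents = [
    "how to reset your password",
    "how to change your delivery address",
    "refund policy for late deliveries",
]
# Stand-in for a vector store: each document paired with its vector.
vector_store = [(doc, embed(doc)) for doc in documents]


def retrieve(question: str, chat_history: list[str], top_k: int = 1) -> list[str]:
    """Combine chat history with the new question, then rank documents by similarity."""
    query_vector = embed(" ".join(chat_history + [question]))
    ranked = sorted(
        vector_store,
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [doc for doc, _ in ranked[:top_k]]


def build_prompt(question: str, context_docs: list[str]) -> str:
    """Assemble the text that would be sent to the language model."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


docs = retrieve("what is the policy", chat_history=["I need a refund"])
print(build_prompt("what is the policy", docs))
```

Note how the follow-up question "what is the policy" only finds the refund document because the chat history is included in the query.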

Luckily, companies like Meta and Databricks have trained and open-sourced models you can use (Hugging Face currently has 1000+ Llama 3 open-source models), so you don’t have to spend millions of dollars training your own. Instead, just fine-tune an existing model with your data.

The effectiveness of LLM-based systems, such as the one above, is only as good as the data they’re being fed. Therefore, data practitioners are encouraged to feed in as much data as possible from various sources. A top priority should be to trace where these sources come from and whether data flows as expected.

Typical challenges for the success of Gen AI models are:

- Do you have sufficient data to train a model that’s good enough, and is some data off-limits due to privacy concerns?

- Does the model need to be interpretable and explainable to, e.g., customers or regulators?

- What are the potential costs of training and fine-tuning the LLM? Do the benefits outweigh these costs?

If your primary data deliverable is ad hoc insights or data powering BI dashboards for decision-making, there’s a human in the loop. Thanks to human intuition and expectations, data issues and unexplainable trends are often caught, most likely within a few days.

ML and AI systems are different.

It’s not uncommon for ML systems to depend on features from hundreds or even thousands of different sources. What may appear to be simple data issues (missing data, duplicates, null or empty values, or outliers) can cause business-critical issues. You can think about these in three different ways.

- Business outages: A critical error, such as all user_ids being empty, can cause a 90% drop in the new user signup approval rate. These types of issues are costly but often spotted early.

- Drift or ‘silent’ issues: These can involve a change in customer distribution or a missing value for a specific segment, which causes systematically incorrect predictions. They’re hard to spot and may persist for months or even years.

- Systematic bias: With Gen AI, human judgment or decisions on data collection can cause bias. Recent examples, such as biases in Google’s Gemini model, have highlighted the consequences this can have.

Whether you’re supporting a regression model or building a new corpus of text for an LLM, unless you’re a researcher developing new models, chances are that most of your work will revolve around data collection and preprocessing.

ML systems are large ecosystems of which the model is just a single part (Google, on production ML systems)

“You’re so far downstream as the ML team. Almost any upstream issue will impact us, but we’re often the last to know.” (Mobility scaleup)

As a rule of thumb, the further upstream (to the left) you are, the harder it is to monitor for errors. With hundreds of inputs and raw sources, often outside the data practitioners’ control, data can go wrong in thousands of ways.

Model performance can more easily be monitored with well-established metrics such as ROC AUC and F1 score, which give you a single-metric benchmark of your model’s performance.
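For reference, both metrics can be computed from scratch in a few lines. The sketch below uses made-up labels and scores and hypothetical function names; in practice you would typically rely on a metrics library rather than your own implementation.

```python
def precision_recall_f1(y_true, y_pred):
    """Precision, recall, and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def roc_auc(y_true, scores):
    """ROC AUC as the share of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Made-up example: true labels and model scores for eight sign-ups.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.7, 0.1, 0.6]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # classify at a 0.5 threshold

print(precision_recall_f1(y_true, y_pred))
print(roc_auc(y_true, scores))
```

F1 depends on the chosen threshold, while ROC AUC summarizes ranking quality across all thresholds, which is why teams often track both.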

Examples of upstream data quality issues

- Missing data: Incomplete or missing values in the dataset can impact the model’s ability to generalize and make accurate predictions.

- Inconsistent data: Variability in data formats, units, or representations across different sources or over time can introduce inconsistencies, leading to confusion and errors during model training and inference.

- Outliers: Anomalies or outliers in the data, significantly different from most observations, can affect model training and result in biased or inaccurate predictions.

- Duplicate records: Duplicate entries in the dataset can skew the model’s learning process and lead to overfitting, where the model performs well on training data but poorly on new, unseen data.
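Each of the four issue types above can be turned into a simple check. The following is a minimal sketch over a handful of made-up rows; the column names, the upper-case convention for country codes, and the outlier threshold of 3.5 on the modified z-score are assumptions for illustration, and production checks would usually run as SQL or in a data testing tool.

```python
from statistics import median

# Made-up sign-up rows containing one of each issue type.
rows = [
    {"user_id": "u1", "signup_country": "SE", "age": 34},
    {"user_id": "u2", "signup_country": "se", "age": 29},   # inconsistent casing
    {"user_id": "u2", "signup_country": "se", "age": 29},   # duplicate record
    {"user_id": "u3", "signup_country": None, "age": 41},   # missing value
    {"user_id": "u4", "signup_country": "DK", "age": 230},  # outlier
    {"user_id": "u5", "signup_country": "NO", "age": 38},
]


def missing_rate(rows, column):
    """Share of rows where the column is None or an empty string."""
    return sum(1 for r in rows if r.get(column) in (None, "")) / len(rows)


def duplicate_count(rows, key):
    """Number of rows whose key value was already seen earlier."""
    seen, dupes = set(), 0
    for r in rows:
        if r[key] in seen:
            dupes += 1
        seen.add(r[key])
    return dupes


def inconsistent_values(rows, column):
    """Distinct values that break the assumed upper-case convention."""
    return sorted({r[column] for r in rows if r[column] and r[column] != r[column].upper()})


def outliers(rows, column, threshold=3.5):
    """Flag values whose modified z-score (based on median absolute deviation) is large."""
    values = [r[column] for r in rows if r[column] is not None]
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []
    return [v for v in values if 0.6745 * abs(v - med) / mad > threshold]


print("missing signup_country:", missing_rate(rows, "signup_country"))
print("duplicate user_ids:", duplicate_count(rows, "user_id"))
print("inconsistent countries:", inconsistent_values(rows, "signup_country"))
print("age outliers:", outliers(rows, "age"))
```

The median-based outlier rule is used here because a single extreme value such as an age of 230 would distort a mean and standard deviation computed on so few rows.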

Examples of data drift

- Seasonal product preferences: Shifts in customer preferences during different seasons impact e-commerce recommendations.

- Financial market changes: Sudden fluctuations in the market due to economic events impact stock price prediction models.
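One common way to put a number on drift like this is the Population Stability Index (PSI), which compares how a feature is distributed in a reference period versus a recent one. The sketch below is a simplified version with equal-width buckets and made-up age data; the bucket count and the 0.25 alert level are rules of thumb rather than fixed standards, and the function name is our own.

```python
import math


def population_stability_index(expected, actual, bins=5):
    """PSI between a reference sample and a recent sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width) if width else 0
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the reference range
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    e = bucket_shares(expected)
    a = bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Made-up example: customer ages at training time versus this month.
training_ages = list(range(20, 60))
recent_ages = [age + 15 for age in training_ages]  # the customer base got older

psi = population_stability_index(training_ages, recent_ages)
print(f"PSI: {psi:.2f}")
if psi > 0.25:  # rule-of-thumb level for a significant shift
    print("Significant drift: review the model's inputs")
```

A check like this runs without labels, so it can flag a shifted input distribution long before degraded predictions show up in business metrics.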

Examples of data quality issues with text data for an LLM

- Low-quality input data: A chatbot relies on accurate historical case resolutions. The bot’s effectiveness depends on the accuracy of this data to avoid misleading or damaging advice. Answers with a low customer satisfaction or resolution score can indicate that the model may have learned the wrong information.

- Outdated data: A medical advice bot may rely on outdated information, resulting in less relevant recommendations. Research created before a specific date may indicate that it’s no longer fit for purpose.
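Both issues lend themselves to a simple filter applied before documents reach the model. The sketch below assumes each document carries a satisfaction score and a creation date; the field names, thresholds, and the `split_corpus` helper are hypothetical.

```python
from datetime import date

# Made-up support answers with assumed metadata fields.
support_answers = [
    {"id": "a1", "satisfaction": 4.7, "created": date(2024, 3, 10)},
    {"id": "a2", "satisfaction": 1.5, "created": date(2024, 2, 2)},
    {"id": "a3", "satisfaction": 4.9, "created": date(2019, 6, 30)},
]


def split_corpus(docs, min_satisfaction, not_older_than):
    """Separate documents to keep from documents to drop, recording why each was dropped."""
    kept, dropped = [], []
    for doc in docs:
        reasons = []
        if doc["satisfaction"] < min_satisfaction:
            reasons.append("low satisfaction")
        if doc["created"] < not_older_than:
            reasons.append("outdated")
        if reasons:
            dropped.append((doc["id"], reasons))
        else:
            kept.append(doc["id"])
    return kept, dropped


kept, dropped = split_corpus(support_answers, min_satisfaction=3.0, not_older_than=date(2022, 1, 1))
print("kept:", kept)
print("dropped:", dropped)
```

Recording the reason for each dropped document makes it easier to audit later why a given answer never reached the model.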

We believe that data teams are often not trusted to deliver reliable data systems compared to their counterparts in software engineering. The AI wave is exponentially scaling the “garbage in, garbage out” problem and all its implications, at a time when every company is under pressure to find new ways to activate data for competitive advantage.

While there are specific tools and systems to monitor model performance, these often don’t consider upstream sources and data transformations in the data warehouse. Data reliability platforms are built for this.

To support and maintain high-quality, production-grade ML and AI systems, data teams must adopt best practices from engineers.

- Diligent testing: upstream sources and outputs feeding into ML and AI systems must be intentionally tested (e.g., outliers, null values, distribution shifts, and freshness)

- Ownership management: ML and AI systems must have clear owners assigned who are notified of issues and are expected to act

- Incident process: severe issues should be treated as incidents with clear SLAs and escalation paths

- Data product mindset: the entire value chain feeding into ML and AI systems should be considered as one product

- Data quality metrics: the data team should be able to report on key metrics such as uptime, errors, and SLAs of their ML and AI systems
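As a rough illustration of how testing, ownership, incident handling, and metrics fit together, the sketch below attaches an owner and a severity to each check and reports a pass rate. The `DataCheck` structure, team names, and thresholds are all hypothetical; dedicated data testing and reliability tools cover this in far more depth.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable


@dataclass
class DataCheck:
    name: str
    owner: str                  # ownership: who is notified on failure
    severity: str               # "incident" or "warning"
    passes: Callable[[], bool]  # the test itself


def is_fresh(last_loaded_at: datetime, now: datetime, max_age: timedelta) -> bool:
    return now - last_loaded_at <= max_age


def null_rate_ok(values: list, max_null_rate: float) -> bool:
    nulls = sum(1 for v in values if v is None)
    return nulls / len(values) <= max_null_rate


def run_checks(checks: list[DataCheck]) -> list[dict]:
    """Run every check and record who to notify and whether to open an incident."""
    results = []
    for check in checks:
        ok = check.passes()
        results.append({
            "check": check.name,
            "ok": ok,
            "notify": None if ok else check.owner,
            "open_incident": not ok and check.severity == "incident",
        })
    return results


def pass_rate(results: list[dict]) -> float:
    """A simple data quality metric: the share of checks that passed."""
    return sum(1 for r in results if r["ok"]) / len(results)


now = datetime(2024, 6, 1, 12, 0)
checks = [
    DataCheck("signups_fresh", "team-onboarding", "incident",
              lambda: is_fresh(datetime(2024, 6, 1, 2, 0), now, timedelta(hours=6))),
    DataCheck("age_null_rate", "team-risk-ml", "warning",
              lambda: null_rate_ok([34, None, 29, 41], max_null_rate=0.5)),
]
results = run_checks(checks)
for result in results:
    print(result)
print("pass rate:", pass_rate(results))
```

Keeping the owner and severity next to the test definition means a failing check already knows who should act and how urgently.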

Just focusing on one of the pillars is rarely sufficient. Issues may slip through the cracks if you over-invest in testing without clear ownership. If you invest in ownership but don’t intentionally manage incidents, severe issues may go unsolved for too long.

Importantly, implementing the five data reliability pillars doesn’t mean you won’t have issues. But it does mean you’re more likely to discover them proactively, build confidence, and communicate to your stakeholders how you’re improving over time.

While only 33% of data teams currently support AI and ML models, most expect to in the near future. This shift means data teams must adjust to a new world of supporting business-critical systems and work more like software engineers.

- State of AI in data teams: AI systems are improving, with several benchmarks such as image classification, visual reasoning, and English understanding surpassing the human-level benchmark. 33% of data teams currently use AI and ML in production, but 55% of teams expect to.

- AI use cases: There is a wealth of different ML and AI use cases, ranging from classification and regression to Gen AI. Every system poses challenges, but the difference between “classical ML” and Gen AI is stark. We looked into this through the lens of a classical customer risk prediction model and an information retrieval chatbot.

- Data quality in AI and ML systems: Data quality is one of the most important risks to the success of ML and AI projects. With AI and ML models often relying on hundreds of data sources, manually detecting issues is almost impossible.

- The five steps to reliable data: To support and maintain ML and AI systems, data teams must work more like engineers. This includes diligent testing, clear ownership, incident management processes, a data product mindset, and being able to report on metrics such as uptime and SLAs.