The most natural way to use a model to build an output sequence is to gradually predict the next-best token, append it to the generated sequence, and continue until the end of generation. This is called greedy search, and it is the simplest and most efficient way to generate text from an LLM (or other model). In its most basic form, it looks something like this:

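```python
# `model` is assumed to be a callable that returns the single most likely next token
def greedy_search(model, context, max_length=256):
    sequence = ["<start>"]
    # Stop at the end token (or a length cap, so generation always terminates)
    while sequence[-1] != "<end>" and len(sequence) < max_length:
        # Given the input context and the sequence so far, append the most likely next token
        sequence.append(model(context, sequence))
    return "".join(sequence)
```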

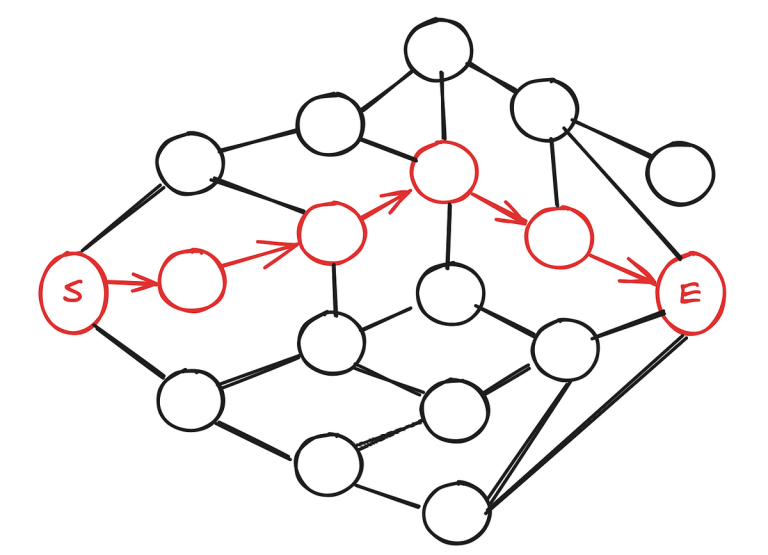

Undergrad Computer Science algorithms classes have a section on graph traversal algorithms. If you model the universe of potential LLM output sequences as a graph of tokens, then the problem of finding the optimal output sequence, given input context, closely resembles the problem of traversing a weighted graph. In this case, the edge “weights” are the model’s next-token probabilities (derived from its output logits), and the goal of the traversal is to minimize the overall cost (maximize the overall probability) from beginning to end.

Out of all possible text generation methods, this is the most computationally efficient: the number of inferences is 1:1 with the number of output tokens. However, there are some problems.

At every step of token generation, the algorithm selects the highest-probability token given the output sequence so far, and appends it to that sequence. This is both the simplicity and the flaw of this approach, as with all other greedy algorithms: it gets trapped in local minima. Meaning, what appears to be the next-best token right now may not, in fact, be the next-best token for the generated output overall.

"We can treat it as a matter of"

[course (p=0.9) | principle (p=0.5) | cause (p=0.2)]

Given some input context and the generated string so far, “We can treat it as a matter of course” seems like a logical and likely sequence to generate.

But what if the contextually accurate sentence is “We can treat it as a matter of cause and effect”? Greedy search has no way to backtrack and rewrite the sequence token “course” with “cause and effect”. What seemed like the best token at the time actually trapped output generation in a suboptimal sequence.
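To make the trap concrete, here is a minimal sketch using a hypothetical toy “model”: a hand-written lookup table from a prefix to next-token probabilities. The numbers are invented for illustration (not taken from a real LLM), and `greedy` and `exhaustive` are illustrative helper names. Greedy decoding commits to “course” at the first step, while an exhaustive search over every path finds that “cause and effect” has the higher overall probability.

```python
import math

# Hypothetical toy "model": prefix (tuple of tokens) -> next-token probabilities.
# Invented numbers for illustration only.
TOY_MODEL = {
    (): {"course": 0.5, "cause": 0.4, "principle": 0.1},
    ("course",): {"<end>": 0.4, "and": 0.3, ",": 0.3},
    ("course", "and"): {"<end>": 1.0},
    ("course", ","): {"<end>": 1.0},
    ("cause",): {"and": 0.9, "<end>": 0.1},
    ("cause", "and"): {"effect": 0.95, "<end>": 0.05},
    ("cause", "and", "effect"): {"<end>": 1.0},
    ("principle",): {"<end>": 1.0},
}


def greedy(model):
    """Always take the single most likely next token until <end>."""
    seq, logp = (), 0.0
    while not seq or seq[-1] != "<end>":
        token, p = max(model[seq].items(), key=lambda kv: kv[1])
        seq, logp = seq + (token,), logp + math.log(p)
    return seq, logp


def exhaustive(model, seq=()):
    """Explore every path and return the one with the highest total log-prob
    (equivalently, the lowest total cost if each edge costs -log p)."""
    if seq and seq[-1] == "<end>":
        return seq, 0.0
    best = None
    for token, p in model[seq].items():
        path, logp = exhaustive(model, seq + (token,))
        logp += math.log(p)
        if best is None or logp > best[1]:
            best = (path, logp)
    return best


for name, (seq, logp) in [("greedy", greedy(TOY_MODEL)), ("exhaustive", exhaustive(TOY_MODEL))]:
    print(f"{name:>10}: {' '.join(seq)}  p={math.exp(logp):.3f}")
```

Greedy ends with a sequence probability of 0.5 × 0.4 = 0.2, while the best path scores 0.4 × 0.9 × 0.95 = 0.342, even though its first token looked worse.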

The need to account for lower-probability tokens at each step, in the hope that better output sequences are generated later, is where beam search is useful.

Returning to the graph-search analogy, in order to generate the optimal text for any given query and context, we’d have to fully explore the universe of potential token sequences. The solution resembles the A* search algorithm (more closely than Dijkstra’s algorithm, since we don’t necessarily want the shortest path, but the lowest-cost/highest-likelihood one).

Since we’re working with natural language, the complexity involved is far too high to exhaust the search space for every query in most contexts. The solution is to trim that search space down to a reasonable number of candidate paths through the candidate token graph; maybe just 4, 8, or 12.
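A rough back-of-the-envelope calculation shows the scale. The numbers below are assumptions for illustration only: a vocabulary of about 32,000 tokens (the same order of magnitude as T5’s SentencePiece vocabulary), a 20-token output, and a beam width of 4.

```python
import math

# Illustrative assumptions, not measurements
VOCAB_SIZE = 32_000  # roughly the size of T5's vocabulary
OUTPUT_LENGTH = 20   # tokens to generate
BEAM_WIDTH = 4

# Exhaustive search: every token can follow every prefix
exhaustive_paths = VOCAB_SIZE ** OUTPUT_LENGTH

# Beam search: at most BEAM_WIDTH forward passes per step, each scoring the
# whole vocabulary, before pruning back down to BEAM_WIDTH candidates
model_calls = BEAM_WIDTH * OUTPUT_LENGTH
beam_scored = model_calls * VOCAB_SIZE

print(f"exhaustive search: about 10^{math.log10(exhaustive_paths):.0f} sequences")
print(f"beam search: at most {model_calls} forward passes, {beam_scored:,} scored continuations")
```

Even with generous assumptions, the exhaustive count has around 90 digits, while beam search stays linear in both the beam width and the output length.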

Beam search is the heuristic commonly used to approximate that ideal A*-like outcome. This technique maintains k candidate sequences, which are incrementally built up with their respective top-k most likely tokens. Each of these tokens contributes to an overall sequence score, and after each step, the total set of candidate sequences is pruned down to the best-scoring top k.

The “beam” in beam search borrows the analogy of a flashlight, whose beam can be widened or narrowed. Taking the example of generating “the quick brown fox jumps over the lazy dog” with a beam width of 2, the process looks something like this:

At this step, two candidate sequences are being maintained: “the” and “a”. Each of these two sequences needs to evaluate the top two most likely tokens to follow.

After the next step, “the speedy” has been eliminated, and “the quick” has been selected as the first candidate sequence. For the second, “a lazy” has been eliminated, and “a quick” has been selected, as it has a higher cumulative probability. Note that if both candidates above the line have a higher likelihood than both candidates below the line, then they will represent the two candidate sequences after the subsequent step.

This process continues until either a maximum token length limit has been reached, or all candidate sequences have appended an end-of-sequence token, meaning we’ve concluded generating text for those sequences.

Increasing the beam width enlarges the search space, increasing the likelihood of a better output, but at a corresponding increase in memory and computational cost. Also note that a beam search with beam_width=1 is effectively identical to greedy search.
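Here is a minimal, self-contained sketch of the procedure described above, run over a hypothetical toy lookup-table “model” (invented probabilities, not a real LLM). It is not the implementation used later in this post: it keeps `beam_width` candidates, expands each with its top-`beam_width` next tokens, sums log-probabilities as the sequence score, carries finished sequences forward unchanged, and prunes the pool back to the best `beam_width` after every step.

```python
import math

# Hypothetical toy "model" (invented probabilities): prefix -> next-token probabilities
TOY_MODEL = {
    (): {"course": 0.5, "cause": 0.4, "principle": 0.1},
    ("course",): {"<end>": 0.4, "and": 0.3, ",": 0.3},
    ("course", "and"): {"<end>": 1.0},
    ("course", ","): {"<end>": 1.0},
    ("cause",): {"and": 0.9, "<end>": 0.1},
    ("cause", "and"): {"effect": 0.95, "<end>": 0.05},
    ("cause", "and", "effect"): {"<end>": 1.0},
    ("principle",): {"<end>": 1.0},
}


def beam_search(model, beam_width, max_length=10):
    # Each candidate is (tokens, cumulative log-probability); start empty with score 0
    candidates = [((), 0.0)]
    for _ in range(max_length):
        next_candidates = []
        for seq, score in candidates:
            if seq and seq[-1] == "<end>":
                # Finished sequences are carried forward unchanged
                next_candidates.append((seq, score))
                continue
            # Expand this candidate with its top-k (k = beam_width) next tokens
            ranked = sorted(model[seq].items(), key=lambda kv: kv[1], reverse=True)
            for token, p in ranked[:beam_width]:
                next_candidates.append((seq + (token,), score + math.log(p)))
        # Prune the whole pool back down to the best-scoring beam_width sequences
        candidates = sorted(next_candidates, key=lambda c: c[1], reverse=True)[:beam_width]
        # Stop once every surviving candidate has emitted the end token
        if all(seq[-1] == "<end>" for seq, _ in candidates):
            break
    return candidates


for width in (1, 2):
    best_seq, best_score = beam_search(TOY_MODEL, width)[0]
    print(f"beam_width={width}: {' '.join(best_seq)}  p={math.exp(best_score):.3f}")
```

With `beam_width=2` the search keeps “cause” alive long enough to recover “cause and effect”, and with `beam_width=1` it returns exactly what greedy decoding would (here, “course”).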

Now, what does temperature have to do with all of this? As I mentioned above, this parameter doesn’t really inject randomness into the generated text sequence, but it does modify the predictability of the output sequences. Borrowing from information theory: temperature can increase or decrease the entropy associated with a token prediction.

The softmax activation function is commonly used to convert the raw outputs (i.e., logits) of a model’s prediction (including an LLM’s) into a probability distribution (I walked through this a little here). This function is defined as follows, given a vector Z with n elements:
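For reference, this is the standard form of softmax for the i-th element of Z:

$$
\operatorname{softmax}(Z)_i = \frac{e^{Z_i}}{\sum_{j=1}^{n} e^{Z_j}}, \qquad i = 1, \dots, n
$$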

This function emits a vector (or tensor) of probabilities, which sum to 1.0 and can be used to clearly assess the model’s confidence in a class prediction in a human-interpretable way.

A “temperature” scaling parameter T can be introduced, which scales the logit values prior to the application of softmax.
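Written out, dividing every logit by T before the softmax gives:

$$
\operatorname{softmax}(Z / T)_i = \frac{e^{Z_i / T}}{\sum_{j=1}^{n} e^{Z_j / T}}, \qquad T > 0
$$

T = 1.0 recovers the standard softmax. As T approaches 0, the distribution concentrates on the largest logit (approaching a one-hot argmax when that maximum is unique); as T grows, it approaches the uniform distribution, 1/n for every class.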

Applying T > 1.0 scales down the logit values, which mutes the largest differences between the probabilities of the various classes (it increases entropy within the model’s predictions).

Using a temperature of T < 1.0 has the opposite effect: it magnifies the differences, meaning the most confident predictions stand out even more compared to the alternatives. This reduces the entropy within the model’s predictions.

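In code, it looks like this:

```python
import torch

# logits_tensor holds the model's raw outputs; temperature is a positive float
scaled_logits = logits_tensor / temperature
probs = torch.softmax(scaled_logits, dim=-1)
```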

Take a look at the effect over 8 possible classes, given some hand-written logit values:

The above graph was plotted using the following values:

```python
import torch

ts = [0.5, 1.0, 2.0, 4.0, 8.0]
logits = torch.tensor([3.123, 5.0, 3.234, 2.642, 2.466, 3.3532, 3.8, 2.911])
probs = [torch.softmax(logits / t, dim=-1) for t in ts]
```

The bars represent the logit values (outputs from model prediction), and the lines represent the probability distribution over these classes, with probabilities defined on the right-side label. The thick red line represents the expected distribution, with temperature T=1.0, while the other lines demonstrate the change in relative likelihood across a temperature range from 0.5 to 8.0.

You can clearly see how T=0.5 emphasizes the likelihood of the largest-magnitude logit index, while T=8.0 reduces the difference in probabilities between classes to almost nothing.

```python
>>> for p, t in zip(probs, ts):
...     print(f't={t}\nl={logits / t}\np={p}\n')
...
t=0.5
l=tensor([6.2460, 10.000, 6.4680, 5.2840, 4.9320, 6.7064, 7.6000, 5.8220])
p=tensor([0.0193, 0.8257, 0.0241, 0.0074, 0.0052, 0.0307, 0.0749, 0.0127])

t=1.0
l=tensor([3.1230, 5.0000, 3.2340, 2.6420, 2.4660, 3.3532, 3.8000, 2.9110])
p=tensor([0.0723, 0.4727, 0.0808, 0.0447, 0.0375, 0.0911, 0.1424, 0.0585])

t=2.0
l=tensor([1.5615, 2.5000, 1.6170, 1.3210, 1.2330, 1.6766, 1.9000, 1.4555])
p=tensor([0.1048, 0.2678, 0.1108, 0.0824, 0.0754, 0.1176, 0.1470, 0.0942])

t=4.0
l=tensor([0.7807, 1.2500, 0.8085, 0.6605, 0.6165, 0.8383, 0.9500, 0.7278])
p=tensor([0.1169, 0.1869, 0.1202, 0.1037, 0.0992, 0.1238, 0.1385, 0.1109])

t=8.0
l=tensor([0.3904, 0.6250, 0.4042, 0.3302, 0.3083, 0.4191, 0.4750, 0.3639])
p=tensor([0.1215, 0.1536, 0.1232, 0.1144, 0.1119, 0.1250, 0.1322, 0.1183])
```
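The entropy claim can be checked directly on the same hand-written logits. This sketch uses plain Python rather than torch so it runs anywhere; `softmax` and `entropy` are small illustrative helpers, not library functions. Entropy (in nats) rises steadily with T and stays below the uniform maximum of ln 8, while the ranking of the eight classes is identical at every temperature.

```python
import math

# The same hand-written logits and temperatures used above
logits = [3.123, 5.0, 3.234, 2.642, 2.466, 3.3532, 3.8, 2.911]
temperatures = [0.5, 1.0, 2.0, 4.0, 8.0]


def softmax(values, temperature=1.0):
    """Temperature-scaled softmax (illustrative helper)."""
    scaled = [v / temperature for v in values]
    m = max(scaled)  # subtract the max before exponentiating, for stability
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats."""
    return -sum(p * math.log(p) for p in probs if p > 0)


for t in temperatures:
    probs = softmax(logits, t)
    ranking = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    print(f"T={t}: entropy={entropy(probs):.3f} nats "
          f"(max {math.log(len(probs)):.3f}), ranking={ranking}")
```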

Now, this doesn’t change the relative ordering of any two classes (numerical stability issues aside), so how does it have any practical effect in sequence generation?

The answer lies back in the mechanics of beam search. A temperature value greater than 1.0 makes it less likely that a high-scoring individual token will outweigh a series of slightly-less-likely tokens, which together result in a better-scoring output.

```python
>>> sum([0.9, 0.3, 0.3, 0.3])  # raw probabilities
1.8  # dominated by first token
>>> sum([0.8, 0.4, 0.4, 0.4])  # temperature-scaled probabilities
2.0  # more likely overall outcome
```
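Why can temperature change the outcome at all, if it never reorders the tokens within a single distribution? Because beam search compares the cumulative scores of sequences whose tokens came from different distributions, and temperature reshapes each distribution differently. The sketch below uses two hypothetical two-token sequences with made-up logits: in A, each token narrowly beats a single alternative; in B, each token clearly beats nine weaker ones. `token_log_prob` and `score` are illustrative helper names. B scores higher at T=1.0, while A scores higher at T=4.0.

```python
import math


def token_log_prob(logits, index, temperature):
    """Log-probability of logits[index] under a temperature-scaled softmax."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_normalizer = m + math.log(sum(math.exp(z - m) for z in scaled))
    return scaled[index] - log_normalizer


def score(sequence, temperature):
    """Cumulative log-probability, summed the way beam search scores a candidate."""
    return sum(token_log_prob(logits, index, temperature) for logits, index in sequence)


# Hypothetical candidates: each step is (logits at that step, index of the chosen token)
seq_a = [([1.0, 0.0], 0), ([1.0, 0.0], 0)]                # narrow wins over 1 rival
seq_b = [([5.0] + [0.0] * 9, 0), ([5.0] + [0.0] * 9, 0)]  # clear wins over 9 rivals

for t in (1.0, 4.0):
    a, b = score(seq_a, t), score(seq_b, t)
    print(f"T={t}: A={a:.3f}  B={b:.3f}  -> {'A' if a > b else 'B'} ranks first")
```

Within each individual step the chosen token stays the top choice at both temperatures; only the comparison between the two sequences flips.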

Beam search implementations typically work with the log-probabilities of the softmax probabilities, which is common in the ML domain, among many others. The reasons include:

- The probabilities in use are often vanishingly small; using log probs improves numerical stability

- We can compute a cumulative probability of outcomes via the addition of log probs rather than the multiplication of raw probabilities, which is slightly computationally faster as well as more numerically stable. Recall that log(p(x) * p(y)) == log(p(x)) + log(p(y))

- Optimizers, such as gradient descent, are simpler when working with log probs, which makes derivative calculations more straightforward, and loss functions like cross-entropy loss already involve logarithmic calculations
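A quick illustration of the first two points, using made-up per-token probabilities (200 tokens, each with probability 0.001): multiplying raw probabilities underflows a 64-bit float to exactly 0.0, while summing log-probabilities stays finite, and the log of a product matches the sum of the logs.

```python
import math

# Made-up per-token probabilities for a 200-token sequence
token_probs = [0.001] * 200

# Multiplying raw probabilities: 0.001**200 = 1e-600, far below the smallest
# positive float64 (about 5e-324), so the product underflows to 0.0
product = math.prod(token_probs)

# Summing log-probabilities stays comfortably representable
log_sum = sum(math.log(p) for p in token_probs)

print(product)  # 0.0
print(log_sum)  # roughly -1381.55

# The identity behind this: log(p(x) * p(y)) == log(p(x)) + log(p(y))
px, py = 0.2, 0.5
print(math.isclose(math.log(px * py), math.log(px) + math.log(py)))  # True
```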

This also means that the log probs we’re using as scores are negative real numbers. Since softmax produces a probability distribution that sums to 1.0, any class probability is ≤ 1.0, so its logarithm is ≤ 0 (strictly negative unless the probability is exactly 1.0). This is slightly annoying, but it’s consistent with the property that higher-valued scores are better, while greatly negative scores reflect extremely unlikely outcomes:

```python
>>> import math
>>> math.log(3)
1.0986122886681098
>>> math.log(0.99)
-0.01005033585350145
>>> math.log(0.98)
-0.020202707317519466
>>> math.log(0.0001)
-9.210340371976182
>>> math.log(0.000000000000000001)
-41.44653167389282
```

Here’s most of the example code, heavily annotated, also available on Github. Definitions for GeneratedSequence and ScoredToken can be found here; these are mostly simple wrappers for tokens and scores.

```python
from copy import deepcopy

import torch
import tqdm

# GeneratedSequence and ScoredToken come from the example repo linked above.
# The function signature is an assumption; the original excerpt shows only the body.
def beam_search(model, tokenizer, src_input_ids, start_token_id, end_token_id,
                beam_width, temperature, max_length, device):
    # The initial candidate sequence is simply the start token ID with
    # a sequence score of 0
    candidate_sequences = [
        GeneratedSequence(tokenizer, start_token_id, end_token_id, 0.0)
    ]

    for _ in tqdm.tqdm(range(max_length)):
        # Temporary list to store candidates for the next generation step
        next_step_candidates = []

        # Iterate through all candidate sequences; for each, generate the next
        # most likely tokens and add them to the next-step sequence of candidates
        for candidate in candidate_sequences:
            # Skip candidate sequences which have included the end-of-sequence token
            if not candidate.has_ended():
                # Build a tensor out of the candidate IDs; add a single batch dimension
                gen_seq = torch.tensor(candidate.ids(), device=device).unsqueeze(0)

                # Predict next token
                output = model(input_ids=src_input_ids, decoder_input_ids=gen_seq)

                # Extract logits from output
                logits = output.logits[:, -1, :]

                # Scale logits using temperature value
                scaled_logits = logits / temperature

                # Construct probability distribution against scaled
                # logits through softmax activation function
                probs = torch.softmax(scaled_logits, dim=-1)

                # Select top k (beam_width) probabilities and IDs from the distribution
                top_probs, top_ids = probs.topk(beam_width)

                # For each of the top-k generated tokens, append to this
                # candidate sequence, update its score, and append to the list of
                # next step candidates
                for j in range(beam_width):
                    # the new token ID
                    next_token_id = top_ids[:, j].item()

                    # log-prob of the above token
                    next_score = torch.log(top_probs[:, j]).item()

                    new_seq = deepcopy(candidate)

                    # Adds the new token to the end of this sequence, and updates its
                    # raw and normalized scores. Scores are normalized by sequence token
                    # length, to avoid penalizing longer sequences
                    new_seq.append(ScoredToken(next_token_id, next_score))

                    # Append the updated sequence to the next candidate sequence set
                    next_step_candidates.append(new_seq)
            else:
                # Append the candidate sequence as-is to the next-step candidates
                # if it already contains an end-of-sequence token
                next_step_candidates.append(candidate)

        # Sort the next-step candidates by their score, select the top-k
        # (beam_width) scoring sequences and make them the new
        # candidate_sequences list
        next_step_candidates.sort()
        candidate_sequences = list(reversed(next_step_candidates))[:beam_width]

        # Break if all sequences in the heap end with the eos_token_id
        if all(seq.has_ended() for seq in candidate_sequences):
            break

    return candidate_sequences
```
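The code above depends on the GeneratedSequence and ScoredToken classes from the linked repository. If you want to experiment without it, a hypothetical minimal stand-in might look like the following. This is not the repository’s code: the constructor arguments mirror how the classes are called above, but sorting by a length-normalized score (mean log-prob per generated token) is an assumption based on the comment in the loop.

```python
from dataclasses import dataclass


# Hypothetical stand-ins, NOT the definitions from the linked repository.
@dataclass
class ScoredToken:
    token_id: int
    score: float  # log-probability of this token


class GeneratedSequence:
    def __init__(self, tokenizer, start_token_id, end_token_id, score):
        self.tokenizer = tokenizer  # kept only to mirror the call above; unused here
        self.end_token_id = end_token_id
        self.tokens = [ScoredToken(start_token_id, score)]
        self.score = score  # cumulative (raw) log-probability
        self.normalized_score = score

    def append(self, token):
        self.tokens.append(token)
        self.score += token.score
        # Assumption: normalize by the number of generated tokens (excluding start)
        self.normalized_score = self.score / (len(self.tokens) - 1)

    def ids(self):
        return [t.token_id for t in self.tokens]

    def has_ended(self):
        return self.tokens[-1].token_id == self.end_token_id

    def __iter__(self):
        # Lets Counter(t.token_id for t in candidate) work
        return iter(self.tokens)

    def __lt__(self, other):
        # list.sort() only needs __lt__; ascending order leaves the best sequence last
        return self.normalized_score < other.normalized_score
```

With these in place, `next_step_candidates.sort()` followed by `reversed(...)` puts the highest-scoring candidate first, as the loop expects.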

In the next section, you’ll find some results of running this code on a few different datasets with different parameters.

As I mentioned, I’ve published some example code to Github, which uses the t5-small transformer model from Hugging Face and its corresponding T5Tokenizer. The examples below were run through the T5 model against the quick brown fox etc Wikipedia page, sanitized through an extractor script.

Greedy Search

Running --greedy mode:

$ python3 src/main.py --greedy --input ./wiki-fox.txt --prompt "summarize the following document"

greedy search generation results:

[

the phrase is used in the annual Zaner-Bloser National Handwriting Competition.

it is used for typing typewriters and keyboards, typing fonts. the phrase

is used in the earliest known use of the phrase.

]

This output summarizes part of the article well, but overall it isn’t great. It’s missing initial context, repeats itself, and doesn’t state what the phrase actually is.

Beam Search

Let’s try again, this time using beam search for output generation, with an initial beam width of 4 and the default temperature of 1.0:

$ python3 src/main.py --beam 4 --input ./wiki-fox.txt --prompt "summarize the following document"

[lots of omitted output]

beam search (k=4, t=1.0) generation results:

[

"the quick brown fox jumps over the lazy dog" is an English-language pangram.

the phrase is commonly used for touch-typing practice, typing typewriters and

keyboards. it is used in the annual Zaner-Bloser National

Handwriting Competition.

]

This output is far superior to the greedy output above, and the most remarkable thing is that we’re using the same model, prompt and input context to generate it.

There are still a couple of errors in it; for example “typing typewriters”, and perhaps “keyboards” is ambiguous.

The beam search code I shared will emit its decision-making progress as it works through the text generation (full output here). For example, the first two steps:

starting beam search | k = 4 bos = 0 eos = 1 temp = 1.0 beam_width = 4

0.0: [], next token probabilities:

p: 0.30537632: ▁the

p: 0.21197866: ▁"

p: 0.13339639: ▁phrase

p: 0.13240208: ▁

next step candidates:

-1.18621039: [the]

-1.55126965: ["]

-2.01443028: [phrase]

-2.02191186: []

-1.1862103939056396: [the], next token probabilities:

p: 0.61397356: ▁phrase

p: 0.08461960: ▁

p: 0.06939770: ▁"

p: 0.04978605: ▁term

-1.5512696504592896: ["], next token probabilities:

p: 0.71881396: the

p: 0.08922042: qui

p: 0.05990228: The

p: 0.03147057: a

-2.014430284500122: [phrase], next token probabilities:

p: 0.27810165: ▁used

p: 0.26313403: ▁is

p: 0.10535818: ▁was

p: 0.03361856: ▁

-2.021911859512329: [], next token probabilities:

p: 0.72647911: earliest

p: 0.19509122: a

p: 0.02678721: '

p: 0.00308457: s

next step candidates:

-1.67401379: [the phrase]

-1.88142237: ["the]

-2.34145740: [earliest]

-3.29419887: [phrase used]

-3.34952199: [phrase is]

-3.65579963: [the]

-3.65619993: [a]

Now if we look at the set of candidates in the last step:

next step candidates:

-15.39409454: ["the quick brown fox jumps over the lazy dog" is an English-language pangram. the phrase is commonly used for touch-typing practice, typing typewriters and keyboards. it is used in the annual Zaner-Bloser National Handwriting Competition.]

-16.06867695: ["the quick brown fox jumps over the lazy dog" is an English-language pangram. the phrase is commonly used for touch-typing practice, testing typewriters and keyboards. it is used in the annual Zaner-Bloser National Handwriting Competition.]

-16.10376084: ["the quick brown fox jumps over the lazy dog" is an English-language pangram. the phrase is commonly used for touch-typing practice, typing typewriters and keyboards. it is used in the annual Zaner-Bloser national handwriting competition.]

You can see that the top-scoring sentence containing “typing typewriters” outscored the sentence containing “testing typewriters” by -15.39 to -16.06, which, if we exponentiate with Euler’s number (e) to convert back into cumulative probabilities, is a probabilistic difference of just about 0.0000101%. There must be a way to overcome this tiny difference!
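The conversion is just exponentiation of the cumulative log-prob scores from the final candidate list above. Because both probabilities are tiny, the absolute gap is tiny too; the same gap can also be read as a ratio, exp(-15.394 - (-16.069)), which works out to just under 2.

```python
import math

# Final cumulative log-prob scores of the top two candidates in the log above
typing_score = -15.39409454   # "... typing typewriters and keyboards ..."
testing_score = -16.06867695  # "... testing typewriters and keyboards ..."

# Exponentiating (base e) converts a log-prob score back into a probability
p_typing = math.exp(typing_score)
p_testing = math.exp(testing_score)

difference = p_typing - p_testing
ratio = p_typing / p_testing  # equals math.exp(typing_score - testing_score)

print(f"p(typing)  = {p_typing:.4e}")
print(f"p(testing) = {p_testing:.4e}")
print(f"difference = {difference:.4e} ({difference * 100:.7f}%)")
print(f"ratio      = {ratio:.2f}")
```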

Beam Search with Temperature

Let’s see if this summarization could be improved by applying a temperature value to smooth over some of the log probability scores. Again, everything else (the model and the input context) will be identical to the examples above.

$ python3 src/main.py --beam 4 --temperature 4.0 --input ./wiki-fox.txt --prompt "summarize the following document"

[lots of omitted output]

beam search (k=4, t=4.0) generation results:

[

"the quick brown fox jumps over the lazy dog" is an English-language pangram.

it is commonly used for touch-typing practice, testing typewriters and

computer keyboards. earliest known use of the phrase started with "A"

]

This output correctly emitted “testing typewriters” rather than “typing typewriters” and specified “computer keyboards”. It also, interestingly, chose the historical fact that this phrase originally started with “A quick brown fox” over the Zaner-Bloser competition fact above. The full output is also available here.

Whether or not this output is better is a subjective matter of opinion. It is different in a few nuanced ways, and the usage and setting of temperature values will vary by application. I think it’s better, and again, it’s interesting because no model weights, model architecture, or prompt was modified to obtain this output.

Buffalo buffalo Buffalo buffalo buffalo buffalo Buffalo buffalo and Scoring Penalties

Let’s see if beam search, with the temperature settings used above, works properly for my favorite English-language linguistic construct: Buffalo buffalo Buffalo buffalo buffalo buffalo Buffalo buffalo.

$ python3 src/main.py --beam 4 --temperature 4.0 --input ./wiki-buffalo.txt --prompt "summarize the linguistic construct in the following text"

[lots of omitted outputs]

beam search (k=4, t=4.0) generation results:

[

"Buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo

buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo

buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo

buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo

buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo

buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo

buffalo buffalo buffalo buffalo buffalo buffalo

]

Utter disaster, though a predictable one. Given the complexity of this input document, we need additional techniques to handle contexts like this. Interestingly, the final iteration candidates didn’t include a single rational sequence:

next step candidates:

-361.66266489: ["Buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo]

-362.13168168: ["buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo]

-362.22955942: ["Buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo.]

-362.60354519: ["Buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo]

-363.03604889: ["Buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo,]

-363.07167459: ["buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo]

-363.14155817: ["Buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo Buffalo]

-363.28574753: ["Buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo. the]

-363.35553551: ["Buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo buffalo a]

[more of the same]

We can apply a token-specific score decay (more like a penalty) to repeated tokens, which makes them appear less attractive (or, more accurately, less likely solutions) to the beam search algorithm:

```python
import math
from collections import Counter
from copy import deepcopy

import torch

# Fragment from inside the beam search loop above: candidate, top_ids, top_probs,
# beam_width and decay_repeated come from that surrounding context.
# Count how often each token ID already appears in this candidate sequence
token_counts = Counter(t.token_id for t in candidate)

# For each of the top-k generated tokens, append to this candidate sequence,
# update its score, and append to the list of next step candidates
for j in range(beam_width):
    next_token_id = top_ids[:, j].item()  # the new token ID
    next_score = torch.log(top_probs[:, j]).item()  # log-prob of the above token

    # Optionally apply a token-specific score decay to repeated tokens
    if decay_repeated and next_token_id in token_counts:
        count = token_counts[next_token_id]
        decay = 1 + math.log(count + 1)
        # next_score is a negative log-prob, so multiplying by decay > 1 makes it
        # more negative: a penalty that grows with the number of repeats
        next_score *= decay

    new_seq = deepcopy(candidate)
    new_seq.append(ScoredToken(next_token_id, next_score))
```

Which results in the following, more reasonable output:

$ python3 src/main.py --decay --beam 4 --temperature 4.0 --input ./wiki-buffalo.txt --prompt "summarize the linguistic construct in the following text"

[lots of omitted outputs]

beam search (k=4, t=4.0) generation results:

[

"Buffalo buffalo" is grammatically correct sentence in English, often

presented as an example of how homophonies can be used to create complicated

language constructs through unpunctuated terms and sentences. it uses three

distinct meanings:An attributive noun (acting

]

You can see where the scoring penalty pulled the infinite buffalos sequence below the sequence that produced the output above:

next step candidates:

-36.85023594: ["Buffalo buffalo Buffalo]

-37.23766947: ["Buffalo buffalo"]

-37.31325269: ["buffalo buffalo Buffalo]

-37.45994210: ["buffalo buffalo"]

-37.61866760: ["Buffalo buffalo,"]

-37.73602080: ["buffalo" is]

[omitted]

-36.85023593902588: ["Buffalo buffalo Buffalo], next token probabilities:

p: 0.00728357: ▁buffalo

p: 0.00166316: ▁Buffalo

p: 0.00089072: "

p: 0.00066582: ,"

['▁buffalo'] count: 1 decay: 1.6931471805599454, score: -4.922133922576904, next: -8.33389717334955

['▁Buffalo'] count: 1 decay: 1.6931471805599454, score: -6.399034023284912, next: -10.834506414832013

-37.237669467926025: ["Buffalo buffalo"], next token probabilities:

p: 0.00167652: ▁is

p: 0.00076465: ▁was

p: 0.00072227: ▁

p: 0.00064367: ▁used

-37.313252687454224: ["buffalo buffalo Buffalo], next token probabilities:

p: 0.00740433: ▁buffalo

p: 0.00160758: ▁Buffalo

p: 0.00091487: "

p: 0.00066765: ,"

['▁buffalo'] count: 1 decay: 1.6931471805599454, score: -4.905689716339111, next: -8.306054711921485

['▁Buffalo'] count: 1 decay: 1.6931471805599454, score: -6.433023929595947, next: -10.892056328870039

-37.45994210243225: ["buffalo buffalo"], next token probabilities:

p: 0.00168198: ▁is

p: 0.00077098: ▁was

p: 0.00072504: ▁

p: 0.00065945: ▁used

next step candidates:

-43.62870741: ["Buffalo buffalo" is]

-43.84772754: ["buffalo buffalo" is]

-43.87371445: ["Buffalo buffalo Buffalo"]

-44.16472149: ["Buffalo buffalo Buffalo,"]

-44.30998302: ["buffalo buffalo Buffalo"]
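The penalty multiplier itself is easy to tabulate. This sketch, with a hypothetical helper name `repeat_decay`, evaluates 1 + ln(count + 1) for a few repeat counts and reproduces one line of the log above: a ‘▁buffalo’ token already seen once, with a raw log-prob of about -4.922, ends up at about -8.334 once the 1.693 multiplier is applied. Because log-probs are negative, multiplying by any value greater than 1 always pushes the score further down.

```python
import math


def repeat_decay(count):
    """Multiplier from the snippet above: 1 + ln(count + 1) (hypothetical helper name)."""
    return 1 + math.log(count + 1)


# How the multiplier grows as a token keeps repeating
for count in range(1, 5):
    print(f"count={count}: decay={repeat_decay(count):.4f}")

# Reproduce one line of the log: '▁buffalo' already seen once
raw_score = -4.922133922576904
penalized = raw_score * repeat_decay(1)
print(f"{raw_score:.4f} -> {penalized:.4f}")
```

Because the multiplier grows only logarithmically, each extra repeat adds a smaller penalty than the last; the penalty is also proportional to how unlikely the token already was.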

So it seems we need more hacks (techniques) like this to handle specific kinds of edge cases.

This turned out to be much longer than I was planning to write; I hope you have a few takeaways. Aside from simply understanding how beam search and temperature work, I think the most interesting illustration above is how, even given the incredible complexity and capabilities of LLMs, implementation choices affecting how their predictions are used have a huge effect on the quality of their output. The application of simple undergraduate Computer Science concepts to sequence construction can result in dramatically different LLM outputs, even with all other input being identical.

When we encounter hallucinations, errors, or other quirks when working with LLMs, it’s entirely possible (and perhaps likely) that these are quirks of the output sequence construction algorithms, rather than any “fault” of the trained model itself. To the user of an API, it’s almost impossible to tell the difference.

I think this is an interesting example of the complexity of the machinery around LLMs that makes them such powerful tools and products today.