Production-grade Mamba-style model offers unparalleled throughput, only model in its size class that fits 140K context on a single GPU

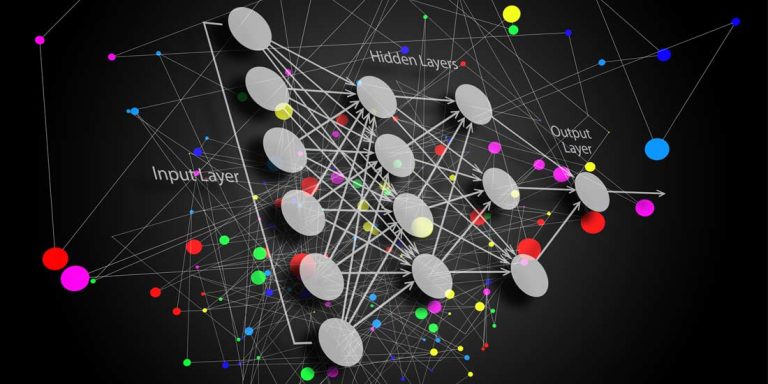

AI21, a leader in AI systems for the enterprise, unveiled Jamba, a production-grade Mamba-style model that integrates Mamba Structured State Space model (SSM) technology with elements of the traditional Transformer architecture. Jamba marks a significant advancement in large language model (LLM) development, offering unparalleled efficiency, throughput, and performance.

Jamba revolutionizes the landscape of LLMs by addressing the limitations of pure SSM models and traditional Transformer architectures. With a context window of 256K, Jamba outperforms other state-of-the-art models in its size class across a range of benchmarks, setting a new standard for efficiency and performance.

Jamba features a hybrid architecture that integrates Transformer, Mamba, and mixture-of-experts (MoE) layers, optimizing memory, throughput, and performance simultaneously. Jamba also surpasses Transformer-based models of comparable size by delivering three times the throughput on long contexts, enabling faster processing of large-scale language tasks that solve core enterprise challenges.

Scalability is a key feature of Jamba: it fits contexts of up to 140K on a single GPU, which makes deployment more accessible and encourages experimentation within the AI community.
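One reason a hybrid design can fit longer contexts in GPU memory is that attention layers keep a key-value cache that grows with context length, while SSM layers keep a fixed-size state. The back-of-the-envelope sketch below illustrates that effect. Every number in it (layer count, head count, head size, and the one-in-eight attention ratio) is a hypothetical assumption chosen for illustration, not Jamba's published configuration.

```python
def kv_cache_bytes(attention_layers, kv_heads, head_dim, context_tokens,
                   bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence.

    Only attention layers contribute; SSM layers hold a fixed-size state
    that does not grow with the number of context tokens.
    """
    # Factor of 2: one cached tensor for keys and one for values per layer.
    return (2 * attention_layers * kv_heads * head_dim
            * context_tokens * bytes_per_value)


# Hypothetical model shape (assumption, not taken from the article).
TOTAL_LAYERS = 32
KV_HEADS = 8
HEAD_DIM = 128
CONTEXT_TOKENS = 140_000

# Every layer is an attention layer.
pure_transformer = kv_cache_bytes(TOTAL_LAYERS, KV_HEADS, HEAD_DIM, CONTEXT_TOKENS)

# Assumed hybrid: only 1 layer in 8 is attention, the rest are SSM layers.
hybrid = kv_cache_bytes(TOTAL_LAYERS // 8, KV_HEADS, HEAD_DIM, CONTEXT_TOKENS)

print(f"pure Transformer KV cache: {pure_transformer / 2**30:.1f} GiB")
print(f"hybrid KV cache:           {hybrid / 2**30:.1f} GiB")
```

Under these assumptions the cache shrinks in direct proportion to the share of attention layers, which is the kind of saving that leaves room for a longer context on a single device. Model weights and activations are not counted here.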

Jamba’s release marks two significant milestones in LLM innovation: successfully incorporating Mamba alongside the Transformer architecture, and advancing the hybrid SSM-Transformer model, delivering a smaller footprint and faster throughput on long context.

“We’re excited to introduce Jamba, a groundbreaking hybrid architecture that combines the best of Mamba and Transformer technologies,” said Or Dagan, VP of Product at AI21. “This allows Jamba to offer unprecedented efficiency, throughput, and scalability, empowering developers and businesses to deploy critical use cases in production at record speed in the most cost-effective way.”

Jamba’s release with open weights under the Apache 2.0 license encourages collaboration and innovation in the open source community and invites further discoveries from it. Jamba’s integration with the NVIDIA API catalog as a NIM inference microservice also streamlines its accessibility for enterprise applications, ensuring seamless deployment and integration.

To learn more about Jamba, read the blog post available on AI21’s website. The Jamba research paper is also available online.
