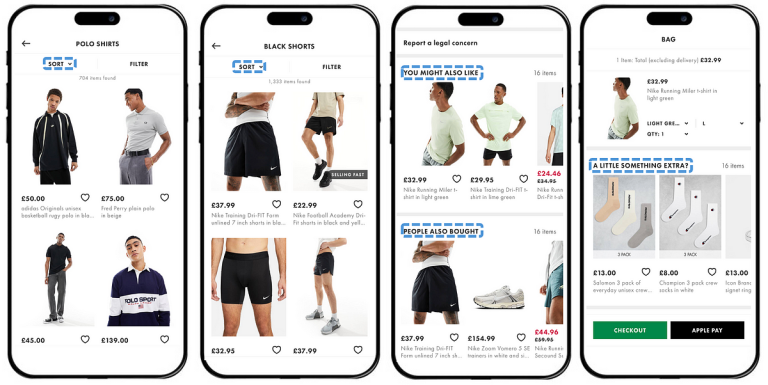

With a catalogue of almost 50,000 products at any given time, and hundreds of new products introduced every week, our personalisation system is key to surfacing the right product, to the right customer, at the right time. We leverage machine learning to help our customers find their dream product by providing high-quality personalisation through multiple touch points during the customer's journey (see figure below).

Our goal is to rank products optimally to increase customer engagement, which we measure through clicks and purchases. We do this by combining vast amounts of customer interaction data (e.g. purchases, saved for later, added to bag) with deep learning to produce a personalised ranking of products, i.e. recommendations, for each customer¹.
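The post does not describe the model internals at this point, but one common way to turn a learned model into a per-customer ranking is to score every product against a customer representation and sort by score. The sketch below assumes customers and products are already represented as embedding vectors and scored with a dot product; the function names, product ids and vectors are all hypothetical and are not ASOS's implementation.

```python
from typing import Dict, List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def rank_products(
    customer_vec: Sequence[float],
    product_vecs: Dict[str, Sequence[float]],
    k: int,
) -> List[str]:
    """Return the ids of the k products with the highest affinity score.

    Hypothetical scoring: a plain dot product between a customer embedding
    and each product embedding. In a real system these vectors would be
    learned from interaction data such as purchases and saves.
    """
    scores = {pid: dot(customer_vec, vec) for pid, vec in product_vecs.items()}
    # Sort by descending score; ties are broken by product id for determinism.
    ranked = sorted(scores, key=lambda pid: (-scores[pid], pid))
    return ranked[:k]


# Toy example with hypothetical 2-d embeddings for three products.
customer = [1.0, 0.5]
products = {
    "heels": [0.9, 0.1],
    "trainers": [0.2, 0.8],
    "blazer": [0.6, 0.6],
}
print(rank_products(customer, products, k=2))  # ['heels', 'blazer']
```

Scoring with a dot product keeps ranking cheap at serving time, because the customer vector can be computed once and compared against every product vector.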

While our current recommender achieves good online results and scales well, serving 5 billion requests a day across a variety of touch points², its performance could be improved by extracting more meaning from customer-product interactions.

In this post we explain how we are levelling up our fashion recommender by using cutting-edge transformer technology to better capture a customer's style and infer the relative importance of interactions over time.

Transformers, the T in ChatGPT, are a model architecture that has revolutionised many areas of machine learning, from natural language processing (NLP) to computer vision. Although transformers were originally designed to solve natural language tasks, recent research has extended their use to recommender systems. Transformer models let us better capture a customer's style through a mechanism called self-attention, and infer the relative importance of a customer's interactions over time using positional awareness.

Self-attention enables a machine learning model to build context-aware representations of the inputs within a sequence. When processing each input, the model weighs the importance of every other input in the sequence relative to it, and so builds a richer understanding of each input and, in turn, of the whole sequence. For example, a pair of heels could be interpreted differently when grouped with casual clothes than when grouped with more formal wear. This additional context allows the model to better interpret a product and capture the essence of a customer's style.
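To make the heels example concrete, here is a minimal sketch of single-head scaled dot-product self-attention (the standard formulation from the original Transformer paper) in plain Python. The 2-d "formal/casual" product embeddings and the identity projection matrices are hypothetical stand-ins for learned parameters, chosen only so the effect of context is easy to read; this is not the architecture of our production model.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x m) matrix by an (m x p) matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of attention scores."""
    top = max(row)
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention.

    Each row of x is one item in the sequence (e.g. one product embedding).
    Returns (outputs, weights): outputs[i] is the context-aware representation
    of item i, and weights[i][j] is how much item i attends to item j.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # (n x n) query-key similarities
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Hypothetical 2-d embeddings: dimension 0 ~ "formal", dimension 1 ~ "casual".
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projections
heels = [1.0, 1.0]
casual_outfit = [heels, [0.0, 2.0], [0.0, 2.0]]  # heels + hoodie + jeans
formal_outfit = [heels, [2.0, 0.0], [2.0, 0.0]]  # heels + blazer + trousers

casual_out, casual_attn = self_attention(casual_outfit, identity, identity, identity)
formal_out, _ = self_attention(formal_outfit, identity, identity, identity)
print(casual_out[0])  # heels in a casual context, roughly [0.33, 1.67]
print(formal_out[0])  # heels in a formal context, roughly [1.67, 0.33]
```

The same heels vector comes out leaning towards the "casual" dimension in one sequence and the "formal" dimension in the other. In a trained model `w_q`, `w_k` and `w_v` are learned, so the attention weights would not be uniform as they happen to be in this toy case.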

Positional awareness allows the model to interpret the order of a customer's past product interactions and infer the relative importance of a product from its position in the sequence; for example, a model may give more weight to a product purchased yesterday than to a product viewed three months ago. This enables the model to serve our customers more relevant recommendations as their context switches between browsing categories and as their style evolves over time.
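The post does not say how positions are encoded. Below is a minimal sketch of one common option, the fixed sinusoidal encoding from the original Transformer paper; many sequential recommenders instead learn a position-embedding table. The product vector and sequence length are hypothetical.

```python
import math
from typing import List


def sinusoidal_positions(num_positions: int, dim: int) -> List[List[float]]:
    """Fixed sinusoidal position encodings (Vaswani et al., 2017).

    PE[pos][2i]   = sin(pos / 10000 ** (2i / dim))
    PE[pos][2i+1] = cos(pos / 10000 ** (2i / dim))
    """
    table = []
    for pos in range(num_positions):
        row = []
        for j in range(dim):
            angle = pos / (10000 ** ((j - j % 2) / dim))  # j - j % 2 == 2i
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positions(embeddings: List[List[float]]) -> List[List[float]]:
    """Add a position-dependent vector to each item embedding in the sequence."""
    pe = sinusoidal_positions(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]


# Hypothetical history: the same product embedding seen at four positions,
# ordered oldest to newest.
product = [0.5, 0.5, 0.5, 0.5]
encoded = add_positions([product] * 4)
print(encoded[0])  # [0.5, 1.5, 0.5, 1.5]
print(encoded[3] == encoded[0])  # False
```

Without a positional signal, self-attention treats the sequence as an unordered set. Adding a position-dependent vector gives the same product a different input at each position, which is what lets the model learn, for instance, to weight recent interactions more heavily.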