Problem statement:

In the rapidly evolving field of machine learning, achieving good accuracy and generalization requires algorithms that efficiently optimize complex models. The fundamental optimization method known as gradient descent is frequently used for this. Implementing Gradient Descent, however, is not without its difficulties. These include choosing the right learning rate, avoiding local minima, and preventing overfitting. This case study examines these issues in a practical setting and looks at how different Gradient Descent setups affect model performance. By highlighting key issues and potential solutions, we hope to give practitioners insights into how to improve their machine learning workflows.

Aim:

The purpose of this case study is to examine how Gradient Descent can optimize machine learning models. By exploring various configurations and techniques, we intend to identify best practices for improving accuracy and efficiency. The goal is to provide insights that help practitioners improve model performance using Gradient Descent.

Objectives:

· Evaluate Gradient Descent Methods: Assess different Gradient Descent approaches to determine their effectiveness in optimizing machine learning models.

· Analyze Convergence and Learning Rates: Examine the impact of various learning rates on convergence speed and model accuracy.

· Identify Common Challenges: Highlight potential issues, such as local minima and overfitting, and suggest strategies to mitigate them.

· Propose Best Practices: Provide actionable recommendations for practitioners to improve model performance and stability using Gradient Descent.

Introduction:

Gradient Descent is a fundamental optimization algorithm used extensively in machine learning and deep learning. It forms the backbone of training for many machine learning models, from simple linear regression to complex neural networks. The algorithm works by iteratively adjusting the model parameters to minimize a loss function: at each step, every parameter is moved a small amount in the direction opposite to the gradient of the loss, which guides the model toward a minimum.
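To make the idea of iterative adjustment concrete, here is a minimal Python sketch on a one-parameter loss. The loss J(w) = (w - 3)^2, the starting point, the learning rate, and the helper name `gradient_descent_1d` are illustrative assumptions, not part of the original study. Each step moves w against the gradient 2(w - 3), so w drifts toward the minimum at w = 3.

```python
def gradient_descent_1d(grad, w0, alpha, steps):
    """Repeatedly step against the gradient, starting from w0."""
    w = w0
    for _ in range(steps):
        w = w - alpha * grad(w)  # move opposite to the slope
    return w

# Illustrative loss J(w) = (w - 3)^2, whose minimum is at w = 3.
def grad_j(w):
    return 2.0 * (w - 3.0)

w_final = gradient_descent_1d(grad_j, w0=0.0, alpha=0.1, steps=100)
print(round(w_final, 4))  # → 3.0
```

With α = 0.1, each step shrinks the distance to the minimum by a factor of 0.8, which is why 100 steps are more than enough here.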

The popularity of Gradient Descent stems from its simplicity, versatility, and scalability, but implementing it effectively requires careful consideration. Challenges such as selecting an appropriate learning rate, avoiding local minima, and managing overfitting can affect the performance and reliability of models.

In this case study, we explore the practical applications of Gradient Descent, focusing on its role in optimizing machine learning models. By analyzing real-world scenarios, we aim to uncover common issues, evaluate different approaches, and propose best practices. This study is intended to help machine learning practitioners understand the intricacies of Gradient Descent and apply it successfully to their projects.

Algorithms used:

In this case study, we used a range of algorithms and techniques to explore Gradient Descent and its variants. The key algorithms are described below:

· Batch Gradient Descent:

This algorithm computes the gradient of the loss function over the entire training dataset at every iteration. It provides a stable gradient estimate but can be computationally expensive, especially for large datasets.

· Stochastic Gradient Descent (SGD):

SGD updates the model parameters using the gradient from a single data point or a small random sample from the training set. This introduces noise into the updates, which can speed up convergence but can also cause instability.
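The variants differ only in how many training examples feed each gradient estimate. The NumPy sketch below assumes linear regression with a mean-squared-error loss and expresses this through a single hypothetical `batch_size` parameter. `None` uses the full dataset (Batch Gradient Descent), `1` uses one shuffled example per update (SGD), and values in between give Mini-Batch Gradient Descent. The function names, synthetic data, learning rate, and epoch counts are illustrative assumptions.

```python
import numpy as np

def mse_gradients(X, y, w, b):
    """Gradients of J(w, b) = mean((X @ w + b - y) ** 2) / 2 on the given rows."""
    error = X @ w + b - y
    grad_w = X.T @ error / len(y)
    grad_b = error.mean()
    return grad_w, grad_b

def train(X, y, alpha=0.1, epochs=100, batch_size=None, seed=0):
    """batch_size=None: batch GD; batch_size=1: SGD; anything in between: mini-batch GD."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    size = n if batch_size is None else batch_size
    for _ in range(epochs):
        order = rng.permutation(n)  # reshuffle the examples every epoch
        for start in range(0, n, size):
            idx = order[start:start + size]
            grad_w, grad_b = mse_gradients(X[idx], y[idx], w, b)
            w -= alpha * grad_w
            b -= alpha * grad_b
    return w, b

# Hypothetical noiseless data on the line y = 2x + 1.
data_rng = np.random.default_rng(42)
X = data_rng.uniform(-1.0, 1.0, size=(200, 1))
y = 2.0 * X[:, 0] + 1.0

for label, batch_size, epochs in [("batch", None, 1000), ("SGD", 1, 20), ("mini-batch", 32, 200)]:
    w, b = train(X, y, alpha=0.1, epochs=epochs, batch_size=batch_size)
    print(f"{label:>10}: w = {w[0]:.3f}, b = {b:.3f}")
```

Because the synthetic data lie exactly on y = 2x + 1, each run should end close to w = 2 and b = 1. What changes is the number and cost of the updates: one full-dataset update per epoch for batch GD, 200 single-example updates for SGD, and 7 updates per epoch for mini-batches of 32. The epoch counts were chosen arbitrarily so that each variant converges.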

Methodology/Pseudocode:

1. Initialize the weight w and the bias b.

2. Choose a value for the learning rate α. The learning rate determines how large a step is taken on each iteration.

If α is very small, convergence may take a very long time and become computationally expensive.

If α is too large, the updates may overshoot the minimum and fail to converge.

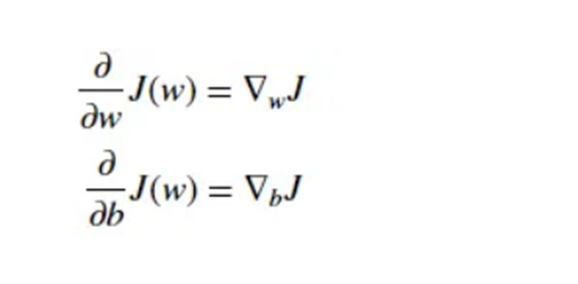

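3. On each iteration, compute the partial derivative of the cost function J(w, b) with respect to each parameter (the gradient):

$$\frac{\partial J(w, b)}{\partial w}, \qquad \frac{\partial J(w, b)}{\partial b}$$

4. The update equations are:

$$w := w - \alpha \frac{\partial J(w, b)}{\partial w}, \qquad b := b - \alpha \frac{\partial J(w, b)}{\partial b}$$

Both parameters are updated simultaneously, using gradients computed from the same (old) values of w and b. Steps 3 and 4 are repeated until the cost stops decreasing meaningfully.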
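The learning-rate trade-off described in step 2 can be reproduced on the illustrative cost J(w) = w², whose gradient is 2w. Each update therefore multiplies w by (1 − 2α). The three learning rates and the helper name `descend_quadratic` are arbitrary choices for demonstration. A very small α leaves w well away from the minimum at 0 after 50 steps, and a moderate α brings it close to 0. With α = 1.1 the factor is −1.2, so every step overshoots the minimum and |w| grows instead of shrinking.

```python
def descend_quadratic(alpha, w0=5.0, steps=50):
    """Gradient descent on the illustrative cost J(w) = w**2, whose gradient is 2w."""
    w = w0
    for _ in range(steps):
        w -= alpha * 2.0 * w  # equivalent to w *= (1 - 2 * alpha)
    return w

for alpha in (0.01, 0.1, 1.1):
    print(f"alpha = {alpha}: w after 50 steps = {descend_quadratic(alpha):.4g}")
```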

Code:
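Below is one possible Python implementation of the pseudocode, using NumPy, for single-feature linear regression with the cost J(w, b) = (1/2m) Σ (w·x_i + b − y_i)². The choice of cost, the zero initialization, the stopping tolerance `tol`, and the example data are assumptions for illustration. This is not the study's original code.

```python
import numpy as np

def cost(x, y, w, b):
    """J(w, b) = (1 / 2m) * sum((w * x + b - y) ** 2) for a single input feature."""
    m = len(x)
    return np.sum((w * x + b - y) ** 2) / (2 * m)

def gradient_descent(x, y, alpha=0.01, iterations=1000, tol=1e-12):
    # Step 1: initialize the parameters (zero is an assumed, common choice).
    w, b = 0.0, 0.0
    m = len(x)
    history = [cost(x, y, w, b)]
    for _ in range(iterations):
        error = w * x + b - y
        # Step 3: partial derivatives of J with respect to w and b.
        dj_dw = np.sum(error * x) / m
        dj_db = np.sum(error) / m
        # Step 4: simultaneous update; both gradients were computed from the old w and b.
        w -= alpha * dj_dw
        b -= alpha * dj_db
        history.append(cost(x, y, w, b))
        # Stop early once the cost has essentially stopped decreasing.
        if abs(history[-2] - history[-1]) < tol:
            break
    return w, b, history

# Hypothetical example data lying exactly on y = 3x + 2.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = 3.0 * x + 2.0
w, b, history = gradient_descent(x, y, alpha=0.05, iterations=10_000)
print(f"w = {w:.3f}, b = {b:.3f}, iterations = {len(history) - 1}, final cost = {history[-1]:.2e}")
```

For these x values, α = 0.05 is safely below the stability limit. Learning rates above roughly 0.17 make the iterations diverge, which is the overshooting case from step 2.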

Applications:

· Linear Regression

· Neural networks

· SVM
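As an illustration of the SVM entry, a linear SVM can be trained with descent updates on the regularized hinge loss J(w, b) = (λ/2)‖w‖² + (1/n) Σ max(0, 1 − y_i(w·x_i + b)), with labels y_i ∈ {−1, +1}. The hinge loss is not differentiable where the margin equals 1, so this sketch uses a subgradient in place of the gradient (subgradient descent). The λ‖w‖² term is an L2 regularizer. The function name, hyperparameters, and synthetic data are assumptions for illustration.

```python
import numpy as np

def svm_subgradient_descent(X, y, lam=0.01, alpha=0.1, iterations=500):
    """Minimize lam/2 * ||w||^2 + mean(max(0, 1 - y * (X @ w + b))) for labels y in {-1, +1}."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(iterations):
        margins = y * (X @ w + b)
        active = margins < 1  # points inside the margin or misclassified contribute to the hinge term
        grad_w = lam * w - X[active].T @ y[active] / n
        grad_b = -np.sum(y[active]) / n
        w -= alpha * grad_w
        b -= alpha * grad_b
    return w, b

# Hypothetical, linearly separable data: two Gaussian clusters labelled +1 and -1.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(2.0, 0.5, size=(50, 2)), rng.normal(-2.0, 0.5, size=(50, 2))])
y = np.concatenate([np.ones(50), -np.ones(50)])
w, b = svm_subgradient_descent(X, y)
accuracy = np.mean(np.sign(X @ w + b) == y)
print(f"w = {w.round(3)}, b = {b:.3f}, training accuracy = {accuracy:.2f}")
```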

Summary

In this case study, we explored the application of Gradient Descent in a machine learning context, focusing on its role in optimizing model performance. Gradient Descent is a fundamental optimization algorithm used to find the parameters that minimize a loss function, a step that is essential to training machine learning models. We analyzed various Gradient Descent configurations, learning rates, and techniques for addressing common challenges such as local minima and overfitting.

Key findings from the case study include:

· The critical role of the learning rate in determining the speed and stability of convergence.

· Differences in convergence behavior depending on the complexity of the loss landscape.

· The effectiveness of regularization techniques in reducing overfitting and improving generalization.

· The versatility of Gradient Descent variants, with Mini-Batch Gradient Descent emerging as a balanced choice for efficiency and stability.

These findings highlight the importance of carefully tuning Gradient Descent parameters and applying additional optimization strategies to achieve the best results.

Conclusion

Gradient Descent remains a cornerstone of machine learning optimization, offering a simple yet powerful approach to training models. This case study shows that Gradient Descent can be highly effective, but its success depends on understanding the nuances of learning rates, convergence, and regularization. By following best practices and using more advanced techniques, practitioners can overcome common challenges and achieve better accuracy and model stability.

Ultimately, this case study emphasizes that applying Gradient Descent successfully requires a balance between theoretical knowledge and practical experimentation. With the insights from this study, machine learning practitioners can make informed decisions and optimize their models effectively, contributing to the overall advancement of machine learning and deep learning technologies.
