Introduction

The need for efficient dimensionality reduction techniques has become paramount for data scientists and analysts. One such powerful method, Locality Preserving Projections (LPP), has gained significant attention for its ability to maintain the local structure of data while reducing its dimensionality. Originating from the field of manifold learning, LPP offers a nuanced approach to handling high-dimensional datasets commonly found in fields like image processing, speech recognition, and bioinformatics.

Data speaks volumes in the whispers of dimensions; listen closely with Locality Preserving Projections.

Background

Locality Preserving Projections (LPP) is a dimensionality reduction technique often used in machine learning and pattern recognition. It’s similar to other methods like Principal Component Analysis (PCA) but with a different focus. Here’s a detailed overview of LPP and how it works:

LPP can be viewed as a linear approximation of Laplacian Eigenmaps: it aims to preserve the local structure of data while reducing its dimensionality. It’s particularly effective for data that lies on a manifold within a higher-dimensional space.

How LPP Works

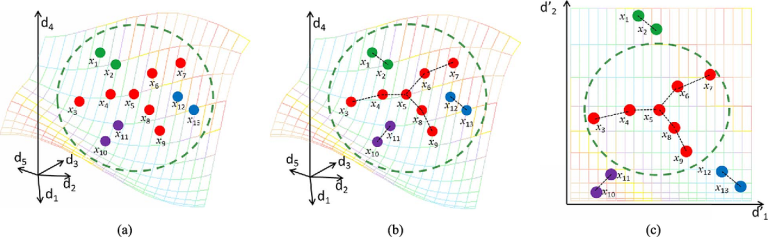

- Neighborhood Graph Construction: LPP starts by constructing a neighborhood graph where each data point is connected to its nearest neighbors. This can be based on the Euclidean distance or any other metric suitable for the data.

- Weight Matrix Creation: Once the graph is constructed, a weight matrix is created. Weights in this matrix are typically assigned using the heat kernel (an exponential function of the negative squared Euclidean distance, scaled by a bandwidth parameter) or set to 1 for connected points and 0 for unconnected points.

- Laplacian Matrix Formation: The Laplacian matrix is then formed, which is the difference between the degree matrix (a diagonal matrix containing the sum of the weights for each point) and the weight matrix.

- Eigenvalue Decomposition: The next step involves solving a generalized eigenvector problem involving the Laplacian matrix. The goal is to find the eigenvectors associated with the smallest non-zero eigenvalues. These eigenvectors provide the new axes for the reduced-dimensional space.

- Projection: The data is finally projected onto these axes, which are chosen to preserve the local neighborhood structure of the data.
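The five steps above can be sketched in a few lines of NumPy and SciPy. This is a minimal illustration rather than a reference implementation: the function name `lpp`, the bandwidth `t`, and the small regularization term `reg` are assumptions introduced here, samples are stored as rows (so the eigenproblem reads X^T L X a = lambda X^T D X a), and for simplicity it keeps the eigenvectors with the smallest eigenvalues without filtering out zeros.

```python
import numpy as np
from scipy.linalg import eigh
from sklearn.neighbors import kneighbors_graph


def lpp(X, n_components=2, n_neighbors=10, t=1.0, reg=1e-9):
    """Illustrative LPP sketch: returns an (n_features, n_components) projection matrix."""
    # 1. Neighborhood graph: k-NN distances, symmetrized so edges are undirected
    dist = kneighbors_graph(X, n_neighbors, mode="distance").toarray()
    dist = np.maximum(dist, dist.T)

    # 2. Weight matrix: heat kernel on connected pairs, 0 elsewhere
    W = np.where(dist > 0, np.exp(-dist**2 / t), 0.0)

    # 3. Degree matrix and graph Laplacian
    D = np.diag(W.sum(axis=1))
    L = D - W

    # 4. Generalized eigenproblem (X^T L X) a = lambda (X^T D X) a
    A_mat = X.T @ L @ X
    B_mat = X.T @ D @ X + reg * np.eye(X.shape[1])  # reg keeps B positive definite
    eigvals, eigvecs = eigh(A_mat, B_mat)  # eigenvalues in ascending order

    # 5. Projection axes: eigenvectors with the smallest eigenvalues
    return eigvecs[:, :n_components]


# Hypothetical toy data: 200 samples with 5 features
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(200, 5))
A = lpp(X_demo, n_components=2)
Z = X_demo @ A  # project the data onto the learned axes
print(Z.shape)  # (200, 2)
```

Because the result is an explicit projection matrix, points that were not part of the fit can be mapped with the same matrix product, for example `X_new @ A`.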

LPP is part of a broader class of techniques known as manifold learning, which includes other methods like Isomap and Locally Linear Embedding (LLE). These methods share the goal of uncovering the underlying low-dimensional manifold in high-dimensional data.

Understanding the Mechanics of LPP

LPP operates on a simple yet profound principle: preserve local neighbor relationships in the lower-dimensional space. The process begins with constructing a neighborhood graph where each data point is connected to its nearest neighbors, typically determined by k-nearest neighbors or a fixed radius. This step is critical because it defines the local structure that LPP aims to preserve.
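To make the two neighborhood rules concrete, the sketch below builds both graphs with scikit-learn's `kneighbors_graph` and `radius_neighbors_graph`. The toy data, `n_neighbors=5`, and `radius=1.0` are arbitrary values chosen for illustration.

```python
import numpy as np
from sklearn.neighbors import kneighbors_graph, radius_neighbors_graph

# Hypothetical toy data: 100 points in 3 dimensions
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))

# k-nearest neighbors: every point gets exactly k outgoing edges
knn_graph = kneighbors_graph(X, n_neighbors=5, mode="connectivity")

# Fixed radius: the number of edges per point follows the local density
radius_graph = radius_neighbors_graph(X, radius=1.0, mode="connectivity")

knn_degrees = np.asarray(knn_graph.sum(axis=1)).ravel()
radius_degrees = np.asarray(radius_graph.sum(axis=1)).ravel()

print("k-NN edges per point:  ", knn_degrees.min(), "to", knn_degrees.max())
print("radius edges per point:", radius_degrees.min(), "to", radius_degrees.max())
```

The k-NN rule guarantees that no point is isolated, while the radius rule can leave points in sparse regions with no neighbors at all, which is one reason the choice matters for the structure LPP ends up preserving.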

Subsequently, a weight matrix is established, reflecting the proximity between neighboring points. Standard practice is to assign weights using the heat kernel on the Euclidean distances between points. The essence of LPP lies in its ability to convert these relationships into a mathematical framework by constructing the Laplacian matrix, which is the difference between each point’s total connectivity (its degree) and its individual pairwise connections.
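A tiny worked example may help show why the Laplacian encodes locality. The sketch below uses three made-up points, treats every pair as neighbors for brevity, and assumes a heat-kernel bandwidth of `t = 1.0`. It then checks numerically that the quadratic form y^T L y equals the weighted sum of squared differences between embedded neighbors.

```python
import numpy as np

# Three hypothetical 2-D points; all pairs are treated as neighbors for brevity
X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]])
t = 1.0  # heat-kernel bandwidth (assumed value)

# Heat-kernel weights: closer pairs receive larger weights
sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
W = np.exp(-sq_dists / t)
np.fill_diagonal(W, 0.0)  # no self-loops

# Degree matrix and Laplacian
D = np.diag(W.sum(axis=1))
L = D - W

# For any 1-D embedding y, y^T L y = 1/2 * sum_ij W_ij (y_i - y_j)^2
y = np.array([0.5, -1.0, 2.0])  # arbitrary embedding values
quad_form = y @ L @ y
pairwise_sum = 0.5 * np.sum(W * (y[:, None] - y[None, :]) ** 2)
print(np.isclose(quad_form, pairwise_sum))  # True
```

Minimizing this quantity penalizes embeddings that pull strongly connected points apart, which is the sense in which the Laplacian captures local structure.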

The core mathematical operation in LPP is solving a generalized eigenvalue problem formed from the data matrix together with the Laplacian and degree matrices (the degree-weighted term plays a role similar to a covariance matrix). The resulting eigenvectors corresponding to the smallest non-zero eigenvalues provide the new axes for projecting the high-dimensional data into a lower-dimensional space.
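For reference, the eigenproblem can be written out as follows. The notation is an assumption made for this sketch: X = [x_1, ..., x_n] stores one sample per column, W is the symmetric weight matrix, D the diagonal degree matrix, and a is a single projection vector.

```latex
\begin{aligned}
\min_{a}\; & \tfrac{1}{2}\sum_{i,j}\left(a^{\top}x_i - a^{\top}x_j\right)^{2} W_{ij}
  \;=\; a^{\top} X L X^{\top} a,
  \qquad L = D - W,\quad D_{ii} = \sum_{j} W_{ij} \\
\text{subject to}\; & a^{\top} X D X^{\top} a = 1 \\
\Longrightarrow\; & X L X^{\top} a \;=\; \lambda\, X D X^{\top} a
\end{aligned}
```

The constraint fixes the scale of the projection, and the vectors a with the smallest eigenvalues λ are the directions along which strongly connected neighbors stay closest together.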

Practical Applications and Benefits

The utility of LPP extends across various domains. In image processing, LPP is instrumental in facial recognition systems, helping distinguish features by preserving the essential characteristics of different faces. In bioinformatics, it assists in the visualization and clustering of gene expression data, thereby aiding in the identification of biologically relevant patterns that aren’t apparent in higher dimensions.

The ability of LPP to reduce dimensionality while maintaining the local data structure makes it an excellent tool for preprocessing data for machine learning models. By focusing on the most informative features, LPP can enhance the performance of subsequent algorithms, potentially leading to more accurate predictions and classifications.

Comparative Advantages and Limitations

While LPP shares similarities with other dimensionality reduction techniques like PCA, its focus on local structures rather than global variance sets it apart. Although the projection itself is linear, this local focus can help LPP perform more effectively with non-linear data distributions, which are commonly encountered in real-world datasets.

Nevertheless, LPP has its limitations. The technique’s effectiveness depends heavily on the initial steps of neighborhood graph construction and the choice of parameters such as the number of neighbors and the form of the weight matrix. Moreover, LPP can struggle with datasets where the manifold is sparsely sampled or has irregular density, potentially leading to misleading reductions.

Code

Below is a Python example that illustrates LPP-style dimensionality reduction on a synthetic dataset. The code includes the creation of the synthetic dataset, feature scaling, hyperparameter tuning, cross-validation, and evaluation of the results with appropriate metrics and plots. For simplicity, we’ll use the sklearn library and its modules, including the manifold module for a similar technique (Spectral Embedding, which has similarities to LPP and stands in for it here), to illustrate the entire process:

    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn import datasets
    from sklearn.manifold import SpectralEmbedding
    from sklearn.model_selection import train_test_split, GridSearchCV
    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.metrics import classification_report, confusion_matrix
    from sklearn.pipeline import Pipeline
    from sklearn.preprocessing import StandardScaler

    # Generate a synthetic dataset
    X, y = datasets.make_circles(n_samples=1000, factor=.5, noise=.05, random_state=42)

    # Split dataset into training and test sets
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

    # Pipeline setup: Standard Scaler and Spectral Embedding for dimensionality reduction
    pipeline = Pipeline([
        ('scaler', StandardScaler()),
        ('embedder', SpectralEmbedding(n_components=2, random_state=42))
    ])

    # Hyperparameter space for tuning
    # Note: gamma only takes effect with affinity='rbf'; the default affinity
    # is 'nearest_neighbors', so these gamma values do not change the embedding.
    param_grid = {
        'embedder__n_neighbors': [5, 10, 15, 20],
        'embedder__gamma': [None, 0.1, 1, 10]
    }

    # Grid search with cross-validation
    # Caveat: SpectralEmbedding provides no predict method, so the 'accuracy'
    # scorer cannot evaluate the candidates. Depending on the scikit-learn
    # version this may surface as NaN scores or an error, so treat
    # best_params_ with caution.
    grid_search = GridSearchCV(pipeline, param_grid, cv=5, scoring='accuracy')
    grid_search.fit(X_train, y_train)

    # Best parameters and best model from grid search
    print("Best parameters:", grid_search.best_params_)
    best_model = grid_search.best_estimator_

    # Transform data
    # Caveat: SpectralEmbedding has no transform method, so the test set is
    # embedded by a separate fit (and without the scaler). The two embeddings
    # therefore do not share a coordinate system.
    X_train_transformed = best_model.fit_transform(X_train)
    X_test_transformed = best_model.named_steps['embedder'].fit_transform(X_test)

    # Classifier after dimensionality reduction
    knn_classifier = KNeighborsClassifier(n_neighbors=3)
    knn_classifier.fit(X_train_transformed, y_train)

    # Predictions and evaluation
    y_pred = knn_classifier.predict(X_test_transformed)
    print(classification_report(y_test, y_pred))
    print("Confusion Matrix:\n", confusion_matrix(y_test, y_pred))

    # Plotting
    plt.figure(figsize=(12, 6))
    plt.subplot(1, 2, 1)
    plt.scatter(X_train_transformed[:, 0], X_train_transformed[:, 1], c=y_train, cmap='viridis', s=50, alpha=0.6)
    plt.title('Train Set Transformation')
    plt.subplot(1, 2, 2)
    plt.scatter(X_test_transformed[:, 0], X_test_transformed[:, 1], c=y_test, cmap='viridis', s=50, alpha=0.6)
    plt.title('Test Set Transformation')
    plt.show()

Explanation of the Code:

- Dataset Generation: A synthetic dataset is created using make_circles, ideal for demonstrating non-linear separability.

- Pipeline Setup: Includes data scaling (StandardScaler) and dimensionality reduction (SpectralEmbedding, which stands in for LPP).

- Hyperparameter Tuning: Uses GridSearchCV to search over the number of neighbors and the gamma value in SpectralEmbedding.

- Model Training and Evaluation: The best model from the grid search is used to transform the data, and then a K-Nearest Neighbors classifier is trained on the transformed data. Performance is evaluated using accuracy, a confusion matrix, and a classification report.

- Plotting: Visualizations of the transformed train and test sets help show how LPP-like dimensionality reduction clusters and separates the data in lower dimensions.

This script covers the entire workflow from data creation to model evaluation, showcasing the practical application of LPP-style dimensionality reduction in a synthetic scenario.

                  precision    recall  f1-score   support

               0       0.65      1.00      0.79       156
               1       1.00      0.42      0.59       144

        accuracy                           0.72       300
       macro avg       0.82      0.71      0.69       300
    weighted avg       0.82      0.72      0.69       300

    Confusion Matrix:
     [[156   0]
     [ 84  60]]

The plots show the results of transforming the training and test sets using Spectral Embedding, analogous to Locality Preserving Projections (LPP). The transformation has resulted in a near-linear separation between classes in the training set. However, the test set transformation plot shows a significant overlap between the two classes.

Analyzing the classification report and confusion matrix:

Class 0 (Presumably the outer circle in make_circles):

- Precision is 0.65, indicating that when the model predicts class 0, it’s correct 65% of the time.

- Recall is 1.00, meaning the model successfully identifies all actual instances of class 0.

- The F1-score, the harmonic mean of precision and recall, is 0.79, suggesting a good balance between precision and recall for this class.

Class 1 (Presumably the interior circle in make_circles):

- The precision is 1.00, meaning there were no false positives for class 1.

- Recall is 0.42, which is relatively low, indicating that the model missed many instances of class 1 (false negatives).

- The F1-score is 0.59, lower than class 0’s F1-score, reflecting the poor recall rate.

Overall Accuracy:

- The model has an overall accuracy of 0.72, which means it correctly classified 72% of the test set.

Confusion Matrix:

- The model predicted all instances of class 0 correctly (156 true positives and 0 false negatives).

- However, for class 1, there were 84 false negatives and 60 true positives, indicating that the model struggled to identify this class correctly.
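The figures in the classification report follow directly from the confusion matrix, and the arithmetic can be reproduced in a few lines. This sketch assumes scikit-learn's convention that rows are true classes and columns are predicted classes.

```python
import numpy as np

# Confusion matrix from the run above (rows: true class, columns: predicted class)
cm = np.array([[156, 0],
               [84, 60]])

true_pos = np.diag(cm)
precision = true_pos / cm.sum(axis=0)  # column sums = how often each class was predicted
recall = true_pos / cm.sum(axis=1)     # row sums = how often each class actually occurs
f1 = 2 * precision * recall / (precision + recall)
accuracy = true_pos.sum() / cm.sum()

for k in range(2):
    print(f"class {k}: precision={precision[k]:.2f} recall={recall[k]:.2f} f1={f1[k]:.2f}")
print(f"accuracy={accuracy:.2f}")
# class 0: precision=0.65 recall=1.00 f1=0.79
# class 1: precision=1.00 recall=0.42 f1=0.59
# accuracy=0.72
```

For example, class 0 precision is 156 / (156 + 84) = 0.65, because 84 of the 240 points predicted as class 0 actually belong to class 1.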

The model’s high precision for class 1 but low recall suggests that it is very confident and often correct when it predicts an instance as class 1. However, it tends to classify many actual class 1 instances as class 0 instead, leading to many false negatives.

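The discrepancy between the training and testing plots and the classification metrics may suggest overfitting, where the model is too tailored to the training data and fails to generalize well to the test data. A more direct contributor is visible in the code: SpectralEmbedding cannot project unseen points, so the test set is embedded with its own fit_transform call, and the resulting coordinates need not line up with the training embedding that the classifier learned from. The transformed features’ linear nature may also fail to capture the underlying circular distribution of the data.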

The grid search over the hyperparameters may have led to a model that’s too simplistic or specific to the training set. Adjustments to the manifold learning parameters or exploring alternative dimensionality reduction techniques that can capture the data’s structure more effectively could improve the model’s performance. Additionally, collecting more data or trying different models could help improve the model’s generalization ability.
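One possible adjustment, sketched below, is to compute a single embedding for all points and only then split into training and test subsets, so that both share one coordinate system. This is a swapped-in, transductive variant rather than the workflow used above: it only applies when the test features are available at embedding time (the labels are still held out), and `n_neighbors=10` is an illustrative value, not a tuned one. No particular accuracy is claimed for it.

```python
import numpy as np
from sklearn.datasets import make_circles
from sklearn.manifold import SpectralEmbedding
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = make_circles(n_samples=1000, factor=0.5, noise=0.05, random_state=42)

# Split indices rather than rows so both subsets come from one embedding
idx_train, idx_test = train_test_split(
    np.arange(len(X)), test_size=0.3, random_state=42
)

# Assumed setting: n_neighbors=10 is an illustrative choice
embedder = SpectralEmbedding(n_components=2, n_neighbors=10, random_state=42)
Z = embedder.fit_transform(X)  # one embedding shared by all 1000 points

# Train on the embedded training points, score on the embedded test points
knn = KNeighborsClassifier(n_neighbors=3).fit(Z[idx_train], y[idx_train])
test_accuracy = knn.score(Z[idx_test], y[idx_test])
print(f"test accuracy: {test_accuracy:.2f}")
```

When new points arrive after the embedding has been computed, a method with an explicit projection, such as LPP itself, avoids the problem because the learned projection matrix can be applied to unseen data.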

The results underscore the need for careful consideration when applying manifold learning techniques. Ensuring that the reduced dimensions capture the actual structure of the data is crucial for the subsequent success of any classification models applied to the data.

Here’s a plot of the original synthetic dataset. It clearly shows two distinct classes, each forming a circle with class 0 as the outer circle and class 1 as the inner circle. The dataset captures the non-linear separability that you would expect from the make_circles function, with some added noise for complexity.

Conclusion

Locality Preserving Projections represent a significant advancement in the toolbox of data analysts aiming to extract meaningful insights from complex datasets. By focusing on local data relationships, LPP provides a nuanced approach that accommodates the intricacies of real-world data. As with any analytical method, its successful application requires careful consideration of its assumptions and limitations. Nevertheless, when applied judiciously, LPP can unlock new dimensions of data understanding and facilitate the discovery of insights that would otherwise remain obscured in the maze of high-dimensional spaces. This blending of mathematical rigor with practical applicability makes LPP a valuable component of modern data analysis, promising to enhance our ability to make informed decisions based on comprehensive data-driven evidence.

As we unravel the layers of complexity in data with techniques like Locality Preserving Projections, we invite you to reflect on the broader implications of dimensionality reduction. How do you see LPP transforming your field of work or study? Please share your insights or pose new questions below, and let’s foster a collaborative environment where each perspective brings us closer to the whole picture hidden within our data.