Introduction

Embark on a journey into the field of Convolutional Neural Networks (CNNs) and Skorch, a library that combines PyTorch's deep learning capabilities with the simplicity of scikit-learn. Discover how CNNs emulate human visual processing to tackle the problem of handwritten digit recognition, while Skorch integrates PyTorch into machine learning pipelines. Join us as we unravel advanced deep learning techniques and explore the power of CNNs for real-world applications.

Learning Outcomes

- Gain a deep understanding of Convolutional Neural Networks and their application in handwritten digit recognition.

- Learn how Skorch bridges PyTorch's deep learning capabilities with scikit-learn's user-friendly interface.

- Discover the architecture of CNNs, including convolutional layers, pooling layers, and fully connected layers.

- Explore practical techniques for training and evaluating CNN models using Skorch and PyTorch.

- Master essential skills in data preprocessing, model definition, hyperparameter tuning, and model persistence for CNN-based tasks.

- Acquire insights into advanced deep learning concepts such as hyperparameter optimization, cross-validation, data augmentation, and ensemble learning.

This article was published as a part of the Data Science Blogathon.

Overview of Convolutional Neural Networks (CNNs)

Picture yourself sifting through a stack of scribbled numbers. Accurately identifying and classifying each digit is your job; while this may seem easy for humans, it can be really difficult for machines. This is a fundamental problem in the field of artificial intelligence, known as handwritten digit recognition.

To tackle this problem with machines, researchers have used Convolutional Neural Networks (CNNs), a powerful class of deep learning models that draw inspiration from the complex human visual system. CNNs resemble how layers of neurons in our brains analyze visual data, identifying objects and patterns at various scales.

Convolutional layers, the core of CNNs, scan the input data for distinctive features like edges, corners, and textures. Stacking these layers allows CNNs to learn abstract representations, capturing hierarchical patterns for applications like handwritten digit identification.

CNNs combine convolutions with pooling (downsampling) layers, which reduce the spatial dimensions and improve computational efficiency, and they are trained with backpropagation. They can recognize handwritten digits with precision, often outperforming conventional algorithms. CNNs open the door to a future where machines can decode and understand handwritten numbers using deep learning, mimicking the complexities of human vision.
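To make the convolution and pooling steps described above concrete, here is a minimal NumPy sketch. The toy 6×6 image and the hand-picked vertical-edge kernel are assumptions chosen for illustration; in a real CNN the kernel weights are learned, and the helper names `conv2d_valid` and `max_pool2d` are hypothetical. As in deep learning frameworks, the "convolution" here is technically a cross-correlation.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=np.float32)
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    h, w = feature_map.shape
    h, w = h - h % size, w - w % size  # drop rows/cols that do not fill a window
    trimmed = feature_map[:h, :w]
    return trimmed.reshape(h // size, size, w // size, size).max(axis=(1, 3))

# Toy image (assumption): dark left half, bright right half, i.e. a vertical edge
image = np.zeros((6, 6), dtype=np.float32)
image[:, 3:] = 1.0

# Hand-picked vertical-edge kernel (assumption); a CNN would learn such weights
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=np.float32)

feature_map = conv2d_valid(image, kernel)  # shape (4, 4), strong response at the edge
pooled = max_pool2d(feature_map)           # shape (2, 2), downsampled summary
print(feature_map)
print(pooled)
```

The feature map responds only where the window straddles the edge, and pooling halves each spatial dimension while keeping the strongest responses.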

What is Skorch and Its Benefits?

With its extensive library and framework ecosystem, Python has emerged as the preferred language for building deep learning models. TensorFlow, PyTorch, and Keras are a few well-known frameworks that give programmers a set of elegant tools and APIs for effectively creating and training CNN models.

Every framework has its own unique benefits and features that meet the needs and preferences of different developers.

PyTorch's success is attributed to its "define-by-run" semantics, which builds the computational graph dynamically as operations are executed, enabling easier debugging, model customization, and faster prototyping.
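The "define-by-run" idea can be illustrated with a small sketch: the graph is recorded while ordinary Python code runs, so a plain `for` loop decides which operations are tracked. The function name `repeated_product` is a hypothetical helper used only for this illustration.

```python
import torch

def repeated_product(x, n_steps):
    # Ordinary Python control flow: each multiplication is recorded as it runs
    y = x
    for _ in range(n_steps):
        y = y * x
    return y

x = torch.tensor(2.0, requires_grad=True)
y = repeated_product(x, n_steps=2)  # computes x**3 for this input
y.backward()                        # gradients flow through the recorded operations

print(y.item())       # value of x**3 at x = 2
print(x.grad.item())  # derivative 3 * x**2 at x = 2
```

Changing `n_steps` changes the recorded graph on the next call, with no separate compilation step.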

Skorch connects PyTorch and scikit-learn, allowing developers to use PyTorch's deep learning capabilities through the user-friendly scikit-learn API. This lets developers integrate deep learning models into their existing machine learning pipelines.

Skorch is a wrapper that integrates with scikit-learn, allowing developers to use PyTorch's neural network modules for training, validating, and making predictions. It supports features like grid search, cross-validation, and model persistence, allowing developers to build on their existing knowledge and workflows. Skorch is easy to use and adaptable, giving developers access to PyTorch's deep learning capabilities without a steep learning curve. This combination offers opportunities to create advanced CNN models and apply them in practical scenarios.

How to Work with Skorch?

Let us now go through the steps to install Skorch and build a CNN model:

Step 1: Installing Skorch

We will use the pip command to install the Skorch library. This is required only once.

The basic command to install a package using pip is:

pip install skorch

Alternatively, use the following command inside Jupyter Notebook/Colab:

!pip install skorch

Step 2: Building a CNN model

Feel free to use the source code available here.

The very first step in coding is to import the required libraries. We will need NumPy, Scikit-learn for dataset handling and preprocessing, PyTorch for building and training neural networks, torchvision for performing image transformations as we are dealing with image data, and Skorch, of course, for integrating PyTorch with Scikit-learn.

print('Importing Libraries... ', end='')

import numpy as np

from sklearn.datasets import fetch_openml

from sklearn.model_selection import train_test_split

from skorch import NeuralNetClassifier

from skorch.callbacks import EarlyStopping

from skorch.dataset import Dataset

import torch

from torch import nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import random

print('Done')

Step 3: Understanding the Data

The dataset we chose is called the USPS digit dataset. It is a collection of 9,298 grayscale samples. These samples were automatically scanned from envelopes by the U.S. Postal Service. Each sample is a 16×16 pixel image.

This dataset is freely available at OpenML for experimentation. We will use Scikit-learn's fetch_openml function to load the dataset and print the dataset statistics.

# Loading the data

print('Loading data... ', end='')

X, y = fetch_openml('usps', return_X_y=True)

print('Done')

# Get dataset statistics

print('Dataset statistics... ')

print(X.shape, y.shape)

Next, we will perform standard data preprocessing: scaling the pixel values, reshaping each sample into an image tensor, and shifting the labels so that they start at zero. We then split the dataset in the ratio of 70:30 for training and testing, respectively.

# Preprocessing

X = X / 16.0  # Scale the input to [0, 1] range

X = X.values.reshape(-1, 1, 16, 16).astype(np.float32)  # Reshape for CNN input: (samples, channels, height, width)

y = y.astype('int') - 1  # Shift labels so that classes start at 0

# Split train-test data in 70:30

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=11)

Defining CNN Architecture Using PyTorch

Our CNN model consists of three convolution blocks and two fully connected layers. The convolutional layers are stacked to extract features hierarchically, while the fully connected layers, often called dense layers, perform the classification task. Since the convolution operation generates high-dimensional data, pooling is performed to downsize it. Max pooling, one of the most commonly used pooling operations, is what we use here. A kernel of size 3×3 is used with stride=1. Padding preserves the information at the edges; hence, padding of size one is used. Every layer applies the ReLU activation function except for the output layer.

To keep the model simple, we are not using batch normalization. However, one may want to use it. To prevent overfitting, we use dropout and early stopping.
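The layer sizes in this architecture can be checked by hand. The sketch below applies the standard output-size formula for a convolution, (size + 2·padding − kernel) / stride + 1, to a 16×16 input, assuming two 2×2 max-pooling steps and 128 channels in the last convolution as in the model definition. The helper names `conv_out` and `pool_out` are hypothetical.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Spatial size after a convolution."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, window=2):
    """Spatial size after non-overlapping max pooling."""
    return size // window

size = 16                        # USPS images are 16x16
size = pool_out(conv_out(size))  # block 1: 16 -> 16 -> 8
size = pool_out(conv_out(size))  # block 2: 8 -> 8 -> 4
size = conv_out(size)            # block 3: 4 -> 4 (no pooling)

flat_features = 128 * size * size  # 128 channels from the last convolution
print(size, flat_features)         # 4 2048
```

With a 3×3 kernel, stride 1, and padding 1, each convolution keeps the spatial size unchanged, so only the pooling steps shrink the image. This is why the first fully connected layer expects 128 * 4 * 4 inputs.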

# Define CNN model

class DigitClassifier(nn.Module):

    def __init__(self):

        super(DigitClassifier, self).__init__()

        # Three convolution layers: 3x3 kernels, padding 1 keeps the spatial size

        self.conv1 = nn.Conv2d(1, 32, kernel_size=3, padding=1)

        self.conv2 = nn.Conv2d(32, 64, kernel_size=3, padding=1)

        self.conv3 = nn.Conv2d(64, 128, kernel_size=3, padding=1)

        # Two fully connected layers with dropout in between

        self.fc1 = nn.Linear(128 * 4 * 4, 256)

        self.dropout = nn.Dropout(0.2)

        self.fc2 = nn.Linear(256, 10)

    def forward(self, x):

        x = F.relu(self.conv1(x))

        x = F.max_pool2d(x, 2)  # 16x16 -> 8x8

        x = F.relu(self.conv2(x))

        x = F.max_pool2d(x, 2)  # 8x8 -> 4x4

        x = F.relu(self.conv3(x))

        x = x.view(-1, 128 * 4 * 4)  # Flatten for the dense layers

        x = F.relu(self.fc1(x))

        x = self.dropout(x)

        x = self.fc2(x)  # Raw class scores; no activation on the output layer

        return x

Using Skorch to Encapsulate CNN Model

Now comes the central part: how to wrap the PyTorch model in Skorch for Scikit-learn style training.

For this purpose, let us define the hyperparameters as:

# Hyperparameters

max_epochs = 25

lr = 0.001

batch_size = 32

patience = 5

device = 'cuda' if torch.cuda.is_available() else 'cpu'

Next, this code creates a wrapper around a neural network model called DigitClassifier using Skorch. The wrapped model is configured with settings such as the maximum number of training epochs, learning rate, batch size for training and validation data, loss function, optimizer, early stopping callback, and the device on which to run the computations, that is, CPU or GPU.

# Wrap the model in Skorch NeuralNetClassifier

digit_classifier = NeuralNetClassifier(

    module = DigitClassifier,

    max_epochs = max_epochs,

    lr = lr,

    iterator_train__batch_size = batch_size,

    iterator_train__shuffle = True,

    iterator_valid__batch_size = batch_size,

    iterator_valid__shuffle = False,

    criterion = nn.CrossEntropyLoss,

    optimizer = torch.optim.Adam,

    callbacks = [EarlyStopping(patience=patience)],

    device = device

)

Code Analysis

Let us dig into the code with a thorough analysis:

- Skorch, a wrapper for PyTorch that manages neural network models, provides the `NeuralNetClassifier` class as one of its components. It allows PyTorch models to be used through a user-friendly interface similar to scikit-learn, making the training and evaluation of neural networks easier.

- The `module` parameter specifies the neural network model to be used. In this particular case, the PyTorch module `DigitClassifier` encapsulates the definition of the CNN's architecture and functionality.

- The `max_epochs` parameter sets the upper limit on the number of epochs for training the neural network.

- The `lr` parameter controls the learning rate, which determines the step size during optimization. The step size is vital in fine-tuning the model's parameters and reducing the loss function.

- The parameters `iterator_train__batch_size` and `iterator_valid__batch_size` set the batch size for the training and validation data, respectively. The batch size determines the number of samples processed before updating the model's parameters.

- The parameters `iterator_train__shuffle` and `iterator_valid__shuffle` determine whether the training and validation datasets are shuffled before each epoch. Here, only the training data is shuffled, which helps prevent the model from memorizing the order of the samples.

- The `optimizer` parameter (here `torch.optim.Adam`) determines the optimizer that will update the model's parameters with the calculated gradients.

- The `callbacks` parameter specifies the callbacks used during training. In this example, EarlyStopping is used to stop training early if the validation loss stops improving within a set number of epochs (here, patience=5).

- The `device` parameter specifies the device, such as CPU or GPU, on which the computations will be executed.
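The early-stopping rule described in the list above can be sketched in plain Python. This is a simplified illustration of the patience logic under assumed validation losses, not Skorch's actual implementation; the function name `early_stopping_epoch` and the loss values are hypothetical.

```python
def early_stopping_epoch(valid_losses, patience=5):
    """Return the 1-based epoch at which training would stop, or None."""
    best = float('inf')
    epochs_without_improvement = 0
    for epoch, loss in enumerate(valid_losses, start=1):
        if loss < best:
            best = loss
            epochs_without_improvement = 0   # improvement resets the counter
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch                 # patience exhausted: stop here
    return None                              # never ran out of patience

# Hypothetical validation losses: best value at epoch 4, then no improvement
losses = [0.90, 0.60, 0.45, 0.44, 0.46, 0.47, 0.45, 0.48, 0.46, 0.50]
stop = early_stopping_epoch(losses, patience=5)
print(stop)  # 9
```

With patience=5, training stops after five consecutive epochs without a new best validation loss, even if max_epochs has not been reached.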

# Prepare the mannequin

print('Utilizing...', gadget)

print("Coaching began...")

digit_classifier.match(X_train, y_train)

print("Coaching accomplished!")

# Consider the mannequin

# Consider on take a look at information

y_pred = digit_classifier.predict(X_test)

accuracy = digit_classifier.rating(X_test, y_test)

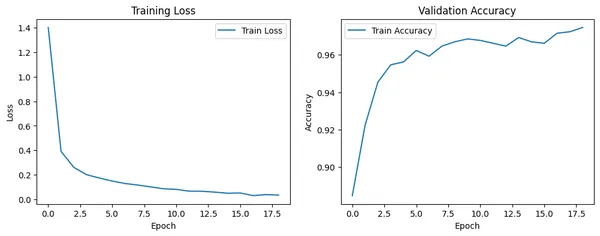

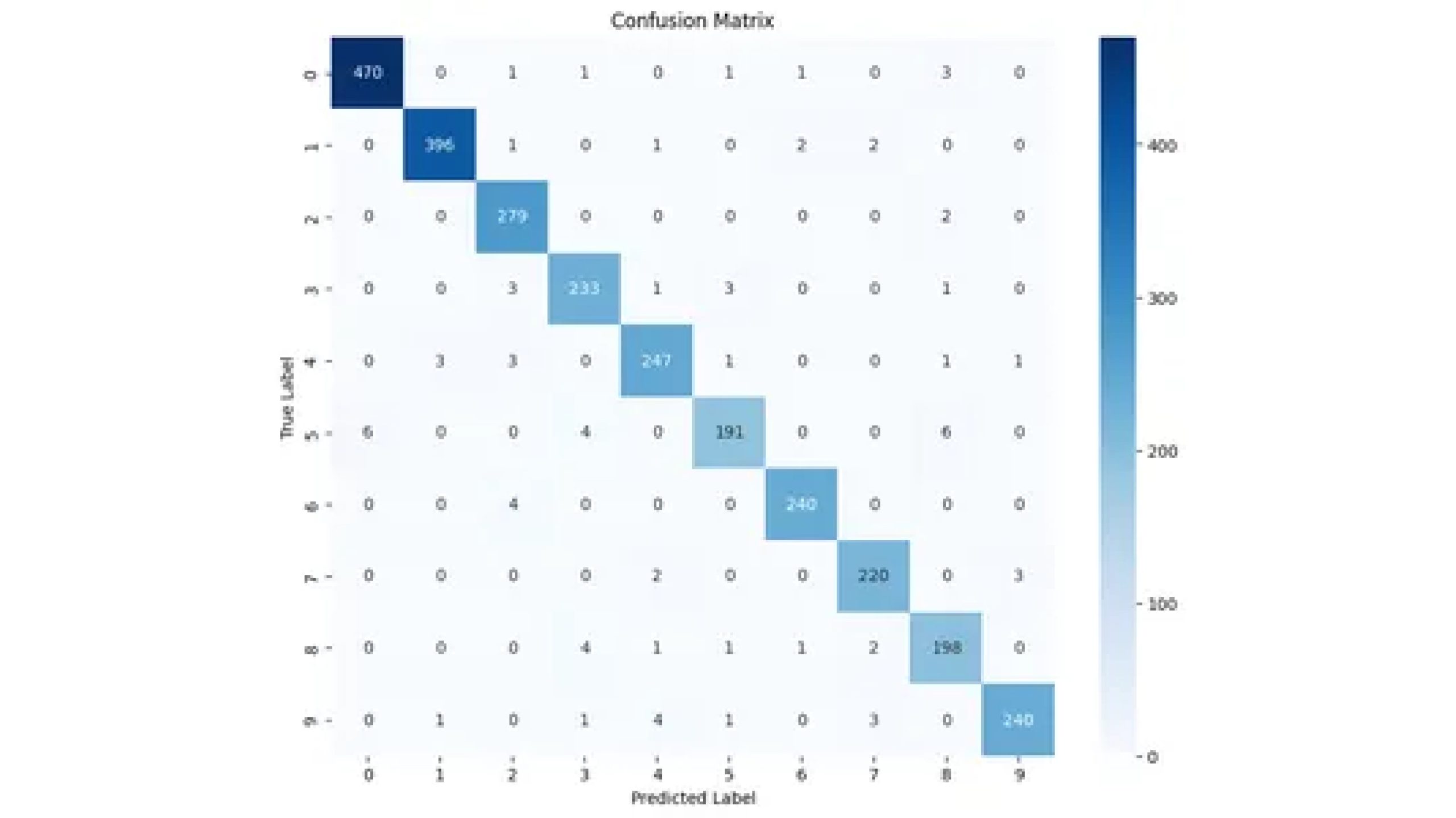

print(f'Check accuracy: {accuracy:.4f}')Subsequent, practice the mannequin utilizing the Scikit-learn type match operate. Our mannequin achieves greater than 96% accuracy on take a look at information.
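A single accuracy number does not show which digits get confused with each other. As a possible follow-up, a confusion matrix can be computed from the true and predicted labels. The sketch below uses small hypothetical label arrays as stand-ins for `y_test` and `y_pred`, so the numbers are not results from the article's model.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix

# Hypothetical stand-ins for y_test and y_pred (three classes for brevity)
y_true_demo = np.array([0, 1, 2, 2, 1, 0, 2, 1])
y_pred_demo = np.array([0, 1, 2, 1, 1, 0, 2, 2])

acc = accuracy_score(y_true_demo, y_pred_demo)
cm = confusion_matrix(y_true_demo, y_pred_demo)  # rows: true class, columns: predicted class

print(f'Accuracy: {acc:.2f}')
print(cm)
```

Off-diagonal entries show which classes are mistaken for each other, which can guide further tuning or augmentation.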

Further Experiments

The above code consists of a simple CNN model. However, you may consider incorporating the following aspects for a more comprehensive approach.

Hyperparameters

Hyperparameters control how a machine learning model trains. Properly tuning them can have a significant impact on the performance of the model. Techniques such as grid search or random search can be used to optimize hyperparameters. These techniques help tune the learning rate, batch size, network architecture, and other tunable parameters, and return the best combination found among the candidates tried.

Cross-Validation

Cross-validation is a valuable technique for improving the reliability of model performance evaluation. It involves dividing the dataset into multiple subsets (folds), training the model on all but one of them, and validating on the held-out fold, rotating until every fold has served as the validation set. Perform k-fold cross-validation to evaluate the model's performance more reliably.
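The fold mechanics can be sketched with scikit-learn's `StratifiedKFold`, which keeps the class proportions similar in every fold. The toy labels below are an assumption for illustration; in practice the same splitter object could be passed as the `cv` argument of `cross_val_score` together with the wrapped network.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Toy labels (assumption): 100 samples, 10 per digit class
y_demo = np.repeat(np.arange(10), 10)
X_placeholder = np.zeros(len(y_demo))  # the splitter only needs the number of samples

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
times_validated = np.zeros(len(y_demo), dtype=int)
fold_sizes = []

for fold, (train_idx, valid_idx) in enumerate(skf.split(X_placeholder, y_demo), start=1):
    times_validated[valid_idx] += 1
    fold_sizes.append((len(train_idx), len(valid_idx)))
    per_class = np.bincount(y_demo[valid_idx], minlength=10)
    print(f'fold {fold}: train={len(train_idx)} valid={len(valid_idx)} per-class={per_class.tolist()}')
```

Every sample ends up in the validation set exactly once, so the averaged score uses all of the data for evaluation without ever testing on samples the model was trained on in that fold.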

Model Persistence

Model persistence is the process of saving the trained model to disk for future reuse, eliminating the need for retraining. Tools such as joblib or torch.save make this task relatively simple.

Logging and Monitoring

Keeping track of important information during the training process, such as loss and accuracy metrics, is essential. Tools such as TensorBoard or Weights & Biases (wandb) can help visualize training metrics.

Data Augmentation

Deep learning models rely heavily on data. The availability of training data directly influences performance. Data augmentation involves generating new training samples by applying transformations to existing ones, such as rotations, translations, and flips.
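As a minimal sketch of augmentation for digit images, the snippet below generates shifted copies with NumPy. Small translations are used because they keep a digit's identity intact, whereas flips or large rotations can turn one digit into another (for example, 6 and 9). The helper names `shift_image` and `augment_batch` and the shift range are assumptions for illustration.

```python
import numpy as np

def shift_image(image, dy, dx):
    """Translate a 2D image by (dy, dx) pixels, filling vacated pixels with 0."""
    h, w = image.shape
    shifted = np.zeros_like(image)
    src = image[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    top, left = max(0, dy), max(0, dx)
    shifted[top:top + src.shape[0], left:left + src.shape[1]] = src
    return shifted

def augment_batch(X, y, max_shift=2, seed=0):
    """Return the original samples plus one randomly shifted copy of each."""
    rng = np.random.default_rng(seed)
    X_aug = np.empty_like(X)
    for i in range(len(X)):
        dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
        X_aug[i, 0] = shift_image(X[i, 0], int(dy), int(dx))
    return np.concatenate([X, X_aug]), np.concatenate([y, y])

# Single bright pixel makes the shift easy to verify
demo = np.zeros((16, 16), dtype=np.float32)
demo[5, 5] = 1.0
moved = shift_image(demo, dy=1, dx=-2)  # one pixel down, two pixels left
print(np.argwhere(moved == 1.0))

# Toy batch in the (samples, channels, height, width) layout used for the CNN
X_demo = np.zeros((4, 1, 16, 16), dtype=np.float32)
y_demo = np.array([0, 1, 2, 3])
X_big, y_big = augment_batch(X_demo, y_demo)
print(X_big.shape, y_big.shape)
```

The augmented arrays can be passed to fit in place of the original training data; the test set should be left untouched.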

Ensemble Learning

Ensemble learning is a technique that leverages the power of multiple models to improve overall performance. One approach is to train several models using different initializations or subsets of the data and then average their predictions. Ensemble methods such as bagging or boosting are also worth exploring.
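The averaging strategy can be sketched with NumPy. The probability arrays below are hypothetical outputs of three models on three samples with two classes; with Skorch, such arrays would come from calling predict_proba on each trained network.

```python
import numpy as np

def average_ensemble(prob_list):
    """Average class probabilities from several models and pick the top class."""
    mean_probs = np.mean(np.stack(prob_list), axis=0)
    return mean_probs, mean_probs.argmax(axis=1)

# Hypothetical predicted probabilities from three models (rows: samples, columns: classes)
probs_a = np.array([[0.60, 0.40], [0.30, 0.70], [0.55, 0.45]])
probs_b = np.array([[0.70, 0.30], [0.60, 0.40], [0.40, 0.60]])
probs_c = np.array([[0.80, 0.20], [0.20, 0.80], [0.45, 0.55]])

mean_probs, labels = average_ensemble([probs_a, probs_b, probs_c])
print(mean_probs)
print(labels)  # [0 1 1]
```

For the second and third samples the individual models disagree, and the averaged probabilities settle the vote.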

Conclusion

Our exploration of Convolutional Neural Networks and Skorch reveals the powerful synergy between advanced deep learning techniques and efficient Python frameworks. By using CNNs for handwritten digit recognition and Skorch for integration with scikit-learn, we have demonstrated how to bridge cutting-edge technology with user-friendly interfaces. This underscores the value of combining PyTorch's robust capabilities with scikit-learn's simplicity, enabling developers to implement sophisticated models with ease. As deep learning and machine learning continue to evolve, the combination of CNNs and Skorch makes complex tasks more accessible and solutions more attainable.

Key Takeaways

- Skorch facilitates seamless integration of PyTorch models into Scikit-learn workflows, improving productivity in machine learning tasks.

- With Skorch, users can harness PyTorch's deep learning capabilities within the familiar and efficient environment of Scikit-learn.

- Skorch bridges the gap between PyTorch's flexibility and Scikit-learn's ease of use, offering a powerful tool for training complex models.

- By leveraging Skorch, developers can train and deploy PyTorch models using Scikit-learn's robust ecosystem and intuitive API.

- Skorch enables the training of PyTorch models with Scikit-learn's grid search, cross-validation, and model persistence functionality, improving model performance and reliability.


Frequently Asked Questions

A. Skorch is a Python library that integrates PyTorch with Scikit-learn, allowing users to train PyTorch models using Scikit-learn's familiar interface and tools.

A. Skorch provides a wrapper for PyTorch models, enabling users to use Scikit-learn methods such as fit, predict, and score for training, evaluation, and prediction tasks.

A. Skorch simplifies the process of building and training PyTorch models by providing a higher-level interface similar to Scikit-learn. This makes it easier for users familiar with Scikit-learn to transition to PyTorch.

A. Yes, Skorch integrates with existing Scikit-learn workflows, allowing users to incorporate PyTorch models into their machine learning pipelines without significant modifications.

A. Yes, Skorch supports hyperparameter tuning and cross-validation using Scikit-learn tools such as GridSearchCV and RandomizedSearchCV, enabling users to optimize their PyTorch models efficiently.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.