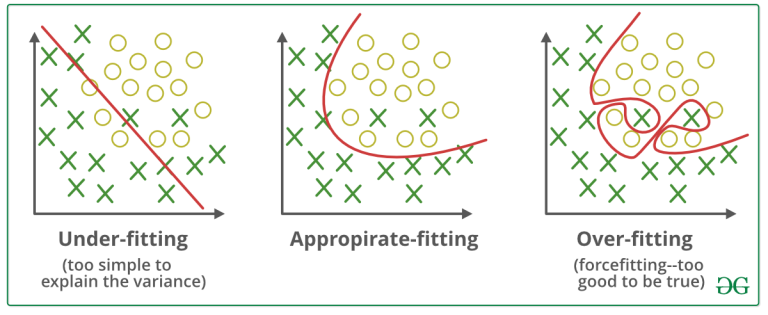

At the core of overfitting and underfitting lies the fundamental trade-off between bias and variance. Bias is the error that comes from approximating a real-world problem with a simplified model, whereas variance measures the model's sensitivity to fluctuations in the training data.
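For squared-error loss, this trade-off has a standard exact form. Assume the data follow $y = f(x) + \varepsilon$, where $\varepsilon$ has mean zero and variance $\sigma^2$ and is independent of the training set, and let $\hat f$ be a model fitted on a randomly drawn training set. The expected error at a point $x$, averaged over training sets and noise, then splits into three parts:

$$
\mathbb{E}\big[(y - \hat f(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\Big[\big(\hat f(x) - \mathbb{E}[\hat f(x)]\big)^2\Big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Only the first two terms depend on the model; $\sigma^2$ is a floor that no model can go below.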

In simpler terms, an overfitting model exhibits high variance, whereas an underfitting model exhibits high bias.

Achieving the optimal balance between the two is akin to walking a tightrope: finding the precise point where the model captures the inherent patterns in the data without falling prey to overcomplication or oversimplification.
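To make the two failure modes concrete, here is a small simulation sketch in Python. It swaps in k-nearest-neighbor regression as the model (the article does not prescribe one) and assumes a hypothetical ground truth $\sin(2\pi x)$ with Gaussian noise; all names and parameter values are illustrative. With very few neighbors the model chases noise (high variance); with many it averages the signal away (high bias).

```python
import math
import random
import statistics


def true_f(x):
    """Hypothetical ground-truth function, used only for this simulation."""
    return math.sin(2 * math.pi * x)


def make_training_set(n, noise_sd, rng):
    """Draw n points with x ~ Uniform(0, 1) and y = true_f(x) + Gaussian noise."""
    points = []
    for _ in range(n):
        x = rng.random()
        points.append((x, true_f(x) + rng.gauss(0.0, noise_sd)))
    return points


def knn_predict(train, x0, n_neighbors):
    """k-nearest-neighbor regression: average y of the n_neighbors closest points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x0))[:n_neighbors]
    return sum(y for _, y in nearest) / len(nearest)


def bias_variance_at(x0, n_neighbors, trials=500, n=50, noise_sd=0.3, seed=0):
    """Estimate bias^2 and variance of the kNN prediction at x0
    by refitting on many independently drawn training sets."""
    rng = random.Random(seed)
    preds = [knn_predict(make_training_set(n, noise_sd, rng), x0, n_neighbors)
             for _ in range(trials)]
    mean_pred = statistics.fmean(preds)
    bias_sq = (mean_pred - true_f(x0)) ** 2
    variance = statistics.pvariance(preds, mu=mean_pred)
    return bias_sq, variance


if __name__ == "__main__":
    for n_neighbors in (1, 5, 25, 50):
        b2, var = bias_variance_at(x0=0.25, n_neighbors=n_neighbors)
        print(f"n_neighbors={n_neighbors:2d}  bias^2={b2:.4f}  variance={var:.4f}")
```

Running it should show bias² rising and variance falling as `n_neighbors` grows; a setting in between is where their sum is smallest.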

Balancing Bias and Variance

To address the challenges posed by overfitting and underfitting, we must aim for a delicate balance between bias and variance. This means choosing a model complexity that captures the underlying patterns in the data while resisting the temptation to either overcomplicate or oversimplify. In the next section, we will explore techniques to achieve this balance.

In machine learning, numerous techniques exist to address overfitting and underfitting. Here we'll focus on two prominent ones: regularization and cross-validation. Note that while these methods are broadly applicable and effective, there are also many other techniques tailored to specific algorithms or scenarios that are worth exploring.

Regularization

Regularization is a powerful tool for mitigating overfitting by constraining model complexity. By adding penalty terms to the optimization objective, regularization techniques discourage overly complex models that may fit noise or irrelevant patterns in the training data. The result is a more general model that performs well not only on the training dataset but also on unseen data.
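For linear regression, the penalty idea fits into a single objective. The notation here ($\lambda \ge 0$ for the penalty strength, $\Omega$ for the penalty) is introduced for illustration; $\lambda = 0$ recovers ordinary least squares, and larger values push harder toward simpler models:

$$
\hat{\boldsymbol\beta} = \arg\min_{\boldsymbol\beta}\; \|\mathbf{y} - \mathbf{X}\boldsymbol\beta\|_2^2 + \lambda\,\Omega(\boldsymbol\beta),
\qquad
\Omega(\boldsymbol\beta) = \|\boldsymbol\beta\|_1 = \sum_j |\beta_j| \ \text{(L1, Lasso)}
\quad\text{or}\quad
\Omega(\boldsymbol\beta) = \|\boldsymbol\beta\|_2^2 = \sum_j \beta_j^2 \ \text{(L2, Ridge)}
$$

The intercept is usually left unpenalized, and libraries scale the loss term differently (scikit-learn's `Lasso`, for instance, divides the squared error by $2n$), so a given $\lambda$ value is not directly portable between implementations.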

One such regularization method is L1 regularization, also known as Lasso. Lasso adds a penalty proportional to the sum of the absolute values of the model coefficients. This encourages sparsity in the coefficient vector, effectively performing feature selection: Lasso drives some coefficients exactly to zero, eliminating irrelevant features from the model and reducing its complexity.
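The following minimal sketch, in plain Python, shows how the L1 penalty produces exact zeros. It uses cyclic coordinate descent with soft-thresholding, one common way to fit Lasso (not necessarily how any particular library does it), and assumes the objective $\|\mathbf{y} - \mathbf{X}\mathbf{b}\|^2 + \lambda\|\mathbf{b}\|_1$ with no intercept. The function names and the tiny dataset are hypothetical.

```python
from typing import List


def soft_threshold(rho: float, t: float) -> float:
    """Shrink rho toward zero by t; anything inside [-t, t] becomes exactly 0."""
    if rho > t:
        return rho - t
    if rho < -t:
        return rho + t
    return 0.0


def lasso_fit(X: List[List[float]], y: List[float], lam: float,
              n_iters: int = 100) -> List[float]:
    """Minimize ||y - X b||^2 + lam * ||b||_1 by cyclic coordinate descent.

    Each step solves exactly for one coefficient b_j with the others fixed:
    b_j = soft_threshold(rho_j, lam / 2) / ||x_j||^2, where rho_j is the
    correlation of feature j with the residual that excludes feature j.
    No intercept is fitted (center X and y first if needed).
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) for j in range(p)]
    for _ in range(n_iters):
        for j in range(p):
            if col_sq[j] == 0.0:
                continue  # an all-zero feature carries no information
            rho = 0.0
            for i in range(n):
                others = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - others)
            beta[j] = soft_threshold(rho, lam / 2) / col_sq[j]
    return beta


if __name__ == "__main__":
    # Hypothetical toy data: y = 3 * x0 + 0.2 * x1 (second feature barely matters).
    X = [[1.0, 1.0], [-1.0, 1.0], [1.0, -1.0], [-1.0, -1.0]]
    y = [3.2, -2.8, 2.8, -3.2]
    for lam in (0.0, 2.0):
        print(lam, lasso_fit(X, y, lam))
```

On this toy data, `lam=0.0` recovers (up to floating-point rounding) the least-squares fit `[3.0, 0.2]`, while `lam=2.0` gives `[2.75, 0.0]`: the weak second feature is dropped entirely and the strong one is only shrunk.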

To delve deeper into Lasso regression, see the insightful explanations by statistics expert Josh Starmer. The main takeaway here is that Lasso regression restrains the magnitude of the coefficients, which stabilizes the model's predictions so that they do not change drastically with minor changes in the explanatory variables.

Similarly, L2 regularization, also known as Ridge, applies a penalty, in this case on the sum of squared coefficients. Unlike Lasso, Ridge does not induce sparsity in the coefficient vector: it shrinks coefficients toward zero but generally does not set them exactly to zero. Nevertheless, it effectively mitigates the risk of overfitting by constraining the magnitude of the coefficients. This constraint prevents individual coefficients from reaching excessively large values, improving the stability and generalization performance of the model.
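Because the L2 penalty is smooth, Ridge has a closed-form solution. This plain-Python sketch assumes the objective $\|\mathbf{y} - \mathbf{X}\mathbf{b}\|^2 + \lambda\|\mathbf{b}\|^2$ with no intercept and solves the resulting normal equations $(\mathbf{X}^\top\mathbf{X} + \lambda\mathbf{I})\,\mathbf{b} = \mathbf{X}^\top\mathbf{y}$ by Gaussian elimination; `ridge_fit` and the toy data are hypothetical, and a real project would use a numerical library instead.

```python
from typing import List


def ridge_fit(X: List[List[float]], y: List[float], lam: float) -> List[float]:
    """Minimize ||y - X b||^2 + lam * ||b||^2 by solving the normal equations
    (X^T X + lam * I) b = X^T y. No intercept; lam > 0 keeps the system solvable."""
    n, p = len(X), len(X[0])
    # Augmented matrix [X^T X + lam * I | X^T y].
    A = [
        [sum(X[i][r] * X[i][c] for i in range(n)) + (lam if r == c else 0.0)
         for c in range(p)]
        + [sum(X[i][r] * y[i] for i in range(n))]
        for r in range(p)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, p):
            factor = A[r][col] / A[col][col]
            for c in range(col, p + 1):
                A[r][c] -= factor * A[col][c]
    # Back substitution.
    beta = [0.0] * p
    for r in range(p - 1, -1, -1):
        tail = sum(A[r][c] * beta[c] for c in range(r + 1, p))
        beta[r] = (A[r][p] - tail) / A[r][r]
    return beta


if __name__ == "__main__":
    # Hypothetical toy data: y = 3 * x0 + 0.2 * x1, with orthogonal feature columns.
    X = [[1.0, 1.0], [-1.0, 1.0], [1.0, -1.0], [-1.0, -1.0]]
    y = [3.2, -2.8, 2.8, -3.2]
    for lam in (0.0, 1.0, 10.0):
        print(lam, ridge_fit(X, y, lam))
```

Here `lam=1.0` gives about `[2.4, 0.16]`: both coefficients shrink by the same factor, and the weak one stays small but nonzero.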

For further insights into Ridge regression, refer to Josh's explanation. The distinction between Ridge and Lasso may seem subtle, but both methods aim for the same outcome: penalizing large coefficients to stabilize the model and prevent overfitting.
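The difference is easiest to see in the idealized case where the feature columns are orthonormal ($\mathbf{X}^\top\mathbf{X} = \mathbf{I}$), an assumption made here purely for illustration. Taking the objectives to be $\|\mathbf{y} - \mathbf{X}\boldsymbol\beta\|_2^2 + \lambda\|\boldsymbol\beta\|_1$ for Lasso and $\|\mathbf{y} - \mathbf{X}\boldsymbol\beta\|_2^2 + \lambda\|\boldsymbol\beta\|_2^2$ for Ridge, both have closed forms in terms of the ordinary least-squares estimate $z_j$:

$$
\hat\beta_j^{\text{lasso}} = \operatorname{sign}(z_j)\,\max\!\Big(|z_j| - \tfrac{\lambda}{2},\; 0\Big),
\qquad
\hat\beta_j^{\text{ridge}} = \frac{z_j}{1 + \lambda},
\qquad
z_j = \mathbf{x}_j^\top \mathbf{y}
$$

Lasso subtracts a fixed amount from each coefficient and clips at zero, so weak features are removed entirely; Ridge divides every coefficient by the same factor, so coefficients shrink but stay nonzero unless $z_j$ is already zero.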

By incorporating regularization techniques such as L1 and L2 into training, practitioners can strike a balance between bias and variance, yielding models that capture the underlying patterns in the data without succumbing to overfitting. The strength of the penalty, a hyperparameter usually written λ, controls this balance: a larger penalty adds bias but reduces variance, and a smaller one does the reverse. Regularization thus serves as a crucial mechanism for improving the robustness and predictive accuracy of machine learning models, particularly in scenarios where the risk of overfitting is high.

Cross-Validation

Another method that helps address overfitting is cross-validation. Strictly speaking, cross-validation does not change the model itself; it is a robust way to estimate a model's generalization performance and detect overfitting, which in turn guides choices such as model complexity or regularization strength. By splitting the data into multiple train-test splits and evaluating the model's performance on each split, we gain insight into its ability to generalize to new data.

Example: In k-fold cross-validation, the data is divided into k subsets, or folds. The model is trained on k-1 folds and evaluated on the remaining fold. This process is repeated k times, with each fold serving as the test set once. The average performance across all folds serves as an estimate of the model's generalization performance.
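The k-fold procedure can be sketched in plain Python as follows. The fold splitting and averaging follow the steps just described; the model being scored (a slope-only ridge fit, `ridge_1d`) and the synthetic data are hypothetical stand-ins chosen to keep the example self-contained.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and deal them into k folds of near-equal size."""
    if not 2 <= k <= n:
        raise ValueError("k must be between 2 and n")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[f::k] for f in range(k)]


def cross_val_mse(xs, ys, fit, predict, k=5, seed=0):
    """Average test MSE over k folds: train on k-1 folds, test on the held-out one."""
    folds = k_fold_indices(len(xs), k, seed)
    scores = []
    for test_idx in folds:
        held_out = set(test_idx)
        train_idx = [i for i in range(len(xs)) if i not in held_out]
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        errors = [(ys[i] - predict(model, xs[i])) ** 2 for i in test_idx]
        scores.append(sum(errors) / len(errors))
    return sum(scores) / k


def ridge_1d(lam):
    """Hypothetical model: slope-only ridge, b = sum(x * y) / (sum(x^2) + lam)."""
    def fit(xs, ys):
        return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)
    return fit


if __name__ == "__main__":
    rng = random.Random(1)
    xs = [rng.uniform(-1, 1) for _ in range(40)]
    ys = [2.0 * x + rng.gauss(0, 0.5) for x in xs]
    for lam in (0.0, 1.0, 10.0, 100.0):
        mse = cross_val_mse(xs, ys, ridge_1d(lam), lambda b, x: b * x, k=5)
        print(f"lambda={lam:6.1f}  5-fold CV MSE={mse:.3f}")
```

Comparing the cross-validated error across `lam` values is a typical way to tune regularization strength. For real projects, scikit-learn's `KFold` and `cross_val_score` (in `sklearn.model_selection`) provide tested implementations of the same idea.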