When you first begin exploring the world of machine learning, you quickly learn that building models that perform well on training data is only part of the journey. The real test of a model’s success is how well it performs on new, unseen data. That is where regularization comes in handy.

In this blog post, we’ll break down what regularization is, why it’s important, and how it can improve your machine learning models.

Regularization is a technique used in machine learning to prevent overfitting, which happens when a model learns the noise and idiosyncrasies in the training data instead of the underlying patterns. Overfitting leads to poor performance on new, unseen data. Regularization helps a model generalize better by introducing a penalty for overly complex models.

The key idea behind regularization is to find a balance between a model’s ability to fit the training data and its ability to generalize to new, unseen data. By adding a regularization term to the model’s loss function, we discourage the model from becoming too complex and instead encourage it to learn the most important patterns in the data.

There are several common scenarios where regularization can be particularly helpful:

- High Feature Count: When you have many features (variables) in your dataset, there’s a risk of overfitting. Regularization helps control the complexity of your model and prevents it from becoming too sensitive to the specifics of the training data.

- Small Dataset: If your dataset is small, it’s easier for a model to overfit the limited data. Regularization can help balance the model’s fit to the training data and its ability to generalize to new, unseen examples.

- Noisy Data: Data with a lot of noise can cause models to capture the noise instead of the true signal. Regularization helps by keeping the model’s complexity in check, preventing it from memorizing the noise.

- High Model Complexity: Highly complex models, such as deep neural networks, can easily overfit the training data. Regularization techniques like dropout and weight decay can help these models generalize better.
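To make the first two scenarios concrete, here is a minimal sketch that fits a plain linear regression and a Ridge model on a small, feature-heavy dataset. The data is synthetic and purely hypothetical (40 samples, 30 features, only 3 of which carry signal), and `alpha=10.0` is an arbitrary choice for illustration, not a recommendation.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Hypothetical synthetic data: few samples, many features, little real signal.
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 30))
true_coef = np.zeros(30)
true_coef[:3] = [2.0, -1.5, 1.0]  # only the first three features matter
y = X @ true_coef + rng.normal(scale=1.0, size=40)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

models = {
    "plain": LinearRegression().fit(X_train, y_train),
    "ridge": Ridge(alpha=10.0).fit(X_train, y_train),
}

# Compare the error on data the model has seen with data it has not.
train_mse = {}
test_mse = {}
for name, model in models.items():
    train_mse[name] = mean_squared_error(y_train, model.predict(X_train))
    test_mse[name] = mean_squared_error(y_test, model.predict(X_test))
    print(f"{name}: train MSE = {train_mse[name]:.3f}, test MSE = {test_mse[name]:.3f}")
```

The unregularized model can fit the training set almost perfectly here because it has as many free parameters as training samples. A large gap between training and test error is the usual symptom of overfitting, and the regularized model typically narrows that gap, although the exact numbers depend on the data and on alpha.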

There are several popular regularization techniques used in machine learning. Let’s explore the most common ones:

L1 Regularization (Lasso):

- What It Is: Adds a penalty to the loss function based on the absolute value of the model’s coefficients.

- Effect: Drives some coefficients to exactly zero, which effectively removes less important features from the model. This leads to a sparser model.

- Example: In a linear regression model, using L1 regularization can help identify which features (e.g., size of the house, number of bedrooms) have little impact on house prices, potentially leading to a simpler model.
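The sparsity effect is easy to observe directly. The sketch below assumes a synthetic dataset from scikit-learn’s `make_regression` in which only 3 of 10 features are informative; the dataset and `alpha=1.0` are illustrative assumptions, not values from a real housing problem.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso

# Hypothetical data: 10 features, of which only 3 actually drive the target.
X, y = make_regression(
    n_samples=200, n_features=10, n_informative=3, noise=5.0, random_state=0
)

lasso = Lasso(alpha=1.0).fit(X, y)

# Coefficients that Lasso has set to exactly zero correspond to dropped features.
n_zero = int(np.sum(lasso.coef_ == 0))
kept = np.flatnonzero(lasso.coef_)

print("Coefficients:", np.round(lasso.coef_, 2))
print(f"Features dropped: {n_zero}, features kept: {kept.tolist()}")
```

Inspecting `coef_` like this is a quick way to see which features the model considers dispensable at a given alpha.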

L2 Regularization (Ridge):

- What It Is: Adds a penalty to the loss function based on the square of the model’s coefficients.

- Effect: Encourages smaller coefficients, helping to prevent large swings in predictions and improving model stability.

- Example: In logistic regression, L2 regularization can help keep the coefficients from taking extreme values, making the model more robust and less sensitive to small changes in the training data.
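Unlike L1, the L2 penalty shrinks coefficients without usually zeroing them out. As a rough sketch, assuming a synthetic regression dataset and an arbitrary set of alpha values, we can watch the overall size of the coefficient vector fall as the penalty grows:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge

# Hypothetical synthetic data, used only to illustrate the shrinkage effect.
X, y = make_regression(n_samples=100, n_features=10, noise=10.0, random_state=0)

alphas = [0.01, 1.0, 100.0, 10000.0]
norms = []
for alpha in alphas:
    model = Ridge(alpha=alpha).fit(X, y)
    # The Euclidean norm summarizes how large the coefficients are overall.
    norms.append(float(np.linalg.norm(model.coef_)))

for alpha, norm in zip(alphas, norms):
    print(f"alpha = {alpha:>8}: coefficient norm = {norm:.3f}")
```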

Elastic Net:

- What It Is: Combines L1 and L2 regularization to get the benefits of both techniques.

- Effect: Provides a balance between sparsity (L1) and stability (L2), allowing you to fine-tune your model.

- Example: In regression models, Elastic Net can be helpful when you want to control model complexity while still allowing some features to be eliminated.

While L1, L2, and Elastic Net are all excellent tools, there are many other regularization techniques that can be equally (or even more) effective, depending on your use case.

Some examples to consider include:

- Group Lasso Regularization

- Dropout Regularization

- Tikhonov Regularization

- Fractional Regularization

- Robust Regularization

Each of these techniques can be applied to various machine learning models, such as linear regression, logistic regression, and neural networks, to improve their generalization performance.

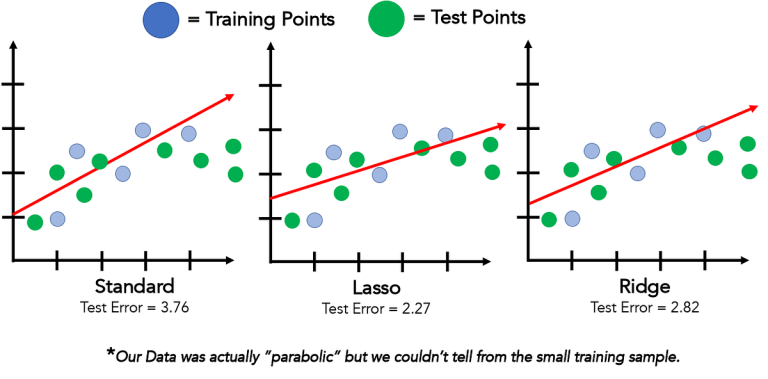

Let’s take the case of a simple linear regression model. The model tries to find the best-fit line for the data by minimizing the error between its predictions and the actual values. Without regularization, the loss function (mean squared error) is just the average of the squared differences between predictions and actual values.

When you apply regularization:

- With L1 Regularization (Lasso): The loss function includes the sum of the absolute values of the model’s coefficients as a penalty. This encourages some coefficients to become zero, simplifying the model.

- With L2 Regularization (Ridge): The loss function includes the sum of the squared coefficients as a penalty. This encourages the model to keep the coefficients small, reducing the impact of each feature.

- With Elastic Net: The loss function includes a combination of the absolute values and the squares of the coefficients, balancing sparsity and stability.
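The three penalized losses described above can be written out in a few lines of NumPy. This is a textbook-style sketch, not the exact objective any particular library optimizes (scikit-learn, for instance, applies slightly different scaling factors). The function names and the tiny example arrays are hypothetical and exist only for illustration.

```python
import numpy as np

def mse(w, X, y):
    """Mean squared error of a linear model with weights w (no intercept)."""
    return float(np.mean((X @ w - y) ** 2))

def lasso_loss(w, X, y, alpha):
    """MSE plus an L1 penalty: alpha times the sum of absolute weights."""
    return mse(w, X, y) + alpha * float(np.sum(np.abs(w)))

def ridge_loss(w, X, y, alpha):
    """MSE plus an L2 penalty: alpha times the sum of squared weights."""
    return mse(w, X, y) + alpha * float(np.sum(w ** 2))

def elastic_net_loss(w, X, y, alpha, l1_ratio):
    """MSE plus a weighted mix of the L1 and L2 penalties."""
    l1 = float(np.sum(np.abs(w)))
    l2 = float(np.sum(w ** 2))
    return mse(w, X, y) + alpha * (l1_ratio * l1 + (1.0 - l1_ratio) * l2)

# Tiny made-up example: two samples, two features.
X = np.array([[1.0, 0.0], [0.0, 1.0]])
y = np.array([1.0, 2.0])
w = np.array([1.0, -2.0])

print("MSE:        ", mse(w, X, y))
print("Lasso loss: ", lasso_loss(w, X, y, alpha=0.1))
print("Ridge loss: ", ridge_loss(w, X, y, alpha=0.1))
print("Elastic Net:", elastic_net_loss(w, X, y, alpha=0.1, l1_ratio=0.5))
```

Setting `l1_ratio=1.0` in the Elastic Net version recovers the Lasso loss, and `l1_ratio=0.0` recovers the Ridge loss, which is why Elastic Net is often described as a blend of the two.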

Regularization is typically implemented by adding a penalty term to the model’s loss function. The strength of the regularization is controlled by a hyperparameter, often called “alpha” or “C” (the inverse of alpha).

Here’s an example of how to implement L1, L2, and Elastic Net regularization in Python using the scikit-learn library:

from sklearn.datasets import make_regression

from sklearn.linear_model import Lasso, Ridge, ElasticNet

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

# Sample data: X (features), y (target)

# Synthetic placeholder data (an assumption for this example); swap in your own dataset.

X, y = make_regression(n_samples=200, n_features=10, noise=10.0, random_state=42)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# L1 Regularization (Lasso)

lasso_model = Lasso(alpha=0.1)

lasso_model.fit(X_train, y_train)

y_pred_lasso = lasso_model.predict(X_test)

mse_lasso = mean_squared_error(y_test, y_pred_lasso)

print(f"Lasso Regularization - Mean Squared Error: {mse_lasso}")

# L2 Regularization (Ridge)

ridge_model = Ridge(alpha=0.1)

ridge_model.fit(X_train, y_train)

y_pred_ridge = ridge_model.predict(X_test)

mse_ridge = mean_squared_error(y_test, y_pred_ridge)

print(f"Ridge Regularization - Mean Squared Error: {mse_ridge}")

# Elastic Net (l1_ratio=0.5 weights the L1 and L2 penalties equally)

elastic_net_model = ElasticNet(alpha=0.1, l1_ratio=0.5)

elastic_net_model.fit(X_train, y_train)

y_pred_elastic_net = elastic_net_model.predict(X_test)

mse_elastic_net = mean_squared_error(y_test, y_pred_elastic_net)

print(f"Elastic Net Regularization - Mean Squared Error: {mse_elastic_net}")

When building a machine learning model, you often need to specify the regularization technique and its strength through parameters:

1. Strength of Regularization:

- The strength of regularization is usually controlled by a parameter called alpha (or C, which is the inverse of alpha).

- A higher alpha value means stronger regularization, because it increases the penalty term in the model’s loss function.

- Choosing the right alpha value is crucial, since it determines the balance between fitting the training data and the model’s ability to generalize.
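The direction of the C parameter is easy to get backwards, so here is a small sketch using scikit-learn’s `LogisticRegression`, where `C` is the inverse of the regularization strength. The dataset is synthetic and the two C values are arbitrary assumptions chosen to make the contrast obvious.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Hypothetical synthetic classification data.
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=4, random_state=0
)

# Small C means a strong penalty; large C means a weak penalty.
strong = LogisticRegression(C=0.01, max_iter=1000).fit(X, y)
weak = LogisticRegression(C=100.0, max_iter=1000).fit(X, y)

strong_norm = float(np.linalg.norm(strong.coef_))
weak_norm = float(np.linalg.norm(weak.coef_))

print(f"C = 0.01 (strong regularization): coefficient norm = {strong_norm:.3f}")
print(f"C = 100  (weak regularization):   coefficient norm = {weak_norm:.3f}")
```

In short: raise alpha or lower C to regularize more, and do the opposite to regularize less.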

2. Choosing the Right Regularization:

- Selecting the optimal regularization technique and its hyperparameters (like alpha) often requires experimentation and tuning using validation data.

- Techniques like grid search or cross-validation (which we’ll cover in future articles) can be very helpful in finding the best combination of regularization parameters for your specific problem.

- These techniques allow you to systematically explore different regularization approaches and hyperparameter values, and evaluate the model’s performance on the validation data to identify the optimal configuration.
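As a brief preview of what such a search can look like, the sketch below uses scikit-learn’s `GridSearchCV` to try several alpha values for Ridge with 5-fold cross-validation. The candidate grid and the synthetic dataset are assumptions made for illustration; a sensible grid for a real problem depends on the scale of your data.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV

# Hypothetical synthetic data standing in for a real dataset.
X, y = make_regression(n_samples=200, n_features=20, noise=15.0, random_state=0)

# Candidate regularization strengths, spaced on a log scale.
param_grid = {"alpha": [0.01, 0.1, 1.0, 10.0, 100.0]}

search = GridSearchCV(
    Ridge(),
    param_grid,
    cv=5,                              # 5-fold cross-validation
    scoring="neg_mean_squared_error",  # higher (closer to zero) is better
)
search.fit(X, y)

best_alpha = search.best_params_["alpha"]
print(f"Best alpha: {best_alpha}")
print(f"Cross-validated MSE at best alpha: {-search.best_score_:.2f}")
```

The same pattern works for Lasso and Elastic Net; for Elastic Net you would add `l1_ratio` to the grid so that both hyperparameters are tuned together.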

Regularization can also be combined with other techniques to further improve model performance and interpretability:

3. Combining Regularization with Other Techniques:

- Regularization can be used in conjunction with feature selection techniques to identify and focus on the most important features in your dataset.

- It can also be combined with dimensionality reduction techniques, such as Principal Component Analysis (PCA), to reduce the number of features while preserving the most informative ones. t-SNE is another dimensionality reduction technique, though it is mainly used for visualization.

- By integrating regularization with these complementary techniques, you can often build more robust, interpretable, and high-performing machine learning models.
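One way to wire these pieces together is a scikit-learn pipeline. The sketch below chains standardization, PCA, and Ridge; the synthetic data, the choice of 10 components, and `alpha=1.0` are all assumptions for illustration, and on a real dataset you would tune them rather than hard-code them.

```python
from sklearn.datasets import make_regression
from sklearn.decomposition import PCA
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical synthetic data with 30 features.
X, y = make_regression(
    n_samples=200, n_features=30, n_informative=10, noise=10.0, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Scale the features, project onto 10 principal components, then fit Ridge.
model = make_pipeline(StandardScaler(), PCA(n_components=10), Ridge(alpha=1.0))
model.fit(X_train, y_train)

preds = model.predict(X_test)
n_components_kept = model.named_steps["pca"].n_components_

print(f"Components kept: {n_components_kept}")
print(f"Test MSE: {mean_squared_error(y_test, preds):.2f}")
```

Putting every step in one pipeline means the scaler and PCA are fitted on the training data only, which avoids leaking information from the test set. Note that PCA discards some information, so it is worth checking that the reduced model still performs acceptably on your data.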

Mastering the art of controlling model complexity through regularization is a crucial skill for any data scientist. By experimenting with different regularization approaches and tuning the hyperparameters, you can unlock the true potential of your machine learning models and deliver accurate, reliable predictions, even in the face of challenging data scenarios.

Finally, I hope this blog post has equipped you with a deeper understanding of regularization and inspired you to explore its full potential in your machine learning projects. Feel free to share your thoughts, insights, and experiences in the comments below. I’m always eager to learn from the community!