When it comes to learning, humans and artificial intelligence (AI) systems share a common challenge: how to forget information they shouldn’t know. For rapidly evolving AI programs, especially those trained on vast datasets, this challenge becomes critical. Imagine an AI model that inadvertently generates content using copyrighted material or violent imagery: such situations can lead to legal complications and ethical concerns.

Researchers at The University of Texas at Austin have tackled this problem head-on by applying an emerging concept: machine “unlearning.” In their recent study, a team of scientists led by Radu Marculescu developed a method that allows generative AI models to selectively forget problematic content without discarding their entire knowledge base.

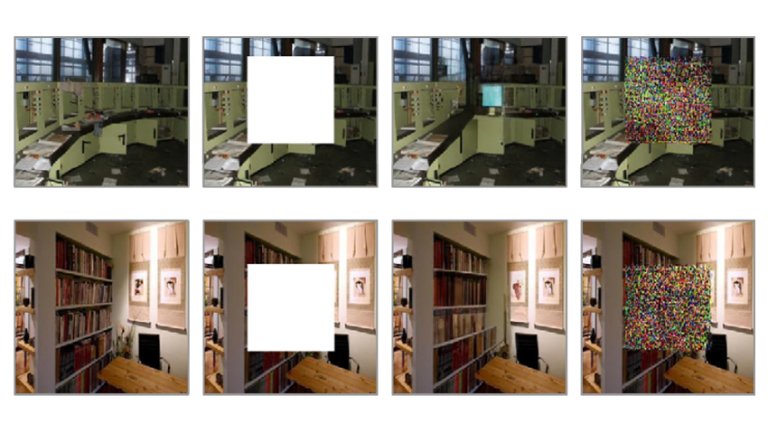

At the core of their research are image-to-image models, which transform input images based on contextual instructions. The new machine “unlearning” algorithm gives these models the ability to remove flagged content without undergoing extensive retraining. Human moderators oversee content removal, providing an additional layer of oversight and responsiveness to user feedback.

While machine unlearning has traditionally been applied to classification models, its adaptation to generative models is a nascent frontier. Generative models, especially those that process images, present unique challenges. Unlike classifiers, which make discrete decisions, generative models produce rich, continuous outputs: a classifier can forget a class simply by no longer predicting that label, whereas a generative model must stop reproducing specific content while still rendering everything else well. Ensuring that these models unlearn specific aspects without compromising their creative abilities is a delicate balancing act.
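The article does not describe the algorithm's internals, so the following is only a toy illustration of the balancing act, not the UT Austin team's method. It assumes a common way of framing unlearning as two competing terms: a retain term that keeps the updated model's outputs on allowed inputs close to the original model's outputs, and a forget term that pulls outputs on flagged inputs toward a neutral target. The names `unlearning_loss`, `forget_target`, and `alpha`, the squared-distance penalty, and the 2-D "encoders" are all hypothetical.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Encoder = Callable[[Vector], Vector]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def unlearning_loss(
    new_enc: Encoder,
    orig_enc: Encoder,
    retain: Sequence[Vector],
    forget: Sequence[Vector],
    forget_target: Vector,
    alpha: float = 1.0,
) -> float:
    """Hypothetical objective: retain term + alpha * forget term (lower is better)."""
    if not retain or not forget:
        raise ValueError("need at least one retain and one forget example")
    # Retain term: outputs on allowed inputs should match the original model.
    retain_term = sum(sq_dist(new_enc(x), orig_enc(x)) for x in retain) / len(retain)
    # Forget term: outputs on flagged inputs should move to a neutral target.
    forget_term = sum(sq_dist(new_enc(x), forget_target) for x in forget) / len(forget)
    # alpha trades off forgetting against preserving everything else.
    return retain_term + alpha * forget_term


# Toy "encoders" acting on 2-D vectors (all hypothetical).
def identity(v: Vector) -> Vector:
    return list(v)  # original model: keeps every input as-is


def collapse(v: Vector) -> Vector:
    return [0.0 for _ in v]  # over-forgets: wipes out every output


def selective(v: Vector) -> Vector:
    # Forgets only the flagged input and keeps all other outputs unchanged.
    return [0.0, 0.0] if v == [3.0, 4.0] else list(v)


retain = [[1.0, 2.0]]   # allowed content
forget = [[3.0, 4.0]]   # flagged content
target = [0.0, 0.0]     # hypothetical "neutral" output for flagged content

for name, enc in [("identity", identity), ("collapse", collapse), ("selective", selective)]:
    print(name, unlearning_loss(enc, identity, retain, forget, target))
```

With `alpha = 1.0`, the unchanged model scores 25.0 because it still reproduces the flagged input, the collapsed model scores 5.0 because it damages the retained output, and only the selective model reaches 0.0. Changing `alpha` shifts how heavily each failure mode is penalized.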

As a next step, the scientists plan to explore whether the approach applies to other modalities, especially text-to-image models. The researchers also intend to develop more practical benchmarks for controlling generated content and protecting data privacy.

You can read the full study in the paper published on the arXiv preprint server.

As AI continues to evolve, machine “unlearning” will play an increasingly vital role. It helps AI systems walk the fine line between retaining knowledge and generating content responsibly. By incorporating human oversight and selectively forgetting problematic content, we move closer to AI models that learn, adapt, and respect legal and ethical boundaries.